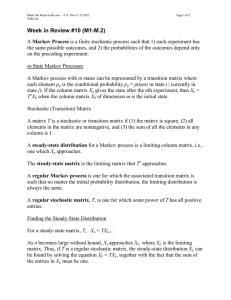

© Math 166, Fall 2015, Robert Williams

Ch M - Markov Chains

Recall: A finite stochastic process is a finite sequence of experiments in which the outcomes and associated probabilities of each experiment depend on the outcomes of all preceding experiments.

A Markov process is a stochastic process in which each experiment depends only on the outcome of the experiment before it. That is, for a Markov process, the following are true:

1. A finite sequence of experiments is taking place
2. Each experiment has the same set of possible outcomes
3. The probability of each outcome depends only on the outcome of the last experiment

Example 1 A bored person decides to play with a fair coin. Whenever the coin shows heads, the person changes it to tails. Whenever the coin shows tails, the person flips it. Assuming that the coin is showing tails when the person finds it, make a tree diagram for the first three states of the coin.

Since Markov processes only rely on the previous state, it is useful to describe them using a transition matrix that gives the probabilities of going from one state to another. Each column corresponds to a current state and each row to a next state:

$$
\begin{array}{c|cc}
 & \text{Current State 1} & \text{Current State 2} \\ \hline
\text{Next State 1} & p_{1,1} & p_{1,2} \\
\text{Next State 2} & p_{2,1} & p_{2,2}
\end{array}
$$

For example, the process in the previous example has the following transition matrix:

$$
\begin{array}{c|cc}
 & H & T \\ \hline
H & 0 & 0.5 \\
T & 1 & 0.5
\end{array}
$$

In general, a matrix $T$ is called a stochastic or transition matrix if

1. $T$ is a square matrix
2. all entries in $T$ are non-negative
3. the sum of the elements in any column of $T$ is 1

When the probability of being in each state, or the distribution of objects between the states, is written as a column vector $X$, then after one trial the new probabilities or distribution are given by $TX$. We will often write $X_0$ for the initial state and $X_n$ for the state after $n$ trials, so that $X_n = T X_{n-1} = T^n X_0$.

Example 2 Suppose the same experiment takes place as in the previous example, except when the person finds the coin it has an equal chance of being heads or tails.
Find the probability distribution for the coin's state after 4 rounds of the experiment are performed.

Example 3 Assume that 8% of the population currently attends a university. Every year, a person who attended a university the previous year has a 73% chance to continue attending, while a person who did not attend a university the previous year has a 5% chance to start attending one. What portion of the population will attend a university next year? What portion of the population will attend a university 5 years from now?

Example 4 A group of friends like to eat at a Mexican, Chinese, or Greek restaurant every week. If they last ate Mexican food, they have an 8% chance to eat Mexican again and a 40% chance to eat Chinese. If they last ate Chinese food, they have a 12% chance to eat Chinese again and a 46% chance to eat Greek. If they last ate Greek food, they have a 10% chance to eat Greek again and a 52% chance to eat Mexican. Find the probability of the friends choosing each of these food types three weeks from now, given that they ate Mexican food this week.

Whenever an experiment results in shifting distributions, it is natural to wonder if there is some distribution that, once achieved, will remain unchanged throughout further experiments. Such a state, if one exists, is called a steady-state distribution.

A steady-state distribution exists exactly when there is a limiting transition matrix. That is, for the transition matrix $T$, there is a matrix $L$ such that as $n$ gets larger, $T^n$ gets closer to $L$. This matrix $L$ is called the steady-state matrix.

We can see that the distribution $X_L$ is a steady-state distribution for the transition matrix $T$ whenever $T X_L = X_L$. Using this equality, along with the fact that the entries of $X_L$ must sum to 1, we can find the steady-state distribution whenever it exists. Moreover, if we let $L$ be the steady-state matrix of $T$, then $L$ is the matrix where each column is $X_L$.
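
As a rough illustration of the relation $T X_L = X_L$, the following Python sketch repeatedly applies the coin transition matrix from earlier in these notes until the distribution stops changing. The helper names `step` and `steady_state`, the tolerance, and the choice of starting on tails are assumptions made for this illustration. Iterating is only one way to find a steady state; solving $T X_L = X_L$ together with the sum condition by hand works just as well.

```python
def step(T, X):
    """Return the product T X, i.e. the distribution after one more trial."""
    return [sum(T[i][j] * X[j] for j in range(len(X))) for i in range(len(T))]


def steady_state(T, X0, tol=1e-12, max_steps=10_000):
    """Iterate X_{n+1} = T X_n until successive distributions agree within tol."""
    X = list(X0)
    for _ in range(max_steps):
        X_next = step(T, X)
        if max(abs(a - b) for a, b in zip(X_next, X)) < tol:
            return X_next
        X = X_next
    raise ValueError("no convergence; T may not have a steady state")


# Coin matrix from the notes: columns are the current state (H, T),
# rows are the next state (H, T).
T = [[0.0, 0.5],
     [1.0, 0.5]]
X0 = [0.0, 1.0]  # assumption: the coin starts on tails, as in Example 1

X_L = steady_state(T, X0)
print(X_L)
```

For the coin matrix the iteration settles at approximately 1/3 heads and 2/3 tails, which agrees with solving $x = 0.5y$ and $x + y = 1$ by hand.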

Example 5 Find the steady-state distribution and steady-state matrix for Example 3. Recall that the transition matrix was

$$
T = \begin{array}{c|cc}
 & \text{University} & \text{Non-University} \\ \hline
\text{University} & 0.73 & 0.05 \\
\text{Non-University} & 0.27 & 0.95
\end{array}
$$

A stochastic matrix $T$ is called regular if it has a limiting matrix (i.e. regular stochastic matrices are precisely those that have a steady-state).

Theorem 1 A stochastic matrix $T$ is regular precisely when there is some power of $T$, say $T^n$, such that all entries of $T^n$ are positive.

Example 6 For each of the following matrices, determine whether or not it is stochastic. If the matrix is stochastic, determine whether or not it is regular.

- $\begin{pmatrix} 0.4 & 0 \\ 0.6 & 1 \end{pmatrix}$
- $\begin{pmatrix} 0.7 & 0.3 \\ 0.6 & 0.4 \end{pmatrix}$
- $\begin{pmatrix} 0.28 & 1 \\ 0.72 & 0 \end{pmatrix}$

Example 7 In a local town, it is discovered that if it rained today, there is an 80% chance that it will rain tomorrow. If it did not rain today, however, there is only a 3% chance that it will rain tomorrow. Write a transition matrix for the weather in this town. If it is regular, find the steady-state probability that it will rain the next day.

If a Markov process has a state that is impossible to leave, then that state is called an absorbing state. A Markov process is called absorbing if

1. There is at least one absorbing state
2. It is possible to move from any nonabsorbing state to one of the absorbing states in a finite number of steps

The transition matrix for an absorbing Markov process is called an absorbing stochastic matrix.

Example 8 Determine whether each of the following is an absorbing stochastic matrix:

- $\begin{pmatrix} 1 & 0.23 & 0.16 \\ 0 & 0.41 & 0.32 \\ 0 & 0.36 & 0.52 \end{pmatrix}$
- $\begin{pmatrix} 1 & 0 & 0 \\ 0 & 0.45 & 0.72 \\ 0 & 0.55 & 0.28 \end{pmatrix}$
- $\begin{pmatrix} 0 & 0.25 & 0.65 \\ 1 & 0.4 & 0.15 \\ 0 & 0.35 & 0.2 \end{pmatrix}$

Example 9 A student attempts to learn a complicated structure by working examples of it. Every time the student works an example, the student has a 36% chance of learning the structure. Once the structure is learned, the student will not forget it.
Write the transition matrix for this process. Explain why this is an absorbing Markov process.

Example 10 Find the steady-state and limiting matrix for the following transition matrix.

$$
\begin{pmatrix}
0.5 & 0.02 & 1 & 0.07 \\
0 & 0.5 & 0 & 0.47 \\
0.5 & 0.08 & 0 & 0.03 \\
0 & 0.4 & 0 & 0.5
\end{pmatrix}
$$
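
The two conditions in the definition of an absorbing Markov process can also be checked mechanically. The Python sketch below is one possible way to do so, assuming the column convention used in these notes (columns are current states, rows are next states). The function names, the state ordering (learned, not learned) chosen for the student process of Example 9, and the matrix `stuck` are hypothetical choices made for this illustration.

```python
def absorbing_states(T):
    """Indices j such that a process in state j stays in state j with probability 1."""
    return [j for j in range(len(T)) if T[j][j] == 1]


def is_absorbing(T):
    """Check both conditions for a column-stochastic T (columns = current state)."""
    n = len(T)
    absorbing = absorbing_states(T)
    if not absorbing:
        return False  # condition 1 fails: no absorbing state
    # Work backwards: collect every state that can reach an absorbing state.
    can_reach = set(absorbing)
    frontier = list(absorbing)
    while frontier:
        target = frontier.pop()
        for source in range(n):
            # T[target][source] > 0 means a single step source -> target is possible.
            if source not in can_reach and T[target][source] > 0:
                can_reach.add(source)
                frontier.append(source)
    return len(can_reach) == n  # condition 2: every state reaches an absorbing one


# One possible encoding of the student process; state order (learned, not learned)
# is an assumption of this sketch.
student = [[1.0, 0.36],
           [0.0, 0.64]]

# Hypothetical matrix: state 0 is absorbing, but states 1 and 2 only swap with
# each other, so they can never reach it.
stuck = [[1.0, 0.0, 0.0],
         [0.0, 0.0, 1.0],
         [0.0, 1.0, 0.0]]

print(is_absorbing(student), is_absorbing(stuck))
```

The backward search is used so that each state is visited at most once; checking powers of $T$ for positive entries in the absorbing rows would be an alternative.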