Charge Transport, Configuration Interaction and Rydberg States under Density Functional Theory

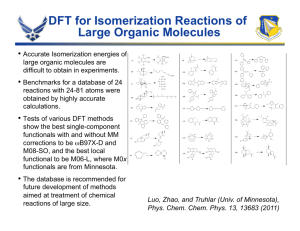

advertisement

Charge Transport, Configuration Interaction and

Rydberg States under Density Functional Theory

by

Chiao-Lun Cheng

B.S., Univ. of California, Berkeley (2003)

B.A., Univ. of California, Berkeley (2003)

Submitted to the Department of Chemistry

in partial fulfillment of the requirements for the degree of

Doctor of Philosophy

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

September 2008

© Massachusetts Institute of Technology 2008. All rights reserved.

Author

Department of Chemistry

Aug 15, 2008

Certified by

Troy Van Voorhis

Associate Professor of Chemistry

Thesis Supervisor

Accepted by

Robert W. Field

Chairman, Department Committee on Graduate Students

This doctoral thesis has been examined by a Committee of the Department of Chemistry as follows:

Professor Jianshu Cao

Chairman, Thesis Committee

Associate Professor of Chemistry

Professor Troy Van Voorhis

Thesis Supervisor

Associate Professor of Chemistry

Professor Robert W. Field

Member, Thesis Committee

Haslam and Dewey Professor of Chemistry

Charge Transport, Configuration Interaction and Rydberg

States under Density Functional Theory

by

Chiao-Lun Cheng

Submitted to the Department of Chemistry

on Aug 15, 2008, in partial fulfillment of the

requirements for the degree of

Doctor of Philosophy

Abstract

Density functional theory (DFT) is a computationally efficient formalism for studying

electronic structure and dynamics. In this work, we develop DFT-based excited-state methods to study electron transport and Rydberg excited states, and to characterize

diabatic electronic configurations and couplings. We simulate electron transport in a

molecular wire using real-time time-dependent density functional theory in order to

study the conduction of the wire. We also use constrained density functional theory to

obtain diabatic states and diabatic couplings, and use these excited-state properties

in a configuration-interaction method that treats both dynamic and static correlation.

Lastly, we use eDFT, an excited-state self-consistent-field method, to determine the

energies of excited Rydberg atomic states.

Thesis Supervisor: Troy Van Voorhis

Title: Associate Professor of Chemistry

Acknowledgments

I thank my advisor Prof. Troy Van Voorhis for his leadership and guidance. His

door was always open when I had questions or ideas (however silly and impractical)

I needed advice on, and his broad knowledge base and endless patience as a mentor

have had a huge impact on my learning over the past five years. I am especially thankful

to Troy for giving me the independence to pursue my own ideas, even with their

dismal success rate. This exploration space has helped me develop as a scientist and

a thinker over my years of graduate study.

I would like to thank my thesis committee members Profs. Robert W. Field and

Jianshu Cao for their advice. I thank my undergraduate physical chemistry lecturer

Prof. Max Wolfsberg for starting me on this journey - without those early discussions

I would not have chosen this path. I thank my undergraduate research advisors Profs.

Paul A. Bartlett and Judith P. Klinman for introducing me to the world of research.

I would especially like to thank my Klinman group mentor Matthew P. Meyer for

showing me what an interesting place the world can be.

Fellow physical chemistry colleagues have helped make my five years very enjoyable. I thank Jim Witkoskie, Steve Presse, Qin Wu, Xiaogeng Song, Jeremy

Evans, Seth Difley and Steve Coy for many interesting and stimulating discussions.

I thank Ziad Ganim for his assistance in figuring out CHARMM. I would also like to

thank Aiyan Lu, Yuan-Chung Cheng, Shilong Yang, Xiang Xia, Jianlan Wu, Serhan

Altunata, Vassiliy Lubchenko, Leeping Wang, Tim Kowalczyk and Ben Kaduk for

maintaining the spirit of camaraderie I’ve felt in the “zoo”.

I thank friends in Salon Chaos, the MIT Aikido Club, MIT ROCSA and the MIT

Singapore Students Society - they have been an invaluable source of warmth and

sanity. Salon Chaos offered a truly unique forum for exchanging ideas, and I would like

to thank Mo-Han Hsieh and I-Fan Lin for organizing it. I thank my neighbors Emma

Chung and her housemates for providing me with a second home during my last few

years of graduate school. I thank Rebecca Somers for her perspective during stressful

times, and Huiyi Chen for always being there.

I thank my sister Ting-Yen for her encouraging words throughout the years. Most

of all, I thank my parents, a constant source of support throughout my life, for

granting me the freedom to pursue my dreams. This dissertation is dedicated to

them.

To my parents

Contents

1 Introduction
1.1 The Many-Body Schroedinger Equation
1.1.1 Hartree-Fock
1.1.2 Electronic Correlation
1.2 Density Functional Theory
1.2.1 The Hohenberg-Kohn Theorems
1.2.2 The Kohn-Sham Formalism
1.2.3 Constrained Density Functional Theory (CDFT)
1.2.4 Time-Dependent Density Functional Theory (TDDFT)
1.2.5 Approximate Exchange-Correlation Potentials
1.3 Structure of this Dissertation

2 Molecular Conductance using Real-Time Time-Dependent Density Functional Theory
2.1 Introduction
2.1.1 The Landauer-Büttiker Model
2.1.2 Non-Equilibrium Green’s Function (NEGF) Methods
2.1.3 Time-Dependent Density Functional Theory
2.2 Methodology
2.2.1 Integrating the TDKS equations
2.2.2 Numerical Validation
2.3 Application: Conductance of a molecular wire
2.3.1 Voltage Definitions
2.3.2 Current Averaging
2.3.3 Comparison to NEGF results
2.3.4 Voltage Biased Case
2.3.5 Transient Fluctuations
2.4 Conclusions

3 Dissociation Curves using Constrained Density Functional Theory Configuration Interaction
3.1 Introduction
3.2 State of the Art
3.3 Our Method: Constrained Density Functional Theory Configuration Interaction (CDFT-CI)
3.3.1 Identifying an Active Space
3.3.2 Computing Matrix Elements
3.4 Application
3.4.1 H2+
3.4.2 H2
3.4.3 LiF
3.5 Conclusion
3.6 Acknowledgements

4 Rydberg Energies using Excited-State DFT
4.1 Introduction
4.2 Ground State Kohn-Sham DFT
4.3 Excited State Kohn-Sham DFT
4.4 State of the Art: Linear Response TDDFT
4.5 Application
4.5.1 The Excited-State Exchange-Correlation Potential
4.5.2 Numerical Evaluation of Rydberg Energies
4.6 Discussion
4.6.1 Extrema of the ground state energy functional
4.7 Conclusions

5 Conclusion

A Magnus and Runge-Kutta Munthe-Kaas Integrators
A.1 Magnus Integrators
A.2 RKMK Integrators

List of Figures

1-1 UHF orbital filling - ↑ are α-electrons, ↓ are β-electrons. Each horizontal line represents a different orbital, and the α and β electrons fill different orbitals in an Aufbau manner, from the bottom up. In this particular example, there are 4 α electrons, 4 β electrons and 6 basis functions forming 12 spin-orbitals.

1-2 Typical Algorithm for Solving the HF equations

1-3 Some CASSCF(2,3) configurations

1-4 Algorithm for Solving the CDFT equations

1-5 H2+ Energies in cc-pVTZ basis

1-6 H2 Energies in cc-pVTZ basis

2-1 Predictor-Corrector routine for the 2nd order Magnus integrator. The order row shows the time order (in dt) to which the matrices in the same column are correct.

2-2 Minimum wall time required to obtain a prescribed average absolute error in the final density matrix of methane (B3LYP/6-31G*, 120 a.u. of propagation) using various approximate propagators: 2nd order Magnus (green dashed), 4th order Runge-Kutta (teal dot-dashed with squares), 4th order Magnus (red solid) and 6th order Magnus (blue dotted).

2-3 Schematic of the source-wire-drain geometry used in the present simulations. The bias is applied to the left and right groups of atoms, which act as a source and drain for electrons, respectively. For different wire lengths (e.g. 50 carbons versus 100) the wire length is kept fixed and the size of the source and sink are varied.

2-4 Initial density corresponding to a chemical potential bias in polyacetylene. Red indicates charge accumulation and green charge depletion relative to the unbiased ground state. At time t=0 the bias is removed and current flows from left to right.

2-5 Transient current through the central four carbons in C50H52 at a series of different chemical potential biases. There is an increase in current as voltage is increased, along with large, persistent fluctuations in the current. The currents are converged with respect to time step and the apparent noise is a result of physical fluctuations in particle flow through the wire.

2-6 Transient current through the central four carbons in C50H52 at a series of different chemical potential biases smoothed over a time window of width ∆t = 0.36 fs. The average currents are now more clearly visible. The slow decay of the current at later times results from the partial equilibration of the finite left and right leads.

2-7 Maximum smoothed current through the central four carbons in C50H52 as a function of chemical potential bias (red pluses). For comparison, we also present the analogous result for the central carbons in C100H102 (green squares) demonstrating convergence of the calculation with respect to lead size. The blue line is a linear fit to the C50H52 data at low bias indicating that polyacetylene is an Ohmic resistor with a conductance of ≈ 0.8 G0.

2-8 Maximum smoothed current through the central four carbons in C50H52 as a function of chemical potential bias using real-time TDDFT (top red line) and an NEGF approach described in the text (bottom blue line). The two calculations are nearly identical at low bias and differ somewhat at higher biases due to the lack of self-consistency in the NEGF results.

2-9 Maximum smoothed current through the central four carbons in C50H52 as a function of chemical potential bias (top red line) and voltage bias (bottom green line). The results are quite similar until 4 V, at which point the bias is so large that the finite width of the valence band for polyacetylene causes the conductance to plateau in the voltage biased case.

2-10 Transient current through the central four carbons in C100H102 at a series of different chemical potential biases. The current fluctuations previously observed with smaller reservoirs in C50H52 persist and are therefore not associated with a finite size effect.

2-11 Statistical noise in the current through the central four carbons in C100H102. The data (squares) can be fit to a sub-Poissonian distribution (line).

3-1 Figure taken from Ref. [1]. Obtaining effective constraint value from promolecular densities. The constraints are on Fragment A. Integration over the striped area will give N_C^eff.

3-2 Figure taken from Ref. [1]. Potential curves of H2+.

3-3 Figure taken from Ref. [1]. Potential curves of H2.

3-4 Figure taken from Ref. [1]. Error comparison of UB3LYP and CB3LYP-CI. Errors are calculated against full CI results.

3-5 Figure taken from Ref. [1]. Singlet potential curves of LiF by B3LYP and CB3LYP-CI as compared to the triplet curve by B3LYP. The Ms=0 curve of B3LYP is a broken-symmetry solution at large R and has a positive charge of 0.3 on Li at the dissociation limit.

3-6 Figure taken from Ref. [1]. CB3LYP-CI(S), CB3LYP-CI and CBLYP-CI potential curves as compared to OD(2). CB3LYP-CI(S) is the CB3LYP-CI using stockholder weights.

3-7 Figure taken from Ref. [1]. Weights of configurations in the final ground state.

4-1 Hydrogen atom excited state exchange potentials (eV vs. Angstroms). The solid red line shows the exact exchange potential and the subsequent lines (moving upward at the origin) show the 1s, 2s and 3s LSDA exchange potentials from eDFT using the exact density. While none of the potentials individually has the correct decay, subsequent potentials decay more and more slowly.

4-2 Exchange potentials for hydrogen. The dotted green line is the ground-state LSDA exchange potential [2]. The solid red line is the exact exchange potential. The blue crosses are the excited-state density LSDA exchange potential at the classical turning points r = 2n^2.

A-1 Extrapolation routine for the 4th order Magnus integrator. The order row shows the time order (in dt) to which the matrices in the same column are correct.

List of Tables

4.1 eDFT orbital eigenvalues of the hydrogen atom computed using LSDA exchange and the exact densities. Each row corresponds to a particular excited state, and the columns give the appropriate orbital eigenvalues. Note that, as one occupies more and more diffuse orbitals, the 1s orbital eigenvalue approaches the correct value of -13.6 eV, naturally correcting for the poor LSDA exchange-only estimate of the ionization energy of ε_1s = -6.3 eV.

4.2 Hydrogen Atom eDFT Excitation Energies (eV). Italics indicate difference from the exact energy. NC indicates no converged state was found. Average and Root Mean Square errors are also indicated.

4.3 Lithium Atom Excitation Energies (eV). Italics indicate difference from the experimental energy. E_LDA-X and E_B3LYP denote eDFT energies with the appropriate functional, while TDLDA and TDB3LYP are the corresponding TDDFT energies. CS00 [4] contains an asymptotic correction to the B3LYP exchange potential. In the TDDFT columns NA indicates energy levels above the ionization energy. Experimental numbers from Ref. [5].

Chapter 1

Introduction

In this chapter, we introduce some of the fundamental laws and approaches to electronic structure, which will set the background and notation for the work in this

dissertation. We end by describing how the rest of this dissertation is structured.

1.1 The Many-Body Schroedinger Equation

Here we introduce the Schroedinger equation under three assumptions: the electrons are non-relativistic, i.e. their kinetic energy is small compared to the rest mass of the electron; the nuclei are immobile, i.e. the Born-Oppenheimer approximation holds; and there are no magnetic interactions.

The electronic Hamiltonian H is a Hermitian operator with units of energy, and

can be expressed as the sum of several parts:

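$$
\begin{aligned}
H &= T + v_{ext} + v_{ee} \\
T &= \sum_i -\frac{1}{2}\nabla_i^2 \\
v_{ext} &= \sum_i v_{ext}(\mathbf{r}_i) \\
v_{ee} &= \sum_{i<j} \frac{1}{|\mathbf{r}_i - \mathbf{r}_j|}
\end{aligned}
\tag{1.1}
$$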

$T$ is the kinetic energy operator, and is the sum of kinetic energy operators for each electron coordinate $\mathbf{r}_i$. $v_{ext}$ is the external potential, which is the single-particle external potential summed over all the electron coordinates. The single-particle external potential is a function which maps a single 3-d electron coordinate in length units to an energy, $v_{ext}(\mathbf{r})$. It represents the electric potential experienced by electrons, which in molecular systems originates from the nuclei. $v_{ee}$ is the Coulomb repulsion between electrons, and is a sum of the reciprocal distance between all pairs of electron positions.
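As a small illustration of the two potential terms, the sketch below evaluates them for a fixed set of electron positions. It assumes atomic units (consistent with the absence of physical constants in equation 1.1) and assumes point-charge nuclei, so that the single-particle external potential is $-\sum_A Z_A/|\mathbf{r}-\mathbf{R}_A|$; that explicit form, the function names and the sample geometry are illustrative choices, not taken from the text.

```python
import math
from itertools import combinations

def v_ee(electrons):
    """Electron-electron repulsion: sum over pairs i<j of 1/|r_i - r_j|."""
    return sum(1.0 / math.dist(ri, rj) for ri, rj in combinations(electrons, 2))

def v_ext(electrons, nuclei):
    """External potential summed over electrons.

    nuclei is a list of (Z, position) pairs; the point-charge form
    -Z/|r - R| is an assumption made for this example.
    """
    return sum(-Z / math.dist(r, R) for r in electrons for Z, R in nuclei)

# Hypothetical configuration: two electrons on opposite sides of a Z=2 nucleus.
electrons = [(0.0, 0.0, 1.0), (0.0, 0.0, -1.0)]
nuclei = [(2.0, (0.0, 0.0, 0.0))]

repulsion = v_ee(electrons)            # one pair at distance 2
attraction = v_ext(electrons, nuclei)  # two electrons at distance 1 from Z=2
```

Both quantities are multiplicative operators, so for a given configuration they reduce to plain numbers; only the kinetic term $T$ requires derivatives of the wavefunction.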

A given electronic system is completely specified by the function vext , and has a

state specified by a wavefunction Ψ(x1 , x2 , · · · , xN ) where N is the number of electrons

and xi include both spin and spatial coordinates.

Assuming that Ψ is normalized, the energy of a system in the state Ψ is obtained

by taking its expectation with respect to the Hamiltonian H.

$$
E = \int \Psi^\dagger(\vec{r})\, H\, \Psi(\vec{r})\, d\vec{r}
$$

We now extremize the energy of an electronic system while enforcing the constraint

of normalizability using the Lagrange multiplier λ

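$$
W = \int \Psi^\dagger(\vec{r})\, H\, \Psi(\vec{r})\, d\vec{r} - \lambda \cdot \left( \int \Psi^\dagger(\vec{r})\Psi(\vec{r})\, d\vec{r} - 1 \right)
$$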

By using the Lagrange multiplier λ, we have converted the optimization of E over

normalizable Ψ to an optimization of W over all Ψ and λ. For a given value of λ, we

extremize W over Ψ using the Euler-Lagrange equations

$$
\frac{\partial W}{\partial \Psi(\vec{r})} = H\Psi(\vec{r}) - \lambda \cdot \Psi(\vec{r}) = 0
\tag{1.2}
$$

By taking the inner product of equation 1.2 against Ψ† , we find that λ = E. We

thus derive the Schroedinger equation:

$$
H\Psi_i(\vec{r}) = E_i \Psi_i(\vec{r})
\tag{1.3}
$$
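The link between stationarity of the energy and the eigenvalue equation can be checked numerically on a small matrix. The sketch below uses a hypothetical real symmetric 2x2 matrix in place of $H$ (the numbers are arbitrary), finds its lowest eigenpair in closed form, and verifies that the residual $H\Psi - E\Psi$ vanishes and that no trial vector gives a lower energy expectation.

```python
import math

# Hypothetical 2x2 real symmetric "Hamiltonian"; values are arbitrary.
H = [[-1.0, 0.5],
     [0.5, 0.3]]

def apply(H, psi):
    """Matrix-vector product H|psi>."""
    return [sum(H[i][j] * psi[j] for j in range(2)) for i in range(2)]

def energy(H, psi):
    """Expectation value <psi|H|psi>/<psi|psi> for a real vector psi."""
    h_psi = apply(H, psi)
    return sum(p * q for p, q in zip(psi, h_psi)) / sum(p * p for p in psi)

def ground_state(H):
    """Lowest eigenpair of a symmetric 2x2 matrix with nonzero off-diagonal."""
    a, b, d = H[0][0], H[0][1], H[1][1]
    e0 = 0.5 * (a + d) - math.hypot(0.5 * (a - d), b)
    v = (b, e0 - a)  # satisfies (H - e0) v = 0
    norm = math.hypot(v[0], v[1])
    return e0, [v[0] / norm, v[1] / norm]

e0, psi0 = ground_state(H)

# Residual of the eigenvalue equation, H psi - E psi.
residual = [hp - e0 * p for hp, p in zip(apply(H, psi0), psi0)]

# Energies of a few normalized trial vectors; none should fall below e0.
trial_energies = [energy(H, [math.cos(t), math.sin(t)])
                  for t in (0.0, 0.7, 1.4, 2.1, 2.8)]
```

The Lagrange multiplier plays the role of `e0` here: it equals the energy expectation evaluated at the stationary vector.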

Equation 1.3 is a linear differential equation, and its solutions $\Psi_i$ are the eigenstates of the Hamiltonian $H$. The eigenstate with the lowest $E_i$ is the ground state. The $\Psi_i$ form a complete orthonormal basis, and any state $\Phi$ can be expanded as a linear combination of the $\Psi_i$, $\Phi(\vec{r}) = \sum_i C_i \Psi_i(\vec{r})$. The time-dependent Schroedinger equation

$$
H\Phi(\vec{r}, t) = i \frac{d\Phi(\vec{r}, t)}{dt}
$$

then gives its time-evolution as

$$
\Phi(\vec{r}, t) = \sum_i C_i \Psi_i(\vec{r})\, e^{-iE_i t}
$$
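A minimal sketch of this time evolution, working purely with expansion coefficients in the eigenbasis: each coefficient acquires the phase factor $e^{-iE_i t}$ that follows from the time-dependent equation with $\hbar = 1$. The two energies and the coefficients are illustrative values, not results from this work.

```python
import cmath
import math

def evolve(coeffs, energies, t):
    """Return the coefficients C_i * exp(-i E_i t) of Phi(t) in the eigenbasis."""
    return [c * cmath.exp(-1j * e * t) for c, e in zip(coeffs, energies)]

def overlap(a, b):
    """Inner product <a|b> of two coefficient vectors in an orthonormal basis."""
    return sum(x.conjugate() * y for x, y in zip(a, b))

coeffs = [0.6 + 0j, 0.8 + 0j]   # normalized: 0.36 + 0.64 = 1
energies = [-0.5, -0.125]       # illustrative eigenvalues (atomic units)

# The norm is conserved because every phase factor has unit modulus.
phi_t = evolve(coeffs, energies, 3.7)
norm_t = overlap(phi_t, phi_t).real

# After one period 2*pi/(E_2 - E_1) the state returns to itself up to a phase.
period = 2 * math.pi / (energies[1] - energies[0])
revival = abs(overlap(coeffs, evolve(coeffs, energies, period)))
```

Only energy differences affect observable quantities; a common shift of all $E_i$ changes the state by a global phase.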

1.1.1 Hartree-Fock

For electrons, the wavefunction Ψ has to be normalized and completely antisymmetric. Both requirements can be satisfied by the Slater determinant form [6] for the

wavefunction. Hartree-Fock is based on this functional form, and is a mean-field theory, where each electron only feels the average effects of the other electrons on itself.

This simplifies the interaction into an effective one-body potential, which in turn allows the wavefunction of 3N dimensions to be expressed in terms of N 3-dimensional

spin-orbitals.

The Slater determinant takes a set of orthonormal spin-orbitals $\phi_i(x)$, where $x$ includes both the spatial coordinate $\mathbf{r}$ and the spin coordinate $s$, and constructs $\Psi$ by taking the determinant of the matrix $\phi_i(x_j)$, where the row index $i$ and the column index $j$ run from 1 up to the number of electrons $N$:

$$
\Psi(x_1, x_2, \cdots, x_N) = \frac{1}{\sqrt{N!}}
\begin{vmatrix}
\phi_1(x_1) & \phi_1(x_2) & \cdots & \phi_1(x_N) \\
\phi_2(x_1) & \phi_2(x_2) & \cdots & \phi_2(x_N) \\
\vdots & \vdots & \ddots & \vdots \\
\phi_N(x_1) & \phi_N(x_2) & \cdots & \phi_N(x_N)
\end{vmatrix}
$$

Since the orbitals are normalized, the factor of $1/\sqrt{N!}$ provides the right normalization for the many-body wavefunction.
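The antisymmetry built into the determinant form can be seen directly in a small example. The sketch below evaluates a Slater determinant through the permutation (Leibniz) expansion for three toy one-dimensional functions; the functions are hypothetical stand-ins that ignore spin and are not orthonormal, so the prefactor is only illustrative. Swapping two coordinates flips the sign, and placing two particles at the same coordinate gives zero.

```python
import math
from itertools import permutations

def parity(perm):
    """Sign (+1 or -1) of a permutation given as a tuple of indices."""
    perm = list(perm)
    sign = 1
    for i in range(len(perm)):
        while perm[i] != i:
            j = perm[i]
            perm[i], perm[j] = perm[j], perm[i]  # one transposition
            sign = -sign
    return sign

def slater(orbitals, xs):
    """Determinant of the matrix orbitals[i](xs[j]), divided by sqrt(N!)."""
    n = len(orbitals)
    total = 0.0
    for perm in permutations(range(n)):
        term = parity(perm)
        for i, p in enumerate(perm):
            term *= orbitals[i](xs[p])
        total += term
    return total / math.sqrt(math.factorial(n))

# Toy 1-d "orbitals" chosen only for illustration.
orbitals = [
    lambda x: math.exp(-x * x),
    lambda x: x * math.exp(-x * x),
    lambda x: (2 * x * x - 1) * math.exp(-x * x),
]

psi = slater(orbitals, [0.3, -0.8, 1.1])
psi_swapped = slater(orbitals, [-0.8, 0.3, 1.1])    # particles 1 and 2 exchanged
psi_coincident = slater(orbitals, [0.3, 0.3, 1.1])  # two equal coordinates
```

The permutation expansion costs $N!$ terms and is used here only for transparency; practical codes evaluate determinants by factorization.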

Since all electron positions are equivalent, the expectation of a one-body operator

in terms of the orbitals is then

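$$
\begin{aligned}
\int \Psi^\dagger(x_1, x_2, \cdots, x_N) \sum_i O_i\, \Psi(x_1, x_2, \cdots, x_N)\, dx_1 dx_2 \cdots dx_N
&= N \int \Psi^\dagger(x_1, x_2, \cdots, x_N)\, O_1\, \Psi(x_1, x_2, \cdots, x_N)\, dx_1 dx_2 \cdots dx_N \\
&= \sum_j \int \phi_j^\dagger(x_1)\, O_1\, \phi_j(x_1)\, dx_1
\end{aligned}
$$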

and the expectation of a two-body operator is

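$$
\begin{aligned}
\int \Psi^\dagger(x_1, x_2, \cdots, x_N) \sum_{i<j} O_{ij}\, \Psi(x_1, x_2, \cdots, x_N)\, dx_1 dx_2 \cdots dx_N
&= \frac{N(N-1)}{2} \int \Psi^\dagger(x_1, x_2, \cdots, x_N)\, O_{12}\, \Psi(x_1, x_2, \cdots, x_N)\, dx_1 dx_2 \cdots dx_N \\
&= \frac{1}{2} \sum_{j,k} \int \phi_j^\dagger(x_1)\phi_k^\dagger(x_2)\, O_{12}\, \phi_j(x_1)\phi_k(x_2)\, dx_1 dx_2 \qquad (1.4) \\
&\quad - \frac{1}{2} \sum_{j,k} \int \phi_j^\dagger(x_1)\phi_k^\dagger(x_2)\, O_{12}\, \phi_j(x_2)\phi_k(x_1)\, dx_1 dx_2 \qquad (1.5)
\end{aligned}
$$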

The detailed derivation of these rules can be found in reference [7]. For the inter-electron Coulomb operator $v_{ee}$, the integral corresponding to line 1.4 is simply the classical Coulomb repulsion, denoted $E_J$, and is strictly positive. Line 1.5 corresponds to the energy savings that come from the antisymmetry of the single-determinant wavefunction; it makes a strictly negative contribution and is called the exchange term, denoted $E_K$. The Hartree-Fock energy is then

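$$
\begin{aligned}
E_{HF} &= E_T + E_{v_{ext}} + E_J - E_K \\
E_T &= \sum_i \int \phi_i^\dagger(x_1)\, T_1\, \phi_i(x_1)\, dx_1 \\
E_{v_{ext}} &= \sum_i \int \phi_i^\dagger(x_1)\, v_{ext}(x_1)\, \phi_i(x_1)\, dx_1 \\
E_J &= \frac{1}{2} \sum_{j,k} \int \phi_j^\dagger(x_1)\phi_k^\dagger(x_2)\, \frac{1}{|\mathbf{r}_1 - \mathbf{r}_2|}\, \phi_j(x_1)\phi_k(x_2)\, dx_1 dx_2 \qquad (1.6) \\
E_K &= \frac{1}{2} \sum_{j,k} \int \phi_j^\dagger(x_1)\phi_k^\dagger(x_2)\, \frac{1}{|\mathbf{r}_1 - \mathbf{r}_2|}\, \phi_j(x_2)\phi_k(x_1)\, dx_1 dx_2 \qquad (1.7)
\end{aligned}
$$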
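As a short worked example of how the Coulomb and exchange sums combine, consider two electrons occupying the same spatial orbital $\varphi$ with opposite spins, so that the two spin-orbitals are $\varphi\alpha$ and $\varphi\beta$. The symbol $J_{\varphi\varphi}$ below is introduced only for this example. Integrating over spin, every $(j,k)$ pair contributes to the Coulomb sum, while only same-spin pairs (here $j=k$) survive in the exchange sum:

$$
\begin{aligned}
J_{\varphi\varphi} &\equiv \int\!\!\int \frac{|\varphi(\mathbf{r}_1)|^2\, |\varphi(\mathbf{r}_2)|^2}{|\mathbf{r}_1 - \mathbf{r}_2|}\, d\mathbf{r}_1 d\mathbf{r}_2 \\
E_J &= \frac{1}{2} \sum_{j,k \in \{\alpha,\beta\}} J_{\varphi\varphi} = 2 J_{\varphi\varphi} \\
E_K &= \frac{1}{2} \sum_{j,k \in \{\alpha,\beta\}} \delta_{\sigma_j \sigma_k} J_{\varphi\varphi} = J_{\varphi\varphi} \\
E_J - E_K &= J_{\varphi\varphi}
\end{aligned}
$$

The $j=k$ terms in $E_J$ describe each electron repelling itself; they are cancelled exactly by the $j=k$ terms in $E_K$, leaving only the repulsion between the two distinct electrons.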

By minimizing $E_{HF}$ with respect to the orbitals, and enforcing normalization of the orbitals with Lagrange multipliers $\epsilon_i$, one gets the Hartree-Fock equations

$$
H_{HF}\, \phi_i = \epsilon_i \phi_i
\tag{1.8}
$$

where the effective single-particle Hamiltonian, the Fock operator HHF , is given by

$$
H_{HF} = T + v_{ext} + J - K
\tag{1.10}
$$

and the Coulomb J and exchange K operators have been defined as

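$$
\begin{aligned}
J f(x_1) &= \sum_j \left( \int \frac{\phi_j^\dagger(x_2)\phi_j(x_2)}{|\mathbf{r}_1 - \mathbf{r}_2|}\, dx_2 \right) f(x_1) \\
K f(x_1) &= \sum_j \left( \int \frac{\phi_j^\dagger(x_2) f(x_2)}{|\mathbf{r}_1 - \mathbf{r}_2|}\, dx_2 \right) \phi_j(x_1)
\end{aligned}
$$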

The eigenstates of the Fock operator are the Hartree-Fock orbitals. In this picture, the electrons interact with each other purely through the Coulomb term $J$ and the exchange term $K$.

The Hamiltonian has no spin dependence, and hence commutes with the spin

operator. This makes the spatial and spin dependence of each spin-orbital separable

- each spin-orbital φi (x) can be expressed as φi (r)σi (s) where σ has two possible

values, α and β. Accounting for this explicitly, we get the unrestricted Hartree-Fock

equations

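$$
H_{UHF}\, \phi_i = \epsilon_i \phi_i
\tag{1.11}
$$

$$
\begin{aligned}
H_{UHF} &= T + v_{ext} + J - K \\
J f(\mathbf{r}_1) &= \sum_j \left( \int \frac{\phi_j^\dagger(\mathbf{r}_2)\phi_j(\mathbf{r}_2)}{|\mathbf{r}_1 - \mathbf{r}_2|}\, d\mathbf{r}_2 \right) f(\mathbf{r}_1) \\
K f(\mathbf{r}_1) &= \sum_j \left( \int \frac{\phi_j^\dagger(\mathbf{r}_2) f(\mathbf{r}_2)}{|\mathbf{r}_1 - \mathbf{r}_2|}\, d\mathbf{r}_2 \right) \phi_j(\mathbf{r}_1)\, \delta_{\sigma_j, \sigma_f}
\end{aligned}
$$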

where all the operators are now spatial integrals. The orbitals are occupied as in

figure 1-1.

So far, by assuming the single determinant form, we have reduced the search for

the N-electron wavefunction Ψ(x1 , x2 , · · · , xN ) to a search for N orbitals φi (r). Each

orbital at this point could be any 3-d function. We introduce a further simplification

of a basis set at this point. A basis set is a set of functions used to expand the orbitals: each $\phi_i$ is represented as a linear combination of basis functions, $\phi_i(\mathbf{r}) = \sum_j C_{ij} \chi_j(\mathbf{r})$. Within a given basis set, the orbital coefficients $C_{ij}$ then specify the orbital.

We now introduce a change in the representation of state. The one-body reduced density matrix $\rho_\sigma(\mathbf{r}, \mathbf{r}') = \sum_i \delta(\sigma, \sigma_i)\, \phi_i(\mathbf{r}) \phi_i^\dagger(\mathbf{r}')$ is a one-body projection operator onto the occupied orbital space, and can be built from the orbitals. The UHF orbitals can also be obtained from it (up to a unitary transformation) by diagonalization.

This operator contains the same information as the orbitals, and is generally more

convenient to work with.
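The projection property of the density matrix is easy to verify in matrix form. The sketch below assumes real orbital coefficients expressed in an orthonormal basis (a simplification; atom-centered basis functions are generally not orthogonal and would bring in an overlap matrix). The two occupied coefficient vectors are hypothetical.

```python
def density_matrix(occupied):
    """P = sum_i c_i c_i^T for occupied orbitals given as coefficient vectors.

    Assumes real coefficients in an orthonormal basis.
    """
    n = len(occupied[0])
    return [[sum(c[a] * c[b] for c in occupied) for b in range(n)]
            for a in range(n)]

def matmul(A, B):
    """Plain matrix product for small square matrices."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

s = 2 ** -0.5
# Two orthonormal occupied orbitals of one spin in a three-function basis.
occupied = [[s, s, 0.0],
            [0.0, 0.0, 1.0]]

P = density_matrix(occupied)
PP = matmul(P, P)

# A projector is idempotent, and its trace counts the occupied orbitals.
idempotency_error = max(abs(PP[i][j] - P[i][j])
                        for i in range(3) for j in range(3))
trace = sum(P[i][i] for i in range(3))
```

Any rotation among the occupied vectors leaves `P` unchanged, which is the sense in which the orbitals are recovered from it only up to a unitary transformation.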

Figure 1-1: UHF orbital filling - ↑ are α-electrons, ↓ are β-electrons. Each horizontal line represents a different orbital, and the α and β electrons fill different orbitals in an Aufbau manner, from the bottom up. In this particular example, there are 4 α electrons, 4 β electrons and 6 basis functions forming 12 spin-orbitals.

Equation 1.11 is an integro-differential equation because of the $J$ and $K$ terms, and is usually solved using iterative techniques (fig. 1-2) which compute $J$ and $K$ from $\{\phi_i\}$ and use numerical linear algebra to solve the eigenproblem in equation 1.11. For the solution to equation 1.11 to be a minimum, the self-consistency criterion must be satisfied, i.e. the $\phi_i$ must be the eigensolutions of the mean-field Fock matrix $H_{UHF}$ computed from themselves, which also means that the reduced density matrix $\rho_\sigma(\mathbf{r}, \mathbf{r}')$ commutes with the Fock matrix $H_{UHF}$:

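$$
\int \left[ \rho_\sigma(\mathbf{r}, \mathbf{r}')\, H_{UHF}(\mathbf{r}', \mathbf{r}'') - H_{UHF}(\mathbf{r}, \mathbf{r}')\, \rho_\sigma(\mathbf{r}', \mathbf{r}'') \right] d\mathbf{r}' = 0
$$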

The Direct Inversion in Iterative Space (DIIS) step in figure 1-2 is an extrapolation

method that uses past Fock matrices to produce a better guess for the next iteration

[8, 9].
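The self-consistency loop can be sketched on a toy problem. The example below is not the algorithm of figure 1-2: it uses a hypothetical two-site model with hopping `t`, site energies `eps` and an on-site repulsion `U` treated at the mean-field level for one doubly occupied orbital, and it replaces DIIS with plain linear damping (`mix`). It does show the two ingredients described above: rebuilding the mean-field matrix from the current density, and stopping when the density reproduces itself, at which point the density matrix commutes with the mean-field matrix.

```python
import math

def fock(P, eps, t, U):
    """Mean-field matrix built from the density matrix P (toy closed-shell model)."""
    return [[eps[0] + 0.5 * U * P[0][0], -t],
            [-t, eps[1] + 0.5 * U * P[1][1]]]

def lowest_orbital(F):
    """Normalized lowest eigenvector of a symmetric 2x2 matrix (off-diagonal != 0)."""
    a, b, d = F[0][0], F[0][1], F[1][1]
    lam = 0.5 * (a + d) - math.hypot(0.5 * (a - d), b)
    v = (b, lam - a)
    norm = math.hypot(v[0], v[1])
    return [v[0] / norm, v[1] / norm]

def commutator_norm(F, P):
    """Largest element of FP - PF."""
    n = range(2)
    FP = [[sum(F[i][k] * P[k][j] for k in n) for j in n] for i in n]
    PF = [[sum(P[i][k] * F[k][j] for k in n) for j in n] for i in n]
    return max(abs(FP[i][j] - PF[i][j]) for i in n for j in n)

def toy_scf(eps=(0.0, 0.5), t=1.0, U=2.0, mix=0.5, tol=1e-12, max_iter=200):
    """Iterate density -> mean field -> orbital -> density until self-consistent."""
    P = [[1.0, 0.0], [0.0, 1.0]]  # initial guess: one electron per site
    for iteration in range(1, max_iter + 1):
        F = fock(P, eps, t, U)
        c = lowest_orbital(F)
        P_new = [[2.0 * c[i] * c[j] for j in range(2)] for i in range(2)]
        change = max(abs(P_new[i][j] - P[i][j])
                     for i in range(2) for j in range(2))
        # Damped update; an extrapolation scheme such as DIIS would go here.
        P = [[(1 - mix) * P[i][j] + mix * P_new[i][j] for j in range(2)]
             for i in range(2)]
        if change < tol:
            return P, iteration
    raise RuntimeError("SCF did not converge")

P, n_iter = toy_scf()
F = fock(P, (0.0, 0.5), 1.0, 2.0)
residual = commutator_norm(F, P)
```

In production codes the commutator itself is commonly used as the error vector for DIIS, since it vanishes exactly at self-consistency.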

Figure 1-2: Typical Algorithm for Solving the HF equations

The Hartree-Fock energy is variational, i.e. it is never lower than the exact ground-state energy:

$$
E_{HF} \geq E_{ground}
$$

This is because the only approximation we have made is to restrict the search to the space of single-determinant wavefunctions made of orbitals in some finite basis, which is a subset of the space of all antisymmetric wavefunctions.

1.1.2 Electronic Correlation

Eigenstates of the full Hamiltonian (equation 1.1) are 3N-dimensional antisymmetric,

normalized functions. N-body eigenstates of non-interacting Hamiltonians are Slater

determinants, which are a subset of these functions. Under Hartree-Fock, all the interactions come in the form of an effective one-body operator, the Fock operator, which means that many many-body effects are neglected. This is why we call Slater determinants non-interacting states.

The correlation energy is defined as the difference between the ground-state energy and the Hartree-Fock energy in the complete basis limit, and is negative because of the variational nature of the Hartree-Fock energy. Even in the complete basis limit, the correlation energy is non-zero because the exact ground-state wavefunction is not a single determinant.

$$
E_c = E_{ground} - E_{HF}
$$

As described before, the Slater determinant takes $N$ spin-orbitals and constructs an $N$-body wavefunction out of them. The non-interacting ground state is the Slater determinant constructed from the $N$ lowest-eigenvalue spin-orbitals. Non-interacting excited states are also Slater determinants, and are constructed from all the other sets of $N$ spin-orbitals. Altogether, from a basis of $M$ spin-orbitals, $\binom{M}{N}$ Slater determinants, including the ground state, can be formed. These are all the eigenstates of a Hermitian $N$-body operator, and thus form a complete basis for expressing any other $N$-body wavefunction, including the interacting ground state $\Phi_0$. Following chapter 4 of Ref. [7], we denote the non-interacting ground state by $\Psi_0$ and denote the excited states by listing the orbital substitutions. For example, the excited-state determinant that substitutes unoccupied orbitals $r$ and $s$ for occupied orbitals $a$ and $b$ is denoted $\Psi_{ab}^{rs}$.

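$$
\Phi_0 = c_0 \Psi_0 + \sum_{ar} c_a^r \Psi_a^r + \sum_{\substack{a<b \\ r<s}} c_{ab}^{rs} \Psi_{ab}^{rs} + \sum_{\substack{a<b<c \\ r<s<t}} c_{abc}^{rst} \Psi_{abc}^{rst} + \sum_{\substack{a<b<c<d \\ r<s<t<u}} c_{abcd}^{rstu} \Psi_{abcd}^{rstu} + \cdots
\tag{1.12}
$$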

This is the CI expansion for the exact wavefunction. Given a basis of reference spin-orbitals, usually from Hartree-Fock, the exact ground state is expressible in terms of the coefficients $c$ in equation 1.12. There are, however, $\binom{M}{N}$ coefficients, a number which grows exponentially with the basis size; as of 2008, the largest systems treatable with Full CI have only 10-12 electrons.
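To make the growth concrete, the sketch below counts determinants for a hypothetical case of 10 electrons in 20 spin-orbitals and breaks the total down by excitation level, mirroring the terms of equation 1.12. A $k$-fold excitation chooses $k$ occupied spin-orbitals to vacate and $k$ unoccupied ones to fill; no spin or spatial symmetry restrictions are applied, which is a simplifying assumption.

```python
from math import comb

def n_determinants(M, N):
    """Number of ways to occupy N of M spin-orbitals."""
    return comb(M, N)

def n_excitations(M, N, k):
    """Number of k-fold excited determinants relative to a reference."""
    return comb(N, k) * comb(M - N, k)

M, N = 20, 10  # illustrative sizes
by_level = [n_excitations(M, N, k) for k in range(N + 1)]

total = n_determinants(M, N)
singles, doubles = by_level[1], by_level[2]

# Doubling the basis at fixed electron count shows how quickly the space grows.
growth = [n_determinants(m, N) for m in (20, 40, 80)]
```

The excitation levels partition the full space, so `sum(by_level)` equals `total`; the singles and doubles are a tiny fraction of it, which is why truncated expansions are attractive.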

All differences between the exact wavefunction Φ0 and the Hartree-Fock wavefunction Ψ0 are bundled under the title of correlation. Correlation is further qualitatively

divided into two types, dynamical and static correlation.

Static correlation is the long-range correlation that results from there not being a

good single-determinant representation for the ground state. For example, the exact

electronic wavefunction in a dissociated singlet H· -H· molecule is equal parts H↑ -H↓

and H↓ -H↑ . However, in the large R limit, the best single-determinant wavefunction

is

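$$
\begin{aligned}
\Psi(\mathbf{r}_1, \mathbf{r}_2) &= \frac{1}{\sqrt{2}}
\begin{vmatrix}
\frac{1}{\sqrt{2}} \left( \phi_{L\uparrow}(\mathbf{r}_1) + \phi_{R\uparrow}(\mathbf{r}_1) \right) & \frac{1}{\sqrt{2}} \left( \phi_{L\uparrow}(\mathbf{r}_2) + \phi_{R\uparrow}(\mathbf{r}_2) \right) \\
\frac{1}{\sqrt{2}} \left( \phi_{L\downarrow}(\mathbf{r}_1) + \phi_{R\downarrow}(\mathbf{r}_1) \right) & \frac{1}{\sqrt{2}} \left( \phi_{L\downarrow}(\mathbf{r}_2) + \phi_{R\downarrow}(\mathbf{r}_2) \right)
\end{vmatrix} \\
&\propto \left\{ \left( \phi_{L\uparrow}(\mathbf{r}_1) + \phi_{R\uparrow}(\mathbf{r}_1) \right) \left( \phi_{L\downarrow}(\mathbf{r}_2) + \phi_{R\downarrow}(\mathbf{r}_2) \right) \right\} - \{ \mathbf{r}_1 \leftrightarrow \mathbf{r}_2 \} \\
&= \left\{ \phi_{L\uparrow}(\mathbf{r}_1)\phi_{L\downarrow}(\mathbf{r}_2) + \phi_{R\uparrow}(\mathbf{r}_1)\phi_{R\downarrow}(\mathbf{r}_2) + \phi_{L\uparrow}(\mathbf{r}_1)\phi_{R\downarrow}(\mathbf{r}_2) + \phi_{R\uparrow}(\mathbf{r}_1)\phi_{L\downarrow}(\mathbf{r}_2) \right\} - \{ \mathbf{r}_1 \leftrightarrow \mathbf{r}_2 \}
\end{aligned}
$$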

The first two terms are energetically unfavorable ionic terms, $\mathrm{H}^{-}_{\uparrow\downarrow}$-$\mathrm{H}^{+}$ and $\mathrm{H}^{+}$-$\mathrm{H}^{-}_{\uparrow\downarrow}$, which have energies that go as $-1/R$ [10]. This is a case we discuss further in chapter 3. Static correlation can be treated by explicitly using multiple determinants in multi-reference methods [11].
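The weight of the ionic terms can be read off by expanding the product of the two delocalized orbitals. The sketch below does this bookkeeping symbolically, under the assumption that the left and right site orbitals are orthonormal (appropriate at large R) so that each of the four product terms carries equal weight; the labels are illustrative.

```python
from itertools import product

sites = ("L", "R")

# Expand (phi_L + phi_R) for the spin-up electron times
# (phi_L + phi_R) for the spin-down electron: one term per (up, down) pair.
terms = list(product(sites, repeat=2))

# Both electrons on the same atom is an ionic term, otherwise covalent.
ionic = [term for term in terms if term[0] == term[1]]
covalent = [term for term in terms if term[0] != term[1]]

# With orthonormal site orbitals every term has the same weight.
ionic_weight = len(ionic) / len(terms)
covalent_weight = len(covalent) / len(terms)
```

Half of the single-determinant wavefunction is therefore ionic at any separation, whereas the exact dissociated singlet contains only the covalent terms.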

Dynamic correlation is a short-range effect that results from electrons dynamically avoiding each other and thus lowering inter-electron repulsion. A simple example is the helium atom, which has two electrons in close proximity. Dynamic correlation can be treated by starting from a single-determinant reference and subsequently adding contributions from excited determinants, either variationally or perturbatively. In cases with dynamic correlation, the actual wavefunction may have a good overlap (90%-95%) with the single-determinant wavefunction, with the remainder resulting from the sum of small contributions from many other determinants. Those small contributions are significant energetically because they allow the electrons to move in such a way as to avoid each other. These contributions are best treated by perturbative methods like MP2 [12].

Active Space Methods

Active space methods solve the Schroedinger equation within a subspace of the $\binom{M}{N}$-determinant complete basis. That subspace is known as the active space, and CI methods use the matrix elements of the full electronic Hamiltonian, and the overlap within that space, to compute the $c$ coefficients. The relatively small number of determinants needed to treat static correlation motivates the use of active space methods.

For example, in Complete-Active-Space Self-Consistent-Field (CASSCF), one picks

a subset of the M orbitals Ms and number of electrons Ns , and within the resulting

$\binom{M_s}{N_s}$ space of determinants, alternately solves for the CI coefficients c and the optimal

orbitals {φi } under a given set of CI coefficients.

Figure 1-3: Some CASSCF(2,3) configurations

In figure 1-3 we have shown some possible configurations under CASSCF(2,3),

where 2 electrons are placed in any of the $\binom{3}{1}^2$ possible configurations with the same

total spin.
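The size of such an active space is easy to count. The sketch below assumes that the configurations are enumerated as products of an α string and a β string over the same set of active orbitals; the function name is ours.

```python
from math import comb

def n_determinants(n_orbitals, n_alpha, n_beta):
    """Number of determinants with fixed numbers of alpha and beta electrons.

    Each spin channel independently chooses which active orbitals to occupy.
    """
    return comb(n_orbitals, n_alpha) * comb(n_orbitals, n_beta)

# CASSCF(2,3): one alpha and one beta electron in three active orbitals.
cas_2_3 = n_determinants(3, 1, 1)
```

The count grows combinatorially with the number of active orbitals, which is why the active space has to be kept small.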

We develop a DFT-based active space CI method for treating both dynamic and

static correlation in chapter 3.


1.2

Density Functional Theory

1.2.1

The Hohenberg-Kohn Theorems

Most of the difficulties in conventional wavefunction-based electronic structure stem

from the complexity of the many-body wavefunction $\Psi(\vec{r})$. It is, however, possible to

avoid the many-body wavefunction. Density Functional Theory (DFT) is the study

of electronic structure with single-particle density as the independent parameter. The

single-particle density is obtained from the many-body wavefunction by integrating

out all but one position variable.

\[
\rho(x_1) = \int \Psi^\dagger(x_1, x_2, \cdots, x_N)\,\Psi(x_1, x_2, \cdots, x_N)\,dx_2\,dx_3 \cdots dx_N
\]
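As an illustration of integrating out all but one coordinate, the following sketch builds a two-particle wavefunction on a one-dimensional grid and reduces it to a single-particle density. The Gaussian orbitals, grid and normalization to one (as in the expression above, rather than to the number of electrons) are assumptions made for the example.

```python
import numpy as np

# One-dimensional grid shared by both particles.
x = np.linspace(-8.0, 8.0, 401)
dx = x[1] - x[0]

def normalized(f):
    """Scale f so that sum |f|^2 dx = 1 on the grid."""
    return f / np.sqrt(np.sum(np.abs(f) ** 2) * dx)

# Two illustrative single-particle functions (displaced Gaussians).
a = normalized(np.exp(-0.5 * (x - 1.0) ** 2))
b = normalized(np.exp(-0.5 * (x + 1.0) ** 2))

# Antisymmetric two-particle wavefunction psi[i, j] = Psi(x1_i, x2_j).
psi = np.outer(a, b) - np.outer(b, a)
psi /= np.sqrt(np.sum(np.abs(psi) ** 2) * dx * dx)

# Integrate out x2 to obtain a function of x1 alone.
rho = np.sum(np.abs(psi) ** 2, axis=1) * dx
```

The two-particle object `psi` needs one array axis per particle, whereas `rho` always lives on a single copy of the grid.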

The single-particle density is a vastly simpler object than the many-body wavefunction, having only 3, as opposed to 3N, coordinates. Surprisingly, as proven by the

Hohenberg-Kohn theorem [13], the single-particle density contains all the information

of the many-body ground-state wavefunction. The crux of the Hohenberg-Kohn theorem is the idea that, even though electronic Hamiltonians are two-body operators,

the difference between any two electronic Hamiltonians is a one-body operator.

We will now present a proof via reductio ad absurdum for the first Hohenberg-Kohn theorem, which states that there exists a one-to-one correspondence between

densities ρ and external potentials vext . We start by assuming the contrary, that there

exist two external potentials vext1 and vext2 which differ by more than a constant

and which give rise to two different wavefunctions Ψ1 and Ψ2 and two different energies

E1 and E2 but the same density ρ. The difference E1 − E2 is given by

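\begin{align}
E_1 - E_2 &= \int \Psi_1^\dagger(\vec{r}) H_1 \Psi_1(\vec{r})\,d\vec{r} - \int \Psi_2^\dagger(\vec{r}) H_2 \Psi_2(\vec{r})\,d\vec{r} \nonumber \\
&= \int \Psi_1^\dagger(\vec{r}) H_1 \Psi_1(\vec{r})\,d\vec{r} - \int \Psi_2^\dagger(\vec{r}) (H_2 - H_1 + H_1) \Psi_2(\vec{r})\,d\vec{r} \tag{1.13} \\
&= \int \Psi_1^\dagger(\vec{r}) H_1 \Psi_1(\vec{r})\,d\vec{r} - \int \Psi_2^\dagger(\vec{r}) H_1 \Psi_2(\vec{r})\,d\vec{r} - \int \Psi_2^\dagger(\vec{r}) (H_2 - H_1) \Psi_2(\vec{r})\,d\vec{r} \nonumber \\
&= \int \Psi_1^\dagger(\vec{r}) H_1 \Psi_1(\vec{r})\,d\vec{r} - \int \Psi_2^\dagger(\vec{r}) H_1 \Psi_2(\vec{r})\,d\vec{r} - \int \rho(\vec{r}) \left(v_{ext2}(\vec{r}) - v_{ext1}(\vec{r})\right) d\vec{r} \tag{1.14}
\end{align}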

By the variational principle, we know that

\[
\int \Psi_1^\dagger(\vec{r}) H_1 \Psi_1(\vec{r})\,d\vec{r} - \int \Psi_2^\dagger(\vec{r}) H_1 \Psi_2(\vec{r})\,d\vec{r} < 0 \tag{1.15}
\]

and so

\[
E_1 - E_2 < -\int \rho(\vec{r}) \left(v_{ext2}(\vec{r}) - v_{ext1}(\vec{r})\right) d\vec{r} \tag{1.16}
\]

We could, however, also have chosen to do the same proof for the expectation of

E2 − E1 , which would merely have swapped the labels 1 and 2, giving us

\[
E_1 - E_2 > \int \rho(\vec{r}) \left(v_{ext1}(\vec{r}) - v_{ext2}(\vec{r})\right) d\vec{r} \tag{1.17}
\]

Combining inequalities 1.16 and 1.17, we get the contradiction

E1 − E2 > E1 − E2

Thus it must not be possible for two external potentials differing by more than a

constant to give rise to the same density. This proves the existence of a mapping from

densities ρ(r) to external potentials vext (r). The converse mapping is simply solving

the Schroedinger equation for external potential vext (r) and integrating the resulting

wavefunction to obtain the density ρ(r). This is the first Hohenberg-Kohn theorem

- the existence of a one-to-one map between the set of single-particle ground-state

densities and the external potentials which generate them.

Since the external potential defines the ground-state wavefunction from which

every ground-state observable can be obtained, the first Hohenberg-Kohn proof establishes the existence of density functionals for every property of the corresponding

ground-state wavefunction. This includes, crucially, its energy expectation under any

other Hamiltonian. Using this fact, the second Hohenberg-Kohn theorem expresses

the variational principle in a density functional form, proving the existence of a universal functional G[ρ] which can be used to find the energy of any ground-state density

under any other Hamiltonian as defined by external potential vext (r)

\[
E_v[\rho] = \int v_{ext}(r)\rho(r)\,dr + \frac{1}{2}\int\!\!\int \frac{\rho(r)\rho(r')}{|r - r'|}\,dr\,dr' + G[\rho] \tag{1.18}
\]

where G[ρ] is universal and only dependent on the density, and Ev [ρ] is variational.

Even though it is proven to exist, however, the explicit form of the energy functional

G[ρ] is not known. There are, instead, numerous attempts at approximations to the

energy functional. One of the key approaches, in which the work of this thesis is

couched, is the Kohn-Sham formalism [14].

1.2.2

The Kohn-Sham Formalism

To derive the Kohn-Sham formalism, one begins by considering the set of non-interacting Hamiltonians

\[
H_s = -\frac{1}{2}\nabla^2 + v_{ext} \tag{1.19}
\]

Notice that the Hohenberg-Kohn theorem also applies for this set of non-interacting

Hamiltonians Hs, and therefore a one-to-one mapping exists between the non-interacting

ground-state densities ρ and vext which give rise to them. A density functional of the

non-interacting kinetic energy, Ts [ρ] then exists. All the ground states of Hamiltonians

1.19 are single determinants, and Ts can be computed exactly from the orbitals

that make up the determinant

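\begin{align*}
\rho(r) &= \sum_i \phi_i^\dagger(r)\phi_i(r) \\
T_s[\rho] &= \sum_i \int \phi_i^\dagger(r) \left(-\frac{1}{2}\nabla^2\right) \phi_i(r)\,dr
\end{align*}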

Using Ts , Kohn and Sham define Exc , the exchange-correlation energy, as such:

G[ρ] = Ts [ρ] + Exc [ρ]

(1.20)

Substituting G[ρ] into equation 1.18 then gives

\[
E_v[\rho] = T_s[\rho] + \int v_{ext}(r)\rho(r)\,dr + \frac{1}{2}\int\!\!\int \frac{\rho(r)\rho(r')}{|r - r'|}\,dr\,dr' + E_{xc}[\rho] \tag{1.21}
\]

Extremizing Ev [ρ] with respect to Kohn-Sham orbitals φi (r) and using the Euler-Lagrange equations then gives the Kohn-Sham equations

\begin{align*}
H_{KS}\,\phi_i(r) &= \epsilon_i \phi_i(r) \\
H_{KS} &= \frac{dE_v[\rho]}{d\phi_i(r)} = -\frac{1}{2}\nabla^2 + v_{ext} + J + v_{xc}[\rho] \\
v_{xc}[\rho] &= \frac{dE_{xc}[\rho]}{d\rho}
\end{align*}

Kohn-Sham DFT is a search over N-body single determinant space, and is solved

using highly similar techniques to Hartree-Fock, like that depicted in fig. 1-2. The

Kohn-Sham wavefunction is the exact ground-state solution to a non-interacting

Hamiltonian, the Kohn-Sham Hamiltonian. The resulting density is assumed to also

be a fully-interacting ground-state density corresponding to some other external potential

vext (r). This is the assumption of v-representability. This is a requirement

inherited from the Hohenberg-Kohn theorem, which is the proof of a one-to-one mapping only within a subset of all possible ground-state densities - densities which originate from the ground state under some vext . Examples of densities which are not

v-representable include one-electron excited state densities, and also other specific

examples invented to explore this assumption [15, 16]. In the absence of a rigorous

proof, however, KS-DFT has been applied to many ground-state molecular systems

and found to work quite well empirically. This suggests that non v-representable

densities must be quite rare in practice. We explore this issue further in chapter 4.

Given v-representability, Kohn-Sham DFT (KS-DFT) is in principle an exact

formulation of many-body quantum mechanics. However, just like the case for the

energy functional Ev [ρ], the expression for Exc [ρ], the exchange-correlation functional,

is not known. The achievement of KS-DFT lies in the non-interacting kinetic energy

Ts [ρ] being a good approximation to the exact kinetic energy, making Exc [ρ] much

smaller in magnitude than G[ρ], and correspondingly easier to approximate. Despite

this, the development of exchange-correlation functionals remains an active field of

research, and the quality of the exchange-correlation functional used has a big effect

on the efficacy of KS-DFT.

1.2.3

Constrained Density Functional Theory (CDFT)

KS-DFT searches for the lowest energy solution amongst all possible single-determinant

Kohn-Sham wavefunctions. Constrained DFT is a search that operates only over the

space of Kohn-Sham wavefunctions that satisfy the specified density constraints [17].

Constraints come in the form of

\[
\int w_c(r)\rho(r)\,dr - N_c = 0 \tag{1.22}
\]

where wc is a space dependent weight function, ρ(r) is the electron density and Nc

is the target value of the constraint operator. Spin indices have been suppressed for

clarity. Possible constraints are any function of position, e.g. dipole and multipole

moments and the total electron density on a subset of the atoms. Some operators like

the momentum operator cannot be expressed in this form. Constraints are imposed

using Lagrange multipliers, such that instead of finding the minimum of Ev [ρ] we find

the extrema of W [ρ, Vc ]:

\[
W[\rho, V_c] = E_v[\rho] + V_c\left(\int w_c(r)\rho(r)\,dr - N_c\right)
\]

We only show one constraint here, but the extension to multiple constraints is

straightforward. The resulting Kohn-Sham equations then look like they have an

extra external potential Vc wc (r) added. This potential is a function of the density,

and CDFT introduces no new assumptions over those already needed for KS-DFT.

\begin{align}
\frac{\delta W[\rho, V_c]}{\delta\rho} &= H_{CDFT} = H_{KS} + V_c w_c \tag{1.23} \\
H_{CDFT}\,\phi_i(r) &= \epsilon_i \phi_i(r) \tag{1.24}
\end{align}

The derivative with respect to Vc must also be zero when the constraint is satisfied:

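\[
\begin{aligned}
\frac{dW[\rho, V_c]}{dV_c} &= \frac{d\rho}{dV_c}\,\underbrace{\frac{\delta W[\rho, V_c]}{\delta\rho}}_{=\,0} + \frac{\partial W[\rho, V_c]}{\partial V_c} \\
&= \int w_c(r)\rho(r)\,dr - N_c = 0
\end{aligned}
\tag{1.25}
\]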

Note that we have made use of the fact that δW [ρ, Vc ]/δρ = 0 (Equation 1.23). Second

derivatives come from first-order perturbation theory:

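\[
\begin{aligned}
\frac{d^2 W[\rho, V_c]}{dV_c^2} &= \int w_c(r)\,\frac{d\rho(r)}{dV_c}\,dr \\
&= 2 \sum_{i \in occ} \sum_{a \in virt} \frac{\left|\int \phi_i(r) w_c(r) \phi_a(r)\,dr\right|^2}{\epsilon_i - \epsilon_a}
\end{aligned}
\tag{1.26}
\]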

where indices i and a run over the occupied and unoccupied orbitals respectively, and

$\epsilon_{i,a}$ are the Kohn-Sham eigenvalues. Equation 1.23 is solved as an eigenproblem using

numerical linear algebra routines, while equation 1.25 is solved using root-searching

algorithms, with the help of the analytic second derivative equation 1.26.
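The inner root search for Vc can be sketched on a toy model. The example below assumes a fixed one-electron Hamiltonian `h` with no self-consistency, real orbitals and a single constraint, and uses a plain Newton iteration with the derivative of equation 1.26; the function name and the two-site model are hypothetical and do not represent our production implementation.

```python
import numpy as np

def solve_constraint(h, w, n_occ, n_target, tol=1e-10, max_iter=50):
    """Find Vc such that the occupied orbitals of h + Vc*w satisfy the constraint."""
    vc = 0.0
    for _ in range(max_iter):
        eps, c = np.linalg.eigh(h + vc * w)
        occ, virt = c[:, :n_occ], c[:, n_occ:]
        # First derivative dW/dVc: the constraint violation (equation 1.25).
        residual = np.trace(occ.T @ w @ occ) - n_target
        if abs(residual) < tol:
            return vc
        # Second derivative d2W/dVc2 from perturbation theory (equation 1.26).
        w_ov = occ.T @ w @ virt
        gaps = eps[:n_occ, None] - eps[None, n_occ:]
        curvature = 2.0 * np.sum(w_ov ** 2 / gaps)
        # Newton step on the stationarity condition dW/dVc = 0.
        vc -= residual / curvature
    raise RuntimeError("constraint search did not converge")

# Two-site model: one electron, 0.8 electrons required on the first site.
h = np.array([[0.0, -1.0], [-1.0, 0.5]])
w = np.diag([1.0, 0.0])
vc = solve_constraint(h, w, n_occ=1, n_target=0.8)
```

For this two-site model the required multiplier can also be worked out by hand (Vc = -1), which makes it a convenient check. In a self-consistent calculation the same search would be repeated for every new Fock matrix.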

Figure 1-4: Algorithm for Solving the CDFT equations

Figure 1-4 illustrates the algorithms we use to solve the CDFT equations. Within

each of the black boxes are additional iterations which add the right multiple of

the constraint matrix to the output Fock matrix, set such that the resulting density

matrix built from the Fock matrix eigenvectors satisfies the constraints in equation

1.25.


Possible Constraints

Formally, the constraint wc can be any function of the α- and β- densities, but in

our implementation it is limited to linear combinations of α- and β- atomic populations.

We have implemented two definitions for the assignment of atomic populations, Becke

weights and Löwdin populations. The Becke weights use the atomic center assignments of density that are used in the Becke quadrature [18], whereas Löwdin weights

are basis-dependent projection operators obtained from diagonalizing the overlap matrix in the AO basis and assigning the resulting orthogonal basis functions to the AO

basis atomic centers. In either case, the resulting populations sum to the total density

of the atom and the population operators are Hermitian.
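A minimal sketch of the Löwdin assignment follows. It assumes a real AO density matrix `P`, an overlap matrix `S` and a list mapping each basis function to an atom; these names and the two-function example are illustrative, not taken from our implementation.

```python
import numpy as np

def lowdin_populations(P, S, atom_of_basis):
    """Atomic populations from the symmetrically orthogonalized density matrix."""
    # S^(1/2) from the eigendecomposition of the overlap matrix.
    s_val, s_vec = np.linalg.eigh(S)
    S_half = (s_vec * np.sqrt(s_val)) @ s_vec.T
    # Density matrix in the orthogonalized basis.
    P_orth = S_half @ P @ S_half
    pops = np.zeros(max(atom_of_basis) + 1)
    # Each orthogonalized function is assigned to the atom of its parent AO.
    for mu, atom in enumerate(atom_of_basis):
        pops[atom] += P_orth[mu, mu]
    return pops

# Two overlapping basis functions on two atoms, doubly occupied bonding orbital.
S = np.array([[1.0, 0.4], [0.4, 1.0]])
c = np.array([1.0, 1.0]) / np.sqrt(2.0 + 2.0 * 0.4)
P = 2.0 * np.outer(c, c)
pops = lowdin_populations(P, S, [0, 1])
```

Because the trace of the orthogonalized density matrix equals Tr(PS), the populations add up to the total number of electrons.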

Previous work in the group has applied CDFT to the computation of long-range

charge-transfer states [19], electron transfer coupling coefficients and reorganization

energies [20, 21], all through the use of different constraints. We will use CDFT in

chapter 3 to construct diabatic states, compute their electronic couplings, and use

that information to construct a more accurate ground state.

1.2.4

Time-Dependent Density Functional Theory (TDDFT)

So far, we have only talked about ground-state densities. There is, however, also

a formulation of time-dependent quantum mechanics based on the single-particle

density. As described earlier, the basis of the Hohenberg-Kohn theorem is the fact that

differences within the space of fully-interacting electronic Hamiltonians are all one-particle operators. Analogously, within the space of time-dependent fully-interacting

electronic Hamiltonians, if we disallow magnetic fields, any difference is also a time-dependent one-particle potential. For time-dependent Hamiltonians of the form

H(t) = T + vext (t) + vee

the Runge-Gross theorem [22] states that, given initial ground-state wavefunction

Φ(0), there exists a one-to-one correspondence between the time-dependent potential

vext (t) and the time-dependent density ρ(t). The corresponding Kohn-Sham formalism to the Runge-Gross theorem is the time-dependent Kohn-Sham (TDKS) equation,


which expresses the evolution of Kohn-Sham orbitals in response to a time-dependent

external potential:

\[
\partial_t \phi_i(t) = -i \cdot H_{KS}\left[\{\rho(\tau)\}_{\tau < t}, t\right]\phi_i(t) \tag{1.27}
\]

Just like the case for the ground-state Kohn-Sham equations, the time-dependent

Kohn-Sham orbitals give the same density as the exact wavefunction, but otherwise

are not to be attributed any physical meaning. Notice the density dependence in

equation 1.27 is of all densities before time t.
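To make the structure of equation 1.27 concrete, the sketch below propagates a single orbital under a fixed Hamiltonian. This is a strong simplification: the density dependence of HKS (and hence any memory) is ignored, and the two-level Hamiltonian and step size are arbitrary choices for illustration.

```python
import numpy as np

def propagate(phi, H, dt):
    """Advance phi by dt under a constant Hermitian H using exp(-i H dt)."""
    eps, V = np.linalg.eigh(H)
    U = (V * np.exp(-1j * eps * dt)) @ V.conj().T
    return U @ phi

# Two-level model with hopping t_hop; the orbital starts on the first site.
t_hop = 0.3
H = np.array([[0.0, t_hop], [t_hop, 0.0]])
phi = np.array([1.0 + 0.0j, 0.0 + 0.0j])

n_steps, dt = 200, 0.05
for _ in range(n_steps):
    phi = propagate(phi, H, dt)
total_time = n_steps * dt
```

The propagator is unitary, so the orbital norm is conserved at every step. In a real TDKS simulation the Hamiltonian would be rebuilt from the evolving density before each step.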

Time-Dependent Density Functional Response Theory (TDDFRT)

TDDFT is usually used in its linear response form, linear TDDFRT. We follow the

treatment presented in Ref. [23]. Consider, to first order, the effect of a perturbation

of time-dependent external potential at time t, δvext (t) on the TDKS density matrix

at time t′ , ρ(t′ ) = ρ0 + δρ(t′ ). The system starts out at t = −∞ in the ground state

ρ0 , is subject to perturbation δvext (t), and obeys the TDKS equations

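\begin{align}
H_{KS}[\rho, t] &= -\frac{1}{2}\nabla^2 + v_{ext} + \delta v_{ext}(t) + J[\rho] + v_{xc}[\rho] \nonumber \\
\partial_t \rho(t) &= -i\left[H_{KS}[\rho(t), t], \rho(t)\right] \tag{1.28}
\end{align}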

The response of a system at time t′ to a perturbation at time t is purely a function

of τ = t′ − t and of the density at t, ρ(t). This time-translation invariance makes

it preferable to work in frequency space, so we assume the following monochromatic

forms for δvext (t) and δρ(t′ ).

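\begin{align*}
\delta v_{ext}(t) &= \delta\tilde{v}_{ext}(\omega)e^{-i\omega t} + \delta\tilde{v}_{ext}^\dagger(\omega)e^{i\omega t} \\
\delta\rho(t') &= \delta\tilde{\rho}(\omega)e^{-i\omega t'} + \delta\tilde{\rho}^\dagger(\omega)e^{i\omega t'}
\end{align*}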

To first order, the TDKS equation of motion (Eq. 1.28) then results in

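\[
\left[
\begin{pmatrix} A(\omega) & B(\omega) \\ B(\omega) & A(\omega) \end{pmatrix}
- \omega \begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix}
\right]
\begin{pmatrix} \delta\tilde{\rho}(\omega) \\ \delta\tilde{\rho}^\dagger(\omega) \end{pmatrix}
=
\begin{pmatrix} \delta\tilde{v}_{ext}(\omega) \\ \delta\tilde{v}_{ext}^\dagger(\omega) \end{pmatrix}
\tag{1.29}
\]
\[
\begin{aligned}
A_{iajb}(\omega) &= (\epsilon_a - \epsilon_i)\delta_{ij}\delta_{ab} + B_{iabj}(\omega) \\
B_{iajb}(\omega) &= \int\!\!\int \phi_i(x)\phi_a(x)\left[\frac{1}{|r - r'|} + \tilde{f}_{xc}(\omega, x, x')\right]\phi_b(x')\phi_j(x')\,dx\,dx'
\end{aligned}
\tag{1.30}
\]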

where φi are the Kohn-Sham orbitals from the ground-state and εi are the Kohn-Sham

eigenvalues. f̃xc (ω, x, x′ ) is the frequency-dependent exchange-correlation kernel, and

represents the change in vxc at one time in response to a change in the density at

another. Up until this point, the treatment has been exact, given the restriction of

sticking to purely electric fields. Note that this means we are unable to treat magnetic

phenomena like EPR or electronic circular dichroism.

Under the adiabatic approximation, vxc is assumed to depend only on the density

at the same time, which in frequency space translates to f̃xc having no frequency

dependence:

\[
\tilde{f}_{xc}(\omega, x, x') = \tilde{f}_{xc}(0, x, x') = \frac{\delta v_{xc}[\rho](x)}{\delta\rho(x')} = \frac{\delta^2 E_{xc}[\rho]}{\delta\rho(x)\,\delta\rho(x')} \tag{1.31}
\]

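When the LHS of equation 1.29 is zero,

\[
\begin{pmatrix} A(\omega) & B(\omega) \\ B(\omega) & A(\omega) \end{pmatrix}
\begin{pmatrix} \delta\tilde{\rho}(\omega) \\ \delta\tilde{\rho}^\dagger(\omega) \end{pmatrix}
= \omega
\begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix}
\begin{pmatrix} \delta\tilde{\rho}(\omega) \\ \delta\tilde{\rho}^\dagger(\omega) \end{pmatrix},
\]

a finite δṽext (ω) gives rise to an infinite δρ̃(ω) - these are the poles of the response

matrix

\[
\begin{pmatrix} A(\omega) & B(\omega) \\ B(\omega) & A(\omega) \end{pmatrix}^{-1},
\]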

and the corresponding ω are the excited state energies of the system, with δρ̃(ω) being

the Kohn-Sham transition density matrices, which in turn give the exact transition

densities.
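For frequency-independent, real A and B (the adiabatic case), the pole condition above is a generalized eigenproblem that can be solved directly. The sketch below uses arbitrary 2x2 matrices as stand-ins for A and B; it compares the eigenvalues of the full problem with the commonly used reduced form, in which ω² are the eigenvalues of (A − B)(A + B).

```python
import numpy as np

# Hypothetical, frequency-independent A and B for two excitations.
A = np.array([[1.0, 0.1], [0.1, 1.5]])
B = np.array([[0.2, 0.05], [0.05, 0.1]])

# Full problem: multiplying both sides by diag(1, -1) turns the pole
# condition into an ordinary eigenproblem whose eigenvalues come in +/- pairs.
full = np.block([[A, B], [-B, -A]])
omega_full = np.sort(np.linalg.eigvals(full).real)
omega_pos = omega_full[omega_full > 0]

# Reduced problem: adding and subtracting the two block rows gives
# (A - B)(A + B)(X + Y) = omega^2 (X + Y).
omega_sq = np.linalg.eigvals((A - B) @ (A + B)).real
omega_reduced = np.sort(np.sqrt(omega_sq))
```

The reduced form halves the dimension of the problem; it relies on A + B and A − B being positive definite, which holds for the matrices chosen here.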

1.2.5

Approximate Exchange-Correlation Potentials

As mentioned earlier, the exact form for Exc [ρ] is not known, even in principle.

In order to use KS-DFT, we must have a way of approximating Exc [ρ]. Unlike

wavefunction-based approaches, there is no exact space which is truncated to produce

approximations - functionals are picked to satisfy both computability constraints and

also to satisfy criteria that the exact functional is known to satisfy, and maybe also

to produce the correct result for a model system. In this subsection, we introduce the

exchange-correlation functionals which we use in our work.

The Local Spin Density Approximation (LSDA) The uniform electron gas

is a fictitious system where a negative sea of electrons exactly neutralizes a uniform

background of positive charge. It is parameterized by only two parameters, the alpha

density ρα and the beta density ρβ , and so, assuming the form

\[
E_{xc}[\rho_\alpha, \rho_\beta] = \int f_{xc}^{LSDA}(\rho_\alpha(r), \rho_\beta(r))\,dr
\]

it is possible to formulate functional $f_{xc}^{LSDA}(\rho_\alpha, \rho_\beta)$ which gives the exact exchange and

correlation energies for every possible uniform electron gas. The resulting functionals

are the LSDA exchange and correlation functionals. The exchange functional was

derived by Slater as an approximation to Hartree-Fock exchange [2], whereas the

correlation functional is an interpolation based on accurate Monte-Carlo simulations

[24].

The LSDA works very well in situations where the electron density varies slowly,

outperforming semi-empirical functionals for solids and jellium surfaces, but tends to

overbind electrons in molecules and atoms [25].

Generalized Gradient Approximations (GGAs) One of the earliest attempts

to go beyond the LSDA was the gradient expansion approximation (GEA), a truncated

expansion based on slowly varying electron gas densities. This functional was

found to have worse performance than the LSDA when used on molecules.

This was found to be due to a violation of certain exact conditions called the

exchange-correlation hole sum rules [26, 27, 28]. By modifying the GEA functional to

satisfy the exchange-correlation hole sum rules, the first GGAs like PW86 [27] were

formulated. GGAs are of the form

\[
E_{xc}[\rho_\alpha, \rho_\beta] = \int f_{xc}(\rho_\alpha(r), \rho_\beta(r), |\nabla\rho_\alpha(r)|, |\nabla\rho_\beta(r)|)\,dr
\]

and make use of both density and gradient to determine functional values. Later

GGAs like PW91 [29] and PBE [30] were derived by first coming up with a model

for the exchange-correlation hole, and then deriving the resulting functional. The

judicious selection of exact conditions to satisfy is crucial - of particular note is the

Becke88 [31] exchange GGA functional, which gives the right asymptotic exchange

potential for finite systems, on top of satisfying the exchange hole sum rules.

GGAs correct for the tendency of LSDA to overbind and overestimate bond energies, performing better than LSDA in predicting total energies, atomization, barriers

and geometries [32, 33, 34, 35].

The Adiabatic Connection and Hybrid Functionals Consider the set of Hamiltonians

\[
H_\lambda = T + v_{ext}^\lambda + \lambda \cdot v_{ee}
\]

The Kohn-Sham and the full Hamiltonians are instances of this set, with λ = 0

and λ = 1 respectively. For a given density ρ(r), assume that there exists a $v_{ext}^\lambda$

for every value of λ that gives rise to ρ(r) as its ground-state density, with corresponding wavefunctions Ψλ . Then, using the Hellmann-Feynman theorem, one finds

an expression for the exchange-correlation energy Exc [ρ] [36]:

\[
E_{xc}[\rho] + E_J[\rho] = \int_0^1 \left[\int \Psi_\lambda^\dagger(\vec{r})\, v_{ee}\, \Psi_\lambda(\vec{r})\,d\vec{r}\right] d\lambda \tag{1.32}
\]

This process is known as the adiabatic connection. At λ = 0 , the integrand

of equation 1.32 is ∝ J − K, and the wavefunction Ψ0 is the Kohn-Sham Slater

determinant. This implies that if we were to evaluate integral 1.32 by quadrature, the

sum would include some component from K evaluated on the Kohn-Sham orbitals.

This logic guided Becke to postulate the half-and-half functional in Ref. [37] and

the B3PW91 functional in Ref. [38]. These are the hybrid functionals, some linear

combination of GGAs and the Hartree-Fock exchange as evaluated using the Kohn-Sham orbitals. In the case of B3PW91, the coefficients of the linear combination

were determined empirically by fitting to experimental results [38]. The resulting

functional is

\[
E_{xc}^{B3PW91} = E_{xc}^{LSDA} + a_0\left(E_x^{exact} - E_x^{LSDA}\right) + a_x\left(E_x^{Becke88} - E_x^{LSDA}\right) + a_c\left(E_c^{PW91} - E_c^{LSDA}\right)
\]

where a0 = 0.20, ax = 0.72, ac = 0.81.
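The mixing itself is a simple linear combination, as the following sketch shows. It implements the expression exactly as written above; the component energies passed in at the bottom are made-up numbers used only to exercise the function.

```python
def b3pw91_xc(e_xc_lsda, e_x_exact, e_x_lsda, e_x_b88, e_c_pw91, e_c_lsda,
              a0=0.20, ax=0.72, ac=0.81):
    """Combine component energies with the three B3PW91 mixing coefficients."""
    return (e_xc_lsda
            + a0 * (e_x_exact - e_x_lsda)   # exact-exchange correction
            + ax * (e_x_b88 - e_x_lsda)     # Becke88 term
            + ac * (e_c_pw91 - e_c_lsda))   # PW91 correlation term

# Hypothetical component energies in Hartrees, for illustration only.
e_hybrid = b3pw91_xc(e_xc_lsda=-1.00, e_x_exact=-0.95, e_x_lsda=-0.90,
                     e_x_b88=-0.94, e_c_pw91=-0.08, e_c_lsda=-0.10)
```

Setting all three coefficients to zero recovers the plain LSDA energy, so the coefficients control how far the hybrid moves away from LSDA.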

Currently, the most popular hybrid functional is B3LYP, which uses the same

coefficients as B3PW91 but replaces the PW91 correlation functional with the LYP

correlation functional. By incorporating exact exchange, B3LYP is able to get an

accuracy of 2-3 kcal/mol within the G2 test set. This is very close to chemical

accuracy, ≈ 1 kcal/mol. Also because of the exact exchange component, hybrid

functionals exhibit a lower level of self-interaction error (SIE) proportional to the

amount of exact exchange [39]. We discuss SIE further below.

One might think to just use exact exchange and only approximate correlation.

The problem with this approach lies in the inability of a delocalized exchange hole, in

combination with a localized correlation hole, to give rise to a good approximation to

the exact exchange-correlation hole (Ref. [10] chapter 6.6). Hybrid functionals hence

represent a compromise between accurate exchange and accurate correlation.

Advantages and Flaws of Approximate Exchange-Correlation Functionals

The choice to use DFT is often motivated by its efficiency. DFT is as cheap as Hartree-Fock theory for most purposes because of the way commonly used xc potentials

are designed - Exc [ρ] are formulated such that the resulting $v_{xc}[\rho] = dE_{xc}[\rho]/d\rho$ can

be evaluated point-by-point on a spatial grid. The consequence of this is that the

computation required to calculate vxc scales linearly with system size. This is what

makes KS-DFT so efficient.

KS-DFT’s efficiency in treating electronic correlation allows it to be used on systems for which conventional wavefunction methods are simply too expensive. This

cheapness, however, comes at a price - we know of many characteristics of the exact xc

functional that commonly used approximations do not satisfy. The inaccuracies

are discussed below.

Lack of Derivative Discontinuity We first define the energy of densities that do

not integrate to integers as being the result of ensembles of integer densities. In Ref.

[40], Perdew et al. show that the lowest energy mixture for a density that integrates

to M + ω electrons, where M is an integer and 0 ≤ ω ≤ 1, is the mixture of (1 − ω)

of the lowest energy M-electron density and ω of the (M + 1)-electron density, giving

rise to the energy

EM +ω = (1 − ω)EM + ωEM +1

This means that the energy as a function of N is a series of straight lines with

discontinuities on the first derivative at integer values. As a consequence of this, the

Kohn-Sham HOMO eigenvalue is in fact discontinuous at integer N:

\[
\epsilon_{HOMO} =
\begin{cases}
-I_Z & (Z - 1 < N < Z) \\
-A_Z & (Z < N < Z + 1)
\end{cases}
\]

where IZ and AZ are the ionization potential and electron affinity of the Z-electron system. This property is not present in approximate non-hybrid exchange-correlation

functionals [40, 41, 42]. It is partly present in hybrid functionals because of the

orbital-dependence of exact exchange. The derivative discontinuity problem is most

evident in the separation of ionic diatomics, like LiH, which dissociates to erroneous

partial charges. Under LSDA, LiH dissociates to $\mathrm{Li}^{+0.25}\mathrm{H}^{-0.25}$ instead of the correct

$\mathrm{Li}^{0}\mathrm{H}^{0}$ [40]. In solid state applications, the lack of a derivative discontinuity causes

underestimates in band gap predictions, which at times makes semi-conductors and

insulators look like metals [43]. For example, it causes LSDA to predict a silicon band

gap that is 50% too small [44].
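The piecewise-linear energy and the jump in its slope can be illustrated numerically. The sketch below uses the hydrogen nucleus with 0, 1 and 2 electrons; the energy of H⁻ is an approximate literature value quoted for illustration, and the function names are ours.

```python
import math

def ensemble_energy(n_electrons, integer_energies):
    """Energy at fractional electron number as a mixture of the two
    neighbouring integer-electron ground states."""
    m = min(int(math.floor(n_electrons)), len(integer_energies) - 2)
    w = n_electrons - m
    return (1.0 - w) * integer_energies[m] + w * integer_energies[m + 1]

# Energies in Hartrees for 0, 1 and 2 electrons on a proton
# (bare proton, H atom, and an approximate value for H-).
energies = [0.0, -0.5, -0.5277]

def slope(n, h=1e-6):
    """Central finite-difference derivative dE/dN."""
    return (ensemble_energy(n + h, energies)
            - ensemble_energy(n - h, energies)) / (2.0 * h)

slope_below = slope(0.5)   # equals -I of the hydrogen atom
slope_above = slope(1.5)   # equals -A of the hydrogen atom
```

The slope changes abruptly from −I to −A on crossing N = 1; a functional whose energy is a smooth function of N cannot reproduce this jump.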

Spatial Locality The local nature of exchange-correlation approximations means

that charge transfer and charge transport cannot be treated correctly using perturbation theory [45].

As noted earlier, other than the exact exchange component of hybrid functionals,

common approximate exchange-correlation potentials can all be evaluated on a grid.

This means that the TDDFRT exchange-correlation kernel is not only local in time

due to the adiabatic approximation, but also local in space. Equation 1.31 then

becomes

f̃xc (0, x, x′ ) = f̃xc (0, x)δ(x − x′ )

The potential at a given location vxc [ρ](r) only depends on properties of the

density at the same point. This property is purely an ad-hoc simplification, and not

given by the original Kohn-Sham derivation.

The simplification of spatial locality leads to erroneous predictions for charge-transfer under linear response TDDFT [45, 46]. Consider the Biajb (ω) term of TDDFRT

in equation 1.30. The ia and jb indices refer to excitations φi → φa and φj → φb .

Note that for long-range charge transfers, where there is little overlap between these

excitation pairs, the B(ω) term effectively goes to zero, and the excitation is then

determined solely by the A(ω) contribution, the difference in orbital eigenvalues.

For well-separated molecules, this difference is not distance dependent at all, and

so does not give rise to the correct 1/R factor. Charge transfer excitations are hence

underestimated by TDDFRT. The exact exchange component of hybrid functionals

is non-local, adding a multiple of the terms

\begin{align*}
A^X_{iajb}(\omega) &= -\int\!\!\int \phi_i(x)\phi_j(x)\,\frac{1}{|r - r'|}\,\phi_a(x')\phi_b(x')\,dx\,dx' \\
B^X_{iajb}(\omega) &= -\int\!\!\int \phi_i(x)\phi_b(x)\,\frac{1}{|r - r'|}\,\phi_a(x')\phi_j(x')\,dx\,dx'
\end{align*}

to Aiajb (ω) and Biajb (ω). $A^X_{iajb}(\omega)$ has the correct 1/R dependence and allows hybrid

functionals to perform better, but as a whole they still exhibit the same problem.

Self-Interaction Error (SIE) This is the tendency of individual electrons to feel

an erroneous repulsion from their own densities [47, 48]. Notice that even though

the inter-electron interaction is pairwise between the $N(N-1)/2$ pairs of electrons, the

sums in Hartree-Fock Coulomb (equation 1.6) and exchange (equation 1.7) energies

run over all $N^2$ orbital indices. This transformation is allowed because the j = k

terms in the Hartree-Fock Coulomb and exchange terms are equal and opposite in

sign to each other. This is the transformation that allows the Coulomb energy to

be evaluated purely from the classical electron density. It is, however, a problem for

DFT because DFT exchange is not evaluated exactly. Electrons hence experience a

spurious repulsion from themselves.
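The size of this effect can be estimated for the hydrogen atom, where the exact exchange energy must cancel the Hartree self-repulsion of 5/16 Hartree. The sketch below evaluates the LSDA (Slater/Dirac) exchange energy of the exact 1s density on a radial grid; correlation is ignored, and the grid parameters are arbitrary choices.

```python
import numpy as np

# Radial grid and the exact hydrogen 1s density (atomic units).
r = np.linspace(0.0, 30.0, 300001)
rho = np.exp(-2.0 * r) / np.pi

def radial_integral(f):
    """Trapezoidal integral of a spherically symmetric function over all space."""
    g = 4.0 * np.pi * r ** 2 * f
    return np.sum(0.5 * (g[1:] + g[:-1]) * np.diff(r))

# Uniform-gas exchange constant for the spin-unpolarized case.
c_x = 0.75 * (3.0 / np.pi) ** (1.0 / 3.0)

# Fully spin-polarized density: E_x[rho, 0] = (1/2) E_x^unpolarized[2 rho].
e_x_lsda = 0.5 * (-c_x) * radial_integral((2.0 * rho) ** (4.0 / 3.0))

e_hartree = 5.0 / 16.0      # analytic self-repulsion of the 1s density
e_x_exact = -e_hartree      # exact exchange cancels it for one electron
sie_exchange_only = e_hartree + e_x_lsda
```

The LSDA exchange energy comes out near −0.268 Hartree, so about 0.04 Hartree of self-repulsion is left uncancelled at the exchange-only level.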

[Plot: energy (Hartrees) versus bond length (Angstroms) for Hartree-Fock (exact), LSDA, PW91 and B3LYP]

Figure 1-5: $\mathrm{H}_2^+$ Energies in cc-PVTZ basis

Consider the example of the one-electron system $\mathrm{H}_2^+$. Hartree-Fock does not suffer at all

from SIE, and is used to find the exact energy in this example. The error in the energy

difference between the equilibrium and dissociated states comes from there being a

larger SIE in the equilibrium state, when the electron density is more compact. Note

that due to the inclusion of some exact exchange, hybrid functionals suffer less from

SIE.

Lack of Static Correlation Wavefunctions which are well-described by the sum of

multiple determinants exhibit long-range correlations which are treated with multiple-reference methods under wavefunction theories [11]. The Kohn-Sham Slater wavefunction is a single determinant by definition, and in principle is able to represent the

density of the multiple determinant wavefunction just fine. This non-interacting v-representability

has been demonstrated for the specific case of dissociating H2 , where the wavefunction

in the dissociated limit is the sum of two determinants, and yet can be represented

by a single determinant KS wavefunction [49]. Using approximate xc functionals,

however, static correlation is usually underestimated by DFT, especially when compensating effects from SIE are eliminated [50].

[Plot: energy (Hartrees) versus bond length (Angstroms) for CCSD (exact), Hartree-Fock, LSDA, PW91 and B3LYP]

Figure 1-6: H2 Energies in cc-PVTZ basis

Consider the 2-electron example of H2 . In 2-electron systems, the coupled-cluster

singles-doubles (CCSD) method is exact. None of the other methods have static


correlation, and in the case of Hartree-Fock this results in charge-separation terms

H−↑↓ -H+ and H+ -H−↑↓ which cause the energy to go up with distance. Note that

PW91, a GGA, and B3LYP, a hybrid functional, get pretty good energies at the

equilibrium distance due to their ability to treat dynamic correlation.

There is much work on new xc functionals which address these systemic inaccuracies [51, 52, 53]. In this work, however, no new xc functionals will be presented.

Instead, we focus on developing methods to extract physical properties of interest

using the DFT formalism. We do not ignore the artifacts described above; instead

of trying to tackle them directly, we design our procedures so as to minimize their

effects.

1.3

Structure of this Dissertation

This dissertation will be structured as follows - chapters are arranged according

to physical properties of interest. Each chapter begins with an introduction to the

significance of the property being studied, followed by a description of the state of the

art, then a description of the methodology which we have developed. An account of

results from applying the methodology to physical systems follows. The chapter then

concludes with rationalizations of the failures and successes of the approach and, if

appropriate, possible future directions.

Chapter 2 covers the work that we have done to simulate conductance in single

molecules. We numerically integrate the time-dependent Kohn-Sham (TDKS) equations in a Gaussian basis to simulate the flow of charge across a polyene molecule,

and characterize the corresponding current-voltage characteristics of the molecule.

Chapter 3 describes a configuration interaction method based on constrained density functional theory, the advantage of which is its ability to treat both dynamic

and static correlation at a modest computational cost. We apply this method to the

computation of molecular dissociation curves of small diatomic molecules. Chapter

4 details explorations into the ability of DFT to treat isolated excited states - we

devise a method that uses non-Aufbau occupation within the Kohn-Sham formalism

to determine the energy of excited stationary states. This method is applied to the


Rydberg states of hydrogen and lithium atoms under various functionals.


Chapter 2

Molecular Conductance using

Real-Time Time-Dependent

Density Functional Theory

Note: The bulk of this chapter has been published in Ref. [54].

2.1

Introduction

Molecular electronics represent the next length-scale in the miniaturization of electronics as embodied in Moore’s Law [55]. The pertinent physics in molecular electronics differs from that of molecules in bulk solids, and experiments probing the nature of

single-molecule devices involving metal-molecule-metal (MMM) junctions have been

performed in an attempt to understand more about them [56, 57, 58, 59, 60, 61, 62,

63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79]. In this chapter, we

describe the development of DFT-based methods used to simulate molecular-scale

conductors.

2.1.1

The Landauer-Büttiker Model

We start by examining charge transport under the Landauer-Büttiker model [80, 81,

82, 83, 84]. The Landauer formula expresses the conductance Γ as a sum of M

channels which each have transmittance Ti .

\[
\Gamma = \frac{1}{2\pi}\sum_i^M |T_i|^2
\]

The Landauer-Büttiker model is an elastic electron transport model - electrons

only exchange momentum with the channel, and are either transmitted through or

reflected from the channel without a change in energy. Under the Landauer-Büttiker

model, the metal leads to which the channel is connected exist in a state of grand

canonical equilibrium, possessing well-defined chemical potentials and driving electron transport through the channel by virtue of the difference in chemical potential

between the two leads. The resistance is quantized as a function of chemical potential difference, with step increases in conductance corresponding to the activation

of new quantum channels. When applying the Landauer-Büttiker model to MMM

experiments, treatments that interpret the channels as purely molecular scattering

coefficients have been pursued [85, 86, 87, 88, 89]. Under such interpretations, the

molecule acts as a potential off which electrons of the bulk scatter.
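As a rough numerical illustration of the Landauer formula above, the following Python sketch sums |T_i|^2 over channels in atomic units (where e^2/h = 1/2π) and converts the result to siemens. The function names, the constant names, and the choice to count each spin channel as a separate channel are assumptions made for this illustration and do not come from the original text.

```python
import math

# Exact SI values of the elementary charge (C) and the Planck constant (J s).
E_CHARGE = 1.602176634e-19
PLANCK_H = 6.62607015e-34

# One fully open channel carries e^2/h, which equals 1/(2*pi) in atomic units.
QUANTUM_AU = 1.0 / (2.0 * math.pi)
QUANTUM_SIEMENS = E_CHARGE**2 / PLANCK_H


def landauer_conductance(amplitudes):
    """Conductance in atomic units for a list of channel amplitudes T_i."""
    return QUANTUM_AU * sum(abs(t) ** 2 for t in amplitudes)


def to_siemens(conductance_au):
    """Convert a conductance from atomic units to siemens."""
    return conductance_au / QUANTUM_AU * QUANTUM_SIEMENS


if __name__ == "__main__":
    # Opening channels one at a time gives the step-like increase in conductance.
    for n_open in range(4):
        g = landauer_conductance([1.0] * n_open)
        print(n_open, g, to_siemens(g))
```

Each fully transmitting channel adds the same increment, which is the stepwise behaviour described in the text; partially transmitting channels (|T_i| < 1) contribute proportionally less.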

2.1.2 Non-Equilibrium Green’s Function (NEGF) Methods

Beyond scattering theory, the non-equilibrium Green’s function [90] can also be used

to obtain a Landauer-like expression for electron transport through junctions. The

NEGF contains the information needed to treat conductance exactly, but obtaining the exact NEGF is as difficult as solving the many-body Schrödinger equation.

Therefore, instead of computing the exact NEGF, various approximations to the

NEGF have been developed [91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102, 103, 104].

DFT-based NEGF models use ground-state DFT to provide the effective single-particle potential for the scattering formalism. They divide the system into three

regions, the left lead L, right lead R and molecule M, with the corresponding division

of the DFT Fock matrix

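$$
\mathbf{F}_{tot} =
\begin{pmatrix}
\mathbf{F}_L & \mathbf{F}_{LM} & 0 \\
\mathbf{F}_{LM}^\dagger & \mathbf{F}_M & \mathbf{F}_{MR} \\
0 & \mathbf{F}_{MR}^\dagger & \mathbf{F}_R
\end{pmatrix}.
$$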

For any given energy level E, an effective Hamiltonian over the molecular basis

functions is built:

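$$
\mathbf{F}_{eff} = \mathbf{F}_M - \mathbf{F}_{LM}^\dagger [\mathbf{F}_L - E\mathbf{I}]^{-1} \mathbf{F}_{LM} - \mathbf{F}_{MR} [\mathbf{F}_R - E\mathbf{I}]^{-1} \mathbf{F}_{MR}^\dagger
$$

F_eff is then used as an effective one-body Hamiltonian for computing the NEGF.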

Since the ground-state Fock matrix is used, the assumption implicit in this procedure

is that the steady state is a small perturbation from the ground state of the system.
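The partitioning step can be sketched numerically as follows. This is a minimal illustration that assumes an orthonormal basis and finite lead blocks at a real energy E. Practical NEGF codes typically use semi-infinite leads and add a small imaginary part to E, neither of which is shown here. The function name `effective_fock` and its arguments are hypothetical. Each lead is folded into the molecular block through its own diagonal block and its own coupling block.

```python
import numpy as np


def effective_fock(F_tot, n_L, n_M, E):
    """Fold the left and right lead blocks of F_tot into the molecular block.

    F_tot is ordered as (left lead, molecule, right lead) with no direct
    lead-lead coupling. n_L and n_M are the sizes of the first two blocks.
    """
    F_tot = np.asarray(F_tot, dtype=complex)
    L = slice(0, n_L)
    M = slice(n_L, n_L + n_M)
    R = slice(n_L + n_M, None)

    F_L, F_M, F_R = F_tot[L, L], F_tot[M, M], F_tot[R, R]
    F_LM, F_MR = F_tot[L, M], F_tot[M, R]

    # Solve linear systems instead of forming the inverses explicitly.
    sigma_L = F_LM.conj().T @ np.linalg.solve(F_L - E * np.eye(F_L.shape[0]), F_LM)
    sigma_R = F_MR @ np.linalg.solve(F_R - E * np.eye(F_R.shape[0]), F_MR.conj().T)
    return F_M - sigma_L - sigma_R


if __name__ == "__main__":
    # Three-site toy model: one basis function per region.
    F = np.array([[1.0, 0.5, 0.0],
                  [0.5, 0.0, 0.5],
                  [0.0, 0.5, -1.0]])
    print(effective_fock(F, 1, 1, 0.0))
```

A useful sanity check on such a sketch: when E is an eigenvalue of the full matrix, E is also an eigenvalue of the effective matrix evaluated at that E, provided the eigenvector has weight on the molecule.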

There remain persistent discrepancies between theoretical prediction and experimental measurement of molecular conductance. One finds that the best experiments

and theories differ by two orders of magnitude in predicting the conductance of simple

junctions.

2.1.3 Time-Dependent Density Functional Theory

TDDFT can be used to study molecular conductance by simulating the evolution of

the electron density ρ(t) under a time-dependent potential v(t). By formulating the

appropriate potential v(t), the charge transport observed can be taken to represent

the flow of charge in the molecule in response to a voltage bias. This approach is

supported by formal justification for TDDFT conductance simulations [105, 106, 107],

the study of the effect of existing DFT approximations on conductance [108, 109] and

protocols for simulating current flow using TDDFT [110, 111, 112, 113]. Our work is

most directly preceded by that described in Refs. [110, 112, 113].

As described in the introduction chapter, time-dependent density functional theory is an exact reformulation of many-body quantum mechanics. As such, barring

approximations at the DFT level, one would expect such an approach to be exact.

Difficulties with using TDDFT

There are two main obstacles that must be surmounted in order to use TDDFT to

conduct full numerical simulations of molecular conductance.

Computational Expense. The first difficulty is that of overcoming the computational expense of simulating the systems in real time. The conductance of a molecule

in theory is a local molecular property that is independent of the leads, and simulations should in principle model molecules as open systems that lose and gain electrons

from their environment. In practice, however, large closed systems that include the

environment are often used instead. Using a closed system to represent an open one

places lower bounds on the size of the system used - the simulated system has to be

large enough for results to approach convergence with respect to the infinite-size limit

[105]. There is also the issue of temporal convergence - experimental timescales are

typically much longer than electronic timescales, and hence experimental measurements are of steady-state properties. Therefore, for simulation results to be comparable to experiment, they must allow time for the system to reach steady state. These

lower bounds on system size and simulation time impose considerable computational

expense on the simulator.

Despite this expense, however, simulations of credible size (e.g. 50-100 atoms)

and time spans (femtoseconds) can now be done [114, 115, 116, 117, 118]. It has also

been previously shown that one can attain a quasi-steady state in a simple metal

wire using TDDFT [112]. Key to making these simulations practical is the efficient

integration of the time-dependent Kohn-Sham (TDKS) equations [119, 120, 121].

Voltage Identification. The second major obstacle to TDDFT conductance simulations is the difficulty of assigning a voltage value to a given current simulation. This is not a problem within the Landauer formalism: since the leads are

always near equilibrium, the voltage is simply the difference between two well-defined

chemical potential values. Differences in effective single-particle energy levels are

used in some modern theories to define voltage, the choices being the difference between Fermi-levels conditioned on direction of propagation [122, 91, 123, 124, 125],

or Fermi-levels of regions deep within the leads, away from the conducting molecule

[94, 95, 96, 97, 98, 99, 100, 101]. The use of Fermi-levels in DFT is not fully justified,

as the idea of a Fermi level is only valid for non-interacting particles. KS orbital

energies (other than the HOMO), which are used as proxies for Fermi energies, are

known to be meaningless [40, 41, 42, 126], and as a result, when computing biases by

subtracting two KS orbital energies from each other, one is in fact using an uncontrolled approximation. This difficulty is specific to the DFT formalism, and different

in nature from those faced in identifying the experimental voltage with microscopic

reality.

2.2 Methodology

2.2.1 Integrating the TDKS equations

These are the TDKS equations:

$$
H_{KS}[\rho(t), t] \cdot \phi_i(t) = i \frac{d\phi_i(t)}{dt} \qquad (2.1)
$$

where $\rho(t) \equiv \sum_i^{occ} |\phi_i(t)|^2$. They describe the evolution of a density in response

to a time-dependent external potential. Under the KS formalism, an effective noninteracting mean-field Hamiltonian HKS [ρ(t), t] is computed from the density ρ(t),

and it is under this Hamiltonian that the KS orbitals φi (t) evolve. Under the adiabatic

approximation [127], the KS Hamiltonian at time t, HKS [ρ(t), t], depends only on the

density at the same time ρ(t) and the time t itself. We only concern ourselves with

adiabatic TDDFT functionals, so this will remain true for the rest of this chapter.

The formal solution to the TDKS equations is:

$$
\phi(t + dt) = U(t + dt, t) \cdot \phi(t)
$$

$$
U(t + dt, t) \equiv \mathcal{T} \exp\left\{ -i \int_t^{t+dt} H_{KS}[\rho(\tau), \tau] \, d\tau \right\} \qquad (2.2)
$$

Here $\mathcal{T}$ is the time-ordering operator, which reorders operators such that they

always go from later to earlier times from left to right.
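To illustrate how Eq. 2.2 might be applied numerically, the sketch below advances orbital coefficients by repeatedly applying a short-time propagator. It assumes the Hamiltonian matrix can be treated as constant over each step and evaluates it at the step midpoint, so that time ordering becomes trivial within a step. This is a common simplification and is not claimed to be the integrator used in this work. The names `step_propagator`, `propagate` and `h_of_t` are hypothetical, and the density dependence of the KS Hamiltonian is left out of the callable for brevity.

```python
import numpy as np


def step_propagator(H, dt):
    """Return exp(-i H dt) for a Hermitian matrix H via its eigendecomposition."""
    w, V = np.linalg.eigh(H)
    # Scale column j of V by exp(-i w_j dt), then rotate back to the original basis.
    return (V * np.exp(-1j * w * dt)) @ V.conj().T


def propagate(phi0, h_of_t, t0, dt, n_steps):
    """Advance phi0 by n_steps steps of length dt using midpoint Hamiltonians."""
    phi = np.array(phi0, dtype=complex)
    t = t0
    for _ in range(n_steps):
        U = step_propagator(h_of_t(t + 0.5 * dt), dt)
        phi = U @ phi
        t += dt
    return phi


if __name__ == "__main__":
    # Two-level toy system with constant coupling between the levels.
    sigma_x = np.array([[0.0, 1.0], [1.0, 0.0]])
    phi = propagate([1.0, 0.0], lambda t: sigma_x, 0.0, np.pi / 200, 100)
    print(np.abs(phi) ** 2)  # populations of the two levels
```

Because each step is an exact exponential of a Hermitian matrix, every step is unitary and the norm of the orbitals is preserved regardless of step size. The step size then controls only how well the piecewise-constant Hamiltonian tracks the true time dependence.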

HKS [ρ(t), t] is composed of three main parts,

$$
H_{KS}[\rho(t), t] = -\frac{1}{2}\nabla^2 + v_{ext}(t) + v_{Jxc}[\rho(t)]
$$

the kinetic energy ($-\frac{1}{2}\nabla^2$), the external potential ($v_{ext}$) and the inter-electron

Coulomb-exchange-correlation potential ($v_{Jxc}$). Note that only $v_{ext}$ has an explicit

time dependence. The kinetic energy operator is time-independent, whereas the

Coulomb-exchange-correlation potential is time-dependent only through the density

ρ(t). The implicit time-dependence of the Coulomb-exchange-correlation potential is

an important detail which we will revisit later.
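The distinction between explicit and implicit time dependence can be made concrete with a small one-dimensional grid model. The sketch below is an illustration under stated assumptions: a second-order finite-difference kinetic operator, and user-supplied callables `v_ext(x, t)` and `v_jxc(rho)` standing in for the external and Coulomb-exchange-correlation potentials. All function names and the toy potentials in the usage lines are hypothetical.

```python
import numpy as np


def kinetic_matrix(n_grid, dx):
    """Finite-difference matrix for -1/2 d^2/dx^2 in atomic units."""
    lap = (-2.0 * np.eye(n_grid) + np.eye(n_grid, k=1) + np.eye(n_grid, k=-1)) / dx**2
    return -0.5 * lap


def density(orbitals):
    """rho(x) = sum_i |phi_i(x)|^2 for orbitals of shape (n_grid, n_occ)."""
    return np.sum(np.abs(orbitals) ** 2, axis=1)


def ks_hamiltonian(orbitals, t, x, kinetic, v_ext, v_jxc):
    """Assemble H_KS = T + v_ext(t) + v_Jxc[rho(t)] on the grid x.

    Only v_ext receives the time t directly; v_jxc sees time only through
    the density built from the current orbitals.
    """
    rho = density(orbitals)
    return kinetic + np.diag(v_ext(x, t)) + np.diag(v_jxc(rho))


if __name__ == "__main__":
    x = np.linspace(-5.0, 5.0, 101)
    dx = x[1] - x[0]
    T = kinetic_matrix(x.size, dx)
    phi = np.exp(-x**2)[:, None]
    phi = phi / np.sqrt(np.sum(np.abs(phi) ** 2) * dx)  # normalize on the grid
    ramped_bias = lambda x, t: 0.01 * x * min(t, 1.0)   # toy external potential
    toy_jxc = lambda rho: 0.5 * rho                     # toy local potential
    H = ks_hamiltonian(phi, 0.5, x, T, ramped_bias, toy_jxc)
    print(H.shape)
```

With a static external potential, calling `ks_hamiltonian` at two different times with the same orbitals returns the same matrix, while changing the orbitals changes the matrix through the density alone.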

The one-particle density matrix (1PDM) P(t) and the KS orbitals φi (t) are equivalent ways of representing the states of the single-determinant KS wavefunction, with