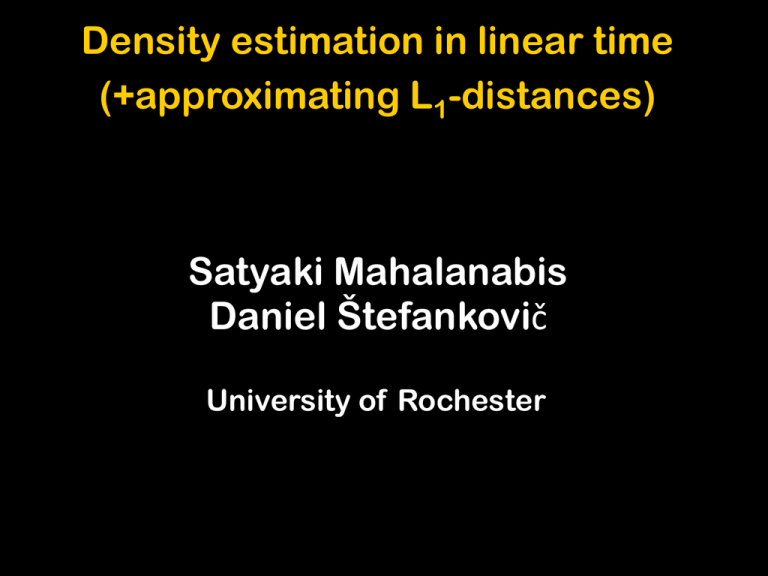

Satyaki Mahalanabis Daniel Štefankovi Density estimation in linear time (+approximating L

advertisement

Density estimation in linear time

(+approximating L1-distances)

Satyaki Mahalanabis

Daniel Štefankovič

University of Rochester

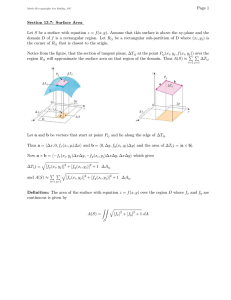

Density estimation

f1

f3

f6

f2

f4

+ DATA

f5

F = a family of densities

density

Density estimation - example

N(,1)

+

F = a family of normal

densities with =1

0.418974,

0.848565,

1.73705,

1.59579,

-1.18767,

-1.05573,

-1.36625

Measure of quality:

g=TRUTH

f=OUTPUT

L1 – distance from the truth

|f-g|1 = |f(x)-g(x)| dx

Why L1?

1) small L1 all events estimated with

small additive error

2) scale invariant

Obstacles to “quality”:

F

+ DATA

bad data

weak class

of densities

dist1(g,F)

?

What is bad data ? | h-g |1

g = TRUTH

h = DATA (empirical density)

= 2max |h(A)-g(A)|

AY(F)

f1

f2

f3

Y(F) = Yatracos class of F

Aij={ x | fi(x)>fj(x) }

A12

A13

A23

Density estimation

F

+

DATA (h)

f with small |g-f|

assuming these are small:

dist1(g,F)

= 2max |h(A)-g(A)|

AY(F)

1

Why would these be small ???

dist1(h,F)

= 2max |h(A)-g(A)|

AY(F)

They will be if:

1) pick a large enough F

2) pick a small enough F

so that VC-dimension of Y(F) is small

3) data are iid from h

Theorem (Haussler,Dudley, Vapnik, Chervonenkis):

E[max|h(A)-g(A)|] VC(Y)

samples

AY

How to choose from 2 densities?

f1

f2

How to choose from 2 densities?

f1

+1

f2

+1 +1

-1

How to choose from 2 densities?

+1

Th

f2

+1 +1

f1

T f1

T f2

-1

T

How to choose from 2 densities?

f2

Th

f1

T f1

T f2

Scheffé:

if T h > T (f1+f2)/2 f1

else f2

Theorem

DL’01):

+1 +1(see

+1

-1

|f-g|1 3dist1(g,F) + 2

T

Density estimation

F

+

DATA (h)

f with small |g-f|

assuming these are small:

dist1(g,F)

= 2max |h(A)-g(A)|

AY(F)

1

Test functions

F={f1,f2,...,fN}

Tij (x) = sgn(fi(x) – fj(x))

Tij(fi – fj) = (fi-fj)sgn(fi-fj) = |fi – fj|1

Tijh

fj wins

Tijfj

fi wins

Tijfi

Density estimation algorithms

Scheffé tournament:

Pick the density with the most wins.

Theorem (DL’01):

|f-g|1 9dist1(g,F)+8

2

n

Minimum distance estimate (Y’85):

Output fk F that minimizes

max

|(f

-h)

T

|

k

ij

3

ij

Theorem (DL’01):

|f-g|1 3dist1(g,F)+2

n

Density estimation algorithms

Scheffé tournament:

Pick the density with the most wins.

Theorem (DL’01):

|f-g|1 9dist1(g,F)+8

2

n

Minimum distance estimate (Y’85):

Output

F that

minimizes

Can

wefkdo

better?

max

|(f

-h)

T

|

k

ij

3

ij

Theorem (DL’01):

|f-g|1 3dist1(g,F)+2

n

Our algorithm:

Efficient minimum loss-weight

repeat until one distribution left

1) pick the pair of distributions in F

that are furthest apart (in L1)

2) eliminate the loser

Theorem [MS’08]:

|f-g|1 3dist1(g,F)+2

n

*

Take the most “discriminative” action.

* after preprocessing F

Tournament revelation problem

INPUT:

a weighed undirected graph G

(wlog all edge-weights distinct)

OUTPUT:

REPORT: heaviest edge {u1,v1} in G

ADVERSARY eliminates u1 or v1 G1

REPORT: heaviest edge {u2,v2} in G1

ADVERSARY eliminates u2 or v2 G2

.....

OBJECTIVE:

minimize total time spent generating reports

Tournament revelation problem

A

report the heaviest edge

4

3

2

B

D

5

6

1

C

Tournament revelation problem

A

report the heaviest edge

4

3

BC

2

B

D

5

6

1

C

Tournament revelation problem

A

3

D

report the heaviest edge

BC

2

1

eliminate B

C

report the heaviest edge

Tournament revelation problem

A

3

D

report the heaviest edge

BC

2

1

eliminate B

C

report the heaviest edge

AD

Tournament revelation problem

report the heaviest edge

BC

eliminate B

D

1

C

report the heaviest edge

AD

eliminate A

report the heaviest edge

CD

Tournament revelation problem

A

B

4

3

2

D

6

1

C

AD

B

5

BC

C

BD

A

D

DC

AC

B

AD

2O(F) preprocessing O(F) run-time

O(F2 log F) preprocessing O(F2) run-time

WE DO NOT KNOW:

Can get O(F) run-time with

polynomial preprocessing ???

D

AB

Efficient minimum loss-weight

repeat until one distribution left

1) pick the pair of distributions that are

furthest apart (in L1)

2) eliminate the loser

(in practice 2) is more costly)

2O(F) preprocessing O(F) run-time

O(F2 log F) preprocessing O(F2) run-time

WE DO NOT KNOW:

Can get O(F) run-time with

polynomial preprocessing ???

Efficient minimum loss-weight

repeat until one distribution left

1) pick the pair of distributions that are

furthest apart (in L1)

2) eliminate the loser

Theorem:

|f-g|1 3dist1(g,F)+2

Proof:

n

“that guy lost even more badly!”

For every f’ to which f loses

|f-f’|1 max |f’-f’’|1

f’ loses to f’’

Proof:

“that guy lost even more badly!”

For every f’ to which f loses

|f-f’|1 max |f’-f’’|1

f’ loses to f’’

2hT23 f2T23 + f3T23

f1

(f1-f2)T12 (f2-f3) T23

(f4-h)T23

(fi-fj)(Tij-Tkl) 0

bad loss

f3

BEST=f2

|f1-g|1 3|f2-g|1+2

Application:

kernel density estimates

(Akaike’54,Parzen’62,Rosenblatt’56)

K = kernel

h = density

1

n

n

kernel used to smooth empirical g

(x1,x2,...,xn i.i.d. samples from h)

K(y-x )

i=1

=

g*K

i

as n

h*K

What K should we choose?

1

n

K(y-x )

n i=1

g*K

i

Dirac is not good

as n

h*K

Dirac would be good

Something in-between: bandwidth selection

for kernel density estimates

K(x/s)

as s 0

Ks(x)=

s

Ks(x) Dirac

Theorem (see DL’01): as s 0 with sn

|g*K – h|1 0

Data splitting methods for

kernel density estimates

How to pick the smoothing factor ?

n

1

y-xi

K

ns

s

i=1

(

x1,...,xn-m

x1,x2,...,xn

xn-m+1,...,xn

)

1

fs =

(n-m)s

n-m

K(

i=1

y-xi

s

choose s using

density estimation

)

Kernels we will use:

1

ns

K(

y-xi

s

)

piecewise uniform

piecewise linear

Bandwidth selection for uniform

1/2

E.g.

N

n

kernels

5/4

mn

N distributions

each is piecewise uniform with n pieces

m datapoints

Goal: run the density estimation algorithm efficiently

TIME

MD

EMLW

gTij

(fi+fj)Tij

2

n+m log n

(fk-h) Tkj

n+m log n

|fi-fj|1

n

N

N2

N2

Bandwidth selection for uniform

1/2

E.g.

N

n

kernels

5/4

Can speed m n

N distributions

this

up?

each is piecewise uniform with n pieces

m datapoints

Goal: run the density estimation algorithm efficiently

TIME

MD

EMLW

gTij

(fi+fj)Tij

2

n+m log n

(fk-h) Tkj

n+m log n

|fi-fj|1

n

N

N2

N2

Bandwidth selection for uniform

1/2

E.g.

N

n

kernels

5/4

Can speed m n

N distributions

this

up?

each is piecewise uniform with n pieces

absolute error bad

Goal: run the density estimation algorithm efficiently

relative

error

good

TIME

MD

EMLW

m datapoints

gTij

(fi+fj)Tij

2

n+m log n

(fk-h) Tkj

n+m log n

|fi-fj|1

n

N

N2

N2

Approximating L1-distances

between distributions

N piecewise uniform densities (each n pieces)

(N2+Nn) (log N)

WE WILL DO:

2

TRIVIAL (exact): N2n

Dimension reduction for L2

Johnson-Lindenstrauss Lemma (’82)

: L2 Lt2

|S|=n

t = O(-2 ln n)

( x,y S)

d(x,y) d((x),(y)) (1+)d(x,y)

N(0,t-1/2)

Dimension reduction for L1

Cauchy Random Projection (Indyk’00)

: L1 Lt1

|S|=n

t = O(-2 ln n)

( x,y S)

d(x,y) est((x),(y)) (1+)d(x,y)

-1/2)

C(0,1/t)

N(0,t

(Charikar, Brinkman’03 : cannot replace est by d)

Cauchy distribution C(0,1)

density function:

1

(1+x2)

FACTS:

XC(0,1)

aXC(0,|a|)

XC(0,a), YC(0,b)

X+YC(0,a+b)

Cauchy random projection for L1

(Indyk’00)

A

X1

X2

B

D

X3 X4 X5 X6 X7 X8 X9

X1C(0,z)

A(X2+X3) + B(X5+X6+X7+X8)

z

Cauchy random projection for L1

(Indyk’00)

A

X1

z

X2

B

D

X3 X4 X5 X6 X7 X8 X9

X1C(0,z)

A(X2+X3) + B(X5+X6+X7+X8)

D(X1+X2+...+X8+X9)

Cauchy(0,|-|1)

All pairs L1-distances

piece-wise linear densities

All pairs L1-distances

piece-wise linear densities

R=(3/4)X1 + (1/4)X2

B=(3/4)X2 + (1/4)X1

R-BC(0,1/2)

X1

X2

C(0,1/2)

All pairs L1-distances

piece-wise linear densities

Problem: too many intersections!

Solution: cut into even smaller pieces!

Stochastic measures are useful.

1.0

Brownian motion

0.5

1

0.2

0.4

0.6

0.8

1.0

exp(-x^2/2)

(2)1/2

0.5

Cauchy motion

0.4

1

0.2

0.2

0.2

0.4

0.4

0.6

0.8

1.0

(1+x)2

1.0

Brownian motion

0.5

1

0.2

0.4

0.6

0.8

1.0

exp(-x^2/2)

(2)1/2

0.5

computing integrals is easy

f:RRd

f dL = Y N(0,S)

0.4

Cauchy motion

0.2

1

0.2

0.4

0.6

0.8

1.0

(1+x)2

0.2

0.4

computing integrals is easy

f:RRd

f dL = Y C(0,s) for d=1

computing integrals is hard d>1

*

* obtaining explicit expression for the density

X1

X2

X3 X4 X5 X6 X7 X8 X9

What were we doing?

(f1,f2,f3) dL = (w1)1,(w2)1,(w3)1

X1

X2

X3 X4 X5 X6 X7 X8 X9

What were we doing?

(f1,f2,f3) dL = (w1)1,(w2)1,(w3)1

Can we efficiently compute

integrals dL for piecewise linear?

Can we efficiently compute

integrals dL for piecewise linear?

: R R2

(z)=(1,z)

(X,Y)= dL

: R

(z)=(1,z)

2

R

(X,Y)= dL

u+v,u-v

(2(X-Y),2Y) has density at

2

All pairs L1-distances for mixtures of

uniform densities in time

(N^2+Nn) (log N)

2

O(

)

All pairs L1-distances for piecewise

linear densities in time

(N^2+Nn) (log N)

2

O(

)

QUESTIONS

: R R3

2)

(z)=(1,z,z

1)

(X,Y,Z)= dL

?

2) higher dimensions ?