Language-Based Security - Shambhu Upadhyaya November 15, 2006

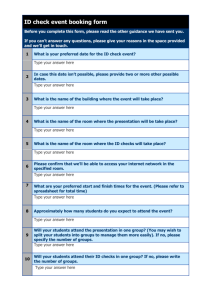


Language-Based Security

- Guest lecture in CSE 605: Advanced Programming Languages

November 15, 2006

Shambhu Upadhyaya

CSE Department

University at Buffalo

Motivation

Conventional security mechanisms treat programs as black boxes

Encryption

Firewalls

System calls/privileged mode, Access control

But they cannot address important current and future security needs:

Downloaded, mobile code

Buffer overruns and other safety problems

Threat Model

Remote Exploit

Attacker can send in-band data

Attacker can force execution to

jump to the data

Language-based security is one way to deal with it

Running

Process

Outline

The need for language-based security

Safety analyses and transformations

Making languages safe

Certifying compilation and verification

Runtime Environment Driven Program Safety – a

case study

Acknowledgements:

Fred Schneider, Greg Morrisett Tutorial (Cornell)

Ramkumar Chinchani (Cisco)

Computer security

Goal: prevent bad things from happening

Clients not paying for services

Critical service unavailable

Confidential information leaked

Important information damaged

System used to violate copyright

Conventional security mechanisms aren’t up

to the challenge

1st Principle of Security Design

Least Privilege: each principal is given the

minimum access needed to accomplish

its task [Saltzer & Schroeder ‘75]

Examples:

+ Administrators don’t run day-to-day tasks as root, so “rm -rf /” won’t wipe the disk

- fingerd runs as root so it can access different users’ .plan files. But then it can also “rm -rf /”

Problem: OS privilege is coarse-grained

2nd Principle of Security Design

Keep the TCB small.

Trusted Computing Base (TCB) : components

whose failure compromises the security of a

system

Example: TCB of operating system includes

kernel, memory protection system, disk image

Small/simple TCB:

TCB correctness can be checked/tested/reasoned about more easily, so it is more likely to work

Large/complex TCB:

TCB contains bugs enabling security violations

Attack Implementation

Stack-based Overflows

Heap-based Overflows

Format String Attack

Example Attack: buffer overflows

Payload

char buf[100];

…

gets(buf);

Return address

buf

Program

Stack

sp

Attacker gives long input that overwrites

function return address, local variables

“Return” from function transfers control to

payload code
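The overflow above is possible because gets() has no parameter for the size of buf. A minimal sketch of a bounded alternative, assuming the input arrives on a FILE stream; the helper name read_line is hypothetical and not part of the lecture:

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: read one line into buf without ever writing past
 * buf[size - 1]. Returns 0 on success, -1 on EOF, error, or bad arguments. */
int read_line(FILE *in, char *buf, size_t size)
{
    if (in == NULL || buf == NULL || size == 0)
        return -1;
    /* fgets stores at most size - 1 characters plus the terminating '\0'. */
    if (fgets(buf, (int)size, in) == NULL)
        return -1;
    /* Drop the trailing newline, if one was read. */
    buf[strcspn(buf, "\n")] = '\0';
    return 0;
}
```

With char buf[100], a call such as read_line(stdin, buf, sizeof buf) truncates an over-long line instead of overwriting the return address; the rest of the line stays in the stream.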

Example Attack: format strings

fgets(s, n, sock);

…

fprintf(output, s);

Attack: pass string s containing a %n conversion specifier (writes the number of characters output so far to an attacker-chosen location)

Use to overwrite return address to “return” to

malicious payload code in s

NB: neither attack is viable with a type-safe

language such as Java

But who’s going to rewrite 50Mloc in Java/C#?
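For the format string attack, a fix within C is to never pass untrusted data as the format argument. A minimal sketch; the helper name echo_untrusted is hypothetical:

```c
#include <stdio.h>

/* Hypothetical helper: write untrusted text s to out.
 * The format string is a constant, so any '%' inside s is printed
 * literally and is never interpreted as a conversion such as %n. */
int echo_untrusted(FILE *out, const char *s)
{
    return fprintf(out, "%s", s);
}
```

Called as echo_untrusted(output, s), the string s is treated purely as data, whatever it contains.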

1988: Morris Worm

Penetrated an estimated 6,000 machines (5-10% of hosts at that time)

Used a number of clever methods to gain

access to a host

brute force password guessing

bug in default sendmail configuration

X windows vulnerabilities, rlogin, etc.

buffer overrun in fingerd

Remarks:

Buffer overruns account for roughly half of CERT

advisories

You’d think we could solve this but…

1999: Love Bug & Melissa

Both email-based viruses that exploited:

a common mail client (MS Outlook)

trusting (i.e., uneducated) users

VB scripting extensions within messages to:

look up addresses in the contacts database

send a copy of the message to those contacts

ran with full privileges of the user

Melissa: hit an estimated 1.2 million machines

Love Bug: caused estimated $10B in damage

When to enforce security

Possible times to respond to security violations:

Before execution:

analyze, reject, rewrite

During execution:

monitor, log, halt, change

After execution:

rollback, restore, audit, sue, call police

Language-based techniques

A complementary tool in the arsenal: programs

don’t have to be black boxes! Options:

1. Analyze programs at compile time or load time to ensure that they are secure

2. Check analyses at load time to reduce TCB

3. Transform programs at compile/load/run time so that they don’t violate security, or so that they log actions for later auditing

Fixing The Problem

Compiler

Source Program

Static analysis

Model-checking

Type-safety

Binary Executable

Runtime checks

Anomaly detection

Maturity of language tools

Some things have been learned in the last 25 years…

How to build a sound, expressive type system that

provably enforces run-time type safety

protected interfaces

Type systems that are expressive enough to encode

multiple high-level languages

language independence

How to build fast garbage collectors

trustworthy pointers

On-the-fly code generation and optimization

high performance

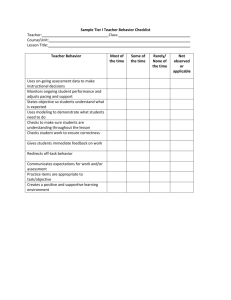

| Approach | Pros | Cons |
| --- | --- | --- |
| Type-Based | One-time effort; efficient | Weak type system; arbitrary typecasting |
| Static Analysis | One-time effort | Undecidability of aliasing; false negatives |
| Runtime Checks | Coverage; few false positives | Inefficient |

Tools shown in the figure: CQUAL, Cyclone, BOON, CCured, BoundsChecker, StackGuard

Security properties

What kinds of properties do we want to ensure

programs or computing systems satisfy?

Safety properties

“Nothing bad ever happens”

A property that can be enforced using only

history of program

Amenable to purely run-time enforcement

Examples:

access control (e.g., checking file permissions on

file open)

memory safety (process does not read/write outside

its own memory space)

type safety (data accessed in accordance with type)

Information security: confidentiality

Confidentiality: valuable information should not be

leaked by computation

Also known as secrecy, though sometimes a distinction is made:

Secrecy: information itself is not leaked

Confidentiality: nothing can be learned about information

Simple (access control) version:

Only authorized processes can read from a file

But… when should a process be “authorized”?

End-to-end version:

Information should not be improperly released by a computation no matter how it is used

Requires tracking information flow in system

Encryption provides end-to-end secrecy, but prevents computation

Information security: integrity

Integrity: valuable information should not be

damaged by computation

Simple (access control) version:

Only authorized processes can write to a file

But… when should a process be “authorized”?

End-to-end version:

Information should not be updated on the basis of

less trustworthy information

Requires tracking information flow in system

Privacy and Anonymity

Privacy: somewhat vague term

encompassing confidentiality, secrecy,

anonymity

Sometimes means: individuals (principals) and

their actions cannot be linked by an observer

Anonymity: identity of participating principals

cannot be determined even if actions are

known

stronger than privacy

Availability

System is responsive to requests

DoS attacks: attempts to destroy availability

(perhaps by cutting off network access)

Fault tolerance: system can recover from

faults (failures), remain available, reliable

Benign faults: not directed by an adversary

Usual province of fault tolerance work

Malicious or Byzantine faults: adversary can

choose time and nature of fault

Byzantine faults are attempted security violations

usually limited by not knowing some secret keys

Safety analysis and

transformation

Reference Monitor

Observes the execution of a program and halts

the program if it’s going to violate the security

policy

Common Examples:

memory protection

access control checks

routers

firewalls

Most current enforcement mechanisms are

reference monitors

What policies?

Reference monitors can only see the past

They can enforce all safety properties but not

liveness properties

Assumptions:

monitor can have access to entire state of

computation

monitor can have arbitrarily large state

but monitor can’t guess the future – the predicate

it uses to determine whether to halt a program

must be computable
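A reference monitor of this kind can be sketched as a small state machine that is consulted before each security-relevant event. The policy used here, "no send after reading a secret", is a standard textbook illustration and an assumption of this sketch, as are all type and function names:

```c
#include <stdbool.h>

/* Events the monitor observes; the names are hypothetical. */
typedef enum { EV_READ_SECRET, EV_SEND, EV_OTHER } event_t;

/* Monitor state: a summary of the execution history seen so far. */
typedef struct { bool has_read_secret; } monitor_t;

void monitor_init(monitor_t *m) { m->has_read_secret = false; }

/* Called before each event executes. Returns true if the event may
 * proceed and false if the program must be halted. The decision uses only
 * the past (the state) and the current event, never the future. */
bool monitor_allow(monitor_t *m, event_t ev)
{
    switch (ev) {
    case EV_READ_SECRET:
        m->has_read_secret = true;   /* remember the read */
        return true;
    case EV_SEND:
        return !m->has_read_secret;  /* sending is fine only before any read */
    default:
        return true;
    }
}
```

The predicate is trivially computable and the state is a single bit, which is why this policy is a safety property that a monitor can enforce.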

Interpreter and sandboxing

Interpreter:

easy to implement (small TCB)

works with binaries (high-level language-independent)

terrible execution overhead (25x? 70x?)

Sandboxing:

Software Fault Isolation (SFI) code rewriting is

“sandboxing”

Requires that code and data for a security domain be in one contiguous segment

Java sandbox model

Restricts the functionality of the code
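The SFI rewriting step can be illustrated by the address masking it inserts before each store or indirect jump. This is a minimal sketch; the segment base, the segment size, and the function name are assumptions chosen for illustration:

```c
#include <stdint.h>

/* Assumed layout (not from the lecture): the sandbox is one contiguous,
 * size-aligned segment of 2^16 bytes starting at SEG_BASE. */
#define SEG_BITS  16u
#define SEG_BASE  UINT32_C(0x20000000)
#define OFF_MASK  ((UINT32_C(1) << SEG_BITS) - 1u)

/* Force an arbitrary address into the segment: keep the low offset bits
 * and overwrite the upper bits with the segment identifier. */
static inline uint32_t sfi_sandbox(uint32_t addr)
{
    return SEG_BASE | (addr & OFF_MASK);
}
```

An address already inside the segment is unchanged, while any other address is redirected to some location inside it. The rewritten code may then misbehave, but only within its own domain, which is why the contiguous-segment requirement matters.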

Type Safety and Security

Type-safe languages

Software-engineering benefits of type safety:

memory safety

no buffer overruns (array subscript a[i] only

defined when i is in range for the array a)

no worries about self-modifying code, wild

jumps, etc.

Type safety can be used to construct a

protected interface (e.g., system call

interface) that applies access rules to

requests

Java

Java is a type-safe language in which type

safety is security-critical

Memory safety: programs cannot fabricate

pointers to memory

Type safety: private fields, methods of objects

cannot be accessed without using object

operations

Bytecode verifier ensures compiled bytecode

is type-safe

Security operations

Each method has an associated protection domain

e.g., applet or local

doPrivileged(P){S}:

fails if method's domain does not have priv. P

switches from the caller's domain to the method's while executing statement S (think setuid)

checkPermission(P) walks up stack S doing:

for (f := pop(S); !empty(S) ; f := pop(S)) {

if domain(f) does not have priv. P then

error;

if f is a doPrivileged frame then break;

}

Ensures integrity of control flow leading to a

security-critical operation
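The stack walk above can be sketched in C with an explicit array of frames. This is only a model of the idea, not the JVM's implementation; the frame layout and all names are assumptions:

```c
#include <stdbool.h>
#include <stddef.h>

typedef unsigned int privs_t;        /* bit set of privileges */

typedef struct {
    privs_t domain_privs;            /* privileges of the frame's protection domain */
    bool    is_do_privileged;        /* frame was entered via doPrivileged */
} frame_t;

/* Walk from the most recent frame (index depth - 1) toward the oldest
 * (index 0). Fail if a visited frame's domain lacks privilege p; stop
 * early at a doPrivileged frame, so older callers are not consulted. */
bool check_permission(const frame_t *stack, size_t depth, privs_t p)
{
    for (size_t i = depth; i-- > 0; ) {
        if ((stack[i].domain_privs & p) != p)
            return false;
        if (stack[i].is_do_privileged)
            break;
    }
    return true;
}
```

An unprivileged caller at the bottom of the stack makes the check fail unless a privileged frame above it has explicitly asserted the privilege with doPrivileged.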

Some pros and cons?

Pros:

rich, dynamic notion of context that tracks

some of the history of the computation

this could stop Melissa, Love Bug, etc.

low overhead

Cons:

implementation-driven (walking up stacks)

Could be checked statically [Wallach]

policy is smeared over program

possible to code around the limited history

e.g., by having applets return objects that are

invoked after the applet's frames are popped

Require type safety?

Write all security-critical programs in type-safe high-level language? (e.g., Java)

Problem 1: legacy code written in C, C++

Solution: type-safe, backwards compatible C

Problem 2: sometimes need control over memory management

Solution: type-safe memory management

Can we have compatibility, type safety and low-level

control? Can get 2 out of 3:

CCured [Necula et al. 2002]

Emphasis on compatibility, memory safety

Cyclone [Jim et al. 2002]

Emphasis on low-level control, type safety

CCured [Necula, 2002]

Another type-safe C dialect

Different pointer classes

DYNAMIC : no info, slow, all accesses checked

SAFE: a memory- and type-safe pointer (or null)

SEQ: pointer to an array of data (like Cyclone fat)

Type-safe world: SAFE, SEQ

Memory-safe world: DYNAMIC

Nonmodular but fast C-to-CCured converter

based on BANE constraint solving framework
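The idea behind a SEQ (or Cyclone "fat") pointer is that the pointer carries the bounds of the array it points into, so each access can be checked. A minimal hand-written sketch follows; CCured generates its own representation, and the struct layout and names here are assumptions:

```c
#include <stdbool.h>
#include <stddef.h>

/* A "fat" pointer to int that remembers the array it belongs to. */
typedef struct {
    int *cur;     /* current position */
    int *base;    /* first element of the array */
    int *end;     /* one past the last element */
} seq_int_ptr;

seq_int_ptr seq_make(int *array, size_t n)
{
    seq_int_ptr p = { array, array, array + n };
    return p;
}

/* Bounds-checked read of p[i]; returns false instead of touching memory
 * outside [base, end). */
bool seq_read(seq_int_ptr p, ptrdiff_t i, int *out)
{
    ptrdiff_t lo = p.base - p.cur;   /* smallest valid index */
    ptrdiff_t hi = p.end - p.cur;    /* one past the largest valid index */
    if (i < lo || i >= hi)
        return false;
    *out = p.cur[i];
    return true;
}
```

A SAFE pointer needs no such metadata because it is never used in arithmetic, which is part of why inferring the pointer class matters for performance.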

Certifying compilation

Code certification mechanisms

Problem: can you trust the code you run?

Code signing using digital signatures

Too many signers

If you can’t trust Microsoft,…

Idea: self-certifying code

Code consumer can check the code itself to ensure

it’s safe

Code includes annotations to make this feasible

Checking annotations easier than producing them

Certifying compiler generates self-certifying code

Java/JVM: first real demonstration of idea

PCC:

trusted computing base

verifier

optimizer

System

Binary

machine code

prover

Security

Policy

could be

you

“certified binary”

code

invariants proof

Making “Proof ” Rigorous:

Specify machine-code semantics and security

policy using axiomatic semantics

{Pre} ld r2,r1(i) {Post}

Given:

security policy (i.e., axiomatic semantics and

associated logic for assertions)

untrusted code, annotated with (loop) invariants

it’s possible to calculate a verification condition:

an assertion A such that

if A is true then the code respects the policy
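As a worked illustration of such a verification condition, consider the load instruction above under a memory-safety policy. The predicate readable and the memory map mem are notation assumed here, not taken from the lecture. Using the standard weakest-precondition rule for assignment, the triple holds whenever the following assertion A is valid:

```latex
\[
  A \;\equiv\; \mathit{Pre} \;\Rightarrow\;
  \Bigl( \mathit{readable}(r_1 + i) \;\land\;
         \mathit{Post}\bigl[\mathit{mem}(r_1 + i) / r_2\bigr] \Bigr)
\]
```

Here Post[e / r_2] denotes Post with every occurrence of r_2 replaced by e. The first conjunct is the policy (the load touches only readable memory); the second says the postcondition holds once r_2 receives the loaded value.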

Producer side

The code producer takes its code & the policy:

constructs some loop invariants

constructs the verification condition A from the

code, policy, and loop invariants

constructs a proof that A is true

code

invariants

“certified binary”

proof

Proof-carrying code

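[Figure: the code producer ships target code together with a safety proof. On the code consumer side, a verification condition generator derives safety conditions from the target code and the safety policy, and a proof checker checks the safety proof against those conditions.]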

Code consumer side

Verifier (~5 pages of C code):

takes code, loop invariants, and policy

calculates the verification condition A

checks that the proof is a valid proof of A:

fails if some step doesn’t follow from an axiom or

inference rule

fails if the proof is valid, but not a proof of A

code

invariants

“certified binary”

proof

Advantages of PCC

A generic architecture for providing and

checking safety properties

In Principle:

Simple, small, and fast TCB

No external authentication or cryptography

No additional run-time checks

“Tamper-proof”

Precise and expressive specification of code safety

policies

In Practice:

Still hard to generate proofs for properties stronger

than type safety. Need certifying compiler…

Summary

Extensible, networked systems need language-based security mechanisms

Analyze and transform programs before running

Use safe programming languages to defend

against attacks and for fine-grained access

control

Java, C#, CCured, Cyclone

Certifying compilation to simplify TCB

Software fault isolation, inlined reference monitors

JVM, TAL, PCC

Static analysis of end-to-end system security

Noninterference, static information flow, …

Other work and future challenges

Lightweight, effective static analyses for common

attacks (buffer overflows, format strings, etc.)

Security types for secrecy in network protocols

Self-certifying low-level code for object-oriented

languages

Applying interesting policies to PCC/IRM

Secure information flow in concurrent systems

Enforcing availability policies

See website for bibliography, more tutorial

information:

www.cs.cornell.edu/info/People/jgm/pldi03

Runtime Environment Driven

Program Safety – a case study

Making the case

Static analysis is one-time but poor coverage

Runtime checks have good coverage but per

variable checks are inefficient

Type-based safety is efficient but can be

coarse-grained

Motivation

A new vulnerability class:

Integer Overflow Vulnerability

Recently seen in openssh, pine, Sun RPC

and several other software packages

Cause: attacker-controlled integer variable

Integer Overflow Attack

alloc_mem(u_short size)

{

u_short pad_size = 16;

size = size + pad_size;

return malloc(size);

}

size = 65535 on entry

size = 15 after adding pad_size (16-bit wraparound) !!

returns a smaller block of memory than requested
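The wraparound can be reproduced and guarded against as follows. This sketch assumes a 16-bit u_short, modeled here with uint16_t; the function names are hypothetical:

```c
#include <stdint.h>
#include <stdlib.h>

/* Same arithmetic as alloc_mem above, with uint16_t standing in for a
 * 16-bit u_short: the sum wraps modulo 65536. */
uint16_t padded_size_unchecked(uint16_t size)
{
    uint16_t pad_size = 16;
    return (uint16_t)(size + pad_size);
}

/* Checked variant: refuse the request instead of wrapping. */
void *alloc_mem_checked(uint16_t size)
{
    const uint16_t pad_size = 16;
    if (size > UINT16_MAX - pad_size)
        return NULL;                       /* size + pad_size would overflow */
    return malloc((size_t)size + pad_size);
}
```

For size = 65535 the unchecked sum is 65551 mod 65536 = 15, so the caller would receive a 15-byte block while believing it holds more than 65535 bytes.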

Program Security Is NOT Portable!

[Figure: the same source or binary code can be safe in a 32-bit environment but unsafe in a 16-bit one.]

Buffer Overflows And Runtime Environment

Intel

Immediate caller’s

return address on stack

For successful attack,

callee has to return

Variable width

instruction set

PowerPC

Immediate caller’s

return address in link

register

For successful attack,

both callee and caller

have to return

Fixed width instruction

set

NOTE: StackGuard has only been implemented for the x86 architecture.

Porting to other architectures should be easy, but we haven't done it yet

Various Runtime Environments

Observations

Vulnerabilities are specific to a runtime environment

CERT incident reports contain information such as

architecture, software distribution, version, etc.

Programming language level analysis is not adequate

Machine word size determines behavior of numerical

types

Operating system and compiler determine memory

layout

Approach Outline

Runtime Environment Driven Program Safety

Infer safety properties in context of runtime

environment

Enforce these properties

Java-like; except no JVM

Overall Goal

RE 1

Safe

Program

Security

Source or

Binary code

RE 2

Safe

Safe

RE 3

Basic Methodology

A Type-Based Safety Approach

Runtime-dependent interpretation

Not merely an abstraction, but using actual values

No new types

Also, can be efficient

Prototype Implementation: ARCHERR

Implemented as a parser using flex and bison

Currently works on 32-bit Intel/Linux platform

Detecting Integer Overflows

Machine word size is an important factor

Intel XScale Processor

16-bit

(now 32-bit version)

32-bit

Intel Pentium Processor

Main idea: Analyze assignment and

arithmetic operations in context of machine

word size

Integers : Classical View

Assignment:

x : int → x ∈ I

x, y : int

x=y

Arithmetic:

succ(x : int) = (x + 1)

pred(x : int) = (x – 1)

where I = (-∞, +∞)

Integers: Runtime Dependent View

Integer Arithmetic Safety Checks

if x ≥ 0, y ≥ 0, then

x + y assert: x ≤ (MAXINT − y)

if x ≥ 0, y < 0, then

x − y assert: x ≤ (MAXINT + y)

if x < 0, y ≥ 0, then

x − y assert: x ≥ (MININT + y)

if x < 0, y < 0, then

x + y assert: x ≥ (MININT − y)

∀ x, y:

x × y assert: x ≥ MININT/y ∧ x ≤ MAXINT/y

x ÷ y assert: y ≠ 0

x % y assert: y ≠ 0
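These checks translate directly into C when MAXINT and MININT are taken from the platform's limits.h, which is what makes them runtime-environment dependent. The following is a sketch and not ARCHERR's actual code; the function names are hypothetical, the multiplication test is split by sign so that the divisions themselves cannot overflow, and the division check also rejects MININT / -1:

```c
#include <limits.h>
#include <stdbool.h>

/* Each function stores the result and returns true only when the operation
 * cannot overflow for the int type of the current platform. */
bool checked_add(int x, int y, int *out)
{
    if (y >= 0 ? x > INT_MAX - y : x < INT_MIN - y)
        return false;
    *out = x + y;
    return true;
}

bool checked_sub(int x, int y, int *out)
{
    if (y >= 0 ? x < INT_MIN + y : x > INT_MAX + y)
        return false;
    *out = x - y;
    return true;
}

bool checked_mul(int x, int y, int *out)
{
    if (x > 0 && y > 0 && x > INT_MAX / y) return false;
    if (x > 0 && y < 0 && y < INT_MIN / x) return false;
    if (x < 0 && y > 0 && x < INT_MIN / y) return false;
    if (x < 0 && y < 0 && x < INT_MAX / y) return false;
    *out = x * y;
    return true;
}

bool checked_div(int x, int y, int *out)
{
    if (y == 0 || (x == INT_MIN && y == -1))
        return false;
    *out = x / y;
    return true;
}
```

Because INT_MAX and INT_MIN are resolved per platform, the same source performs a 16-bit check where int is 16 bits wide and a 32-bit check where it is 32 bits wide.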

Other Numerical Types

short, long, unsigned short/long, etc.

Similar analysis

float, double, long double

Floating points use a standard IEEE format

Analysis is more complex

But floating point arithmetic is discouraged for

efficiency reasons

Other Operators

Bitwise operators

<< : multiplication by 2

>> : division by 2 (is safe)

Logical operators?

Not exactly arithmetic in nature

In A Program?

foo(int x, int y)

{

VALIDATE_ADD_INT(x,y);

16-bit check?

32-bit check?

return (x + y);

}

Compile-time

Annotations

Runtime

Checking

A High-Level View

What have we achieved

actually?

Properties of

types in classical

sense

A programmer’s

view

Automatic

safety

conversion

An attacker’s view

RE 1

RE 2

Extending Idea To Pointers

Common concept of segments – data, text, stack

But differences in actual layout

Process Address Map

4 GB

System space

(0xFFFFFFFF)

3 GB

(0xBFFFFFFF)

User space

0 GB

Linux

Windows NT

Similarities/Differences Between

Integers and Pointers

Pointers are represented as “unsigned int’s”

A machine word to represent the entire virtual address space

Both pointers and integers have a type

However, arithmetic is different

integers increment by 1

pointers increment by size of type they point to

Valid values that they can assume?

For integers, just one interval

For pointers, several disjoint intervals

Bookkeeping Examples

char buffer[256];

char *p;

int main() {

ADD_GLOBAL(buffer,

sizeof(buffer));

ADD_GLOBAL(&p, sizeof(p));

…

}

int foo() {

char buffer[256];

ADD_STACK_FRAME();

…

DEL_STACK_FRAME();

return 0;

}

Reading stack frames currently

implemented through ESP and

EBP register values.

Pointers : Runtime Dependent View

Safe pointer assignment

Let a pointer variable p, which points to variables of type τ, be denoted by p : q(τ)

Safe pointer arithmetic (the following must

obey the above rule)

Pointer Assignment Scenarios

Pointer Check Examples

VALIDATE_PTR(q);

p = q;

q is a valid ptr?

[q, sizeof(*q)] is inside same

range?

VALIDATE_PTR(&p[i]);

p[i] = 2;

&p[i] is a valid ptr?

[&p[i], sizeof (*(&p[i]))] is inside

same range?

VALIDATE_PTR_ADD(p, 1);

p++;

p is a valid ptr?

[p, sizeof(*p)] is inside same

range?

p + 1 is a valid ptr and belongs to

the same address range?
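The questions above can be answered with a table of valid address ranges that the bookkeeping calls fill in. The sketch below is an assumption about how such checks could look, not ARCHERR's implementation; add_range plays the role of ADD_GLOBAL, and all names and the fixed table size are hypothetical:

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define MAX_RANGES 64

typedef struct { uintptr_t lo, hi; } range_t;   /* valid bytes: [lo, hi) */

static range_t ranges[MAX_RANGES];
static size_t  n_ranges;

/* Record an object of size bytes starting at addr (cf. ADD_GLOBAL). */
bool add_range(const void *addr, size_t size)
{
    if (n_ranges == MAX_RANGES)
        return false;
    ranges[n_ranges].lo = (uintptr_t)addr;
    ranges[n_ranges].hi = (uintptr_t)addr + size;
    n_ranges++;
    return true;
}

/* Return the recorded range that fully contains [p, p + size), or NULL. */
static const range_t *find_range(const void *p, size_t size)
{
    uintptr_t a = (uintptr_t)p;
    for (size_t i = 0; i < n_ranges; i++)
        if (a >= ranges[i].lo && a <= ranges[i].hi && size <= ranges[i].hi - a)
            return &ranges[i];
    return NULL;
}

/* Is [p, p + size) inside one recorded object? */
bool validate_ptr(const void *p, size_t size)
{
    return find_range(p, size) != NULL;
}

/* Do p and p + delta_bytes both designate size bytes in the same object? */
bool validate_ptr_add(const void *p, ptrdiff_t delta_bytes, size_t size)
{
    const range_t *r = find_range(p, size);
    if (r == NULL)
        return false;
    uintptr_t q = (uintptr_t)p + (uintptr_t)delta_bytes;
    return q >= r->lo && q <= r->hi && size <= r->hi - q;
}
```

A linear scan keeps the sketch short; the lookup cost is exactly what the optimization of memory range lookups has to address.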

Additional Pointer Issues

Function pointers

If not protected, can lead to arbitrary code

execution

Maintain a separate list of function addresses and

check against them

Typecasting is a feature in C

Private fields in structures through void *

Leads to dynamic types
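The function pointer check can be sketched as a lookup in a list of registered call targets. All names are hypothetical, and the two handlers exist only to make the example self-contained:

```c
#include <stdbool.h>
#include <stddef.h>

typedef void (*fn_t)(void);

#define MAX_FUNCS 32
static fn_t   known_funcs[MAX_FUNCS];
static size_t n_known;

/* Example targets: one will be registered, the other will not. */
static int  hits;
static void example_handler(void)      { hits++; }
static void unregistered_handler(void) { hits += 100; }

/* Register a legitimate call target. */
bool register_func(fn_t f)
{
    if (f == NULL || n_known == MAX_FUNCS)
        return false;
    known_funcs[n_known++] = f;
    return true;
}

/* Call f only if it is a registered target. Returns false, without
 * calling anything, when f is unknown. */
bool call_checked(fn_t f)
{
    for (size_t i = 0; i < n_known; i++) {
        if (known_funcs[i] == f) {
            f();
            return true;
        }
    }
    return false;
}
```

A corrupted pointer that does not match any registered address is rejected before control is transferred.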

Optimizations

Remove unnecessary checks using static analysis

Currently, integer arithmetic

Speed up memory range lookups

Maintain separate FIFO lists for stack, data and heap

Pointer assignment is "safe"; dereferencing

is not

Optimize initialization loops

Security Testing

Does this approach actually work?

Real-world programs

Vulnerabilities and exploits available at SecurityFocus website

| Program | Vulnerability | Detected? |
| --- | --- | --- |
| sendmail (8.11.6) | Stack-based buffer overflow | YES |
| GNU indent (2.2.9) | Heap-based buffer overflow | YES |
| man (1.5.1) | Format string | NO |
| pine (4.56) | Integer overflow | YES |

Performance Testing

Scimark2 benchmark

32-bit Intel/Linux 2.6.5

Compared against CCured and BoundsChecker

| Tool | Performance hit (slowdown) |
| --- | --- |
| CCured | 1.5x |
| BoundsChecker | 35x |
| ARCHERR w/o pointer checks | 2.3x |
| ARCHERR with pointer checks | 2.5x |

Impact On Code Size

Source code annotations cause bloat

| Measure | Bloat |
| --- | --- |
| Source code | 1.5x to 2.5x |
| Runtime image | 1.2x to 1.4x |

Limitations

Source code annotations

Therefore, no guarantees if code is not annotated

Write wrappers for well-documented binary

libraries

32

strncpy(dst, src, 32);

May be considered inefficient for high performance applications

Security has a price but it is not too high
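A wrapper for a binary library routine such as strncpy could look like the sketch below. It assumes the capacity of dst is available to the wrapper, for example from the tool's own bookkeeping; the wrapper name and its extra parameter are hypothetical:

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical wrapper: dst_size is the capacity of dst in bytes.
 * Refuses any copy that could write past the end of dst. */
char *checked_strncpy(char *dst, size_t dst_size, const char *src, size_t n)
{
    if (dst == NULL || src == NULL || n > dst_size)
        return NULL;
    return strncpy(dst, src, n);
}
```

With a 16-byte destination, the call strncpy(dst, src, 32) from the slide would be rejected, since strncpy always writes n bytes when n exceeds the length of src.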

Features

Portable safety is runtime environment

dependent

First work to show a systematic way to detect/prevent integer overflow attacks

Currently on one architecture

Extended the idea to detect/prevent memory-based attacks

Again on one architecture

Security testing and performance evaluation

| Approach | Pros | Cons |
| --- | --- | --- |
| Type-Based | One-time effort; efficient | Weak type system; arbitrary typecasting |
| Static Analysis | One-time effort | Undecidability of aliasing; false negatives |
| Runtime Checks | Coverage; few false positives | Inefficient |

Tools shown in the figure: CQUAL, Cyclone, BOON, CCured, ARCHERR, BoundsChecker, StackGuard

Current Status And Future Work

Code to be released soon

Currently research grade

Investigating implementation on other runtime environments

32-bit Intel/Windows PE32

32-bit Intel/FreeBSD ELF

32-bit SPARC/ELF

Improve efficiency?

rndARCHERR – randomized runtime checks

Static analysis driven optimizations

Reference

ARCHERR: Runtime Environment Driven

Program Safety

Ramkumar Chinchani, Anusha Iyer,

Bharat Jayaraman, and Shambhu Upadhyaya

ESORICS 2004

http://www.cse.buffalo.edu/~rc27/publications/chinchani-ESORICS04-final.pdf