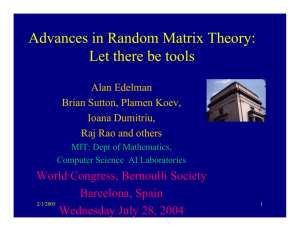

Advances in Random Matrix Theory: Let there be tools Barcelona, Spain


Advances in Random Matrix Theory:

Let there be tools

Alan Edelman

Brian Sutton, Plamen Koev, Ioana Dumitriu, Raj Rao and others

MIT: Dept of Mathematics, Computer Science AI Laboratories

World Congress, Bernoulli Society

Barcelona, Spain

5/28/2016

Wednesday July 28, 2004


Tools

So many applications …

Random matrix theory: catalyst for 21st century special functions, analytical techniques, statistical techniques

In addition to mathematics and papers

Need tools for the novice!

Need tools for the engineers!

Need tools for the specialists!

Themes of this talk

Tools for general beta

What is beta? Think of it as a measure of (inverse) volatility in “classical” random matrices.

Tools for complicated derived random matrices

Tools for numerical computation and simulation

Wigner’s Semi-Circle

The classical & most famous rand eig theorem

Let S = random symmetric Gaussian

MATLAB: A=randn(n); S=(A+A')/2;

S known as the Gaussian Orthogonal Ensemble

Normalized eigenvalue histogram is a semi-circle

Precise statements require n → ∞, etc.

n=20; s=30000; d=.05; %matrix size, samples, sample dist

e=[]; %gather up eigenvalues

im=1; %imaginary(1) or real(0)

for i=1:s,

a=randn(n)+im*sqrt(-1)*randn(n);a=(a+a')/(2*sqrt(2*n*(im+1)));

v=eig(a)'; e=[e v];

end

hold off; [m x]=hist(e,-1.5:d:1.5); bar(x,m*pi/(2*d*n*s));

axis('square'); axis([-1.5 1.5 -1 2]); hold on;

t=-1:.01:1; plot(t,sqrt(1-t.^2),'r');
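The factor pi/(2*d*n*s) in the `bar` call may look arbitrary. The short derivation below is one reading of that normalization (it is not stated on the slide): the bin counts m are first turned into a density estimate, then multiplied by π/2 so that the semicircle density of radius 1 plots as the unit semicircle drawn in red.

```latex
\[
\text{density estimate in a bin of width } d:\quad \hat\rho(t) = \frac{m}{d\,n\,s},
\qquad
\rho_{\mathrm{sc}}(t) = \frac{2}{\pi}\sqrt{1-t^{2}},\quad |t|\le 1,
\]
\[
\frac{\pi}{2}\,\hat\rho(t) \;\approx\; \frac{\pi}{2}\,\rho_{\mathrm{sc}}(t) \;=\; \sqrt{1-t^{2}} .
\]
```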

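sym matrix to tridiagonal form

$$
\begin{pmatrix}
G & \chi_6 \\
\chi_6 & G & \chi_5 \\
& \chi_5 & G & \chi_4 \\
& & \chi_4 & G & \chi_3 \\
& & & \chi_3 & G & \chi_2 \\
& & & & \chi_2 & G & \chi_1 \\
& & & & & \chi_1 & G
\end{pmatrix}
$$

Same eigenvalue distribution as GOE:

O(n) storage !! O(n^2) compute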
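As a rough illustration of the tridiagonal form, the sketch below samples the 7-by-7 model and compares the mean of trace(T^2) with the value for the dense S=(A+A')/2. The scaling is an assumption made here, since the slide shows only G and χ: diagonal entries N(0,1) and off-diagonal entries χ_k/sqrt(2). The helper `chi`, the seed and the trial count are illustrative choices, not part of the talk.

```matlab
% Sketch: sample the symmetric tridiagonal model and check its second moment.
rng(0);                          % fixed seed (illustrative choice)
n = 7;                           % matrix size, matching the 7-by-7 picture
trials = 20000;                  % number of samples (assumed)

% chi_k sample as the norm of k standard normals (integer k only)
chi = @(k) sqrt(sum(randn(k,1).^2));

t2 = zeros(trials,1);
for i = 1:trials
    g = randn(n,1);                              % diagonal: N(0,1) (assumed scaling)
    c = arrayfun(chi, (n-1:-1:1)') / sqrt(2);    % off-diagonal: chi_k/sqrt(2), k = n-1..1
    T = diag(g) + diag(c,1) + diag(c,-1);        % symmetric tridiagonal, O(n) random numbers
    t2(i) = trace(T^2);
end

expected = n + n*(n-1)/2;        % E trace(S^2) for the dense S=(A+A')/2
fprintf('mean trace(T^2) = %.3f, dense GOE value = %.1f\n', mean(t2), expected);
```

Only 2n-1 random numbers are drawn per sample instead of n^2, which is the storage saving the slide points to.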

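General beta

$$
\begin{pmatrix}
G & \chi_{6\beta} \\
\chi_{6\beta} & G & \chi_{5\beta} \\
& \chi_{5\beta} & G & \chi_{4\beta} \\
& & \chi_{4\beta} & G & \chi_{3\beta} \\
& & & \chi_{3\beta} & G & \chi_{2\beta} \\
& & & & \chi_{2\beta} & G & \chi_{\beta} \\
& & & & & \chi_{\beta} & G
\end{pmatrix}
$$

beta: 1: reals 2: complexes 4: quaternions

Bidiagonal Version corresponds To Wishart matrices of Statistics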

Tools

Motivation: A condition number problem

Jack & Hypergeometric of Matrix Argument

MOPS: Ioana Dumitriu’s talk

The Polynomial Method

The tridiagonal numerical 10^9 trick


Numerical Analysis: Condition Numbers

κ(A) = “condition number of A”

If A=UΣV’ is the svd, then κ(A) = σ_max/σ_min.

Alternatively, κ(A) = sqrt(λ_max(A’A)/λ_min(A’A))

One number that measures digits lost in finite precision and general matrix “badness”

Small=good

Large=bad

The condition of a random matrix???
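The two definitions of the condition number can be compared numerically. The sketch below is a minimal check on one Gaussian sample; the size, the seed and the variable names are illustrative assumptions, and `cond` is MATLAB's built-in 2-norm condition number.

```matlab
% Sketch: condition number from singular values, from eig(A'A), and from cond.
rng(1);                              % fixed seed (illustrative choice)
n = 50;                              % matrix size (assumed)
A = randn(n);

sigma = svd(A);                      % singular values, in descending order
kappa_svd = sigma(1)/sigma(end);     % sigma_max / sigma_min

B = A'*A; B = (B + B')/2;            % enforce exact symmetry before eig
lambda = eig(B);
kappa_eig = sqrt(max(lambda)/min(lambda));

kappa_builtin = cond(A);             % 2-norm condition number
fprintf('svd: %.6g   eig(A''A): %.6g   cond: %.6g\n', kappa_svd, kappa_eig, kappa_builtin);
```

The route through A'A squares the condition number internally, so it loses accuracy sooner than the svd route on badly conditioned matrices.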

Von Neumann & co. estimates (1947-1951)

“For a ‘random matrix’ of order n the expectation value has been shown to be about n” [X]

Goldstine, von Neumann

“… we choose two different values of κ, namely n and √10 n”

Bargmann, Montgomery, vN

P(κ < n) ≈ 0.02

P(κ < √10 n) ≈ 0.44

“With a probability ~1 … κ < 10n”

Goldstine, von Neumann

P(κ < 10n) ≈ 0.80
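The three probabilities can be checked by hand against the limiting distribution of κ/n for real Gaussian matrices, whose density is given later in the talk. The worked arithmetic below assumes the n → ∞ limit and is added here as a check, not taken from the slide; F denotes the limiting distribution function.

```latex
\[
F(x) = \lim_{n\to\infty} P(\kappa/n < x) = e^{-2/x - 2/x^{2}},
\qquad
F'(x) = \frac{2x+4}{x^{3}}\, e^{-2/x - 2/x^{2}},
\]
\[
F(1) = e^{-4} \approx 0.018,
\qquad
F(\sqrt{10}) = e^{-2/\sqrt{10} - 0.2} \approx 0.44,
\qquad
F(10) = e^{-0.22} \approx 0.80 .
\]
```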

Random cond numbers, n → ∞

Distribution of κ/n

$$
y = \frac{2x+4}{x^{3}}\, e^{-2/x - 2/x^{2}}
$$

Experiment with n=200
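An experiment of this kind can be sketched in a few lines. The version below is an illustration, not the code behind the slide's plot: it samples κ/n for real Gaussian matrices with n=200 and compares the empirical distribution function at three points with exp(-2/x - 2/x^2), the integral of the density above. The trial count, seed and variable names are assumptions.

```matlab
% Sketch: Monte Carlo check of the limiting distribution of kappa/n.
rng(2);                          % fixed seed (illustrative choice)
n = 200;                         % matrix size, as on the slide
trials = 400;                    % number of samples (assumed)

r = zeros(trials,1);
for i = 1:trials
    r(i) = cond(randn(n))/n;     % kappa/n for one real Gaussian matrix
end

x = [1 sqrt(10) 10];                         % evaluation points
F_limit = exp(-2./x - 2./x.^2);              % integral of (2x+4)/x^3 * exp(-2/x-2/x^2)
F_emp = arrayfun(@(t) mean(r < t), x);       % empirical P(kappa/n < x)
disp([x; F_limit; F_emp]);
```

A histogram of r against the density curve gives the picture; the table printed here is just the quickest numerical comparison.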

Finite n

[Figure: distribution plots for n=10, n=25, n=50, n=100]

Condition Number Distributions

Real n x n, n→∞: P(κ/n > x) ≈ 2/x

Complex n x n, n→∞: P(κ/n > x) ≈ 4/x^2

Generalizations:

•β: 1=real, 2=complex

•finite matrices

•rectangular: m x n

Condition Number Distributions

Real n x n, n→∞: P(κ/n > x) ≈ 2/x

Complex n x n, n→∞: P(κ/n > x) ≈ 4/x^2

Square, n→∞: P(κ/n > x) ≈ (2β^(β-1)/Γ(β))/x^β (All Betas!!)

General Formula: P(κ > x) ~ Cµ/x^(β(n-m+1)), where µ = the β(n-m+1)/2-th moment of the largest eigenvalue of W_{m-1,n+1}(β) and C is a known geometrical constant.

Density for the largest eig of W is known in terms of 1F1((β/2)(n+1), (β/2)(n+m-1); -(x/2)I_{m-1}), from which µ is available

Tracy-Widom law applies probably all beta for large m,n. Johnstone shows at least beta=1,2.
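As a consistency check on the "All Betas" line, the constant can be evaluated at the classical values of β. The computation below assumes the constant reads 2β^(β-1)/Γ(β), the reading under which the real and complex tails quoted on the slide are recovered; the symbol c_β is introduced here for convenience, and the β=4 value is simply what that reading implies.

```latex
\[
c_\beta = \frac{2\,\beta^{\beta-1}}{\Gamma(\beta)}:
\qquad
c_1 = \frac{2\cdot 1^{0}}{\Gamma(1)} = 2,
\qquad
c_2 = \frac{2\cdot 2^{1}}{\Gamma(2)} = 4,
\qquad
c_4 = \frac{2\cdot 4^{3}}{\Gamma(4)} = \frac{128}{6} = \frac{64}{3}.
\]
```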

Tools

Motivation: A condition number problem

Jack & Hypergeometric of Matrix Argument

MOPS: Ioana Dumitriu’s talk

The Polynomial Method

The tridiagonal numerical 10^9 trick

Multivariate Orthogonal Polynomials & Hypergeometrics of Matrix Argument

Ioana Dumitriu’s talk

The important special functions of the 21st century

Begin with w(x) on I

$$
\int p_\kappa(x)\, p_\lambda(x)\, \Delta(x)^{\beta} \prod_i w(x_i)\, dx_i = \delta_{\kappa\lambda}
$$

Jack Polynomials orthogonal for w=1 on the unit circle. Analogs of x^m

Multivariate Hypergeometric Functions


Wishart (Laguerre) Matrices!


Plamen’s clever idea


Tools

Motivation: A condition number problem

Jack & Hypergeometric of Matrix Argument

MOPS: Ioana Dumitriu’s talk

The Polynomial Method

The tridiagonal numerical 10^9 trick

MOPS (Dumitriu etc.) Symbolic

Symbolic MOPS applications

A=randn(n); S=(A+A')/2; trace(S^4)

det(S^3)
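MOPS produces such expected values symbolically. As a numerical stand-in (not MOPS itself), the sketch below estimates E trace(S^4) by Monte Carlo for n=2, where a hand computation is easy: with S=[a b; b c], a,c ~ N(0,1) and b ~ N(0,1/2), trace(S^4) = (a^2+b^2)^2 + 2b^2(a+c)^2 + (b^2+c^2)^2, whose expectation is 2(3 + 1 + 3/4) + 2 = 11.5. The seed, trial count and variable names are illustrative assumptions.

```matlab
% Sketch: Monte Carlo estimate of E trace(S^4) for the 2-by-2 case.
rng(3);                          % fixed seed (illustrative choice)
n = 2;                           % small case with a hand-computed answer
trials = 50000;                  % number of samples (assumed)

t4 = zeros(trials,1);
for i = 1:trials
    A = randn(n);
    S = (A + A')/2;              % same construction as on the slide
    t4(i) = trace(S^4);
end

exact = 11.5;                    % hand computation for n = 2 (see lead-in)
fprintf('Monte Carlo: %.3f   exact (n=2): %.1f\n', mean(t4), exact);
```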

Symbolic MOPS applications

β=3; hist(eig(S))


Smallest eigenvalue statistics

A=randn(m,n); hist(min(svd(A).^2))


Tools

Motivation: A condition number problem

Jack & Hypergeometric of Matrix Argument

MOPS: Ioana Dumitriu’s talk

The Polynomial Method -- Raj!

The tridiagonal numerical 10^9 trick

RM Tool – Raj!

Courtesy of the Polynomial Method


Tools

Motivation: A condition number problem

Jack & Hypergeometric of Matrix Argument

MOPS: Ioana Dumitriu’s talk

The Polynomial Method

The tridiagonal numerical 10^9 trick

Everyone’s Favorite Tridiagonal

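[Equation: the tridiagonal matrix with -2 on the diagonal and 1 on the off-diagonals, with a scaling in n^2, shown as the matrix counterpart of d^2/dx^2]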

Everyone’s Favorite Tridiagonal

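[Equation: the same tridiagonal matrix with a diagonal of Gaussians G added, with a scaling in (βn)^(1/2), shown as the matrix counterpart of d^2/dx^2 plus a dW term with a scaling in β^(1/2)]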

Stochastic Operator Limit

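$$
\frac{d^{2}}{dx^{2}} - x + \frac{2}{\sqrt{\beta}}\, dW
$$

$$
H_n^{\beta} \sim \frac{1}{\sqrt{2n\beta}}
\begin{pmatrix}
N(0,2) & \chi_{(n-1)\beta} \\
\chi_{(n-1)\beta} & N(0,2) & \chi_{(n-2)\beta} \\
& \ddots & \ddots & \ddots \\
& & \chi_{2\beta} & N(0,2) & \chi_{\beta} \\
& & & \chi_{\beta} & N(0,2)
\end{pmatrix}
$$

$$
H_n^{\beta} \approx H_n^{\infty} + \frac{2}{\sqrt{\beta}}\, G_n
$$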

Largest Eigenvalue Plots


MATLAB

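beta=1; n=1e9; opts.disp=0;opts.issym=1;

alpha=10; k=round(alpha*n^(1/3)); % cutoff parameters

d=sqrt(chi2rnd( beta*(n:-1:(n-k-1))))'; % chi samples for the off-diagonal (chi2rnd needs the Statistics Toolbox)

H=spdiags( d,1,k,k)+spdiags(randn(k,1),0,k,k); % sparse k-by-k top corner: superdiagonal plus Gaussian diagonal

H=(H+H')/sqrt(4*n*beta); % symmetrize and scale so the largest eigenvalue is near 1

eigs(H,1,1,opts) % one eigenvalue nearest the shift 1 (shift-and-invert)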

Tricks to get O(n9) speedup

• Sparse matrix storage (Only O(n) storage is used)

• Tridiagonal Ensemble Formulas (Any beta is available due to the tridiagonal ensemble)

• The Lanczos Algorithm for Eigenvalue Computation (This allows the computation of the extreme eigenvalue faster than typical general purpose eigensolvers.)

• The shift-and-invert accelerator to Lanczos and Arnoldi (Since we know the eigenvalues are near 1, we can accelerate the convergence of the largest eigenvalue)

• The ARPACK software package as made available seamlessly in MATLAB (The Arnoldi package contains state of the art data structures and numerical choices.)

• The observation that if k = 10n^(1/3), then the largest eigenvalue is determined numerically by the top k × k segment of the n × n matrix. (This is an interesting mathematical statement related to the decay of the Airy function.)
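The last observation can be tried out at a size where the full matrix still fits in memory. The sketch below is an illustration under stated assumptions, not the talk's code: it builds one sample of the tridiagonal model for beta=1 and n=1000, scaled so the largest eigenvalue is near 1, and compares the largest eigenvalue of the full matrix with that of its top k-by-k corner. The helper `chi`, the dense storage, the seed and the size are choices made here so that no toolbox is needed.

```matlab
% Sketch: largest eigenvalue of the full tridiagonal vs. its top k-by-k corner.
rng(4);                          % fixed seed (illustrative choice)
beta = 1;                        % integer beta so chi samples need no toolbox
n = 1000;                        % small enough to form the full matrix (assumed)
k = round(10*n^(1/3));           % cutoff from the last bullet

chi = @(dof) sqrt(sum(randn(dof,1).^2));     % chi sample for integer dof
d = arrayfun(chi, beta*(n-1:-1:1)');         % off-diagonal: chi_{(n-1)beta}, ..., chi_beta
g = sqrt(2)*randn(n,1);                      % diagonal: N(0,2)

% tridiagonal model, scaled so the largest eigenvalue is near 1 (assumed scaling)
H = (diag(g) + diag(d,1) + diag(d,-1)) / (2*sqrt(n*beta));

lam_full = max(eig(H));                      % all n rows and columns
lam_top  = max(eig(H(1:k,1:k)));             % top k-by-k corner only
fprintf('full: %.12f   top %d-by-%d: %.12f   diff: %.2e\n', ...
        lam_full, k, k, lam_top, lam_full - lam_top);
```

The corner value can never exceed the full one (eigenvalue interlacing), so the printed difference is a direct measure of what the truncation costs.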

Random matrix tools!
