Using Comparative Judgement to Assess Performance in the Critical Maths Curriculum


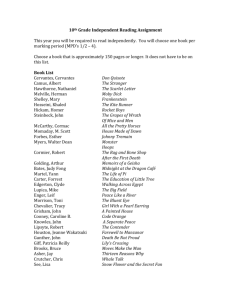

Chris Wheadon & Ian Jones

Abstract

The purpose of this study was to assess how well Comparative Judgement performs, relative to a traditional marking approach, on maths questions that require genuine problem solving. Ninety-two students completed one of two open-ended maths questions, and their responses were both marked using level of response mark schemes and judged using Comparative Judgement. The findings suggest that both traditional marking and Comparative Judgement can produce reliable and valid results for the cohort used to trial these questions. The most obvious explanation for this result is that most of the students struggled so much with the calculations they were required to make that they were unable to offer analysis or interpretation of the data. With little analysis offered, there was little subjectivity inherent in the assessment, so the students’ work could be assessed objectively using either traditional marking or Comparative Judgement.

Introduction

The DfE is funding MEI to investigate how Professor Sir Timothy Gowers's ideas might inform a curriculum that could become the basis of a new course for students who do not currently study mathematics post-16. The curriculum will be based on students engaging with realistic problems and developing the skills of analysing problems and thinking flexibly to solve them. As the curriculum is intended to use open-ended questions, it is important to understand whether traditional marking approaches are appropriate or whether alternative approaches are more suitable. This report considers the results of a small-scale trial of two questions developed for the curriculum, which were assessed both with traditional level of response mark schemes and with Comparative Judgement.
Method

Approach

The approach to the trial was to develop two questions and associated level of response mark schemes, which would then be trialled by students. Following the trial, the work would be both marked and judged using Comparative Judgement. The original intention was to randomly assign markers and judges from a single pool to avoid confounding experience and expertise with the mode of assessment, but this proved logistically difficult. Instead, the designers of the questions and mark schemes undertook the marking themselves, while the judging was undertaken by a wider panel of judges. The different expertise of the markers and judges therefore restricts the interpretation of the results.

Materials

The two questions that were developed for the trial are presented, along with their associated mark schemes, in Appendix 1. The Comparative Judgement was undertaken using www.nomoremarking.com, and judges were provided with the guidance presented in Appendix 2. Judges were given the mark schemes developed for the marking, along with detailed worked solutions for the School Trip question.

Participants

The students who answered the questions were mainly Year 12 students. Three groups of Year 12 students were taken from two 11-18 schools and one group from a sixth form college. A further group of 22 Year 11 students from an 11-18 school completed the School Trip assessment. All of the students had gained a grade C or better in Mathematics at GCSE. Fifty-three students completed the SPAM question, while 39 students completed the School Trip question.

The two markers were the designers of the assessments and mark schemes, and both markers marked all of the scripts. The judges were recruited on a voluntary basis, mainly from within MEI, and had varying degrees of mathematical expertise. The two markers also participated in the judging, but their judgements have been removed from the analysis that follows, except where indicated.

No More Marking Ltd. September 2014
Analysis

All the analysis of the Comparative Judgement data was undertaken using the R package BradleyTerry2 [1]. For ease of interpretation, the Comparative Judgement ability parameters were scaled to have the same mean and standard deviation as the average marks from both markers on each question. Inter-rater reliability of the judging was calculated by an iterative procedure: the judges were split into two random groups, the Bradley-Terry model was fitted separately to the decisions produced by each group, and the rank order correlation between the ability parameters derived from the two models was calculated. The mean correlation over 100 iterations is reported. Judge variability was calculated using the Infit formula provided by Pollitt (2012) [2].

Results

SPAM

Marking

The mean mark on the SPAM question (out of a maximum of 6) was 2.68 for marker1 and 2.83 for marker2, with standard deviations of 1.46 and 1.42 respectively. The inter-rater marking reliability was high, with a rank order correlation of 0.85 between the marks given by the two markers. The high agreement appears to stem from the tendency of both markers to award the central mark of 3 and their reluctance to award marks above 3 (see Figure 1). The restricted range of marks results in poor discrimination. The markers agreed exactly on the marks of 62 per cent of the scripts, which is far higher than would be expected for truly open-ended questions. Referring to the mark scheme and the scripts, any subjectivity in marking would only be apparent at marks higher than 4, where students attempt a recommendation based on their analysis, but only 15 per cent of scripts scored higher than 4 marks.

Judging

For the SPAM question there were 15 judges, each completing 90 judgements. Around twice as many judges were recruited as were strictly required, so that inter-rater reliability could be calculated.
One judge was removed because of the variability of their judging (Infit > 1.5), leaving 14 judges and a total of 1260 judgements. The inter-rater reliability of the judges was 0.94, higher than that between the two markers. The rank order correlation between the judges' scores and the average mark of the two markers was 0.89, and 0.87 with each marker separately. The high correlation of the judges with the markers suggests that the judging process was valid, with judges generally valuing the same qualities in the scripts as the markers.

Figure 2 shows that the marks and the judgements are closely correlated, but it also reveals a few anomalies. A particularly interesting example is presented in Appendix 3. This script observes that 37 per cent of the blocked email is incorrectly blocked, which is correct and shows a good understanding of the problem. The script was ranked 5th by the judges but 12th according to the average mark awarded by the markers, who both gave it a mark of 3 out of 6. To be awarded a mark higher than 3, the mark scheme requires an explicit recommendation. The judges, unconstrained by the mark scheme, could reward the implicit understanding more highly than the markers.

Overall, the judging performed well for the SPAM question despite the paucity of interpretation and analysis offered by the students. The high inter-rater reliability of the judges suggests that a Comparative Judgement approach would work well with distributed panels of judges.

[1] Firth, David, and Heather L. Turner. "Bradley-Terry models in R: the BradleyTerry2 package." Journal of Statistical Software 48.9 (2012).
[2] Pollitt, Alastair. "The method of adaptive comparative judgement." Assessment in Education: Principles, Policy & Practice 19.3 (2012): 281-300.

Figure 1: Density distributions of the Comparative Judgement scores (cj) and of the marks for the SPAM question.
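The 37 per cent figure noted in the Appendix 3 script can be checked against the frequencies in the SPAM question and mark scheme (Appendix 1). The derivation below uses only the figures given there: 100 000 emails per week, 5 per cent SPAM, and a filter that removes 97 per cent of SPAM and 3 per cent of genuine email.

```latex
\begin{aligned}
\text{SPAM} &= 0.05 \times 100\,000 = 5000, \qquad \text{genuine} = 100\,000 - 5000 = 95\,000,\\
\text{SPAM blocked} &= 0.97 \times 5000 = 4850, \qquad \text{SPAM delivered} = 5000 - 4850 = 150,\\
\text{genuine blocked} &= 0.03 \times 95\,000 = 2850, \qquad \text{genuine delivered} = 95\,000 - 2850 = 92\,150,\\
\frac{\text{genuine blocked}}{\text{all blocked}} &= \frac{2850}{4850 + 2850} = \frac{2850}{7700} \approx 0.37.
\end{aligned}
```

So roughly 37 per cent of everything the filter blocks is genuine email, which is the observation the markers could not credit without an explicit recommendation.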
Figure 2: The Comparative Judgement scores (cj) with associated standard errors, and the marks for the SPAM assessment.

School Trip

Marking

The mean mark on the School Trip question was very low for both markers (5.2 and 4.9 respectively, out of 15), with standard deviations of 3.8 and 3.2 respectively. The inter-rater marking reliability was higher than on the SPAM assessment, at 0.90. The markers agreed exactly on 44 per cent of the marks, which is again much higher than would be expected for an open-ended question with some inherent subjectivity. The distributions of marks (Figure 3) again suggest that the markers tended towards the middle mark, while both markers awarded relatively few high marks. Again, it seems that most students struggled to move beyond calculations into explanations and justifications, which restricted any subjectivity of interpretation of their answers.

Judging

For the School Trip question there were 17 judges, each completing 70 judgements. Around twice as many judges were recruited as were strictly required, so that inter-rater reliability could be calculated. No judge was removed for variability of judging (Infit > 1.5), so all 1190 judgements were retained. The inter-rater reliability of the judges was 0.91, similar to that between the two markers. The rank order correlation between the judges' scores and the average mark of the two markers was 0.89, and 0.86 and 0.93 with the two markers separately. Again, the high correlation of the judges with the markers suggests that the judging process was valid, with judges generally valuing the same qualities in the scripts as the markers. There were fewer anomalies between the judges and the markers for the School Trip question, although marker1 did find some quality in an explanation that didn’t impress the judges or marker2.
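The judge screening rule used in both trials (a judge is removed if their Infit exceeds 1.5) can be illustrated with a short Python sketch. It is a hedged illustration, not the report's implementation: it uses the standard Rasch-style information-weighted mean square (the sum of squared residuals divided by the sum of model variances), which may differ in detail from the formulation in Pollitt (2012), and it takes script abilities as given, for example from a fitted Bradley-Terry model. The function names `judge_infit` and `screen_judges` are hypothetical.

```python
import math
from collections import defaultdict


def judge_infit(decisions, abilities):
    """Information-weighted mean-square (Infit) misfit for each judge.

    decisions: iterable of (judge, winner, loser), where `winner` is the
        script the judge preferred.
    abilities: {script: ability}, e.g. from a fitted Bradley-Terry model.
    Values near 1 mean decisions about as noisy as the model expects; values
    well above 1 mean a judge who often disagrees with the consensus order.
    """
    squared_residuals = defaultdict(float)
    information = defaultdict(float)
    for judge, winner, loser in decisions:
        # Modelled probability that the preferred script beats the other one.
        p = 1.0 / (1.0 + math.exp(abilities[loser] - abilities[winner]))
        squared_residuals[judge] += (1.0 - p) ** 2  # observed outcome is 1
        information[judge] += p * (1.0 - p)         # model variance of outcome
    return {j: squared_residuals[j] / information[j] for j in squared_residuals}


def screen_judges(decisions, abilities, threshold=1.5):
    """Keep only decisions made by judges whose Infit is at most `threshold`."""
    infit = judge_infit(decisions, abilities)
    kept = [d for d in decisions if infit[d[0]] <= threshold]
    return kept, infit
```

A judge who consistently prefers the script the model rates lower accumulates large residuals relative to the expected variance, so their Infit rises well above 1. In practice the model would then be refitted on the retained decisions.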
While the agreement between judges and markers seems positive, a closer examination of the scripts suggests that the students were mainly reproducing learned approaches to the question, with little evidence of any genuine problem-solving. The best answers, reproduced in Appendix 4, are more complete than other answers but show little originality.

Figure 3: Density distributions of the Comparative Judgement scores (cj) and of the marks for the School Trip question.

Figure 4: The Comparative Judgement scores (cj) with associated standard errors, and the marks for the School Trip assessment.

Discussion

The intention of this study was to examine how well Comparative Judgement performed in assessing open-ended questions that require genuine problem-solving. Overall, Comparative Judgement performed well in assessing the questions in the trial, but so did traditional marking. The obvious explanation for this result is that the variation in responses was due to mistakes made by the students, rather than to variation in the quality of their interpretation and analysis of the data they were presented with. The failure of the questions to achieve their purpose is no reflection on those involved in creating them, but a reflection of how difficult it is to produce such questions. Although the questions were designed to elicit original thinking and answers, it was also clear that many of the students’ approaches were reproductions of taught approaches. Students in this trial struggled to move beyond the procedural mathematics required to complete the questions and to reflect on their approaches and the possible interpretations of their analyses. One way the questions could be improved would be to make them less demanding in their requirement for calculations, and more demanding in the areas of interpretation and analysis.
Overall, it would seem that the greatest challenge for this curriculum is creating questions that are accessible, mathematically valid, and allow genuine problem-solving approaches to shine; the development of such questions may well be a bigger challenge for this curriculum than how to assess the students’ responses.

Appendix 1: Questions and Mark Schemes

SPAM

A company is considering introducing a filter to its email system to remove unwanted advertising mail, which is sometimes referred to as SPAM. It is suggested that the filter will save time and prevent email storage capacity being wasted. The company receives approximately 100 000 emails per week, of which about 5% are SPAM.

A new SPAM filter claims to be 97% accurate. The company take this to mean that 97% of SPAM is correctly filtered out, and only 3% of genuine emails are incorrectly filtered out.

Some of the company managers are worried that the SPAM filter may filter out urgent genuine emails and cause delays; others think that too much SPAM will still get through the system.

Use the information above to find out how many of the company’s emails are likely to be affected by each of these worries.

State whether you would recommend the SPAM filter and give your reasons for this decision.

SPAM mark scheme

Solution

Solutions are likely to include the following points.
● Using the data given to find representative frequencies.
● A recommendation with a reason.

Marks
5-6 for a clear, correct, complete solution which takes account of all the information given and includes a recommendation with a reason that follows from the work.
3-4 for a solution which takes account of the information and includes a recommendation that follows on from the work. There may be some errors in the frequencies, but these are not serious enough to invalidate the argument.
1-2 for some progress towards a solution.

Indicative content
The correct frequencies are as follows.
● In a typical week, 5000 emails are SPAM and 95 000 emails are genuine.
● 4850 SPAM emails are rejected and 150 get through.
● 2850 genuine emails are rejected and 92 150 get through.

School Trip

A group of 50 students are given 5 possible venues for a school reward trip. To make recording easy, each venue is assigned a letter: A, B, C, D or E. The students are asked to place the venues in order of preference, starting with their favourite at the top. When all of the lists are collected, they are sorted into groups whose lists are identical. In total there are 6 groups. This information is given in the table below. For example, group 1 is the preference list submitted by 18 of the students.

Choice     1st  2nd  3rd  4th  5th  Frequency
Group 1    A    D    E    C    B    18
Group 2    B    E    D    C    A    12
Group 3    C    B    E    D    A    8
Group 4    D    C    E    B    A    7
Group 5    E    B    D    C    A    3
Group 6    E    C    D    B    A    2
Total                               50

The school plans to select just one venue. Using the information in the table, suggest different ways to decide on a venue for the reward trip and choose the best way to decide where to go. You should explain how you arrived at your decision, and show your working to choose the venue for the trip.

School trip mark scheme

Solution

Solutions are likely to include the following points.
● A description of a method to make a decision.
● Consideration of more than one possible method and reasons for choosing a particular method.
● Working showing the chosen method in practice.
● A conclusion based on the working.

Marks
13-15 for a clear, correct, complete solution which takes account of all the information given and includes a conclusion. The solution recognises that there is more than one possible way to arrive at a decision and gives reasons for rejecting alternative methods.
10-12 for a clear, correct, complete solution which takes account of the information and includes a conclusion.
7-9 for substantial progress towards a complete solution. This level includes responses which correctly describe a decision method that takes account of all the information and start to apply the decision method, but there may be some errors or no conclusion is stated.
4-6 for some progress towards a complete solution. Responses at this level include some correct work, for example describing one or more decision methods but not applying any of them. This level also includes responses that correctly use a very simple decision method with a reason.
1-3 for some relevant work. Responses at this level have at least one correct statement or some relevant working, but these are not part of a coherently communicated solution.

Indicative content
Choices of decision methods for the top three levels should take account of all the data in the table.
Example of 4-6 category: Most students chose A. Although more students put A last than put it first, they will understand a decision based on top votes easily and it is quick to use so I would choose A.
Example of 1-3 category: Most students chose A but a lot of students also put it last.

Appendix 2: Instructions for Judges

Notes to Judges

You should have received an email which contains a link to the online assessment URL. When you click on this link you should see the screen below.

Click on the blue start button in the bottom right of the box and you should see two items of work for you to judge. You can zoom in on either item of work by placing the mouse pointer on the item and left-clicking once. Use the button in the top right of the screen to revert to the normal two-item view.

Once you have decided which item is the better solution, use the right or left button (see below) to select this item.

After making your selection you should automatically obtain two more items to judge, and so on, until the screen informs you that you have completed your judgements.
(See below.)

The questions which the students were asked to answer in the assessment trial are shown on the next page. There are also examples of some of the possible calculations and/or systems which the students may have chosen to use. This should save you some time.

Please note that this is not a mark scheme, and you are encouraged to use your own judgement to identify, in each case, which of the two solutions is better.

Appendix 3: Scripts from SPAM

A Perfectly Marked Answer (6 out of 6 for both markers)

An Implicit Recommendation

Appendix 4: Scripts from School Trip

The Best Judged Answer

Appendix 5: Tables of results

"judging mark" is the Comparative Judgement score rescaled to the marks' mean and standard deviation; rows are in descending order of judging mark.

SPAM

id       judging mark  standard error  marker1  marker2
mei-12   5.14          0.38            5        5
mei-28   4.93          0.34            5        5
mei-8    4.81          0.35            5        5
mei-14   4.71          0.32            4        4
mei-2    4.40          0.31            3        3
mei-13   4.36          0.30            5        6
mei-27   4.35          0.32            6        5
mei-22   4.17          0.30            6        5
mei-3    3.95          0.29            3        5
mei-30   3.89          0.29            4        3
mei-21   3.75          0.29            3        3
mei-17   3.73          0.30            6        6
mei-25   3.72          0.29            3        3
mei-31   3.70          0.28            3        3
mei-51   3.59          0.29            3        3
mei-50   3.56          0.29            3        3
mei-24   3.49          0.29            3        3
mei-44   3.39          0.27            2        3
mei-18   3.38          0.28            3        3
mei-19   3.31          0.28            3        3
mei-29   3.27          0.28            3        3
mei-32   3.21          0.28            2        4
mei-53   3.00          0.29            3        2
mei-4    2.97          0.28            3        3
mei-7    2.97          0.28            3        6
mei-20   2.85          0.29            3        3
mei-6    2.66          0.28            3        3
mei-52   2.66          0.28            3        2
mei-23   2.65          0.28            3        3
mei-48   2.61          0.28            3        3
mei-26   2.58          0.28            3        3
mei-43   2.55          0.28            3        2
mei-11   2.34          0.28            3        4
mei-33   2.20          0.29            1        2
mei-36   2.19          0.29            2        2
mei-15   2.16          0.29            3        2
mei-5    2.09          0.29            2        2
mei-46   1.95          0.29            2        2
mei-45   1.90          0.30            2        2
mei-39   1.82          0.29            0        1
mei-41   1.64          0.29            1        2
mei-16   1.55          0.30            2        2
mei-37   1.53          0.31            1        2
mei-42   1.28          0.32            1        1
mei-47   1.00          0.32            0        1
mei-10   0.94          0.33            2        2
mei-34   0.90          0.33            1        1
mei-35   0.85          0.33            2        1
mei-49   0.77          0.32            0        1
mei-9    0.55          0.34            1        1
mei-38   0.34          0.38            1        1
mei-1    0.25          0.37            1        1
mei-40   -0.04         0.40            1        1

School Trip

id       judging mark  standard error  marker1  marker2
mei-v6   11.10         1.05            11       12
mei-v16  10.27         0.94            9        9
mei-v7   9.22          0.85            12       12
mei-v8   8.77          0.84            13       9
mei-v5   8.77          0.84            11       9
mei-v18  8.53          0.84            9        8
mei-v27  8.46          0.84            10       10
mei-v24  7.84          0.82            11       9
mei-v15  7.83          0.84            10       9
mei-v17  7.53          0.81            9        8
mei-v26  7.40          0.81            9        6
mei-v14  7.21          0.82            4        5
mei-v33  6.90          0.80            4        5
mei-v29  6.82          0.80            2        5
mei-v12  6.45          0.80            4        4
mei-v2   6.42          0.81            6        3
mei-v3   6.36          0.80            7        5
mei-v13  6.26          0.80            4        5
mei-v11  5.65          0.80            4        5
mei-v30  5.64          0.80            4        4
mei-v34  5.20          0.80            4        4
mei-v1   5.13          0.80            5        3
mei-v28  5.04          0.80            2        5
mei-v4   4.38          0.81            5        5
mei-v37  4.25          0.81            7        5
mei-v19  4.02          0.82            2        2
mei-v23  3.80          0.82            2        2
mei-v36  3.34          0.84            1        2
mei-v25  3.20          0.82            9        4
mei-v32  3.16          0.85            4        4
mei-v35  3.10          0.84            1        2
mei-v9   2.35          0.88            3        3
mei-v38  1.74          0.92            1        1
mei-v39  0.78          0.94            1        1
mei-v22  0.77          0.92            1        1
mei-v31  0.23          0.92            1        2
mei-v20  -0.86         0.99            1        1
mei-v21  -1.02         1.09            1        1
mei-v10  -5.04         1.88            0        0