Restarted GMRES Dynamics


Mark Embree

Computational and Applied Mathematics

Rice University

Supported in part by EPSRC grant GR/M12414, The Oxford Eigenvalue Project.

With thanks to John Sabino, Nick Trefethen, Andy Wathen


Householder Symposium XV — 21 June 2002

Corrected version, 19 July 2002


GMRES

Iteratively solve:

Ax = b

with iterates drawn from a Krylov subspace:

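$$x_k \in \mathcal{K}_k(A, b),$$

where

$$\mathcal{K}_k(A, b) = \operatorname{span}\{b, Ab, A^2 b, \ldots, A^{k-1} b\} = \{p(A)b : p \in \mathcal{P}_{k-1}\}.$$

$\mathcal{P}_\ell$ denotes the polynomials of degree $\ell$ or less.

Then the $k$th residual is

$$r_k = b - A x_k = p_k(A) b.$$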

Full GMRES: [Saad & Schultz 1986]

The residual $r_k$ is minimized:

$$\|r_k\| = \min_{\substack{p \in \mathcal{P}_k \\ p(0)=1}} \|p(A) b\|.$$

Roots of GMRES Residual Polynomial

$$\|r_k\| = \|p_k(A) b\| = \min_{\substack{p \in \mathcal{P}_k \\ p(0)=1}} \|p(A) b\|$$

Roots of $p_k$ are harmonic Ritz values:

• Reciprocals of Rayleigh–Ritz eigenvalue estimates for $A^{-1}$ drawn from $A\mathcal{K}_k(A, b)$.

• Characterized as pseudoeigenvalues [Simoncini & Gallopoulos 1996]; see also [Toh & Trefethen 1999].

• Derivation in [Freund 1992], [Paige, Parlett, & van der Vorst 1995].

Key point: Roots of the optimal $p_k$ change at each iteration.

An Illustrative Example

Consider the convection–diffusion equation

$$-\nu \Delta u + w \cdot \nabla u = 0 \quad \text{on } \Omega = [0, 1] \times [0, 1]$$

with $w = (0, 1)$ and $\nu = 0.01$, discretized on a quadrilateral grid with bilinear Streamline–Upwinded Petrov–Galerkin (SUPG) finite elements.

The resultant matrix was described by [Fischer, Ramage, Silvester, Wathen 1999].

This is the same class of matrices investigated by Jörg Liesen and Zdeněk Strakoš.

Run restarted GMRES for a grid of 33 × 33 unknowns;

right hand side from the boundary value problem.

SUPG Pseudospectra

[Figure: pseudospectra for an SUPG problem with 33 × 33 unknowns, plotted in the complex plane (real axis from −0.01 to 0.07, imaginary axis from −0.05 to 0.05). Boundaries of the pseudospectra are shown for $\varepsilon = 10^{-2}, 10^{-3}, \ldots, 10^{-10}$; dots (•) mark the eigenvalues of the coefficient matrix.]

Restarted GMRES

It is expensive to construct and store an orthogonal basis for $\mathcal{K}_k(A, b)$ $\Longrightarrow$ restart GMRES.

GMRES(m) [Saad & Schultz 1986]

Use xm as the starting guess for a fresh run of GMRES.

Denote the residual after $k$ iterations of GMRES($m$) by $r_k^{(m)}$.

When $k$ is a multiple of $m$, we have

$$r_k^{(m)} = \prod_{j=1}^{k/m} p_{m,j}(A)\, b,$$

where $\deg(p_{m,j}) \le m$.

Each restart locks in $m$ roots in the residual polynomial.

How does one select the GMRES restart parameter?

The Cost of Restarting

• Restarting compromises global optimality.

− GMRES with no restarts converges exactly in no more than n steps;

restarted GMRES may fail to converge.

Example of Saad and Schultz [1986]:

$$A = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}, \qquad b = \begin{pmatrix} 1 \\ 0 \end{pmatrix}.$$

• Eigenvalues of $A$: $\{-1, 1\}$.

• $b$ has equal components in each eigenvector direction.

• Optimal degree-1 polynomial is $p_1(A) = I$.

• GMRES(1) makes no progress; GMRES(2) converges in two iterations.
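The Saad and Schultz example can be checked by hand in a few lines. The sketch below uses exact rational arithmetic; the helper names (`matvec`, `dot`, `gmres1_step`) are illustrative and do not come from the slides. GMRES(1) is written as the one-parameter minimization of $\|r - aAr\|$, and the GMRES(2) residual uses the polynomial $p(z) = 1 - z^2$, which satisfies $p(0) = 1$ and annihilates $b$ because $A^2 = I$.

```python
from fractions import Fraction

def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def dot(x, y):
    return sum(xi * yi for xi, yi in zip(x, y))

def gmres1_step(A, r):
    """One GMRES(1) step: minimize ||r - a*A r|| over the scalar a."""
    Ar = matvec(A, r)
    denom = dot(Ar, Ar)
    if denom == 0:
        return r
    a = Fraction(dot(r, Ar), denom)
    return [ri - a * ari for ri, ari in zip(r, Ar)]

A = [[0, 1], [1, 0]]
b = [Fraction(1), Fraction(0)]

# GMRES(1): r^T A r = 0 at every step, so the residual never changes.
r = b
for _ in range(5):
    r = gmres1_step(A, r)
gmres1_residual = r

# GMRES(2): p(z) = 1 - z^2 gives p(A) b = b - A^2 b = 0 after one cycle.
AAb = matvec(A, matvec(A, b))
gmres2_residual = [bi - ci for bi, ci in zip(b, AAb)]
```

Here `gmres1_residual` equals `b` after any number of steps, while `gmres2_residual` is the zero vector.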

Typical Restart Behavior

Working Assumption:

GMRES($m$) produces smaller residuals than GMRES($\ell$) if $\ell < m$.

This always holds for early iterations: if $\ell < m$, then $\|r_k^{(\ell)}\|_2 \ge \|r_k^{(m)}\|_2$ for $k \le m$.

Typical example: A = FS 760 1 from Matrix Market

[Figure: relative residual norm $\|r_k^{(m)}\|_2 / \|r_0\|_2$ (log scale, $10^{0}$ down to $10^{-10}$) versus iteration $k$ (0 to 1000) for GMRES(1), GMRES(5), GMRES(10), and GMRES(20).]

Restarted GMRES for a Convection–Diffusion Problem

Now try Restarted GMRES on the SUPG Matrix:

Run restarted GMRES for a grid of 33 × 33 unknowns;

right hand side from the boundary value problem.

[Figure: relative residual norm (log scale, $10^{0}$ down to $10^{-10}$) versus iteration (0 to 200); curves labeled 1, 20, and Full.]

Extreme behavior also observed by:

[de Sturler 1997], [Walker, Watson, et al.], [Eiermann, Ernst, & Schneider 2000]


How Extreme Can This Behavior Be?

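Simplest possible case: GMRES(1) versus GMRES(2) for $A \in \mathbb{R}^{3\times 3}$.

[Eiermann, Ernst, & Schneider 2000] consider

$$A = \begin{pmatrix} 1 & 0 & 0 \\ 1 & 1 & 0 \\ 0 & 1 & 1 \end{pmatrix}, \qquad b = \begin{pmatrix} -1 \\ 1 \\ 1 \end{pmatrix},$$

where $\|r_4^{(1)}\|_2 = 0.057\ldots$ but $\|r_4^{(2)}\|_2 = 0.26\ldots$.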

We wish to maximize the difference at the third iteration by considering:

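$$A = \begin{pmatrix} 1 & \alpha & \beta \\ 0 & 1 & \gamma \\ 0 & 0 & 1 \end{pmatrix}, \qquad b = \begin{pmatrix} b_1 \\ b_2 \\ 1 \end{pmatrix}.$$

Find extreme behavior via the optimization problem

$$\min_{\alpha,\beta,\gamma,b_1,b_2 \in \mathbb{R}} f(\alpha, \beta, \gamma, b_1, b_2),$$

with objective functions like

$$f(\alpha, \beta, \gamma, b_1, b_2) = \frac{\|r_3^{(1)}\|_2}{\|r_3^{(2)}\|_2} \qquad \text{or} \qquad f(\alpha, \beta, \gamma, b_1, b_2) = \frac{\|r_3^{(1)}\|_2}{\|r_3^{(2)}\|_2^2}.$$

MATLAB's fminunc led to some interesting matrices. . .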

How Extreme Can This Behavior Be?

. . . but even discrete optimization turned out to be sufficient!

To get “nice” matrices, restrict parameters to small integer values:

$$\alpha, \beta, \gamma, b_1, b_2 \in \{-5, -4, -3, -2, -1, 0, 1, 2, 3, 4, 5\}$$

and look for cases where $\|r_3^{(1)}\|_2 < 10^{-8}$, but GMRES(2) does not converge in 100 iterations and $\|r_{100}^{(2)}\|_2 > \frac{1}{10}\|r_3^{(2)}\|_2$.

    % candidate values for the free entries of A and b
    alpha = [-5:5]; beta = [-5:5]; gamma = [-5:5];
    b1 = [-5:5]; b2 = [-5:5];
    for j12=1:length(alpha), for j13=1:length(beta), for j23=1:length(gamma)
      for j1=1:length(b1), for j2=1:length(b2)
        A = [1 alpha(j12) beta(j13);
             0 1          gamma(j23);
             0 0          1];
        b = [b1(j1);b2(j2);1];
        % GMRES(1): keep cases that converge within three iterations
        res1 = rgmres(A,b,1,1e-10,3);
        if (res1(end) < 1e-8)
          % GMRES(2): keep cases that stagnate over 100 iterations
          res2 = rgmres(A,b,2,1e-10,100);
          if length(res2) > 100, if res2(end) > .1*res2(4),
            disp([A b])
          end,end
        end
      end, end
    end, end, end
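The routine `rgmres` called in the search is not shown in the slides. As a rough stand-in for small dense real matrices, the sketch below records the residual norm after every iteration of GMRES($m$) with $x_0 = 0$. The function name `restarted_gmres_residuals`, its signature, and the breakdown tolerance are assumptions made for illustration. It orthogonalizes the vectors $Ar, A^2r, \ldots, A^m r$ by modified Gram–Schmidt, which is adequate for tiny $m$ but is not how a production GMRES code builds its basis.

```python
import math

def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def norm(x):
    return math.sqrt(sum(xi * xi for xi in x))

def restarted_gmres_residuals(A, b, m, cycles):
    """Residual norms of GMRES(m) with x0 = 0, one entry per iteration."""
    r = list(b)
    history = [norm(r)]
    for _ in range(cycles):
        basis = []   # orthonormal basis of span{A r, ..., A^j r} for this cycle
        v = r        # powers of A applied to the residual at the cycle start
        for _ in range(m):
            v = matvec(A, v)
            w = list(v)
            for q in basis:                      # modified Gram-Schmidt
                c = sum(qi * wi for qi, wi in zip(q, w))
                w = [wi - c * qi for wi, qi in zip(w, q)]
            nw = norm(w)
            if nw > 1e-14 * norm(v):             # skip (numerically) dependent vectors
                q = [wi / nw for wi in w]
                basis.append(q)
                c = sum(qi * ri for qi, ri in zip(q, r))
                r = [ri - c * qi for ri, qi in zip(r, q)]
            history.append(norm(r))
    return history

A = [[1.0, 1.0, 1.0], [0.0, 1.0, 3.0], [0.0, 0.0, 1.0]]
b = [2.0, -4.0, 1.0]
res1 = restarted_gmres_residuals(A, b, 1, 3)    # GMRES(1), three iterations
res2 = restarted_gmres_residuals(A, b, 2, 50)   # GMRES(2), 100 iterations
```

With this pair, `res1[3]` is at rounding level while `res2[100]` stays above `0.1 * res2[3]`, which is the selection rule applied in the search.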

Harvest of Discrete Search

Each pair is written row by row in MATLAB notation (rows separated by semicolons).

A = [1 -2 -4; 0 1 -2; 0 0 1],  b = [5; 0; 1]
A = [1 -2 4; 0 1 2; 0 0 1],  b = [-5; 0; 1]
A = [1 -1 -1; 0 1 -4; 0 0 1],  b = [5; 3; 1]
A = [1 -1 -1; 0 1 4; 0 0 1],  b = [-3; -5; 1]
A = [1 -1 1; 0 1 3; 0 0 1],  b = [-4; -2; 1]
A = [1 -1 2; 0 1 3; 0 0 1],  b = [-5; -1; 1]
A = [1 1 -1; 0 1 -4; 0 0 1],  b = [-3; 5; 1]
A = [1 1 -1; 0 1 4; 0 0 1],  b = [5; -3; 1]
A = [1 -2 -4; 0 1 3; 0 0 1],  b = [-5; -5; 1]
A = [1 -1 -2; 0 1 -3; 0 0 1],  b = [5; 1; 1]
A = [1 1 1; 0 1 3; 0 0 1],  b = [2; -4; 1]
A = [1 1 2; 0 1 -2; 0 0 1],  b = [-4; 0; 1]
A = [1 -1 1; 0 1 -4; 0 0 1],  b = [3; 5; 1]
A = [1 -1 -1; 0 1 -3; 0 0 1],  b = [4; 2; 1]
A = [1 -1 1; 0 1 4; 0 0 1],  b = [-5; -3; 1]
A = [1 1 -2; 0 1 2; 0 0 1],  b = [4; 0; 1]
A = [1 1 -1; 0 1 -3; 0 0 1],  b = [-2; 4; 1]
A = [1 1 1; 0 1 -4; 0 0 1],  b = [-5; 3; 1]
A = [1 1 1; 0 1 4; 0 0 1],  b = [3; -5; 1]
A = [1 2 -4; 0 1 -3; 0 0 1],  b = [-5; 5; 1]
A = [1 -2 4; 0 1 -3; 0 0 1],  b = [5; 5; 1]
A = [1 -1 -2; 0 1 -2; 0 0 1],  b = [4; 0; 1]
A = [1 -1 -1; 0 1 3; 0 0 1],  b = [-2; -4; 1]
A = [1 -1 1; 0 1 -3; 0 0 1],  b = [2; 4; 1]
A = [1 -1 2; 0 1 2; 0 0 1],  b = [-4; 0; 1]
A = [1 1 -2; 0 1 3; 0 0 1],  b = [5; -1; 1]
A = [1 1 -1; 0 1 3; 0 0 1],  b = [4; -2; 1]
A = [1 1 1; 0 1 -3; 0 0 1],  b = [-4; 2; 1]
A = [1 1 2; 0 1 -3; 0 0 1],  b = [-5; 1; 1]
A = [1 2 -4; 0 1 2; 0 0 1],  b = [5; 0; 1]

Restarts: An Extreme Example

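$$A = \begin{pmatrix} 1 & 1 & 1 \\ 0 & 1 & 3 \\ 0 & 0 & 1 \end{pmatrix}, \qquad b = \begin{pmatrix} 2 \\ -4 \\ 1 \end{pmatrix}, \qquad x_0 = 0.$$

[Figure: $\|r_k^{(m)}\|_2 / \|r_0\|$ (log scale, $10^{0}$ down to $10^{-15}$) versus iteration $k$ (0 to 30) for GMRES(1) and GMRES(2).]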

This right hand side b is special in that GMRES(1) converges exactly at the third iteration.

But GMRES(1) is superior to GMRES(2) for many other right hand sides, too. . .

GMRES(1) and GMRES(2) Residuals

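$$A = \begin{pmatrix} 1 & 1 & 1 \\ 0 & 1 & 3 \\ 0 & 0 & 1 \end{pmatrix}, \qquad b = \begin{pmatrix} 2 \\ -4 \\ 1 \end{pmatrix}, \qquad x_0 = 0.$$

Straightforward computations yield

$$r_0 = \begin{pmatrix} 2 \\ -4 \\ 1 \end{pmatrix}, \quad r_1^{(1)} = \begin{pmatrix} 3 \\ -3 \\ 0 \end{pmatrix}, \quad r_2^{(1)} = \begin{pmatrix} 3 \\ 0 \\ 0 \end{pmatrix}, \quad r_3^{(1)} = \begin{pmatrix} 0 \\ 0 \\ 0 \end{pmatrix}.$$

Hence, GMRES(1) converges exactly in three iterations.

On the other hand, GMRES(2) yields

$$r_0 = \begin{pmatrix} 2 \\ -4 \\ 1 \end{pmatrix}, \quad r_1^{(2)} = \begin{pmatrix} 3 \\ -3 \\ 0 \end{pmatrix}, \quad r_2^{(2)} = \frac{1}{2}\begin{pmatrix} 3 \\ 0 \\ 3 \end{pmatrix}, \quad r_3^{(2)} = \frac{1}{28}\begin{pmatrix} 24 \\ -27 \\ 33 \end{pmatrix}.$$

Clearly, $\|r_3^{(2)}\|_2 \ne 0$.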

Convergence of GMRES(1), Stagnation of GMRES(2)

 k    $\|r_k^{(1)}\|_2/\|r_0\|_2$    $\|r_k^{(2)}\|_2/\|r_0\|_2$
 0    1                      1
 1    0.925820099772...      0.925820099772...
 2    0.654653670707...      0.462910049886...
 3    0                      0.381324223286...
 4    0                      0.377189160453...
 5    0                      0.376888290025...
 6    0                      0.376548629114...
 7    0                      0.376528985604...
 8    0                      0.376512989917...
 9    0                      0.376511942997...
10    0                      0.376502488858...
11    0                      0.376501839534...
12    0                      0.376498558527...
13    0                      0.376498325779...
14    0                      0.376497022258...
15    0                      0.376496927936...
16    0                      0.376496407650...
17    0                      0.376496369526...
18    0                      0.376496158161...
19    0                      0.376496142549...
20    0                      0.376496055944...
21    0                      0.376496049515...
22    0                      0.376496013816...
23    0                      0.376496011157...
24    0                      0.376495996388...
25    0                      0.376495995285...

These residual norms were computed in exact arithmetic with Mathematica.

[Figure: roots of the GMRES(2) residual polynomial in the complex plane (real axis from −20 to 20, imaginary axis from −80 to 80); the number next to each root indicates the cycle, 1 through 11.]

Analysis of GMRES(1) and GMRES(2)

One cycle of GMRES(1) uses the residual polynomial $p(z) = 1 + \alpha_k z$:

$$r_{k+1} = p(A) r_k = r_k + \alpha_k A r_k, \qquad \text{where} \quad \alpha_k = -\frac{r_k^T A r_k}{r_k^T A^T A r_k}.$$

[Krasnosel'skiĭ and Kreĭn 1952]

One cycle of GMRES(2) uses the residual polynomial $p(z) = 1 + \beta_k z + \gamma_k z^2$:

$$r_{k+2} = p(A) r_k = r_k + \beta_k A r_k + \gamma_k A^2 r_k,$$

where

$$\beta_k = \frac{(r_k^T A A r_k)(r_k^T A^T A A r_k) - (r_k^T A r_k)(r_k^T A^T A^T A A r_k)}{(r_k^T A^T A r_k)(r_k^T A^T A^T A A r_k) - (r_k^T A^T A A r_k)(r_k^T A^T A A r_k)},$$

$$\gamma_k = \frac{(r_k^T A r_k)(r_k^T A^T A A r_k) - (r_k^T A A r_k)(r_k^T A^T A r_k)}{(r_k^T A^T A r_k)(r_k^T A^T A^T A A r_k) - (r_k^T A^T A A r_k)(r_k^T A^T A A r_k)}.$$

Conditions for GMRES(2) to exactly stagnate:

$$(r_k^T A r_k)(r_k^T A^T A^T A A r_k) = (r_k^T A A r_k)(r_k^T A^T A A r_k),$$

$$(r_k^T A r_k)(r_k^T A^T A A r_k) = (r_k^T A A r_k)(r_k^T A^T A r_k).$$
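The two cycle maps can be evaluated exactly from the explicit coefficient formulas. The sketch below does this with rational arithmetic for $A = \begin{pmatrix} 1 & 1 & 1 \\ 0 & 1 & 3 \\ 0 & 0 & 1 \end{pmatrix}$ and $r_0 = (2, -4, 1)^T$; the function names `gmres1_cycle` and `gmres2_cycle` are illustrative. With $u = Ar$ and $v = A^2 r$, the inner products in the formulas are $r^TAr = r \cdot u$, $r^TAAr = r \cdot v$, $r^TA^TAr = u \cdot u$, $r^TA^TAAr = u \cdot v$, and $r^TA^TA^TAAr = v \cdot v$.

```python
from fractions import Fraction

def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def dot(x, y):
    return sum(xi * yi for xi, yi in zip(x, y))

def gmres1_cycle(A, r):
    """r + alpha*A r with alpha = -(r^T A r)/(r^T A^T A r)."""
    u = matvec(A, r)
    uu = dot(u, u)
    if uu == 0:
        return r
    alpha = -Fraction(dot(r, u), uu)
    return [ri + alpha * ui for ri, ui in zip(r, u)]

def gmres2_cycle(A, r):
    """r + beta*A r + gamma*A^2 r with beta, gamma from the explicit formulas."""
    u = matvec(A, r)
    v = matvec(A, u)
    ru, rv, uu, uv, vv = dot(r, u), dot(r, v), dot(u, u), dot(u, v), dot(v, v)
    det = uu * vv - uv * uv
    if det == 0:
        # A r and A^2 r are linearly dependent: a degree-1 step is already optimal
        return gmres1_cycle(A, r)
    beta = Fraction(rv * uv - ru * vv, det)
    gamma = Fraction(ru * uv - rv * uu, det)
    return [ri + beta * ui + gamma * vi for ri, ui, vi in zip(r, u, v)]

A = [[1, 1, 1], [0, 1, 3], [0, 0, 1]]
r0 = [2, -4, 1]

r = r0
for _ in range(3):
    r = gmres1_cycle(A, r)
after_three_gmres1_steps = r              # exactly zero

after_one_gmres2_cycle = gmres2_cycle(A, r0)   # (3/2, 0, 3/2)
```

For this starting vector the first GMRES(2) cycle has $\beta_0 = -1$ and $\gamma_0 = 3/2$.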

Stagnation Analysis

Consider only initial residuals of the form

$$r_0 = \begin{pmatrix} \xi \\ \eta \\ 1 \end{pmatrix}.$$

GMRES(1) stagnates when $r_k^T A r_k = 0$, which simplifies to:

$$\xi^2 + \xi\eta + \eta^2 + \xi + 3\eta + 1 = 0,$$

the equation for an oblique ellipse in the $(\xi, \eta)$ plane.

GMRES(2) stagnates when, in addition, the following holds:

$$\xi^2 + 2\xi\eta + \eta^2 + 5\xi + 6\eta + 1 = 0,$$

the equation for an oblique parabola.

There are two fixed points for GMRES(2).

Jacobian of GMRES(2) map indicates one stable attractor, one unstable point.
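The two stagnation curves can be cross-checked against the quadratic forms they come from. The sketch below assumes the matrix $A = \begin{pmatrix} 1 & 1 & 1 \\ 0 & 1 & 3 \\ 0 & 0 & 1 \end{pmatrix}$ of the extreme example and compares $r^TAr$ and $r^TA^2r$ with the ellipse and parabola polynomials on a grid of rational points. The point $(\xi, \eta) = (1, -1)$ was found by hand for this sketch and lies on both curves; the slides do not give the coordinates of the fixed points, nor which of them is the stable one.

```python
from fractions import Fraction

A = [[1, 1, 1], [0, 1, 3], [0, 0, 1]]

def matvec(M, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in M]

def dot(x, y):
    return sum(xi * yi for xi, yi in zip(x, y))

def ellipse(xi, eta):
    return xi * xi + xi * eta + eta * eta + xi + 3 * eta + 1

def parabola(xi, eta):
    return xi * xi + 2 * xi * eta + eta * eta + 5 * xi + 6 * eta + 1

def quadratic_forms(xi, eta):
    """Return (r^T A r, r^T A^2 r) for r = (xi, eta, 1)."""
    r = [xi, eta, 1]
    Ar = matvec(A, r)
    AAr = matvec(A, Ar)
    return dot(r, Ar), dot(r, AAr)

grid = [Fraction(n, 2) for n in range(-10, 11)]
forms_match = all(
    quadratic_forms(x, e) == (ellipse(x, e), parabola(x, e))
    for x in grid for e in grid
)

on_both_curves = (ellipse(1, -1), parabola(1, -1))   # (0, 0)
```

Since both quadratic forms vanish at $r = (1, -1, 1)^T$, both numerators in the GMRES(2) coefficient formulas are zero there and one cycle leaves that residual unchanged.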

GMRES(1) For a Range of Initial Residuals

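$$b = \begin{pmatrix} \xi \\ \eta \\ 1 \end{pmatrix}, \qquad A = \begin{pmatrix} 1 & 1 & 1 \\ 0 & 1 & 3 \\ 0 & 0 & 1 \end{pmatrix}, \qquad x_0 = 0.$$

[Figure: color plot in the $(\xi, \eta)$ plane.]

Color indicates $\log_{10}(\|r_k^{(1)}\|_2/\|r_0\|_2)$ for $k = 100$.

Ellipse indicates fixed points of the non-linear map (black=stable; white=unstable).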

GMRES(2) For a Range of Initial Residuals

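$$b = \begin{pmatrix} \xi \\ \eta \\ 1 \end{pmatrix}, \qquad A = \begin{pmatrix} 1 & 1 & 1 \\ 0 & 1 & 3 \\ 0 & 0 & 1 \end{pmatrix}, \qquad x_0 = 0.$$

[Figure: color plot in the $(\xi, \eta)$ plane.]

Color indicates $\log_{10}(\|r_k^{(2)}\|_2/\|r_0\|_2)$ for $k = 100$.

Dots indicate fixed points of the non-linear map (black=stable; white=unstable).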

Extreme Behavior For Diagonalizable Matrices

Some might wonder: Is Jordan structure important in the previous example?

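Consider matrices of the form:

$$A = \begin{pmatrix} 1 & \alpha & \beta \\ 0 & 2 & \gamma \\ 0 & 0 & 3 \end{pmatrix}, \qquad b = \begin{pmatrix} b_1 \\ b_2 \\ 1 \end{pmatrix}.$$

Each pair is written row by row in MATLAB notation (rows separated by semicolons).

A = [1 -5 -3; 0 2 -2; 0 0 3],  b = [4; 0; 1]
A = [1 -3 5; 0 2 3; 0 0 3],  b = [-5; 2; 1]
A = [1 2 -2; 0 2 4; 0 0 3],  b = [3; 1; 1]
A = [1 -2 2; 0 2 4; 0 0 3],  b = [-3; 1; 1]
A = [1 3 -5; 0 2 3; 0 0 3],  b = [5; 2; 1]
A = [1 -5 3; 0 2 2; 0 0 3],  b = [-4; 0; 1]
A = [1 -2 -5; 0 2 -5; 0 0 3],  b = [4; -2; 1]
A = [1 -2 5; 0 2 5; 0 0 3],  b = [-4; 2; 1]
A = [1 2 2; 0 2 -4; 0 0 3],  b = [-3; -1; 1]
A = [1 3 5; 0 2 -3; 0 0 3],  b = [-5; -2; 1]
A = [1 5 3; 0 2 -2; 0 0 3],  b = [-4; 0; 1]
A = [1 -3 -5; 0 2 -3; 0 0 3],  b = [5; -2; 1]
A = [1 -2 -2; 0 2 -4; 0 0 3],  b = [3; -1; 1]
A = [1 2 -5; 0 2 5; 0 0 3],  b = [4; 2; 1]
A = [1 2 5; 0 2 -5; 0 0 3],  b = [-4; -2; 1]
A = [1 5 -3; 0 2 2; 0 0 3],  b = [4; 0; 1]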

Extreme Behavior For Diagonalizable Matrices

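We focus on one of these examples:

$$A = \begin{pmatrix} 1 & 2 & -2 \\ 0 & 2 & 4 \\ 0 & 0 & 3 \end{pmatrix}, \qquad b = \begin{pmatrix} 3 \\ 1 \\ 1 \end{pmatrix}, \qquad x_0 = 0.$$

[Figure: $\|r_k^{(m)}\|_2 / \|r_0\|$ (log scale, $10^{0}$ down to $10^{-15}$) versus iteration $k$ (0 to 30) for GMRES(1) and GMRES(2).]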

Now consider the dynamical system induced by the GMRES(1) and GMRES(2) non-linear maps. . .

GMRES(2) For a Range of Initial Residuals

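$$b = \begin{pmatrix} \xi \\ \eta \\ 1 \end{pmatrix}, \qquad A = \begin{pmatrix} 1 & 2 & -2 \\ 0 & 2 & 4 \\ 0 & 0 & 3 \end{pmatrix}, \qquad x_0 = 0.$$

[Figure: color plot in the $(\xi, \eta)$ plane.]

Color indicates $\log_{10}(\|r_k^{(2)}\|_2/\|r_0\|_2)$ for $k = 100$.

Dots indicate fixed points of the non-linear map (black=stable; white=unstable).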

GMRES(1) For a Range of Initial Residuals

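$$b = \begin{pmatrix} \xi \\ \eta \\ 1 \end{pmatrix}, \qquad A = \begin{pmatrix} 1 & 2 & -2 \\ 0 & 2 & 4 \\ 0 & 0 & 3 \end{pmatrix}, \qquad x_0 = 0.$$

[Figure: color plot in the $(\xi, \eta)$ plane.]

Color indicates $\log_{10}(\|r_k^{(1)}\|_2/\|r_0\|_2)$ for $k = 100$.

Ellipse indicates fixed points of the non-linear map (black=stable; white=unstable).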

GMRES(1): Close-Up Near Unstable Fixed Points

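$$b = \begin{pmatrix} \xi \\ \eta \\ 1 \end{pmatrix}, \qquad A = \begin{pmatrix} 1 & 2 & -2 \\ 0 & 2 & 4 \\ 0 & 0 & 3 \end{pmatrix}, \qquad x_0 = 0.$$

[Figure: close-up color plot in the $(\xi, \eta)$ plane.]

Color indicates $\log_{10}(\|r_k^{(1)}\|_2/\|r_0\|_2)$ for $k = 100$.

White curve indicates unstable fixed points.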

GMRES(1) on a 2-by-2 Example

We want to understand this apparent sensitive dependence for GMRES(1).

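Thus, we reduce the dimension as far as possible.

$$A = \begin{pmatrix} 1 & -2 \\ 0 & 1 \end{pmatrix}, \qquad r_0 = \begin{pmatrix} \alpha \\ \beta \end{pmatrix}.$$

How does convergence vary as a function of $\alpha$ and $\beta$?

GMRES(1):

$$r_{k+1} = r_k - \frac{r_k^T A r_k}{r_k^T A^T A r_k} A r_k.$$

This iteration makes no progress whenever $r_0^T A r_0 = 0$:

$$r_0^T A r_0 = \begin{pmatrix} \alpha & \beta \end{pmatrix} \begin{pmatrix} 1 & -2 \\ 0 & 1 \end{pmatrix} \begin{pmatrix} \alpha \\ \beta \end{pmatrix} = \alpha^2 - 2\alpha\beta + \beta^2 = (\alpha - \beta)^2,$$

so exact stagnation can only occur when $\alpha = \beta$.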

100 GMRES(1) Iterations on a 2-by-2 Example

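$$A = \begin{pmatrix} 1 & -2 \\ 0 & 1 \end{pmatrix}, \qquad r_0 = \begin{pmatrix} \alpha \\ \beta \end{pmatrix}.$$

[Figure: $\log_{10}(\|r_{100}\|/\|r_0\|)$ as a function of $\alpha$ (horizontal axis) and $\beta$ (vertical axis).]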

GMRES(1) on a 2-by-2 Example

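$$r_1 = \frac{2\beta^2}{\alpha^2 - 4\alpha\beta + 5\beta^2} \begin{pmatrix} \beta \\ 2\beta - \alpha \end{pmatrix}.$$

Exact convergence in one step only if $\beta = 0$.

$$r_2 = \frac{2\beta^2}{\alpha^2 - 4\alpha\beta + 5\beta^2} \cdot \frac{2(2\beta - \alpha)^2}{5\alpha^2 - 16\alpha\beta + 13\beta^2} \begin{pmatrix} 2\beta - \alpha \\ 3\beta - 2\alpha \end{pmatrix}.$$

Exact convergence in two steps only if $\alpha = 2\beta$.

$$r_3 = \frac{2\beta^2}{\alpha^2 - 4\alpha\beta + 5\beta^2} \cdot \frac{2(2\beta - \alpha)^2}{5\alpha^2 - 16\alpha\beta + 13\beta^2} \cdot \frac{2(2\alpha - 3\beta)^2}{13\alpha^2 - 36\alpha\beta + 25\beta^2} \begin{pmatrix} 3\beta - 2\alpha \\ 4\beta - 3\alpha \end{pmatrix}.$$

Exact convergence in three steps only if $\alpha = \frac{3}{2}\beta$.

$$r_4 = \frac{2\beta^2}{\alpha^2 - 4\alpha\beta + 5\beta^2} \cdot \frac{2(2\beta - \alpha)^2}{5\alpha^2 - 16\alpha\beta + 13\beta^2} \cdot \frac{2(2\alpha - 3\beta)^2}{13\alpha^2 - 36\alpha\beta + 25\beta^2} \cdot \frac{2(3\alpha - 4\beta)^2}{25\alpha^2 - 64\alpha\beta + 41\beta^2} \begin{pmatrix} 4\beta - 3\alpha \\ 5\beta - 4\alpha \end{pmatrix}.$$

Exact convergence in four steps only if $\alpha = \frac{4}{3}\beta$.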

Restrict Attention to the Case β = 1

If β = 0, convergence in a single iteration. Otherwise, scale the problem to have β = 1.

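$$A = \begin{pmatrix} 1 & -2 \\ 0 & 1 \end{pmatrix}, \qquad r_0 = \begin{pmatrix} \alpha \\ 1 \end{pmatrix}.$$

[Figure: $\log_{10}(\|r_{100}\|/\|r_0\|)$ as a function of $\alpha$ (horizontal axis) and $\beta$ (vertical axis).]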

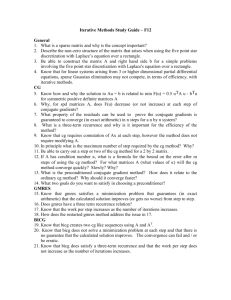

Residual Norm Behavior for a Few Iterations

[Figure: $\|r_k\|/\|r_0\|$ as a function of $\alpha$ (for $\alpha$ between 1 and 3), one panel each for $k$ = 1, 2, 3, 5, 10, 20, 25, 50, and 100 iterations. The vertical axis runs from 0 to 1 for $k \le 3$ and from 0 to 0.2 for $k \ge 5$.]

Analysis for β = 1 (with John Sabino)

For this simple matrix, the GMRES(1) iteration

$$r_{k+1} = r_k - \frac{r_k^T A r_k}{r_k^T A^T A r_k} A r_k$$

can be written down as:

$$r_k = \mu_1 \mu_2 \mu_3 \cdots \mu_k \begin{pmatrix} k - (k-1)\alpha \\ (k+1) - k\alpha \end{pmatrix},$$

where

$$\mu_j = \frac{2((j-1)\alpha - j)^2}{(1 - 2j + 2j^2)\alpha^2 - 4j^2\alpha + (1 + 2j + 2j^2)}.$$

Exact convergence only occurs when some $\mu_j = 0$:

$$\mu_j = 0 \iff (j-1)\alpha - j = 0 \iff \alpha = \frac{j}{j-1} \quad \text{for } j > 1.$$

Thus, the exactly convergent residuals take the form:

$$r_0 = \begin{pmatrix} \frac{j+1}{j} \\ 1 \end{pmatrix}$$

for positive integers $j$.
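The closed form for $r_k$ can be compared directly with the iteration it summarizes. The sketch below runs GMRES(1) on $A = \begin{pmatrix} 1 & -2 \\ 0 & 1 \end{pmatrix}$ with $r_0 = (\alpha, 1)^T$ in exact rational arithmetic and evaluates the product $\mu_1 \cdots \mu_k$ alongside it. The function names (`gmres1_step`, `mu`, `closed_form`) and the sample values of $\alpha$ are chosen for illustration only.

```python
from fractions import Fraction

def gmres1_step(r):
    """One GMRES(1) step for A = [[1, -2], [0, 1]]."""
    a, b = r
    Ar = (a - 2 * b, b)
    denom = Ar[0] ** 2 + Ar[1] ** 2
    if denom == 0:
        return r
    c = (a * Ar[0] + b * Ar[1]) / denom
    return (a - c * Ar[0], b - c * Ar[1])

def mu(j, alpha):
    num = 2 * ((j - 1) * alpha - j) ** 2
    den = (1 - 2 * j + 2 * j * j) * alpha ** 2 - 4 * j * j * alpha + (1 + 2 * j + 2 * j * j)
    return num / den

def closed_form(k, alpha):
    """mu_1 * ... * mu_k times the vector (k - (k-1)*alpha, (k+1) - k*alpha)."""
    scale = Fraction(1)
    for j in range(1, k + 1):
        scale *= mu(j, alpha)
    return (scale * (k - (k - 1) * alpha), scale * ((k + 1) - k * alpha))

def agrees(alpha, steps=6):
    r = (alpha, Fraction(1))
    for k in range(1, steps + 1):
        r = gmres1_step(r)
        if r != closed_form(k, alpha):
            return False
    return True

sample_alphas = [Fraction(5, 2), Fraction(3, 2), Fraction(7, 5), Fraction(-1)]
all_agree = all(agrees(alpha) for alpha in sample_alphas)
```

The value $\alpha = 3/2$ makes $\mu_3 = 0$, so both the iteration and the closed form return the zero vector from the third step on.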

Analysis for β = 1

Another way to see the convergence of these residuals is to note that if we have

$$r_0 = \begin{pmatrix} \frac{j+1}{j} \\ 1 \end{pmatrix},$$

then

$$r_1 = \frac{2j^2 - 2j}{2j^2 - 2j + 1} \begin{pmatrix} \frac{j}{j-1} \\ 1 \end{pmatrix}.$$

So GMRES(1) preserves the form of the residual; it just reduces the value of $j$.

Since $r_0 = \begin{pmatrix} 2 \\ 1 \end{pmatrix}$ converges in two iterations, we have exact convergence for all $j$ by induction.

We can use a similar tactic to investigate convergence of

$$r_0 = \begin{pmatrix} \frac{2j+1}{2j-1} \\ 1 \end{pmatrix}.$$

Stagnation Analysis for Special Residuals

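One step of GMRES(1) applied to the initial residual

$$r_0 = \begin{pmatrix} \frac{2j+1}{2j-1} \\ 1 \end{pmatrix}$$

yields

$$r_1 = \frac{(2j-1)(2j-3)}{4j^2 - 8j + 5} \begin{pmatrix} \frac{2(j-1)+1}{2(j-1)-1} \\ 1 \end{pmatrix}.$$

Again, GMRES(1) preserves the form of the residual; it just reduces the value of $j$.

Note that $(2j-1)(2j-3) \ne 0$ for all integer values of $j$ $\Longrightarrow$ no exact convergence!

As a base case for induction, take $j = 0$:

$$r_k = \left( \prod_{\ell=0}^{k-1} \gamma_\ell \right) \begin{pmatrix} \frac{2k-1}{2k+1} \\ 1 \end{pmatrix}, \qquad \text{where} \quad \gamma_\ell = 1 - \frac{2}{4\ell^2 + 8\ell + 5}.$$

Bound $\prod_{\ell=0}^{\infty} \gamma_\ell$ using

$$\frac{\sin z}{z} = \prod_{\ell=1}^{\infty} \left( 1 - \frac{z^2}{\ell^2 \pi^2} \right).$$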
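One way to see what the sine product gives here (a side calculation of ours, not stated on the slide): writing $n = \ell + 1$, each factor is $\gamma_\ell = \frac{4n^2 - 1}{4n^2 + 1} = \frac{1 - 1/(4n^2)}{1 + 1/(4n^2)}$. Taking $z = \pi/2$ in the product formula evaluates the numerators to $2/\pi$, and taking $z = i\pi/2$ evaluates the denominators to $\sinh(\pi/2)/(\pi/2)$, which suggests $\prod_{\ell \ge 0} \gamma_\ell = 1/\sinh(\pi/2) \approx 0.4345$. The sketch below compares a partial product with that value; the number of terms is arbitrary.

```python
import math

def gamma(l):
    """Factor gamma_l = 1 - 2 / (4 l^2 + 8 l + 5)."""
    return 1.0 - 2.0 / (4 * l * l + 8 * l + 5)

def partial_product(n_terms):
    p = 1.0
    for l in range(n_terms):
        p *= gamma(l)
    return p

limit = 1.0 / math.sinh(math.pi / 2)   # value suggested by the sine product
approx = partial_product(5000)
```

Every factor lies strictly between 0 and 1, so the partial products decrease toward the limit from above, and the infinite product is bounded away from zero.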

Interlacing of Convergent and Stagnant Residuals

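$$A = \begin{pmatrix} 1 & -2 \\ 0 & 1 \end{pmatrix}, \qquad r_0 = \begin{pmatrix} \alpha \\ 1 \end{pmatrix}.$$

Proposition. Let $j$ be a positive integer.

If $\alpha = (j + 1)/j$, then GMRES(1) converges exactly at iteration $j + 1$.

If $\alpha = (2j + 1)/(2j - 1)$, then GMRES(1) stagnates; in particular:

$$\|r_k\| \ge \frac{4\sqrt{2}}{15\pi^2}$$

for all positive integers $k$.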
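Both halves of the proposition can be spot-checked in exact arithmetic for small $j$. The sketch below is such a check, not a proof; the helper names (`first_convergence`, `min_norm`), the range $j = 1, \ldots, 6$, and the 50-step horizon are arbitrary choices.

```python
from fractions import Fraction
import math

def gmres1_step(r):
    """One GMRES(1) step for A = [[1, -2], [0, 1]]."""
    a, b = r
    Ar = (a - 2 * b, b)
    denom = Ar[0] ** 2 + Ar[1] ** 2
    if denom == 0:
        return r
    c = (a * Ar[0] + b * Ar[1]) / denom
    return (a - c * Ar[0], b - c * Ar[1])

def first_convergence(alpha, max_steps=50):
    """Smallest k with r_k = 0 exactly, or None if none up to max_steps."""
    r = (Fraction(alpha), Fraction(1))
    for k in range(1, max_steps + 1):
        r = gmres1_step(r)
        if r == (0, 0):
            return k
    return None

def min_norm(alpha, steps=50):
    """Smallest ||r_k|| over k = 1, ..., steps."""
    r = (Fraction(alpha), Fraction(1))
    smallest = float("inf")
    for _ in range(steps):
        r = gmres1_step(r)
        smallest = min(smallest, math.sqrt(float(r[0] ** 2 + r[1] ** 2)))
    return smallest

bound = 4 * math.sqrt(2) / (15 * math.pi ** 2)

convergent = {j: first_convergence(Fraction(j + 1, j)) for j in range(1, 7)}
stagnant = {j: (first_convergence(Fraction(2 * j + 1, 2 * j - 1)),
                min_norm(Fraction(2 * j + 1, 2 * j - 1)))
            for j in range(1, 7)}
```

For each `j`, `convergent[j]` should equal `j + 1`, while `stagnant[j]` should pair `None` with a minimum norm above `bound`.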

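[Figure: $\|r_{10}\|$ as a function of $\alpha$, with arrows marking the interlaced values $\alpha = \frac{11}{9}, \frac{5}{4}, \frac{9}{7}, \frac{4}{3}, \frac{7}{5}, \frac{3}{2}, \frac{5}{3}, 2$.]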

Trajectories of Convergent and Stagnant Residuals

[Figure: two panels showing residual trajectories in the plane for $j = 1, \ldots, 10$. Convergent: $r_0 = \begin{pmatrix} \frac{j+1}{j} \\ 1 \end{pmatrix}$. Stagnant: $r_0 = \begin{pmatrix} \frac{2j+1}{2j-1} \\ 1 \end{pmatrix}$.]

Exactly Convergent Initial Residuals

[Figure: the $(\alpha, \beta)$ plane, both axes from −3 to 3.]

Blue lines in the plot on the right indicate all exactly convergent residuals in the (α, β) plane.

For this example, the convergent initial residuals appear to form a set of measure zero

in the (α, β) space. This is apparently in contrast to our 3-by-3 examples.

Numerical Range and Elman’s Bound

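$$A = \begin{pmatrix} 1 & -2 \\ 0 & 1 \end{pmatrix}$$

Numerical Range:

$$W(A) = \{x^* A x : \|x\| = 1\}.$$

[Figure: $W(A)$ in the complex plane (real axis from −1 to 3, imaginary axis from −1.5 to 1.5).]

Note that if we replace the $-2$ by any number in $(-2, 2)$, then $0 \notin W(A)$.

If $0 \notin W(A)$, then the bound of Elman [1982] (see [Greenbaum 1997]) guarantees convergence:

$$\frac{\|r_{k+1}\|}{\|r_k\|} \le \sqrt{1 - \frac{d^2}{\|A\|^2}},$$

where $d$ is the distance of $W(A)$ from the origin.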
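Elman's bound can be tried out on the perturbed matrix $A = \begin{pmatrix} 1 & c \\ 0 & 1 \end{pmatrix}$ with $|c| < 2$. The sketch below relies on two standard facts that are not on the slide: $W(A)$ for this matrix is the closed disk of radius $|c|/2$ centered at 1, so $d = 1 - |c|/2$, and $\|A\|^2$ is the largest eigenvalue of $A^TA = \begin{pmatrix} 1 & c \\ c & 1 + c^2 \end{pmatrix}$. The value $c = -1$ and the starting residual are arbitrary.

```python
import math

def numerical_range_distance(c):
    """Distance from 0 to W(A) for A = [[1, c], [0, 1]] (disk of radius |c|/2 about 1)."""
    return max(0.0, 1.0 - abs(c) / 2.0)

def spectral_norm(c):
    """Largest singular value of A = [[1, c], [0, 1]]."""
    t = 2.0 + c * c   # trace of A^T A; its determinant is 1
    return math.sqrt((t + math.sqrt(t * t - 4.0)) / 2.0)

def gmres1_ratios(c, r, steps):
    """Ratios ||r_{k+1}|| / ||r_k|| for GMRES(1) applied to A = [[1, c], [0, 1]]."""
    ratios = []
    for _ in range(steps):
        Ar = (r[0] + c * r[1], r[1])
        denom = Ar[0] ** 2 + Ar[1] ** 2
        if denom == 0.0:
            break
        a = (r[0] * Ar[0] + r[1] * Ar[1]) / denom
        new = (r[0] - a * Ar[0], r[1] - a * Ar[1])
        ratios.append(math.hypot(*new) / math.hypot(*r))
        r = new
    return ratios

c = -1.0
d = numerical_range_distance(c)
elman_factor = math.sqrt(1.0 - d * d / spectral_norm(c) ** 2)
ratios = gmres1_ratios(c, (0.3, 1.0), 15)
```

For $c = -2$ the disk touches the origin, so $d = 0$ and the factor equals 1: the bound then guarantees nothing, which is consistent with the stagnation observed for the original matrix.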

Conclusions and Future Directions

• Smaller restarts can dramatically outperform larger restarts.

• Modest changes in starting vectors can dramatically affect convergence.

• Descriptive analysis of Restarted GMRES must incorporate the starting vector.

• How widespread is this sensitive dependence for larger problems, larger restarts?

• Does this phenomenon arise in popular algorithms like QMR and BiCGSTAB?

— Recall that BiCGSTAB incorporates GMRES(1).

• How does an augmented basis method affect this behavior?

• Analogous behavior occurs for restarted Arnoldi eigenvalue computations with exact shifts.

Technical Report available:

“The Tortoise and the Hare Restart GMRES”

Oxford University Numerical Analysis Group Report 01/22

November 2001.