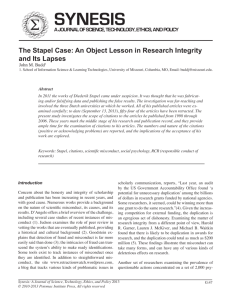

Flawed science: The fraudulent research practices of social psychologist Diederik Stapel
