Detecting ECG Abnormalities via Transductive Transfer Learning Kang Li Nan Du

advertisement

Detecting ECG Abnormalities via Transductive Transfer

Learning

Kang Li

Department of Computer

Science and Engineering

SUNY at Buffalo

Buffalo, 14260, U.S.A.

kli22@buffalo.edu

Nan Du

Department of Computer

Science and Engineering

SUNY at Buffalo

Buffalo, 14260, U.S.A.

nandu@buffalo.edu

Aidong Zhang

Department of Computer

Science and Engineering

SUNY at Buffalo

Buffalo, 14260, U.S.A.

azhang@buffalo.edu

ABSTRACT

Detecting Electrocardiogram (ECG) abnormalities is the process of identifying irregular cardiac activities which may lead

to severe heart damage or even sudden death. Due to the

rapid development of cyber-physic systems and health informatics, embedding the function of ECG abnormality detection to various devices for real time monitoring has attracted

more and more interest in the past few years. The existing machine learning and pattern recognition techniques developed for this purpose usually require sufficient labeled

training data for each user. However, obtaining such supervised information is difficult, which makes the proposed

ECG monitoring function unrealistic.

To tackle the problem, we take advantage of existing well

labeled ECG signals and propose a transductive transfer

learning framework for the detection of abnormalities in

ECG. In our model, unsupervised signals from target users

are classified with knowledge transferred from the supervised

source signals. In the experimental evaluation, we implemented our method on the MIT-BIH Arrhythmias Dataset

and compared it with both anomaly detection and transductive learning baseline approaches. Extensive experiments

show that our proposed algorithm remarkably outperforms

all the compared methods, proving the effectiveness of it in

detecting ECG abnormalities.

Categories and Subject Descriptors

I.5.3 [Pattern Recognition]: Clustering—algorithms; J.3

[Computer Applications]: Life and Medical Science—

health

General Terms

Algorithms, Performance

Keywords

ECG, anomaly detection, transductive learning

Permission to make digital or hard copies of all or part of this work for

personal or classroom use is granted without fee provided that copies are

not made or distributed for profit or commercial advantage and that copies

bear this notice and the full citation on the first page. To copy otherwise, to

republish, to post on servers or to redistribute to lists, requires prior specific

permission and/or a fee.

ACM-BCB’12 October 7-10, 2012, Orlando, FL, USA

Copyright 2012 ACM 978-1-4503-1670-5/12/10 ...$15.00.

ACM-BCB 2012

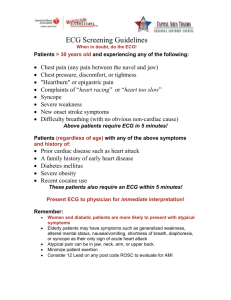

Figure 1: A Cyber-Physical System.

1.

INTRODUCTION

ECG is an important and commonly used interpretation

of cardiac electrical activities over time and can be used to

assess different heart related biological processes and diseases including cardiac arrhythmias [21], mental stress [24],

morbid obesity [5], etc. The collected ECG signals can be

naturally divided into sequenced instances, each of which is

an observed cardiac cycle (heart beat). In ECG signal monitoring, existing studies usually classify these instances into

two classes: normal and abnormal, which represent normal

state of the heart and abnormal biological events that may

lead to heart damage or even sudden death, respectively. An

automatic ECG abnormality detection approach is thus very

meaningful towards promptly responses of heart attacks and

a better understanding of the underlying biological mechanisms.

Due to the rapid development of health informatics and

cyber-physical systems, embedding the function of ECG abnormality detection into wearable devices for health monitoring and timely alarm has attracted more and more interests during the past few years. Such a typical system

usually contains electro-nodes attached to human bodies,

carry-on devices such as smart phones for signal collection,

and a remote database for data management and analysis,

as presented in Figure 1. One of the major factors limiting the popular usage of these cyber-physical systems is

that the existing machine learning and pattern recognition

techniques developed for ECG abnormality detection usually require sufficient labeled training data for each user to

make the model user-specified and accurate. However, for

ECG signals, such supervised information is very difficult to

obtain without professional knowledge and a lot of effort.

Moreover, since abnormal instances are usually much less

than normal ones, supervised methods require more data in

210

these cases to capture enough variability on data distributions, which makes the labels expensive to obtain.

To solve the problem, we take advantage of existing well

labeled ECG signals and use them as sources into a novel

transductive transfer learning framework for ECG abnormality detection. We propose to unsupervisedly learn ECG

signals from target patients with knowledge transferred from

these supervised sources. In detail, knowledge transferring is

achieved by the Kernel Mean Matching (KMM) [8] method,

in which source instances are weighted to match the distribution of target instances in a reproducing kernel Hilbert

space (RKHS). A novel weighted transductive one class support vector machine (WTOSVM) as well as a labeling process are then proposed to discriminatively classify instances

in the target.

The rest of this paper is organized as follows: we will

briefly discuss the related work of this topic in Section 2;

after giving out the notation used in this paper, we present

the theoretical work of our method in Section 3; the experiments and corresponding analysis are shown in Section 4;

and finally we come to the conclusions and perspectives in

Section 5.

2.

RELATED WORK

As a classical problem in machine learning and pattern

recognition, ECG abnormality detection can be categorized

into the areas of anomaly detection and imbalanced learning,

due to the imbalance between numbers of instances of normal and abnormal classes in the ECG data. Similar datasets

exist in a lot of research and applications, such as text mining [28], video indexing [27] and network security [7]. In such

datasets, one or more classes have significantly less instances

than the others, which conflicts with the assumption made

by regular machine learning methods that each class should

be equal or nearly equal in size. Failing to cover the distributions of imbalanced datasets by traditional methods invokes

general interest of developing new algorithms for them. The

developed methods are further applied to many relevant areas such as noise filtering [1], intrusion detection [9] and

innovation detection [19].

To solve the problem, several solutions have been proposed at both data and algorithmic levels. The former one

includes many different re-sampling methods favoring rebalancing class distributions, such as random under-sampling

of majority instances [15] and synthetic minority oversampling technique (SMOTE) [4]. The algorithmic approaches

usually give higher weights to minority instances in costsensitive and discriminative methods, aiming at rebalancing

the biased decision boundaries [6, 3]. These popular approaches always require supervised information to guide the

rebalancing process, which is expensive to obtain, as explained in the Introduction.

Several methods have been proposed for unsupervised learning on imbalanced datasets. One class support vector machine (OCSVM) [26] adapts the support vector machine

(SVM) algorithm to bound data into a circle while minimizing the diameter of it. Kernel nearest neighbors (KNN)

[23] assumes that majority instances are dense and minorities are sparse, then classify them according to their kernel distances. [10] makes another assumption that each

minority instance should either not belong to any cluster

or be far from its cluster center, and presents a clustering

based method for detecting local outliers. Other unsuper-

ACM-BCB 2012

vised methods could be generally divided into these three

categories.

The main challenge in unsupervised learning on imbalanced ECG datasets is how to uncover the real distribution

of each class without any labeled information. Inspired by

the success of transfer learning on many areas, we propose

a transductive transfer learning algorithm for ECG abnormality detection. Instead of assuming the distributions of

normal and abnormal instances, we classify each target with

assistance of a well labeled source, which fits our common

experience in real life, such as diagnosing a disease on a target patient with cases of similar patients having the same

disease.

The formulated problem in this paper is related to transfer learning, which is the process of transferring information

from a source domain to help learning in a target domain. It

is commonly used when sources and targets are drawn from

the same feature space. Specifically, transductive transfer

learning is the process of knowledge transferring when only

labeled sources and unlabeled targets are available. It has

been successfully implemented in various areas [22, 2]. The

major difficulty in knowledge transferring is that source and

target data are not likely to be drawn from the same distribution, thus directly estimating target data through source

will cause covariance shift and is not applicable in most

cases. To address the problem, we follow the framework proposed in [8] which matches the kernel means of the source

and the target in an RKHS for knowledge transferring.

The main contributions of this paper are:

• We propose a novel anomaly detection algorithm to solve

the problem of detecting irregular events in the collected

ECG signals.

• We investigate the problem from the transductive transfer

learning point of view. Since our model is general, it can

be further expanded to transductive learning on imbalanced

datasets in other areas.

• In the experimental study of our method on the MITBIH Arrhythmias Dataset [18], our model outperforms all

the other baseline methods for ECG abnormality detection

and achieves 25% to 60% performance improvement than

the baselines, which demonstrates the effectiveness and superority of our proposed algorithm.

3.

METHOD

Notice that the preprocessing method of dividing ECG

signals into instances (heart beats) is not the focus of this

paper. Instead, we aim at unsupervised recognition of the

preprocessed instances. Before diving into the details of our

framework, let’s first define the notation used in the following sections. Suppose for a target user/patient, we obtain

an ECG signal including t instances T = {xi | i ∈ [1, t]} to

be classified into normal and abnormal classes. A selected

source labeled signal S = {xˆi | i ∈ [1, s]} will be used to help

the learning process of T . For simplicity here, we assume

that xi and xˆi are drawn from the same feature space and

the label vector for the source is Ŷ = {yˆi |yˆi ∈ {−1, +1}, i ∈

[1, s]}, where −1 represents majority (normal) instances and

+1 represents minority (abnormal) instances, respectively.

The aimed problem in this paper can be expressed as:

given a target imbalanced dataset T , a source dataset S

and its labels Ŷ , learn a mapping f = T → Y , in which

Y = {yi |yi ∈ {−1, 1}, i ∈ [1, t]}.

211

to observations that are under-represented in Pˆr and lower

weights to over-represented instances. The estimation of

β(x, y) thus does not require any supervised information in

both the source and the target.

According to Equation 2, knowledge transferring from a

source to a target is accomplished by estimating the target

data distribution P r(x) by β(x)Pˆr(x). The idea of KMM

in learning the β vector is to minimize the discrepancy between the centers of instances in the target and the weighted

instances

in the source in an RKHS. Moreover, a constraint

| 1s si=1 βi − 1| ≤ is enforced to ensure that β(x)Pˆr(x) is

close to a probability distribution. The objective function

for KMM is then expressed as:

140

Dataset

Dataset

Dataset

Dataset

120

100

1:

1:

2:

2:

Normal

Abnormal

Normal

Abnormal

Dimension #2

80

60

40

20

0

−20

−40

0

50

100

150

200

Dimension #1

250

300

350

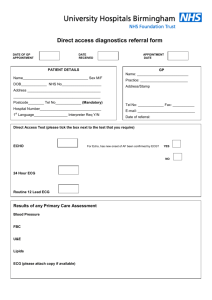

Figure 2: Distributions of two imbalanced datasets.

3.1

β = arg min Ex∼P r [ϕ] − Ex∼Pˆr [βϕ]2

Knowledge Transferring

= arg min Our aim is to classify instances in an unsupervised target with assistance of labeled instances in a source. However, directly classifying data in the target by using models

trained on the source is not applicable, because the source

and the target are not independently and identically drawn

(iid ) from the same distribution. As shown in Figure 2,

in a two dimensional space, two imbalanced datasets 1 and

2 are presented in different shapes, and abnormal instances

of them are marked in red. If we directly classify data in

dataset 2 by classifiers learned on dataset 1, most normal

instances will be labeled as abnormal due to the different

distributions of these two datasets.

To enable knowledge transferring between datasets with

different distributions, we use the KMM method [8]. Suppose we obtain the target samples Z = {(x1 , y1 ), . . . , (xt , yt )} ⊆

X × Y from a Borel probability distribution P r(x, y), and

the source samples Ẑ = {(xˆ1 , yˆ1 ), . . . , (xˆs , yˆs )} ⊆ X̂ × Ŷ

from another such distribution Pˆr(x, y).

A learning method on sources generally minimizes the expected risk:

θ = arg min R[Pˆr, θ, l(x, y, θ)] = arg min E(x,y)∼Pˆr [l(x, y, θ)],

(1)

where l(x, y, θ) is a loss function that depends on a parameter vector θ, such as negative log-likelihood − log P r(y|x, θ)

and misclassification loss; E is the expectation; and R is the

over all loss that we wish to minimize. Since P r(x, y) and

Pˆr(x, y) are different, what we would really like to minimize

is R[P r, θ, l]. An observation from the field of importance

sampling [11] is that

θ = arg min R[P r, θ, l(x, y, θ)]

= arg min E(x, y)∼P r [l(x, y, θ)]

= arg min E(x,

y)∼Pˆr [

P r(x, y)

l(x, y, θ)]

Pˆr(x, y)

(2)

= arg min R[Pˆr, θ, β(x, y)l(x, y, θ)],

P r(x, y)

.

Pˆr(x, y)

Through the function, we can

compute the risk with respect to P r using Pˆr. But the

key problem is that the coefficient vector β(x, y) is usually

unknown, and we need to estimate it from the data. In our

context, since P r and Pˆr differ only in P r(x) and Pˆr(x), we

P r(x)

have β(x, y) = P

ˆr(x) . β then can be viewed as a weighting

factor for the source instances and it gives higher weights

where β(x, y) =

ACM-BCB 2012

s

t

1

1

βi ϕ(xˆi ) −

ϕ(xi ) 2

s i=1

t i=1

= arg min

(3)

1 T

2

β Kβ − K̂ T β + const,

s2

st

where ϕ is a mapping function, which maps data of the

source

t and the target into an RKHS; Kij = k(xˆi , xˆj ); K̂i =

1

ˆi , xj ); and const represents an unknown constant

j=1 k(x

t

which could be ignored in the optimization process. The

function k could be an arbitrary kernel function specified

according to the aimed task. In our experiments, we set k

to be the commonly used Gaussian Kernel, which is

k(xi , xj ) =

3.2

(xi − xj )T (xi − xj )

1

exp[−

].

2πσ 2

2σ 2

(4)

Weighted Transductive One Class SVM

OCSVM [26], which bounds data in a circle while minimizing the diameter of the boundary, has been successful used

in many imbalanced datasets. However, it is not applicable

to directly use it in our transductive transfer learning framework. The major difficulty is that each instance is weighted

by β for knowledge transferring. To solve this, we propose

a variant objective function called Weighted Transductive

OCSVM (WTOSVM) which can be expressed as:

min R2 +

2

s

1 ξi ,

sγ i=1

(5)

2

s.t. βi ϕi − O ≤ R + ξi , ξi ≥ 0,

where R is the diameter of the decision boundary; O is the

center of the bounding circle; ξi is the slack variable, which

measures the degree of misclassification of data xˆi ; and γ is

a measurement for the trade-off between a small diameter

of the boundary and a small error penalty.

Using multipliers αi , ζi ≥ 0, we introduce the Lagrangian

as:

L(R, γ, β, ξ, α, ζ) = R2 +

s

i=1

s

1 ξi +

sγ i=1

αi [ βi ϕi − O 2 −R2 − ξi ] −

s

(6)

ζi ξi .

i=1

Set the derivatives with respect to the primal variables R,

212

ξ and O to be zero, yielding:

dL

= 2R(1 −

dR

s

⇒

αi ) = 0

i=1

s

αi = 1,

i=1

dL

1

=

− α i − ζi = 0

dξi

sγ

s

dL

αi (βi ϕi − O) = 0

= −2

dO

i=1

⇒ 0 ≤ αi ≤

⇒O=

s

1

,

sγ

α i β i ϕi .

i=1

(7)

Substituting Equation 7 into Equation 5, and using kernel

function method:

K(x, y) = (ϕ(x) · ϕ(y)),

(8)

we then obtain the dual problem as:

min

s

2

αi βi K(xˆi , xˆi ) −

s s

i=1

αi αj βi βj K(xˆi , xˆj )

i=1 j=1

s

1 αi = 1, 0 ≤ γ ≤ 1.

,

s. t. 0 ≤ αi ≤

sγ i=1

(9)

The optimal value of αi and the corresponding center of

the decision boundary are determined by solving the quadratic

programming (QP) problem in (9). There are many off-shelf

QP solvers which are convenient for such tasks. In our case,

we take advantage of the Optimization Toolbox in MATLAB

to solve the objective function of WTOSVM. The distances

between weighted instances in the source and the center of

the round boundary are:

(10)

DS(xˆi ) = βi ϕ(xˆi ) − O2 .

3.3

Labeling Process

After transferring knowledge from a source to a target

via KMM method, the weighted supervised instances in the

source can then participate in the process of learning on the

unsupervised target. The crucial problems are how to use

the supervised information provided by the weighted source

instances and how to discriminate abnormal instances in the

target. Through the WTOSVM, weighted instances in the

source are re-distributed into a circle and a round decision

boundary is learned. However, labeling instances in the target using the trained decision boundary of the source is impractical for mainly two reasons. First, distances calculated

through parameters trained for the source will be comparatively larger for data in the target because of the discrepancy existing between the distributions of P r and β Pˆr, even

if they are perfectly matched in the kernel means. Second,

labeling data solely by WTOSVM actually adopts an assumption in OCSVM that minorities are sparse and majorities are dense, which may work well if the assumption is met

and cause high bias otherwise.

Notice that in WTOSVM, distance from each weighted

instance to the center is calculated, and the instances with

larger distances are supposed to be abnormal with higher

probability and vice versa. Since there is supervised information of the source, we can estimate the probability distribution of normal instances over different distances as:

s

· yˆi ) · sgn(DS(xˆi ) − d)]

i=1 [sgn(−1

s

,

(11)

pn (d) =

ˆi )

i=1 sgn(−1 · y

ACM-BCB 2012

where pn (d) measures the probability that an instance is

normal given that its distance to the center is d; sgn(x) = 1

if x > 0 and sgn(x) = 0 otherwise. In the same way, we

estimate the probability distribution of abnormal instances

over different distances as:

s

yˆi ) · sgn(d − DS(xˆi ))]

i=1 [sgn(

s

,

(12)

pa (d) =

ˆi )

i=1 sgn(y

where pa (d) measures the probability that an instance is

abnormal given that its distance to the center is d. Using

OCSVM, we can re-distribute instances in the target in the

same way as the source trained by WTOSVM, and the distances between instances in the target dataset and the center

in the round boundary are:

(13)

DT (xi ) = ϕi − OT 2 ,

in which OT is the center of target instances determined by

OCSVM. Our idea of discriminating them is using the distance to calculate the probabilities for normal and abnormal

states, according to the statistically estimated probability

distributions pn and pa in the source. The decision making

process is:

−1 if pn (DT (xi )) > pa (DT (xi )),

yi =

(14)

+1 otherwise.

Our algorithm of transductive transfer learning on imbalanced datasets is then summarized in Algorithm 1. Notice

that this framework is for transductive transfer learning using a single source.

Algorithm 1: ECG Abnormality Detection

via Transductive Transfer Learning

Input: A supervised source S and an unsupervised

target T , a subset Nt ∈ T , kernel covariance σ

and trade-off parameter γ.

Output: The label vector Y for instances in T

1. use subset Nt to weight instances in S through

KMM and obtain weighting vector β.

2. implement WTOSVM on βS to calculate DS, pn

and pa .

3. use OCSVM on T to obtain DT .

4. for i = 1 to t do:

5.

if pn (DT (xi )) > pa (DT (xi )):

6.

yi = −1;

7.

else:

8.

yi = +1;

9.

end if

10. end for.

11. return: Y = {yi }ti=1 .

3.4

Source Selection

Given the above framework for transductive learning on

a target ECG signal with a single source, a noticeable unsolved problem is how to take advantage of different sources

for each target. Considering each source as a decision maker,

then for data in the target, ideally we could combine opinions

from all the sources and make a better decision. However,

it is not the case for our problem because no supervised information is provided for the target. Existing unsupervised

multiple sources ensemble approaches can be divided into

two categories: unweighted and weighted combination. The

former equals the weights of opinions from different decision

makers, ignoring the fact that they should have different

213

suitability and reliability for the same target, causing a lot

of bias and driving down the overall performance; the latter

always assumes that opinions from majority sources should

be close to each other, which does not hold in our cases due

to the difficulty in handling data imbalance. These ensemble

methods will then introduce much randomness into the decision making process. To avoid this and to refrain distraction

of this paper, we will focus on selecting the most reasonable

source for each target, and we present a straight-forward

approach for it based on cross-validation.

In detail, suppose we are going to choose one optimal

source from n well labeled candidate datasets S1 , S2 , S3 , ..., Sn ,

we iteratively set each of them to be the source and other

candidates to be the targets. The source which has the

highest accumulated performance is set to be the optimal

source for an aimed target. The underlying assumption of

this strategy is that a source consistently performs well on

other targets will be a reasonable source for the aimed target. In the experiment, we will experimentally verify this

assumption and show that the simple source selection strategy can obtain results well fitting the highest performance of

each target and outperforms comparative methods in most

cases. Moreover, this source selection framework can be executed beforehand and easily implemented in a parallel way,

which highly decreases the computing time.

4.

EXPERIMENTS

In this section, we demonstrate the effectiveness of the

transductive learning based ECG abnormality detection framework. The algorithm is evaluated on the MIT-BIH Arrhythmias Dataset [18], and compared to a set of baseline approaches. Results show that the proposed framework could

successfully transfer knowledge from sources to targets to

better identify irregular events in ECG signals with significant improvements. Beside these, the optimal source selection method is also experimentally tested. The results

fit the highest performance of each target well and outperform all those of the comparative methods, which verify the

practicality and superiority of our method. Finally, experiments on parameter sensitivity are performed to prove the

robustness of our model against various settings.

4.1

Dataset Description and Preprocessing

The MIT-BIH Arrhythmias Dataset [18] contains 48 half

hours excerpts of ECG signals, obtained from 47 subjects.

Each heart beat is labeled as normal or abnormal by clinical

experts. From it, we randomly picked 10 records, including

the following files: 100, 101, 103, 105, 109, 115, 121, 210, 215

and 232. The preprocessing and feature extraction of each

signal are implemented in a similar way to [17]. The percentage of abnormal instances in each preprocessed dataset

varies from 0.7% to 29.38% with only 9.09% on average,

which indicates these datasets are highly imbalanced.

4.2

Evaluation Metric

The overall accuracy on a test dataset is commonly used

in evaluating the performance of a classifier. However, for

imbalanced datasets, especially in severely imbalanced cases,

overall accuracies could be extremely high even if misclassifying all the minorities to the class of majority, thus alternative measurement is employed here. In the binary classification, we refer minority to be Positive and majority to be

Negative. After obtaining the numbers of four values: TP

ACM-BCB 2012

(True Positive), TN (True Negative), FP (False Positive)

and FN (False Negative), G-Mean [16] is calculated as:

TN

TP

•

.

(15)

G-M ean =

TP + FN TN + FP

The G-Mean is a combinatory measurement on accuracies of the two classes. The range of it is from 0 to 1, and

the higher it is the better the performance is in evaluation.

The reason why we use this measurement is that it is extremely sensitive towards classification errors on minority

instances in severely imbalanced dataset. In some ECG signals, there are only less than 10 abnormal heart beats and

several thousand normal ones, which means a model misclassifying only 1% of the data may have a G-Mean as low

as 0. For this evaluation metric, perfect recognition on most

instances does not guarantee a high outcome.

4.3

Baseline Approaches

We compare the transductive transfer learning based ECG

abnormality detection framework with a set of baseline approaches from both anomaly detection and transductive learning areas, including:

OCSVM [26]: Our model is built upon OCSVM, we

hereby set it as a comparative approach.

Local Distance Based Outlier Factor (LDOF) [29]:

LDOF is a local distance based and unsupervised anomaly

detection method. It defines a distance factor measuring

how much an object deviates from its neighborhood. Objects with higher deviation degree measured by the factor

are supposed to be more abnormal than others. In the implementation, the major difference of this approach to others

is that it requires an estimation of total number of abnormal instances (ECG heart beats). We set the number to be

9.09% of each target dataset, according to the actual average

percentage of minority instances in the datasets.

Clustering Based Outlier Detection (CBOD) [30]:

It is a clustering based unsupervised anomaly detection method.

It assumes that abnormal instances are either in small clusters or far from the centers in large clusters. Partitioning

Around Medoids (PAM) [14] and a defined distance based

factor are used in clustering and detecting outliers in large

clusters, respectively.

Transductive Support Vector Machine (TSVM) [13]:

TSVM is one of the most popular knowledge transferring

method in existing research. It builds the discriminative

structure based on the margin of separating hyperplanes on

both the source and the target data. In experiment, we

implemented it through the SVM light [12].

Modified Bisecting DBSCAN (BRSD-DB) [25]: It

is another transductive learning approach. It estimates the

data structure of both source and target through clustering, and aims to re-balance the distribution of the source

through uniformly drawing samples from each clusters. The

re-balanced source data could then be viewed as supervised

training dataset for the target. In experiment, we use SVM

for the final learning and classification goal.

4.4

Performance Study

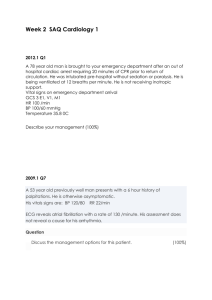

The results are summarized in Table 1, where we denote

the name of each dataset by Data Index and mark the highest G-Means over different methods in bold font. For each

target, when using transductive learning approaches including TSVM, BRSD-DB and WTOSVM, other datasets are

214

Data Index

100

101

103

105

109

115

121

210

215

232

OCSVM

0.8713

0.8609

0.8230

0.3343

0.9431

0.7191

0.6734

0.6293

0.6097

0.4665

LDOF

0.5190

0.8662

0.6753

0.4124

0.5331

0.7219

0.6255

0.4631

0.3267

0.2921

CBOD

0.9798

0.8770

0.7701

0.4722

0.9323

0.5248

0.6428

0.6857

0.5989

0.4259

TSVM

0.1715

0.5222

0.3927

0.6079

0.7068

0.4578

0.2671

0.4717

0.8638

0.9676

BRSD-DB

0.5941

0.6735

0.3534

0.5639

0.7269

0.5928

0.2646

0.6867

0.4541

0.9595

WTOSVM

0.9876

0.9444

0.8910

0.7291

0.9056

0.7590

0.7820

0.9063

0.9082

0.9754

Table 1: Compare our method to baselines on the MIT-BIH Arrhythmias Dataset.

ACM-BCB 2012

1

Using Best Sources

0.95

Using Optimal Sources

0.9

G−Mean

iteratively set to be the source and the highest G-Mean of

these results is presented in the table. The parameter settings will be explained in the section Parameter Sensitivity.

In the implementation, we ran our algorithm in MATLAB

using a PC with 3.17 GHz Intel Core 2 Duo processor and

4GB RAM. The average time of classifying an instance by

our algorithm is 0.0127 second, which only takes 1.59% of

the duration of a usual heart beat according to [20].

Since our framework is based on OCSVM, comparison

with it can best verify the effectiveness of our model. It can

be clearly seen that for 9 out of the 10 tasks, the proposed

ECG abnormality detection framework can significantly outperform the original OCSVM method and achieve improvement by 26.79%. The only exception is for Data 109, in

which our result is lower for 0.04. These facts indicate that

in most cases the proposed framework could transfer knowledge from sources into targets successfully, and these transferred knowledge does help in the classification of data in

the targets.

The other two anomaly detection methods also show much

lower performance than ours in most cases. Each of them

can get relatively high G-Means in several cases, and CBOD

can even achieve slightly higher than our method for data

109. However, these two methods show obviously unstable performance over different datasets. Such instability

also exists in TSVM and BRSD-DB. There are two explanations for it. One is due to the sensitivity of the evaluation metric G-Mean, which stresses the misclassification

on minority instances and exaggerates overall performance

variations. Another reason is that all the comparative methods are built based on their proposed assumptions. LDOF

assumes minorities are in ’sparse area’; CBOD makes assumption that abnormal instances are either in small clusters or far from cluster centers; TSVM assumes there are

shared hyperplanes for source and target; and BRSD-DB

assumes clustering could mine the shared data distributions

of sources and targets. Different from them, our method

only assumes that for a given target there are sources that

have close distributions with it, thus can produce more stable performance with enough candidate sources. According to this assumption, the relatively lower performance of

our method on the dataset 109 can be explained as there

is no investigated source having sufficiently close data distribution to it. In spite of this, the result of our method

shows significantly higher stability than those of the comparative methods, demonstrating the robustness of the proposed algorithm. On average, our method improves the

performance of the listed five baseline methods for 26.79%,

0.85

0.8

0.75

0.7

0.65

100

101

103

105

109

115

Data Index

121

210

215

232

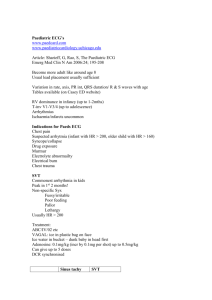

Figure 3: Cross-Validation Based Source Selection.

61.68%, 27.18%, 61.86% and 49.72%, respectively.

4.5

Source Selection Study

The experimental results of the cross-validation based source

selection method are shown in Figure 3, in which we denote

the selected sources as Optimal Sources and sources with

best performance as Best Sources. In the results, Optimal

Sources for 100, 103, 121 and 215 are also the Best Sources

for them, thus produce the highest possible G-Means in our

framework. In all the cases, the deviations of the G-Means

using Best and Optimal Sources are less than 0.03, and the

root-mean-square deviation (RMSD) is only 0.0146, proving

that the Optimal Sources well fit the Best Sources.

When compared with the listed baseline approaches, our

method using the Optimal Sources could still outperform

them in 8 out of the 10 ECG signals. For 109, the G-Means

is lowered to 0.8829 and lower than OCSVM and CBOD; and

for 232, the performance is reduced to 0.9531 thus slightly

lower than TSVM and BRSD-DB. However, notice that the

presented G-Means of TSVM and BRSD-DB are for the best

sources of the targets. On average, our method using the

selected optimal sources improves the performance of the

baseline methods for 25.44%, 59.95%, 25.82%, 60.13% and

48.11%, respectively.

4.6

Parameter Sensitivity

There are three parameters in the proposed algorithm,

including the subset N t of a target data which is involved

in the knowledge transferring process, kernel covariance σ

and trade-off parameter γ used in the objective. Traditional

way of setting parameters through experimenting on training data of each target is not applicable due to the fact

215

1

0.9

0.945

Source is Dataset 100 and Target is Dataset 101

0.8

0.7

Source is Dataset 100 and Target is Dataset 101

0.6

G−Mean

G−Mean

0.94

0.935

0.5

0.4

0.93

0.3

0.2

0.925

0.1

0.92

100

200

300

400

500

600

700

Size of the Subset Nt

800

900

1000

0

1100

0

0.1

0.2

0.3

0.4

0.5

0.6

Trade−off Parameter γ

0.7

0.8

0.9

1

Figure 4: Parameter Sensitivity of N t.

Figure 6: Parameter Sensitivity of γ.

that no supervised information exists in target ECG signals

during the learning process. So here, we show some experimental results on parameter sensitivity and give out some

basic principles in setting parameters. Without losing generality, we choose data 100 as the source and data 101 as

the target for the following experiments.

from 0.7 to 0.9. We could hereby consider 0.3 to 0.7 as a

reasonable range for γ and set it to be 0.5 in experiments in

Table 1.

For baseline approaches, we set parameters in the similar

way to the three above experiments. We could also notice

that in spite of the changes caused by parameter variations,

our proposed WTOSVM significantly outperforms the best

baseline method (LDOF when target is 101) in most of the

cases. This proves the robustness of our method against

various parameter settings.

1

0.98

Source is Dataset 100 and Target is Dataset 101

0.96

0.94

5.

G−Mean

0.92

0.9

0.88

0.86

0.84

0.82

0.8

40

60

80

100

Kernel Parameter σ

120

140

Figure 5: Parameter Sensitivity of σ.

The results on the sensitivity of N t is shown in Figure

4. We fixed σ = 100 and γ = 0.5, and then varied the size

of N t from 100 to 1011, where 1011 is the size of the target data 101. In each setting, if the size is K, then first K

instances in the data are set as the subset N t. The performance is relatively stable, with varance = 1.39×10−4 . This

suggests that the subset does not have significant impact on

the performance in these settings. However, to avoid possible randomness caused by insufficient learning instances

and overfitting caused in the opposite way when using other

sources and targets, we set N t to be the top 500 instances

of each target for the experiments shown in Table 1.

To test the sensitivity of σ, we fixed N t to be the top

100 instances and γ = 0.5, and then varied σ from 40 to

140 as shown in Figure 5. It is clearly evident that when

σ rises from 40 to 80, the G-Mean first reaches the lowest

point when σ = 50 then gradually improves. After the point

of 80, the performance stays almost stable until γ ≥ 120.

Therefore a reasonable setting for σ should be chosen from

[80, 120]. For Table 1, we set it to be 100.

In the similar way, we tested the sensitivity of parameter γ

and show it in Figure 6. When varying the value of it from

0.1 to 0.9, the performance of our framework first increases

to a turning point at γ = 0.3 and then keeps almost the same

to γ = 0.7. A hardly observable decrease happens at the end

ACM-BCB 2012

CONCLUSIONS AND PERSPECTIVES

Unsupervised ECG abnormality detection is an important

problem in health informatics and cyber-physical systems

that has not been well investigated. In this work, we take

advantage of well labeled ECG signals, and propose a transductive transfer learning framework to utilize these labeled

source data into the classification of target unsupervised

ECG signals.

The experimental results on the MIT-BIH Arrhythmias

Dataset show that the proposed method could outperform

both anomaly detection and transfer learning baseline models in most of the cases and improve significantly over each of

them for 25% to 60% on G-Mean. A cross-validation based

source selection framework is also presented. It could determine the optimal source for unsupervised learning on each

target, and the related experimental results could well fit the

highest G-Mean for each target and significantly outperform

baselines in most cases, demonstrating the practicality and

superiority of our framework. Moreover, we have also presented the sensitivity for each parameter used in the model,

and given out principles for their settings. In the future,

we plan to investigate ECG abnormality detection based on

transductive transfer learning using multiple sources.

6.

REFERENCES

[1] B. W. Andrews, T.-M. Yi, and P. A. Iglesias. Optimal

noise filtering in the chemotactic response of

escherichia coli. PLoS Computational Biology,

2(11):12, 2006.

[2] M. T. Bahadori, Y. Liu, and D. Zhang. Learning with

Minimum Supervision: A General Framework for

Transductive Transfer Learning. IEEE, 2011.

[3] X. Chang, Q. Zheng, and P. Lin. Cost-sensitive

supported vector learning to rank. Learning, pages

305–314, 2009.

216

[4] N. V. Chawla, K. W. Bowyer, L. O. Hall, and W. P.

Kegelmeyer. Smote: synthetic minority over-sampling

technique. Journal of Artificial Intelligence Research,

16(1):321–357, 2002.

[5] J. Domienik-Karlowicz, B. Lichodziejewska, W. Lisik,

M. Ciurzynski, P. Bienias, A. Chmura, and

P. Pruszczyk. Electrocardiographic criteria of left

ventricular hypertrophy in patients with morbid

obesity. EARSeL eProceedings, 10(3):1–8, 2011.

[6] P. Domingos. MetaCost: A General Method for

Making Classifiers Cost-Sensitive, volume 55, pages

155–164. ACM, 1999.

[7] E. Eskin, A. Arnold, M. Prerau, L. Portnoy, and

S. Stolfo. A Geometric Framework for Unsupervised

Anomaly Detection: Detecting Intrusions in Unlabeled

Data, volume 6, page 20. Kluwer, 2002.

[8] A. Gretton, A. J. Smola, J. Huang, M. Schmittfull,

K. M. Borgwardt, and B. Sch?lkopf. Covariate Shift by

Kernel Mean Matching, page 131ı̈£¡C160. MIT Press,

2009.

[9] M. Guimaraes and M. Murray. Overview of intrusion

detection and intrusion prevention. Proceedings of the

5th annual conference on Information security

curriculum development InfoSecCD 08, page 44, 2008.

[10] Z. He, X. Xu, and S. Deng. Discovering cluster-based

local outliers. Pattern Recognition Letters,

24(9-10):1641–1650, 2003.

[11] J. Huang, A. Smola, A. Gretton, K. Borgwardt, and

B. SchÃűlkopf. Correcting sample selection bias by

unlabeled data. Distribution, 19:601–608, 2006.

[12] T. Joachims. Making large-scale support vector

machine learning practical, pages 169–184. MIT Press,

Cambridge, MA, 1999.

[13] T. Joachims. Transductive inference for text

classification using support vector machines. Most,

pages 200–209, 1999.

[14] L. Kaufman and P. J. Rousseeuw. Finding Groups in

Data: An Introduction to Cluster Analysis, volume 39.

John Wiley Sons, 1990.

[15] S. B. Kotsiantis and P. E. Pintelas. Mixture of expert

agents for handling imbalanced data sets. Annals of

Mathematics Computing and Teleinformatics,

1(1):46–55, 2003.

[16] M. Kubat and S. Matwin. Addressing the curse of

imbalanced training sets: one-sided selection.

Training, pages 179–186, 1997.

[17] P. Li, K. Chan, S. Fu, and S. Krishnan. An abnormal

ecg beat detection approach for long-term monitoring

of heart patients based on hybrid kernel machine

ensemble. Biomedical Engineering, 3541/2005:346–355,

2005.

[18] G. B. Moody and R. G. Mark. The impact of the

mit-bih arrhythmia database. IEEE Engineering in

Medicine and Biology Magazine, 20(3):45–50, 2001.

[19] M. Nakatsuji, Y. Miyoshi, and Y. Otsuka. Innovation

detection based on user-interest ontology of blog

community. Innovation, 4273(1):9–11, 2006.

[20] P. Palatini. Resting heart rate. Heart, 33:622–625,

1999.

[21] D. Patra, M. K. Das, and S. Pradhan. Integration of

fcm , pca and neural networks for classification of ecg

ACM-BCB 2012

arrhythmias. International Journal, (February), 2010.

[22] B. Quanz and J. Huan. Large margin transductive

transfer learning. Proceeding of the 18th ACM

conference on Information and knowledge management

CIKM 09, page 1327, 2009.

[23] S. Ramaswamy, R. Rastogi, and K. Shim. Efficient

algorithms for mining outliers from large data sets.

ACM SIGMOD Record, 29(2):427–438, 2000.

[24] G. Ranganathan and K. College. Ecg signal processing

using dyadic wavelet for mental stress assessment.

Engineering, 2010.

[25] J. Ren, X. Shi, W. Fan, and P. S. Yu.

Type-independent correction of sample selection bias

via structural discovery and re-balancing, page

565âĂŞ576. Citeseer, 2008.

[26] B. SchÃűlkopf, J. C. Platt, J. S. Shawe-Taylor, A. J.

Smola, and R. C. Williamson. Estimating the support

of a high-dimensional distribution. Neural

Computation, 13(7):1443–1471, 2001.

[27] C. G. M. Snoek, M. Worring, J. C. Van Gemert, J.-M.

Geusebroek, and A. W. M. Smeulders. The challenge

problem for automated detection of 101 semantic

concepts in multimedia. Proceedings of the 14th

annual ACM international conference on Multimedia

MULTIMEDIA 06, pages 421–430, 2006.

[28] L. I. Xin-fu, Y. U. Yan, and Y. I. N. Peng. A New

Method of Text Categorization on Imbalanced

Datasets, pages 10–13. 2008.

[29] K. Zhang, M. Hutter, and H. Jin. A new local

distance-based outlier detection approach for scattered

real-world data. Advances in Knowledge Discovery and

Data Mining, 5476:813–822, 2009.

[30] B. A. Zoubi. An effective clustering-based approach

for outlier detection. European Journal of Scientific

Research, 28(2):310–316, 2009.

217