Sound, Mixtures, and Learning

Dan Ellis

<dpwe@ee.columbia.edu>

Laboratory for Recognition and Organization of Speech and Audio

(LabROSA)

Electrical Engineering, Columbia University

http://labrosa.ee.columbia.edu/

Outline

1 Auditory Scene Analysis

2 Speech Recognition & Mixtures

3 Fragment Recognition

4 Alarm Sound Detection

5 Future Work

NCARAI Seminar - Dan Ellis

2002-11-04 - 1/34

Auditory Scene Analysis

1

4000

frq/Hz

3000

0

2000

-20

1000

-40

0

0

2

4

6

8

10

12

time/s

-60

level / dB

Analysis

Voice (evil)

Voice (pleasant)

Stab

Rumble

Choir

Strings

•

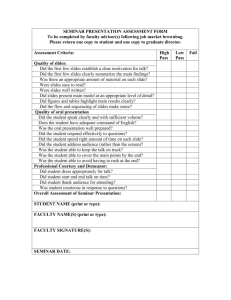

Auditory Scene Analysis: describing a complex

sound in terms of high-level sources/events

- ... like listeners do

•

Hearing is ecologically grounded

- reflects ‘natural scene’ properties

- subjective, not absolute

NCARAI Seminar - Dan Ellis

2002-11-04 - 2/34

Sound, mixtures, and learning

[Figure: three spectrograms (freq / kHz, 0-4, against time / s, 0-1): "Speech" + "Noise" = "Speech + Noise"]

• Sound

- carries useful information about the world

- complements vision

• Mixtures

- ... are the rule, not the exception

- medium is ‘transparent’, sources are many

- must be handled!

• Learning

- the ‘speech recognition’ lesson: let the data do the work

- like listeners

The problem with recognizing mixtures

“Imagine two narrow channels dug up from the edge of a lake, with handkerchiefs stretched across each one. Looking only at the motion of the handkerchiefs, you are to answer questions such as: How many boats are there on the lake and where are they?” (after Bregman ’90)

• Received waveform is a mixture

- two sensors, N signals ... underconstrained

• Disentangling mixtures as the primary goal?

- perfect solution is not possible

- need experience-based constraints
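The "two sensors, N signals" point can be checked numerically. The sketch below is only an illustration under an assumed instantaneous linear mixing model; the mixing matrix `A`, the source amplitudes, and the helper name `null_space_direction` are hypothetical and not taken from the talk. With 2 sensors and 3 sources, two different source vectors produce exactly the same sensor readings, so the sensors alone cannot tell them apart.

```python
import numpy as np

def null_space_direction(A):
    """Return a unit vector v with A @ v ~ 0 (exists when A has more columns than rows)."""
    _, _, vt = np.linalg.svd(A)   # full SVD: vt is (n_sources, n_sources)
    return vt[-1]                 # last right-singular vector spans the null space here

# Hypothetical mixing matrix: 2 sensors (rows), 3 sources (columns).
A = np.array([[1.0, 0.5, 0.2],
              [0.3, 1.0, 0.7]])

s1 = np.array([1.0, -2.0, 0.5])   # one possible set of source amplitudes
v = null_space_direction(A)       # a change in the sources that neither sensor sees
s2 = s1 + 3.0 * v                 # a genuinely different set of sources

y1 = A @ s1                       # sensor readings for the first explanation
y2 = A @ s2                       # sensor readings for the second explanation
print(np.allclose(y1, y2), np.allclose(s1, s2))
```

Choosing between such explanations requires extra constraints on what sources are plausible, which is where the experience-based constraints come in.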

Human Auditory Scene Analysis

(Bregman 1990)

• People hear sounds as separate sources

• How?

- break mixture into small elements (in time-freq)

- elements are grouped into sources using cues

- sources have aggregate attributes

• Grouping ‘rules’ (Darwin, Carlyon, ...):

- cues: common onset/offset/modulation, harmonicity, spatial location, ...

[Diagram (after Darwin, 1996): Frequency analysis; Onset map, Harmonicity map, Position map; Grouping mechanism; Source properties]

Cues to simultaneous grouping

• Elements + attributes

[Figure: spectrogram (freq / Hz, 0-8000, against time / s, 0-9)]

• Common onset

- simultaneous energy has common source

• Periodicity

- energy in different bands with same cycle

• Other cues

- spatial (ITD/IID), familiarity, ...
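The periodicity cue (energy in different bands with the same cycle) can be sketched as follows. This is a minimal illustration, not the grouping model from the talk: it assumes each band is already available as an envelope signal, estimates a period from the autocorrelation peak that follows the first negative lag, and puts bands with matching periods in one group. The function names, the tolerance, and the synthetic envelopes are all assumptions made for the example.

```python
import numpy as np

def dominant_period(x):
    """Lag (in samples) of the strongest autocorrelation peak after the first negative lag."""
    x = x - np.mean(x)
    ac = np.correlate(x, x, mode="full")[len(x) - 1:]   # lags 0 .. N-1
    negative = np.flatnonzero(ac < 0)
    if negative.size == 0:
        return None                                     # no clear periodicity found
    start = negative[0]                                 # skip the lag-0 lobe
    return int(start + np.argmax(ac[start:]))

def group_by_period(envelopes, tolerance=1):
    """Group band indices whose estimated periods agree within `tolerance` samples."""
    groups = []                                         # list of (period, [band indices])
    for band, env in enumerate(envelopes):
        p = dominant_period(env)
        match = next((g for g in groups
                      if p is not None and g[0] is not None
                      and abs(g[0] - p) <= tolerance), None)
        if match is None:
            groups.append((p, [band]))
        else:
            match[1].append(band)
    return groups

# Synthetic band envelopes: bands 0 and 1 share a 50-sample cycle, band 2 has a 40-sample cycle.
n = np.arange(1000)
band_a = 1 + np.cos(2 * np.pi * n / 50)
band_b = 1 + np.cos(2 * np.pi * n / 50 + 1.0) + 0.3 * np.cos(4 * np.pi * n / 50)
band_c = 1 + np.cos(2 * np.pi * n / 40)

groups = group_by_period([band_a, band_b, band_c])
print(groups)
```

Bands 0 and 1 differ in phase and waveform shape but are grouped because they repeat with the same cycle; band 2 ends up in its own group.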

Context and Restoration

• Context can create an ‘expectation’: i.e. a bias towards a particular interpretation

- e.g. auditory ‘illusions’ = hearing what’s not there

• The continuity illusion

[Figure: spectrogram "ptshort" (f/Hz, 1000-4000, against time/s, 0.0-1.4)]

• SWS (sine-wave speech)

[Figure: spectrogram "S1−env.pf:0" (f/Bark, 5-15, against time/s, 0.0-1.8)]

- duplex perception

• How to model these effects?

Computational Auditory Scene Analysis

(CASA)

[Diagram: sound mixture → CASA → Object 1 description, Object 2 description, Object 3 description, ...]

• Goal: Automatic sound organization; systems to ‘pick out’ sounds in a mixture

- ... like people do

• E.g. voice against a noisy background

- to improve speech recognition

• Approach:

- psychoacoustics describes grouping ‘rules’

- ... just implement them?

The Representational Approach

(Brown & Cooke 1993)

• Implement psychoacoustic theory

[Diagram: input mixture → Front end → signal features (maps: freq, onset, time, period, frq.mod) → Object formation → discrete objects → Grouping rules → Source groups]

- ‘bottom-up’ processing

- uses common onset & periodicity cues

• Able to extract voiced speech:

[Figure: two spectrograms, brn1h.aif and brn1h.fi.aif (frq/Hz, 100-3000, against time/s, 0.2-1.0)]

Adding top-down constraints

Perception is not direct but a search for plausible hypotheses

• Data-driven (bottom-up)...

[Diagram: input mixture → Front end → signal features → Object formation → discrete objects → Grouping rules → Source groups]

- objects irresistibly appear

vs. Prediction-driven (top-down)

[Diagram: input mixture → Front end → signal features → Compare & reconcile → prediction errors → Hypothesis management → hypotheses (Noise components, Periodic components) → Predict & combine → predicted features → Compare & reconcile]

- match observations with parameters of a world-model

- need world-model constraints...

Approaches to sound mixture recognition

• Recognize combined signal

- ‘multicondition training’

- combinatorics...

• Separate signals

- e.g. CASA, ICA

- nice, if you can do it

• Segregate features into fragments

- then missing-data recognition

Outline

1 Auditory Scene Analysis

2 Speech Recognition & Mixtures

- standard ASR

- approaches to speech + noise

3 Fragment Recognition

4 Alarm Sound Detection

5 Future Work

2 Speech recognition & mixtures

• Speech recognizers are the most successful and sophisticated acoustic recognizers to date

[Diagram: sound → Feature calculation → feature vectors → Acoustic classifier (Acoustic model parameters, trained from DATA) → phone probabilities → HMM decoder (Word models, e.g. "s ah t"; Language model, e.g. p("sat"|"the","cat"), p("saw"|"the","cat")) → phone / word sequence → Understanding/application...]

• ‘State of the art’ word-error rates (WERs):

- from 2% (dictation) to 30% (phone conversations)
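For readers unfamiliar with the WER figures quoted here, the standard definition is the number of word substitutions, deletions, and insertions needed to turn the reference transcript into the recognizer output, divided by the number of reference words. The sketch below computes it with a plain edit-distance table; the example sentences are made up for illustration and are not from any test set in the talk.

```python
def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    # d[i][j] = minimum number of edits turning ref[:i] into hyp[:j]
    d = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        d[i][0] = i                      # delete all i reference words
    for j in range(len(hyp) + 1):
        d[0][j] = j                      # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            substitute = d[i - 1][j - 1] + (ref[i - 1] != hyp[j - 1])
            delete = d[i - 1][j] + 1
            insert = d[i][j - 1] + 1
            d[i][j] = min(substitute, delete, insert)
    return d[-1][-1] / len(ref)

# Hypothetical example: one substitution ("sat" -> "saw") and one deletion ("on").
wer = word_error_rate("the cat sat on the mat", "the cat saw the mat")
print(f"WER = {100 * wer:.1f}%")
```

Because insertions count as errors, WER can exceed 100%.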

Learning acoustic models

• Goal: describe p(X|M) with e.g. GMMs

[Diagram: Labeled training examples {x_n, ω_xn} → Sort according to class → Estimate conditional pdf for class ω1 → p(x|ω1)]

• Separate models for each class

- generalization as blurring

• Training data labels from:

- manual annotation

- ‘best path’ from earlier classifier (Viterbi)

- EM: joint estimation of labels & pdfs
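The "sort according to class, then estimate a conditional pdf" recipe can be sketched in a few lines. To keep the example short it fits a single diagonal-covariance Gaussian per class rather than a full GMM, which is a simplification and not what a real recognizer would use; the synthetic two-class data and all function names are assumptions made for illustration.

```python
import numpy as np

def fit_class_gaussians(X, labels):
    """Sort examples by class label and fit one diagonal Gaussian p(x|class) per class."""
    models = {}
    for c in np.unique(labels):
        Xc = X[labels == c]                       # all examples labeled with class c
        models[c] = (Xc.mean(axis=0), Xc.var(axis=0) + 1e-6)
    return models

def log_likelihood(x, model):
    """log p(x|class) under a diagonal Gaussian (mean, variance)."""
    mean, var = model
    return float(-0.5 * np.sum(np.log(2 * np.pi * var) + (x - mean) ** 2 / var))

def classify(x, models):
    """Pick the class whose model gives x the highest likelihood (equal priors assumed)."""
    return max(models, key=lambda c: log_likelihood(x, models[c]))

# Synthetic labeled training set: two classes in a 2-D feature space.
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, size=(200, 2)),
               rng.normal(5.0, 1.0, size=(200, 2))])
labels = np.array([0] * 200 + [1] * 200)

models = fit_class_gaussians(X, labels)
print(classify(np.array([0.1, -0.2]), models), classify(np.array([5.2, 4.8]), models))
```

The variance of each fitted Gaussian is what gives the "generalization as blurring": unseen points near the training examples still receive appreciable likelihood.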

Speech + noise mixture recognition

• Background noise: biggest problem for current ASR?

• Feature invariance approach: design features to reflect only speech

- e.g. normalization, mean subtraction

- one model for clean and noisy speech

• Alternative: more complex models of the signal

- separate models for speech and ‘noise’
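As one concrete instance of the feature invariance approach, per-utterance mean subtraction removes any constant offset from the feature vectors. A fixed linear channel shows up as such an offset in log-spectral or cepstral features, so after subtraction the features no longer depend on it. The sketch below uses random numbers in place of real cepstra, and the offset is a hypothetical channel; note that this handles a fixed channel, not additive background noise.

```python
import numpy as np

def mean_subtract(features):
    """Remove the per-utterance mean of each coefficient; features has shape (frames, coeffs)."""
    return features - features.mean(axis=0, keepdims=True)

rng = np.random.default_rng(1)
clean = rng.normal(size=(100, 13))          # stand-in for 100 frames of 13 cepstral coefficients
channel_offset = rng.normal(size=13)        # hypothetical fixed channel, constant over frames
filtered = clean + channel_offset           # same utterance heard through the channel

same_after_normalization = np.allclose(mean_subtract(clean), mean_subtract(filtered))
print(same_after_normalization)
```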

HMM decomposition

(e.g. Varga & Moore 1991, Roweis 2000)

• Total signal model has independent state sequences for 2+ component sources

[Figure: state sequences of model 1 and model 2 against observations / time]

• New combined state space q' = {q1, q2}

- new observation pdfs for each combination: p(X|q1,q2)
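Because the component state sequences are independent, the transition matrix over the combined state space is the Kronecker product of the individual transition matrices. The sketch below shows only that step, with two small made-up transition matrices; the observation pdfs p(X|q1,q2) for each state pair still have to be supplied separately and are not modeled here.

```python
import numpy as np

# Hypothetical transition matrices: model 1 has 2 states, model 2 has 3 states.
A1 = np.array([[0.9, 0.1],
               [0.2, 0.8]])
A2 = np.array([[0.7, 0.2, 0.1],
               [0.1, 0.8, 0.1],
               [0.3, 0.3, 0.4]])

def combined_transitions(A1, A2):
    """P(q1', q2' | q1, q2) = A1[q1, q1'] * A2[q2, q2'] for independent chains."""
    return np.kron(A1, A2)

def combined_index(q1, q2, n2):
    """Index of the pair (q1, q2) in the combined state space (n2 = states in model 2)."""
    return q1 * n2 + q2

A = combined_transitions(A1, A2)
print(A.shape)                               # 2 * 3 = 6 combined states
```

The combined space grows as the product of the component state counts, which is the main practical cost of this approach.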

Outline

1 Auditory Scene Analysis

2 Speech Recognition & Mixtures

3 Fragment Recognition

- separating signals vs. separating features

- missing data recognition

- recognizing multiple sources

4 Alarm Sound Detection

5 Future Work

3 Fragment Recognition

(Jon Barker & Martin Cooke, Sheffield)

• Signal separation is too hard! Instead:

- segregate features into partially-observed sources

- then classify

• Made possible by ‘missing data’ recognition

- integrate over uncertainty in observations for optimal posterior distribution

• Goal: relate clean speech models P(X|M) to speech-plus-noise mixture observations

- ... and make it tractable

Comparing different segregations

• Standard classification chooses between models M to match source features X

$$M^* = \arg\max_M P(M \mid X) = \arg\max_M P(X \mid M) \cdot \frac{P(M)}{P(X)}$$

• Mixtures → observed features Y, segregation S, all related by P(X|Y,S)

[Figure: Observation Y(f), Source X(f), and Segregation S plotted against freq]

- spectral features allow clean relationship

• Joint classification of model and segregation:

$$P(M, S \mid Y) = P(M) \int P(X \mid M) \cdot \frac{P(X \mid Y, S)}{P(X)} \, dX \cdot P(S \mid Y)$$

- integral collapses in several cases...

Calculating fragment matches

$$P(M, S \mid Y) = P(M) \int P(X \mid M) \cdot \frac{P(X \mid Y, S)}{P(X)} \, dX \cdot P(S \mid Y)$$

• P(X|M) - the clean-signal feature model

• P(X|Y,S)/P(X) - is X ‘visible’ given segregation?

• Integration collapses some channels...

• P(S|Y) - segregation inferred from observation

- just assume uniform, find S for most likely M

- use extra information in Y to distinguish S’s, e.g. harmonicity, onset grouping

• Result:

- probabilistically-correct relation between clean-source models P(X|M) and inferred contributory source P(M,S|Y)
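One common way the integral collapses can be sketched per frequency channel. The sketch assumes a diagonal-covariance Gaussian clean-speech model in a spectral domain and the usual bounded-marginalization reading of missing-data recognition: where the segregation says the target dominates, X equals the observation Y and the model density is evaluated there; where it says the target is masked, all that is known is X ≤ Y, so the density is integrated up to Y. These modeling choices and the numbers below are assumptions for illustration, not the exact formulation used in the talk.

```python
import math
import numpy as np

def log_gauss_pdf(y, mean, var):
    """Log density of a 1-D Gaussian at y."""
    return -0.5 * (math.log(2 * math.pi * var) + (y - mean) ** 2 / var)

def log_gauss_cdf(y, mean, var):
    """Log of P(X <= y) for a 1-D Gaussian (floored to avoid log(0))."""
    cdf = 0.5 * (1.0 + math.erf((y - mean) / math.sqrt(2.0 * var)))
    return math.log(max(cdf, 1e-300))

def missing_data_loglik(y, mask, mean, var):
    """Log-likelihood of observation y for one model state under segregation mask.

    mask[f] True:  channel f belongs to the target, so X[f] = y[f] (evaluate the pdf).
    mask[f] False: channel f is dominated by something else, so X[f] <= y[f] (integrate).
    """
    total = 0.0
    for yf, present, m, v in zip(y, mask, mean, var):
        total += log_gauss_pdf(yf, m, v) if present else log_gauss_cdf(yf, m, v)
    return total

# Hypothetical 3-channel example: channel 1 is swamped by a loud interfering source.
mean = np.array([1.0, 2.0, 2.0])
var = np.array([1.0, 1.0, 1.0])
y = np.array([1.0, 10.0, 2.0])

with_segregation = missing_data_loglik(y, [True, False, True], mean, var)
all_present = missing_data_loglik(y, [True, True, True], mean, var)
print(with_segregation, all_present)
```

With the correct segregation the swamped channel costs almost nothing, whereas treating every channel as clean speech makes this model look like a very poor match.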

Speech fragment decoder results

• Simple P(S|Y) model forces contiguous regions to stay together

- big efficiency gain when searching S space

[Figure: "1754" + noise, SNR mask, Fragments, Fragment Decoder, "1754"; plot of WER / % (0-90) against SNR / dB (-5 to 20, clean) for AURORA 2000 - Test Set A, with curves HTK clean training, HTK multicondition, MD Soft SNR, Fragments]

• Clean-models-based recognition rivals trained-in-noise recognition

Multi-source decoding

• Search for more than one source

[Diagram: observation Y(t) with masks S1(t), S2(t) and state sequences q1(t), q2(t)]

• Mutually-dependent data masks

• Use e.g. CASA features to propose masks

- locally coherent regions

• Theoretical vs. practical limits

Outline

1 Auditory Scene Analysis

2 Speech Recognition & Mixtures

3 Fragment Recognition

4 Alarm Sound Detection

- sound

- mixtures

- learning

5 Future Work

4 Alarm sound detection

• Alarm sounds have particular structure

- people ‘know them when they hear them’

- clear even at low SNRs

[Figure: spectrogram "s0n6a8+20" (freq / kHz, 0-4, against time / s, 0-25; level / dB, -40 to 20) containing alarms hrn01, bfr02, buz01]

• Why investigate alarm sounds?

- they’re supposed to be easy

- potential applications...

• Contrast two systems:

- standard, global features, P(X|M)

- sinusoidal model, fragments, P(M,S|Y)

Alarms: Sound (representation)

• Standard system: Mel Cepstra

- have to model alarms in noise context: each cepstral element depends on whole signal

• Contrast system: Sinusoid groups

- exploit sparse, stable nature of alarm sounds

- 2D-filter spectrogram to enhance harmonics

- simple magnitude threshold, track growing

- form groups based on common onset

[Figure: three spectrogram panels (freq / Hz, 0-5000, against time / sec, 1-2.5)]

• Sinusoid representation is already fragmentary

- does not record non-peak energies
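The threshold, track, and group steps of the sinusoid system can be sketched roughly as below. This is a heavily simplified stand-in rather than the system described in the talk: it skips the 2D harmonic-enhancement filter, only tracks peaks that stay in a single frequency bin (reasonable only for very stable tones), and uses made-up thresholds and a synthetic spectrogram. All function names are hypothetical.

```python
import numpy as np

def peak_mask(spec, threshold):
    """True where a bin exceeds the threshold and is a local maximum along frequency."""
    mask = np.zeros(spec.shape, dtype=bool)
    inner = spec[1:-1]
    mask[1:-1] = (inner > threshold) & (inner > spec[:-2]) & (inner >= spec[2:])
    return mask

def find_tracks(mask, min_frames=3):
    """Return (bin, onset_frame, offset_frame) for each run of peaks within one bin."""
    tracks = []
    for b, row in enumerate(mask):
        padded = np.concatenate(([False], row, [False])).astype(int)
        edges = np.diff(padded)
        onsets = np.flatnonzero(edges == 1)      # first frame of each run
        offsets = np.flatnonzero(edges == -1)    # one past the last frame of each run
        for on, off in zip(onsets, offsets):
            if off - on >= min_frames:
                tracks.append((b, int(on), int(off)))
    return tracks

def group_by_onset(tracks, tolerance=1):
    """Group tracks whose onsets fall within `tolerance` frames of the group's first track."""
    groups = []
    for track in sorted(tracks, key=lambda t: t[1]):
        if groups and track[1] - groups[-1][0][1] <= tolerance:
            groups[-1].append(track)
        else:
            groups.append([track])
    return groups

# Synthetic magnitude spectrogram (20 bins x 30 frames) with a flat noise floor.
spec = np.full((20, 30), 0.1)
spec[5, 4:20] = 1.0      # two partials of one "alarm" starting together at frame 4
spec[10, 4:20] = 1.0
spec[15, 12:25] = 1.0    # an unrelated tone starting at frame 12

groups = group_by_onset(find_tracks(peak_mask(spec, threshold=0.5)))
print(groups)
```

Only the peaks survive into the representation, which is why the sinusoid description is already fragmentary: energy between peaks is never recorded.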

Alarms: Mixtures

• Effect of varying SNR on representations:

- sinusoid peaks have ~ invariant properties

[Figure: Sine track groups and Cepstra (normalized) at 60 dB SNR, 10 dB SNR, and 0 dB SNR, against time / s (0-25)]

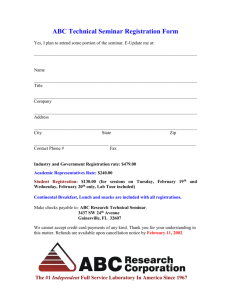

Alarms: Learning

• Standard: train MLP on noisy examples

[Diagram: Sound mixture → Feature extraction → PLP cepstra → Neural net acoustic classifier → Alarm probability → Median filtering → Detected alarms]

• Alternate: learn distributions of group features

- duration, frequency deviation, amp. modulation...

[Figure: scatter plots of Alarm vs. non alarm groups over the features Spectral centroid, Magnitude SD, Spectral moment, and Inverse Frequency SD]

- underlying models are clean (isolated)

- recognize in different contexts...
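The last stage of the standard system, median filtering of the per-frame alarm probability before declaring detections, can be sketched as follows. The window width, the 0.5 decision threshold, and the probability track are assumptions chosen for illustration; the talk does not give these settings.

```python
import numpy as np

def median_filter(p, width=5):
    """Running median over an odd-length window, with edge values repeated at the ends."""
    half = width // 2
    padded = np.pad(p, half, mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, width)
    return np.median(windows, axis=1)

def detect(p, width=5, threshold=0.5):
    """Frames where the smoothed alarm probability exceeds the threshold."""
    return median_filter(p, width) > threshold

# Hypothetical classifier output: one spurious frame, and one real alarm with a dropout.
prob = np.zeros(20)
prob[3] = 0.9            # isolated false alarm
prob[8:15] = 0.9         # real alarm, frames 8-14 ...
prob[11] = 0.1           # ... with a single-frame dropout

detected_frames = np.flatnonzero(detect(prob)).tolist()
print(detected_frames)
```

The median removes the isolated spike and bridges the short dropout, so the alarm is reported as one continuous event.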

Alarms: Results

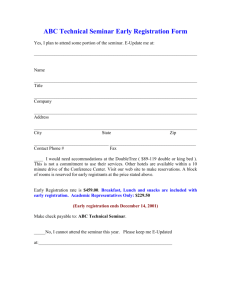

[Figure: Restaurant + alarms (snr 0 ns 6 al 8): spectrogram (freq / kHz, 0-4), MLP classifier output, and Sound object classifier output, against time/sec (20-50)]

• Both systems commit many insertions at 0 dB SNR, but in different circumstances:

| Noise | Neural net Del | Neural net Ins | Neural net Tot | Sinusoid model Del | Sinusoid model Ins | Sinusoid model Tot |
| --- | --- | --- | --- | --- | --- | --- |
| 1 (amb) | 7 / 25 | 2 | 36% | 14 / 25 | 1 | 60% |
| 2 (bab) | 5 / 25 | 63 | 272% | 15 / 25 | 2 | 68% |
| 3 (spe) | 2 / 25 | 68 | 280% | 12 / 25 | 9 | 84% |
| 4 (mus) | 8 / 25 | 37 | 180% | 9 / 25 | 135 | 576% |
| Overall | 22 / 100 | 170 | 192% | 50 / 100 | 147 | 197% |
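The Tot figures appear to be deletions plus insertions expressed as a percentage of the number of true alarms (25 per noise condition, 100 overall); that reading reproduces every Tot value in the results. A short sketch of the arithmetic, using only the Del and Ins counts from the results above (the function and variable names are made up for the example):

```python
def total_error_rate(deletions, insertions, n_alarms):
    """Deletions plus insertions as a percentage of the true alarm count."""
    return 100.0 * (deletions + insertions) / n_alarms

# (deletions, insertions) per noise type, copied from the results;
# each noise condition contains 25 alarms.
neural_net = {"amb": (7, 2), "bab": (5, 63), "spe": (2, 68), "mus": (8, 37)}
sinusoid = {"amb": (14, 1), "bab": (15, 2), "spe": (12, 9), "mus": (9, 135)}

def overall(system, n_per_condition=25):
    """Pool deletions and insertions over all noise conditions."""
    dels = sum(d for d, _ in system.values())
    ins = sum(i for _, i in system.values())
    return total_error_rate(dels, ins, n_per_condition * len(system))

for name, system in [("Neural net", neural_net), ("Sinusoid model", sinusoid)]:
    per_noise = {k: total_error_rate(d, i, 25) for k, (d, i) in system.items()}
    print(name, per_noise, "overall:", overall(system))
```

The breakdown makes the "different circumstances" visible: the neural net's insertions pile up in babble and speech noise, while the sinusoid system's insertions come almost entirely from music.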

Alarms: Summary

• Sinusoid domain

- feature components belong to 1 source

- simple ‘segregation’ (grouping) model

- alarm model as properties of group

- robust to partial feature observation

• Future improvements

- more complex alarm class models

- exploit repetitive structure of alarms

Outline

1 Auditory Scene Analysis

2 Speech Recognition & Mixtures

3 Fragment Recognition

4 Alarm Sound Detection

5 Future Work

- generative models & inference

- model acquisition

- ambulatory audio

5 Future work

• CASA as generative model parameterization:

[Diagram, Generation model: Source models M1, M2 (Model dependence Θ) → Source signals Y1, Y2 → Channel parameters C1, C2 → Received signals X1, X2 → Observations O]

[Diagram, Analysis structure: Observations O → Fragment formation → Mask allocation {Ki} → Model fitting {Mi} → Likelihood evaluation p(X|Mi,Ki)]

Learning source models

• The speech recognition lesson: use the data as much as possible

- what can we do with unlimited data feeds?

• Data sources

- clean data corpora

- identify near-clean segments in real sound

- build up ‘clean’ views from partial observations?

• Model types

- templates

- parametric/constraint models

- HMMs

• Hierarchic classification vs. individual characterization...

Personal Audio Applications

• Smart PDA records everything

• Only useful if we have index, summaries

- monitor for particular sounds

- real-time description

• Scenarios

- personal listener → summary of your day

- future prosthetic hearing device

- autonomous robots

• Meeting data, ambulatory audio

Summary

• Sound

- carries important information

• Mixtures

- need to segregate different source properties

- fragment-based recognition

• Learning

- information extracted by classification

- models guide segregation

• Alarm sounds

- simple example of fragment recognition

• General sounds

- recognize simultaneous components

- acquire classes from training data

- build index, summary of real-world sound