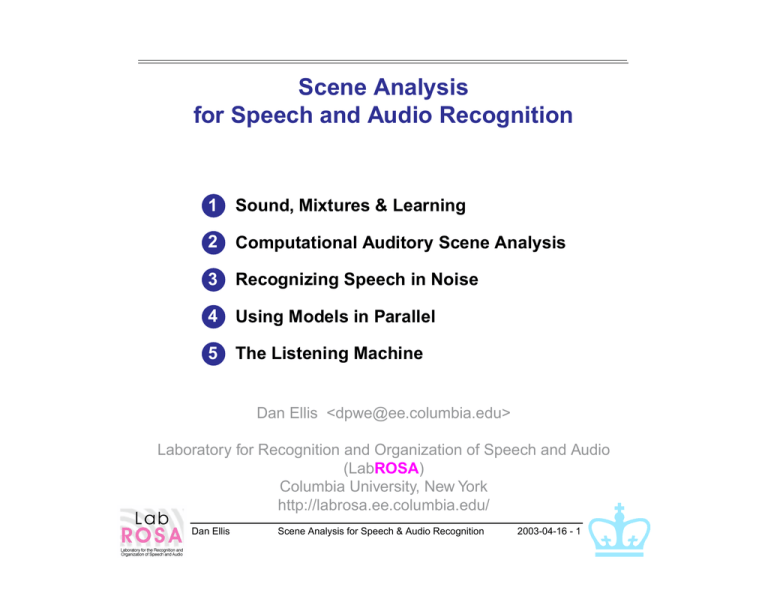

Scene Analysis for Speech and Audio Recognition


1 Sound, Mixtures & Learning

2 Computational Auditory Scene Analysis

3 Recognizing Speech in Noise

4 Using Models in Parallel

5 The Listening Machine

Dan Ellis <dpwe@ee.columbia.edu>

Laboratory for Recognition and Organization of Speech and Audio

(LabROSA)

Columbia University, New York

http://labrosa.ee.columbia.edu/

Dan Ellis

Scene Analysis for Speech & Audio Recognition

2003-04-16 - 1

1 Sound, Mixtures & Learning

[Figure: spectrogram; axes: time/s (0 to 12), frq/Hz (0 to 4000), level / dB (-60 to 0)]

• Sound
- carries useful information about the world
- complements vision

• Mixtures
- .. are the rule, not the exception
- medium is ‘transparent’ with many sources
- must be handled!

• Learning
- the speech recognition lesson: let the data do the work
- ... like listeners do


The problem with recognizing mixtures

“Imagine two narrow channels dug up from the edge of a lake, with handkerchiefs stretched across each one. Looking only at the motion of the handkerchiefs, you are to answer questions such as: How many boats are there on the lake and where are they?” (after Bregman ’90)

• Auditory Scene Analysis: describing a complex sound in terms of high-level sources/events
- ... like listeners do

• Hearing is ecologically grounded
- reflects natural scene properties = constraints
- subjective, not absolute


Auditory Scene Analysis

(Bregman 1990)

• How do people analyze sound mixtures?
- break mixture into small elements (in time-freq)
- elements are grouped into sources using cues
- sources have aggregate attributes

• Grouping ‘rules’ (Darwin, Carlyon, ...):
- cues: common onset/offset/modulation, harmonicity, spatial location, ...

[Figure: block diagram with stages Frequency analysis; Onset map, Harmonicity map, Position map; Grouping mechanism; Source properties (after Darwin, 1996)]


Cues to simultaneous grouping

• Elements + attributes

[Figure: spectrogram; axes: time / s (0 to 9), freq / Hz (0 to 8000)]

• Common onset
- simultaneous energy has common source

• Periodicity
- energy in different bands with same cycle

• Other cues
- spatial (ITD/IID), familiarity, ...


The effect of context

• Context can create an ‘expectation’: i.e. a bias towards a particular interpretation

• Bregman’s old-plus-new principle:

[Figure: spectrogram; axes: time / s (0.0 to 1.2), frequency / kHz (0 to 2)]

- a change is preferably interpreted as addition

• E.g. the continuity illusion

[Figure: spectrogram ‘ptshort’; axes: time/s (0.0 to 1.4), f/Hz (1000 to 4000)]

Approaches to sound mixture recognition

• Separate signals, then recognize
- e.g. CASA, ICA
- nice, if you can do it

• Recognize combined signal
- ‘multicondition training’
- combinatorics..

• Recognize with parallel models
- full joint-state space?
- divide signal into fragments, then use missing-data recognition


Independent Component Analysis (ICA)

(Bell & Sejnowski 1995 etc.)

• Drive a parameterized separation algorithm to maximize independence of outputs

[Figure: mixtures m1, m2 multiplied by the matrix [a11 a12; a21 a22] to give outputs s1, s2; the parameters a are adapted along −δMutInfo/δa]

• Advantages:
- mathematically rigorous, minimal assumptions
- does not rely on prior information from models

• Disadvantages:
- may converge to local optima...
- separation, not recognition
- does not exploit prior information from models
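The adaptation loop on this slide can be written out compactly. This is only a sketch in generic notation: the symbols x (observed mixtures), A (unknown mixing matrix), W (the parameterized unmixing matrix), y (outputs), η (step size) and I (mutual information) are assumptions introduced here and do not appear on the slide.

```latex
\mathbf{x} = A\,\mathbf{s}, \qquad
\mathbf{y} = W\,\mathbf{x}, \qquad
\Delta W = -\,\eta\,\frac{\partial I(y_1; y_2)}{\partial W}
```

When the mutual information reaches zero the outputs are statistically independent, which recovers the sources only up to scaling and permutation. Nothing in the update refers to what the sources are, which is why the same property shows up under both Advantages and Disadvantages.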


Outline

1 Sound, Mixtures & Learning

2 Computational Auditory Scene Analysis
- Data-driven
- Top-down constraints

3 Recognizing Speech in Noise

4 Using Models in Parallel

5 The Listening Machine


Computational Auditory Scene Analysis:

The Representational Approach

(Cooke & Brown 1993)

• Direct implementation of psych. theory

[Figure: block diagram: input mixture → Front end → signal features (maps) → Object formation → discrete objects → Grouping rules → Source groups; map labels: freq, onset, time, period, frq.mod]

- ‘bottom-up’ processing
- uses common onset & periodicity cues

• Able to extract voiced speech:

[Figure: two spectrogram panels, brn1h.aif and brn1h.fi.aif; axes: time/s (0.2 to 1.0), frq/Hz (100 to 3000)]

Adding top-down constraints

• Perception is not direct but a search for plausible hypotheses

Data-driven (bottom-up)...

[Figure: block diagram: input mixture → Front end → signal features → Object formation → discrete objects → Grouping rules → Source groups]

- objects irresistibly appear

vs. Prediction-driven (top-down)

[Figure: block diagram with blocks Front end, Compare & reconcile, Hypothesis management, Predict & combine; labels: input mixture, signal features, prediction errors, hypotheses, Noise components, Periodic components, predicted features]

- match observations with parameters of a world-model
- need world-model constraints...


Prediction-Driven CASA

(Ellis 1996)

• Explain a complex sound with basic elements

[Figure: analysis of the ‘City’ sound; axes: time/s (0 to 9), f/Hz, level in dB (−70 to −40). Panels: City; Wefts1−4, Weft5, Wefts6,7, Weft8, Wefts9−12; Noise2,Click1; Noise1. Labeled events: Horn1 (10/10), Horn2 (5/10), Horn3 (5/10), Horn4 (8/10), Horn5 (10/10), Crash (10/10), Squeal (6/10), Truck (7/10)]

Aside: Evaluation

• Evaluation is a big problem for CASA
- what is the goal, really?
- what is a good test domain?
- how do you measure performance?

• SNR improvement
- tricky to derive from before/after signals: correspondence problem
- can do with fixed filtering mask; but rewards removing signal as well as noise

• Speech Recognition (ASR) improvement
- recognizers typically very sensitive to artefacts

• ‘Real’ task?
- mixture corpus with specific sound events...
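The caveat about fixed filtering masks can be illustrated with a toy calculation. The sketch below is not from the talk: the six cell magnitudes and the function names are hypothetical. It applies one mask to target and noise separately, then compares the resulting SNR with how much target energy survives.

```python
import math

def energy(values):
    """Sum of squared magnitudes."""
    return sum(v * v for v in values)

def masked_snr_db(target, noise, mask):
    """SNR in dB after applying the same fixed mask to target and noise."""
    t = energy(m * v for m, v in zip(mask, target))
    n = energy(m * v for m, v in zip(mask, noise))
    return 10.0 * math.log10(t / n)

def retained_fraction(target, mask):
    """Fraction of the target energy that passes through the mask."""
    return energy(m * v for m, v in zip(mask, target)) / energy(target)

# Hypothetical magnitudes in six time-frequency cells.
target = [4.0, 3.0, 2.0, 2.0, 1.0, 1.0]
noise = [0.5, 2.0, 2.0, 3.0, 1.0, 2.0]

all_pass = [1, 1, 1, 1, 1, 1]   # no processing
greedy = [1, 0, 0, 0, 0, 0]     # keep only the single cleanest cell

snr_before = masked_snr_db(target, noise, all_pass)
snr_after = masked_snr_db(target, noise, greedy)
kept = retained_fraction(target, greedy)
```

Here the greedy mask raises the SNR by roughly 16 dB while discarding more than half of the target energy, so SNR alone would rank it as a clear success.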


Outline

1 Sound, Mixtures & Learning

2 Computational Auditory Scene Analysis

3 Recognizing Speech in Noise
- Conventional ASR
- Tandem modeling

4 Using Models in Parallel

5 The Listening Machine


3 Recognizing Speech in Noise

• Standard speech recognition structure:

[Figure: block diagram: sound → Feature calculation → feature vectors → Acoustic classifier → phone probabilities → HMM decoder → phone / word sequence → Understanding/application...; side inputs: Acoustic model parameters, Word models (e.g. s ah t), Language model (e.g. p("sat"|"the","cat"), p("saw"|"the","cat")); label: DATA]

• How to handle additive noise?
- just train on noisy data: ‘multicondition training’

Tandem speech recognition

(with Hermansky, Sharma & Sivadas/OGI, Singh/CMU, ICSI)

• Neural net estimates phone posteriors; but Gaussian mixtures model finer detail

• Combine them!

[Figure: three block diagrams.
Hybrid Connectionist-HMM ASR: Input sound → Feature calculation → Speech features → Neural net classifier → Phone probabilities → Noway decoder → Words.
Conventional ASR (HTK): Input sound → Feature calculation → Speech features → Gauss mix models → Subword likelihoods → HTK decoder → Words.
Tandem modeling: Input sound → Feature calculation → Speech features → Neural net classifier → Phone probabilities → Gauss mix models → Subword likelihoods → HTK decoder → Words]

• Train net, then train GMM on net output
- GMM is ignorant of net output ‘meaning’

Tandem system results

• It works very well (‘Aurora’ noisy digits):

[Figure: WER as a function of SNR for various Aurora99 systems; axes: SNR / dB (averaged over 4 noises; -5 to 20, clean), WER / % (log scale, 1 to 100); curves: HTK GMM baseline, Hybrid connectionist, Tandem, Tandem + PC]

Average WER ratio to baseline: HTK GMM 100%; Hybrid 84.6%; Tandem 64.5%; Tandem + PC 47.2%

System-features    Avg. WER 20-0 dB    Baseline WER ratio
HTK-mfcc           13.7%               100%
Neural net-mfcc    9.3%                84.5%
Tandem-mfcc        7.4%                64.5%
Tandem-msg+plp     6.4%                47.2%


Inside Tandem systems:

What’s going on?

• Visualizations of the net outputs

[Figure: utterance “one eight three” (MFP_183A), shown Clean and at 5dB SNR to ‘Hall’ noise. Panels: Spectrogram (freq / kHz, 0 to 4); Cepstral-smoothed mel spectrum (freq / mel chan); Phone posterior estimates (phone); Hidden layer linear outputs (phone). Time axis: time / s (0 to 1). Phone axis labels: q h# ax uw ow ao ah ay ey eh ih iy w r n v th f z s kcl tcl k t]

• Neural net normalizes away noise?
- ... just a successful way to build a classifier?

Tandem vs. other approaches

[Figure: Aurora 2 Eurospeech 2001 Evaluation; bar chart of Rel improvement % - Multicondition (-10 to 60), with Avg. rel. improvement marked; systems: Columbia, Philips, UPC Barcelona, Bell Labs, IBM, Motorola 1, Motorola 2, Nijmegen, ICSI/OGI/Qualcomm, ATR/Griffith, AT&T, Alcatel, Siemens, UCLA, Microsoft, Slovenia, Granada]

• 50% of word errors corrected over baseline

• Beat a ‘bells and whistles’ system that used many large-vocabulary techniques


Outline

1 Sound, Mixtures & Learning

2 Computational Auditory Scene Analysis

3 Recognizing Speech in Noise

4 Using Models in Parallel
- HMM decomposition/factoring
- Speech fragment decoding

5 The Listening Machine


4 Using Models in Parallel: HMM decomposition

(e.g. Varga & Moore 1991, Gales & Young 1996)

• Independent state sequences for 2+ component source models

[Figure: state sequences of model 1 and model 2 plotted against observations / time]

• New combined state space q' = {q1 q2}
- need pdfs for each combination p(X | q1, q2)
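As a rough illustration of the combined state space, the sketch below builds the transition matrix over state pairs (q1, q2) for two chains that evolve independently. It assumes independent transitions, so each combined probability is a product. The two small matrices and the function name are hypothetical, and the observation pdfs p(X | q1, q2) are not modeled here.

```python
def kron_transitions(a1, a2):
    """Transition matrix over pairs (i, j) of two independent chains.

    The combined state index is i * len(a2) + j.
    """
    n1, n2 = len(a1), len(a2)
    combined = [[0.0] * (n1 * n2) for _ in range(n1 * n2)]
    for i in range(n1):
        for j in range(n2):
            for k in range(n1):
                for l in range(n2):
                    # Independence: P((i,j) -> (k,l)) = P(i -> k) * P(j -> l)
                    combined[i * n2 + j][k * n2 + l] = a1[i][k] * a2[j][l]
    return combined

# Hypothetical 2-state and 3-state source models.
model_1 = [[0.9, 0.1],
           [0.2, 0.8]]
model_2 = [[0.7, 0.3, 0.0],
           [0.0, 0.6, 0.4],
           [0.5, 0.0, 0.5]]

joint = kron_transitions(model_1, model_2)
n_joint_states = len(joint)
```

The number of combined states is the product of the component sizes (6 here), which is what makes a full joint-state search expensive for realistically sized models.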


“One microphone source separation”

(Roweis 2000, Manuel Reyes)

• State sequences → t-f estimates → mask

[Figure: spectrogram panels for Speaker 1 and Speaker 2: Original voices, Mixture, Resynthesis masks, State means; axes: time / sec (0 to 6), freq / Hz (0 to 4000)]

- 1000 states/model (→ 10^6 transition probs.)
- simplify by modeling subbands (coupled HMM)?


Speech Fragment Recognition

(Jon Barker & Martin Cooke, Sheffield)

• Signal separation is too hard! Instead:
- segregate features into partially-observed sources
- then classify

• Made possible by missing data recognition
- integrate over uncertainty in observations for optimal posterior distribution

• Goal: Relate clean speech models P(X|M) to speech-plus-noise mixture observations
- .. and make it tractable
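A minimal sketch of missing-data likelihood evaluation, assuming a diagonal-covariance Gaussian as the clean-speech model. Dimensions marked present use the density at the observed value; dimensions marked missing are integrated out. The optional bounded variant integrates only from 0 up to the observed value, on the assumption that the features are energies and the speech cannot exceed the mixture. All names and numbers are hypothetical.

```python
import math

def log_gauss(x, mean, var):
    """Log density of a 1-D Gaussian."""
    return -0.5 * (math.log(2.0 * math.pi * var) + (x - mean) ** 2 / var)

def gauss_cdf(x, mean, var):
    """Cumulative distribution of a 1-D Gaussian."""
    return 0.5 * (1.0 + math.erf((x - mean) / math.sqrt(2.0 * var)))

def missing_data_loglik(y, present, means, variances, bounded=False):
    """Log-likelihood of y under a diagonal Gaussian with missing dimensions."""
    total = 0.0
    for yd, keep, mu, var in zip(y, present, means, variances):
        if keep:
            total += log_gauss(yd, mu, var)      # reliable: evaluate density
        elif bounded:
            # unreliable: probability mass of the model between 0 and y
            mass = gauss_cdf(yd, mu, var) - gauss_cdf(0.0, mu, var)
            total += math.log(max(mass, 1e-300))
        # else: full marginalization, which contributes log(1) = 0
    return total

means = [5.0, 5.0, 5.0]
variances = [1.0, 1.0, 1.0]
y = [5.2, 9.0, 4.9]   # the middle value is dominated by noise

ll_all = missing_data_loglik(y, [True, True, True], means, variances)
ll_marg = missing_data_loglik(y, [True, False, True], means, variances)
ll_bound = missing_data_loglik(y, [True, False, True], means, variances,
                               bounded=True)
```

Masking the corrupted dimension removes its large penalty, so the clean model can still score well on a noisy observation.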


Comparing different segregations

• Standard classification chooses between models M to match source features X

$$ M^* = \arg\max_M P(M \mid X) = \arg\max_M P(X \mid M) \cdot \frac{P(M)}{P(X)} $$

• Mixtures → observed features Y, segregation S, all related by P(X|Y,S)

[Figure: Observation Y(f), Source X(f) and Segregation S plotted against freq]

- spectral features allow clean relationship

• Joint classification of model and segregation:

$$ P(M, S \mid Y) = P(M) \int P(X \mid M) \cdot \frac{P(X \mid Y, S)}{P(X)} \, dX \cdot P(S \mid Y) $$

- integral collapses in several cases...


Calculating fragment matches

$$ P(M, S \mid Y) = P(M) \int P(X \mid M) \cdot \frac{P(X \mid Y, S)}{P(X)} \, dX \cdot P(S \mid Y) $$

• P(X|M) - the clean-signal feature model

• P(X|Y,S)/P(X) - is X ‘visible’ given segregation?

• Integration collapses some bands...

• P(S|Y) - segregation inferred from observation
- just assume uniform, find S for most likely M
- or: use extra information in Y to distinguish S’s, e.g. harmonicity, onset grouping

• Result:
- probabilistically-correct relation between clean-source models P(X|M) and inferred, recognized source + segregation P(M,S|Y)
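The joint search over model M and segregation S can be sketched on a toy problem. This is an illustration under strong assumptions, not the decoder described in the talk: P(S|Y) is uniform, the prior P(X) is uniform so it cancels up to a constant, each model is a single diagonal Gaussian, and a masked dimension is scored by the model's average density between 0 and the observed value. The models, fragments and observation are all hypothetical.

```python
import itertools
import math

def log_gauss(x, mean, var):
    return -0.5 * (math.log(2.0 * math.pi * var) + (x - mean) ** 2 / var)

def gauss_cdf(x, mean, var):
    return 0.5 * (1.0 + math.erf((x - mean) / math.sqrt(2.0 * var)))

def log_match(y, present, means, variances):
    """Log score of one model for one segregation (observations must be > 0)."""
    total = 0.0
    for yd, keep, mu, var in zip(y, present, means, variances):
        if keep:
            total += log_gauss(yd, mu, var)
        else:
            # masked: average model density over the feasible range [0, y]
            mass = gauss_cdf(yd, mu, var) - gauss_cdf(0.0, mu, var)
            total += math.log(max(mass, 1e-300) / yd)
    return total

def decode(y, fragments, models, log_prior):
    """Exhaustive search over fragment labelings and models."""
    best = None
    for labels in itertools.product([True, False], repeat=len(fragments)):
        present = [False] * len(y)
        for frag, is_source in zip(fragments, labels):
            for d in frag:
                present[d] = is_source
        for name, (means, variances) in models.items():
            score = log_prior[name] + log_match(y, present, means, variances)
            if best is None or score > best[0]:
                best = (score, name, labels)
    return best

models = {
    "one": ([8.0, 8.0, 2.0, 2.0], [1.0] * 4),
    "two": ([2.0, 2.0, 8.0, 8.0], [1.0] * 4),
}
log_prior = {"one": math.log(0.5), "two": math.log(0.5)}
fragments = [[0, 1], [2, 3]]      # two fragments, each covering two channels
y = [8.1, 7.9, 6.0, 6.2]          # channels 2 and 3 are dominated by noise

best_score, best_model, best_labels = decode(y, fragments, models, log_prior)
```

In this example the search selects model "one" with the first fragment assigned to the source and the second treated as background. Labeling whole fragments rather than individual cells keeps the search at 2^F labelings for F fragments.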


Speech fragment decoder results

• Simple P(S|Y) model forces contiguous regions to stay together
- big efficiency gain when searching S space

[Figure: processing example with labels "1754" + noise, SNR mask, Fragments, Fragment Decoder, "1754"]

[Figure: AURORA 2000 - Test Set A; axes: SNR / dB (-5 to 20, clean), WER / % (0 to 90); curve labels: HTK clean training, MD Soft SNR, HTK multicondition]

• Clean-models-based recognition rivals trained-in-noise recognition

Multi-source decoding

• Search for more than one source

[Figure: observation Y(t) with two sources, showing state sequences q1(t), q2(t) and masks S1(t), S2(t)]

• Mutually-dependent data masks

• Use e.g. CASA features to propose masks
- locally coherent regions
- more powerful than Roweis masks

• Huge practical advantage over full search


Outline

1 Sound, Mixtures & Learning

2 Computational Auditory Scene Analysis

3 Recognizing Speech in Noise

4 Using Models in Parallel

5 The Listening Machine
- Everyday sound
- Alarms
- Music


5 The Listening Machine

• Smart PDA records everything

• Only useful if we have index, summaries
- monitor for particular sounds
- real-time description

• Scenarios
- personal listener → summary of your day
- future prosthetic hearing device
- autonomous robots

• Meeting data, ambulatory audio

Alarm sound detection

(Ellis 2001)

• Alarm sounds have particular structure
- people ‘know them when they hear them’
- clear even at low SNRs

[Figure: spectrogram s0n6a8+20 with alarms hrn01, bfr02, buz01; axes: time / s (0 to 25), freq / kHz (0 to 4), level / dB (-40 to 20)]

• Why investigate alarm sounds?
- they’re supposed to be easy
- potential applications...

• Contrast two systems:
- standard, global features, P(X|M)
- sinusoidal model, fragments, P(M,S|Y)

Alarms: Results

[Figure: Restaurant+ alarms (snr 0 ns 6 al 8); panels: spectrogram (freq / kHz, 0 to 4), MLP classifier output, Sound object classifier output; time axis: time/sec (20 to 50)]

• Both systems commit many insertions at 0dB SNR, but in different circumstances:

           Neural net system         Sinusoid model system
Noise      Del        Ins   Tot      Del        Ins   Tot
1 (amb)    7 / 25     2     36%      14 / 25    1     60%
2 (bab)    5 / 25     63    272%     15 / 25    2     68%
3 (spe)    2 / 25     68    280%     12 / 25    9     84%
4 (mus)    8 / 25     37    180%     9 / 25     135   576%
Overall    22 / 100   170   192%     50 / 100   147   197%
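The Tot column in the alarm results is consistent with (deletions + insertions) divided by the number of reference alarms. That formula is inferred from the numbers rather than stated on the slide. The short check below recomputes the per-noise and overall totals; the variable and function names are hypothetical.

```python
# (deletions, insertions, reference alarms) per noise type, from the table.
neural_net = {
    "1 (amb)": (7, 2, 25),
    "2 (bab)": (5, 63, 25),
    "3 (spe)": (2, 68, 25),
    "4 (mus)": (8, 37, 25),
}
sinusoid = {
    "1 (amb)": (14, 1, 25),
    "2 (bab)": (15, 2, 25),
    "3 (spe)": (12, 9, 25),
    "4 (mus)": (9, 135, 25),
}

def total_error_percent(deletions, insertions, n_ref):
    """Total error as a percentage of the reference alarm count."""
    return 100.0 * (deletions + insertions) / n_ref

def overall(rows):
    """Pool the counts over all noise types and recompute the total."""
    d = sum(r[0] for r in rows.values())
    i = sum(r[1] for r in rows.values())
    n = sum(r[2] for r in rows.values())
    return d, i, n, total_error_percent(d, i, n)

nn_overall = overall(neural_net)
sin_overall = overall(sinusoid)
```

Because insertions are unbounded, totals above 100% are possible. The music condition for the sinusoid model system (135 insertions against 25 alarms) is the clearest case.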

Music Applications

• Music as a complex, information-rich sound

• Applications of separation & recognition:
- note/chord detection & classification

[Figure: DYWMB: Alignments to MIDI note 57 mapped to Orig Audio; spectrogram excerpts; axes: freq / kHz (0 to 2), time positions between 23 and 89]

- singing detection (→ genre identification ...)

[Figure: Track 117 - Aimee Mann (dynvox=Aimee, unseg=Aimee); time axis: time / sec (0 to 200); row labels: true voice, Michael Penn, The Roots, The Moles, Eric Matthews, Arto Lindsay, Oval, Jason Falkner, Built to Spill, Beck, XTC, Wilco, Aimee Mann, The Flaming Lips, Mouse on Mars, Dj Shadow, Richard Davies, Cornelius, Mercury Rev, Belle & Sebastian, Sugarplastic, Boards of Canada]

Summary

• Sound
- .. contains much, valuable information at many levels
- intelligent systems need to use this information

• Mixtures
- .. are an unavoidable complication when using sound
- looking in the right time-frequency place to find points of dominance

• Learning
- need to acquire constraints from the environment
- recognition/classification as the real task


References

A. Bregman. Auditory Scene Analysis, MIT Press, 1990.

A. Bell and T. Sejnowski. “An information-maximization approach to blind separation and blind deconvolution”, Neural Computation, 7:1129-1159, 1995.
http://citeseer.nj.nec.com/bell95informationmaximization.html

A. Berenzweig, D. Ellis and S. Lawrence. “Using Voice Segments to Improve Artist Classification of Music”, Proc. AES-22 Intl. Conf. on Virt., Synth., and Ent. Audio, Espoo, Finland, June 2002.
http://www.ee.columbia.edu/~dpwe/pubs/aes02-aclass.pdf

A. Berenzweig, D. Ellis and S. Lawrence. “Anchor Space for Classification and Similarity Measurement of Music”, Proc. ICME-03, Baltimore, July 2003.
http://www.ee.columbia.edu/~dpwe/pubs/icme03-anchor.pdf

M. Cooke and G. Brown. “Computational auditory scene analysis: Exploiting principles of perceived continuity”, Speech Communication, 13:391-399, 1993.

D. Ellis. Prediction-driven computational auditory scene analysis, Ph.D. dissertation, MIT, 1996.
http://www.ee.columbia.edu/~dpwe/pubs/pdcasa.pdf

D. Ellis. “Detecting Alarm Sounds”, Proc. Workshop on Consistent & Reliable Acoustic Cues CRAC-01, Denmark, Sept. 2001.
http://www.ee.columbia.edu/~dpwe/pubs/crac01-alarms.pdf

M. Gales and S. Young. “Robust continuous speech recognition using parallel model combination”, IEEE Tr. Speech and Audio Proc., 4(5):352-359, Sept. 1996.
http://citeseer.nj.nec.com/gales96robust.html

H. Hermansky, D. Ellis and S. Sharma. “Tandem connectionist feature extraction for conventional HMM systems”, Proc. ICASSP, Istanbul, June 2000.
http://citeseer.nj.nec.com/hermansky00tandem.html
