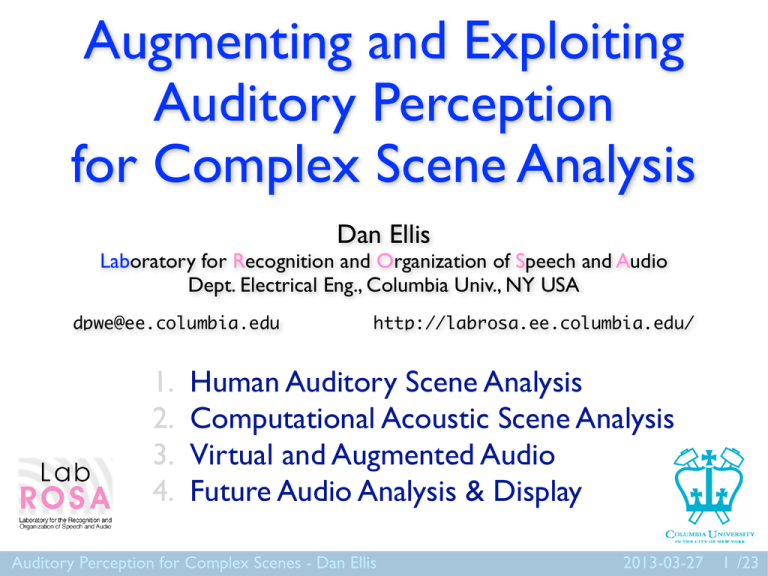

Augmenting and Exploiting Auditory Perception for Complex Scene Analysis


Dan Ellis
Laboratory for Recognition and Organization of Speech and Audio
Dept. Electrical Eng., Columbia Univ., NY USA
dpwe@ee.columbia.edu
http://labrosa.ee.columbia.edu/

1. Human Auditory Scene Analysis
2. Computational Acoustic Scene Analysis
3. Virtual and Augmented Audio
4. Future Audio Analysis & Display

Auditory Perception for Complex Scenes - Dan Ellis, 2013-03-27

1. Human Auditory Scene Analysis

“Imagine two narrow channels dug up from the edge of a lake, with handkerchiefs stretched across each one. Looking only at the motion of the handkerchiefs, you are to answer questions such as: How many boats are there on the lake and where are they?” (after Bregman ’90)

• Now: Hearing as the model for machine perception
• Future: Machines to enhance human perception

Auditory Scene Analysis
Bregman ’90; Darwin & Carlyon ’95

• Listeners organize sound mixtures into discrete perceived sources based on within-signal cues (audio + ...)
  common onset + continuity
  harmonicity
  spatial, modulation, ...
  learned “schema”

[Figure: spectrogram of reynolds-mcadams-dpwe.wav; axes: time / sec, freq / kHz, level / dB]

Spatial Hearing
Blauert ’96

• People perceive sources based on cues
  spatial (binaural): ITD, ILD
  head shadow (high freq)
  path length difference

[Figure: source with paths to the left (L) and right (R) ears; Left and Right waveforms of shatr78m3 against time / s]

Human Performance: Spatial Info
Brungart et al. ’02

• Task: Coordinate Response Measure
  “Ready Baron go to green eight now”
  256 variants, 16 speakers
  correct = color and number for “Baron”
  crm-11737+16515.wav
• Accuracy as a function of spatial separation:
  A, B same speaker

Human Performance: Source Info
Brungart et al. ’01

• CRM varying the level and voice character (same spatial location)
  Monaural Informational Masking
  Level Differences
  Level difference can help in speech segregation
  energetic vs. informational masking
  Listen for the quieter talker!

Human Hearing: Limitations

• Sensor number: just 2 ears
• Sensor location: short, horizontal baseline
• Sensor performance: local dynamic range
• Processing: Attention & Memory limits
  integration time

[Figure: masking tone, masked threshold and absolute threshold; axes: log freq, Intensity / dB]

2. Computational Scene Analysis
Brown & Cooke ’94; Okuno et al. ’99; Hu & Wang ’04; ...

• Central idea: Segment time-frequency into sources based on perceptual grouping cues

[Figure: block diagram with stages Segment and Group. input mixture → Front end → signal features (maps) → Object formation → discrete objects → Grouping rules → Source groups; feature maps over freq and time: onset, period, frq.mod, ...]
  principal cue is harmonicity

Spatial Info: Microphone Arrays
Benesty, Chen, Huang ’08

• If interference is diffuse, can simply boost energy from target direction
  e.g. shotgun mic - delay-and-sum
  off-axis spectral coloration
  many variants - filter & sum, sidelobe cancelation ...

[Figure: delay-and-sum chain of delay (D) and sum (+) stages, Δx = c · D; off-axis responses on a 0 to -40 scale for λ = 4D, λ = 2D, λ = D]

Independent Component Analysis
Bell & Sejnowski ’95; Smaragdis ’98

• Separate “blind” combinations by maximizing independence of outputs
• Kurtosis as a measure of independence?
  $\mathrm{kurt}(y) = E\left[\left(\frac{y - \mu}{\sigma}\right)^{4}\right] - 3$

[Figure: mixtures m1, m2 multiplied by the matrix [a11 a12; a21 a22] to give s1, s2, adapted along −∂MutInfo/∂a; panels: “Mixture Scatter” (mix 1 vs. mix 2, with s1 and s2 directions marked) and “Kurtosis vs. ...”]

Environmental Scene Analysis
Ellis ’96

• Find the pieces a listener would report

[Figure: time-frequency display of the “City” sound (f/Hz against time/s, level in dB) and its extracted elements: Wefts1-4, Weft5, Wefts6,7, Weft8, Wefts9-12, Noise1, Noise2, Click1; listener-reported events: Horn1 (10/10), Horn2 (5/10), Horn3 (5/10), Horn4 (8/10), Horn5 (10/10), Crash (10/10), Squeal (6/10), Truck (7/10)]

“Superhuman” Speech Analysis
Kristjansson, Hershey et al. ’06

• IBM’s 2006 Iroquois speech separation system
  Key features:
  detailed state combinations
  large speech recognizer
  exploits grammar constraints
  34 per-speaker models
• “Superhuman” performance
  ... in some conditions

[Figure: results for Same Gender Speakers at 6 dB, 3 dB, 0 dB, -3 dB, -6 dB, -9 dB; legend: SDL Recognizer, No dynamics, Acoustic dyn., Grammar dyn., Human]

[Figure: page of a paper shown on the slide; its recovered text follows]

ABSTRACT

We present a framework for speech enhancement and robust speech recognition that exploits the harmonic structure of speech. We achieve substantial gains in signal to noise ratio (SNR) of enhanced speech as well as considerable gains in accuracy of automatic speech recognition in very noisy conditions.

The method exploits the harmonic structure of speech by employing a high frequency resolution speech model in the log-spectrum domain and reconstructs the signal from the estimated posteriors of the clean signal and the phases from the original noisy signal. We achieve a gain in signal to noise ratio of 8.38 dB for enhancement of speech at 0 dB. We also present recognition results on the Aurora 2 data-set. At 0 dB SNR, we achieve a reduction of relative word error rate of 43.75% over the baseline, and 15.90% over the equivalent low-resolution algorithm.

1. INTRODUCTION

A long standing goal in speech enhancement and robust speech recognition has been to exploit the harmonic structure of speech to improve intelligibility and increase recognition accuracy.

The source-filter model of speech assumes that speech is produced by an excitation source (the vocal cords) which has strong regular harmonic structure during voiced phonemes. The overall shape of the spectrum is then formed by a filter (the vocal tract). In non-tonal languages the filter shape alone determines which phone component of a word is produced (see Figure 2). The source on the other hand introduces fine structure in the frequency spectrum that in many cases varies strongly among different utterances of the same phone.

This fact has traditionally inspired the use of smooth representations of the speech spectrum, such as the Mel-frequency cepstral coefficients, in an attempt to accurately estimate the filter component of speech in a way that is invariant to the non-phonetic effects of the excitation [1].

There are two observations that motivate the consideration of high frequency resolution modelling of speech for noise robust speech recognition and enhancement. First is the observation that most noise sources do not have harmonic structure similar to that of voiced speech. Hence, voiced speech sounds should be more easily distinguishable from environmental noise in a high dimensional signal space (footnote 1).

A second observation is that in voiced speech, the signal power is concentrated in areas near the harmonics of the fundamental frequency, which show up as parallel ridges in ...

Fig. 1. The noisy input vector (dot-dash line), the corresponding clean vector (solid line) and the estimate of the clean speech (dotted line), with shaded area indicating the uncertainty of the estimate (one standard deviation). Notice that the uncertainty on the estimate is considerably larger in the valleys between the harmonic peaks. This reflects the lower SNR in these regions. The vector shown is frame 100 from Figure 2.

[Figure: “Noisy vector, clean vector and estimate of clean vector”; axes: Frequency [Hz], Energy [dB]; legend: x estimate, noisy vector, clean vector]

Footnote 1: Even if the interfering signal is another speaker, the harmonic structure of the two signals may differ at different times, and the long term pitch contour of the speakers may be exploited to separate the two sources [2].

Meeting Recorders
Janin et al. ’03; Ellis & Liu ’04

• Distributed mics in meeting room
• Between-mic correlations locate sources

[Figure: “mr-2000-11-02-1440: PZM xcorr lags” (lag 1-2 / ms against lag 3-4 / ms); “Inferred talker positions (x=mic)” with axes x/m, y/m, z/m]

Environmental Sound Classification
Ellis, Zheng, McDermott ’11

• Trained models using e.g. “texture” features
• Paradoxical results

[Figure: feature pipeline. Sound → Automatic gain control → mel filterbank (18 chans), then three branches: FFT → Octave bins 0.5, 1, 2, 4, 8, 16 Hz → Modulation energy (18 x 6); Histogram → mean, var, skew, kurt (18 x 4); Envelope correlation → Cross-band correlations (318 samples)]

Audio Lifelogs
Lee & Ellis ’04

• Body-worn continuous recording
• Long time windows for episode-scale segmentation, clustering, and classification

[Figure: timelines for 2004-09-13 and 2004-09-14, 09:00 to 18:00, segmented into labeled episodes such as preschool, cafe, lecture, office, outdoor, group, lab, L2, DSP03, compmtg, meeting2, postlec, and named people (Ron, Manuel, Mike, Arroyo?, Sambarta?, Lesser)]

Machines: Current Limitations

• Separating overlapping sources
  blind source separation
• Separating individual events
  segmentation
• Learning & classifying source categories
  recognition of individual sounds and classes

3. Virtual and Augmented Audio
Brown & Duda ’98

• Audio signals can be effectively spatialized by convolving with Head-Related Impulse Responses (HRIRs)
• Auditory localization also uses head-motion cues

[Figure: HRIR_021 Left and Right @ 0 el as a function of Azimuth / deg (LEFT to RIGHT) and time / ms; HRIR_021 Left and Right @ 0 el 0 az against time / ms]

Augmented Audio Reality
Härmä et al. ’04; Hearium ’12

• Pass-through and/or mix-in

[Figure: excerpts from papers (Proc. of the 11th Int. Conference on Digital Audio Effects (DAFx-08), Espoo, Finland, September 1-4; Journal of Electrical and Computer Engineering). Left: headset used in the evaluation, with microphone on the left and headphone outlet on the right. Middle: headset fitted in ear. Right: prototype ARA mixer. Block diagram of an ARA system: virtual sounds, virtual acoustic processing, ARA mixer, preprocessing for transmission, signal to distant user. Product images: Hearium headset, QuietPro+]

Recovered excerpt: “Although multimodal communication is in most cases preferred over a single modality, one of the strengths of ARA techniques is that they can be used practically anywhere, anytime, hands-free, and eyes-free.”

4. Future Audio Analysis & Display

• Better Scene Analysis
  overcoming the limitations of human hearing: sensors, geometry
• Challenges
  fine source discrimination
  modeling & classification (language ID?)
  Integrating through time: single location, sparse sounds

[Figure: Sound mixture separated into Object 1 percept, Object 2 percept, Object 3 percept]

Ad-Hoc Mic Array

• Multiple sensors, real-time sharing
  long-baseline beamforming
• Challenges
  precise relative (dynamic) localization
  precise absolute registration

Sound Visualization
O’Donovan et al. ’07

• Making acoustic information visible
  “synesthesia”
  www.ultra-gunfirelocator.com
• Challenges
  source formation & classification
  registration: sensors, display

Auditory Display

• Acoustic channel complements vision
  acoustic alarms
  verbal information
  “zoomed” ambience
  instant replay
  http://www.youtube.com/watch?v=v1uyQZNg2vE
• Challenges
  Information management & prioritization
  maximally exploit perceptual organization

Summary

• Human Scene Analysis
  Spatial & Source information
• Computational Scene Analysis
  Spatial & Source information
  World knowledge
• Augmented Audition
  Selective pass-through + insertion

References

• A. Bregman, Auditory Scene Analysis, MIT Press, 1990.
• C. Darwin & R. Carlyon, “Auditory grouping,” Hbk of Percep. & Cogn. 6: Hearing, 387–424, Academic Press, 1995.
• J. Blauert, Spatial Hearing (revised ed.), MIT Press, 1996.
• D. Brungart & B. Simpson, “The effects of spatial separation in distance on the informational and energetic masking of a nearby speech signal,” JASA 112(2), Aug. 2002.
• D. Brungart, B. Simpson, M. Ericson, K. Scott, “Informational and energetic masking effects in the perception of multiple simultaneous talkers,” JASA 110(5), Nov. 2001.
• G. Brown & M. Cooke, “Computational auditory scene analysis,” Comp. Speech & Lang. 8(4), 297–336, 1994.
• H. Okuno, T. Nakatani, T. Kawabata, “Listening to two simultaneous speeches,” Speech Communication 27, 299–310, 1999.
• G. Hu and D.L. Wang, “Monaural speech segregation based on pitch tracking and amplitude modulation,” IEEE Tr. Neural Networks 15(5), Sep. 2004.
• J. Benesty, J. Chen, Y. Huang, Microphone Array Signal Processing, Springer, 2008.
• A. Bell, T. Sejnowski, “An information-maximization approach to blind separation and blind deconvolution,” Neural Computation 7(6), 1129–1159, 1995.
• P. Smaragdis, “Blind separation of convolved mixtures in the frequency domain,” Intl. Wkshp. on Indep. & Artif. Neural Networks, Tenerife, Feb. 1998.
• D. Ellis, “Prediction-Driven Computational Auditory Scene Analysis,” Ph.D. thesis, MIT EECS, 1996.
• T. Kristjansson, J. Hershey, P. Olsen, S. Rennie, R. Gopinath, “Super-human multi-talker speech recognition: The IBM 2006 speech separation challenge system,” Proc. Interspeech, 97–100, 2006.
• A. Janin, D. Baron, J. Edwards, D. Ellis, D. Gelbart, N. Morgan, B. Peskin, T. Pfau, E. Shriberg, A. Stolcke, C. Wooters, “The ICSI Meeting Corpus,” Proc. ICASSP-03, pp. I-364–367, 2003.
• D. Ellis and J. Liu, “Speaker turn segmentation based on between-channel differences,” NIST Meeting Recognition Workshop @ ICASSP, pp. 112–117, Montreal, May 2004.
• D. Ellis, X. Zheng, and J. McDermott, “Classifying soundtracks with audio texture features,” Proc. IEEE ICASSP, pp. 5880–5883, Prague, May 2011.
• D. Ellis and K.S. Lee, “Minimal-Impact Audio-Based Personal Archives,” First ACM Workshop on Continuous Archiving and Recording of Personal Experiences (CARPE-04), New York, pp. 39–47, Oct. 2004.
• C. P. Brown, R. O. Duda, “A structural model for binaural sound synthesis,” IEEE Tr. Speech & Audio 6(5), 476–488, 1998.
• A. Härmä, J. Jakka, M. Tikander, M. Karjalainen, T. Lokki, J. Hiipakka, G. Lorho, “Augmented reality audio for mobile and wearable appliances,” J. Aud. Eng. Soc. 52(6), 618–639, 2004.
• A. O’Donovan, R. Duraiswami, J. Neumann, “Microphone arrays as generalized cameras for integrated audio visual processing,” IEEE CVPR, 1–8, 2007.
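
As a rough illustration of the kurtosis measure named on the Independent Component Analysis slide, the Python sketch below estimates excess kurtosis, E[((y − μ)/σ)^4] − 3, for two independent synthetic sources and for a 45-degree rotation mix of them. Everything here is an assumption made for illustration and is not taken from the talk: the function names (`excess_kurtosis`, `laplace_samples`, `rotate_mix`), the choice of Laplacian sources, and the mixing angle.

```python
import math
import random


def excess_kurtosis(y):
    """Sample estimate of E[((y - mu) / sigma)^4] - 3 (0 for a Gaussian)."""
    n = len(y)
    mu = sum(y) / n
    var = sum((v - mu) ** 2 for v in y) / n
    m4 = sum((v - mu) ** 4 for v in y) / n
    return m4 / (var ** 2) - 3.0


def laplace_samples(n, rng):
    """Hypothetical sparse source: the difference of two unit exponentials
    is Laplace distributed, which has positive excess kurtosis."""
    return [rng.expovariate(1.0) - rng.expovariate(1.0) for _ in range(n)]


def rotate_mix(s1, s2, theta):
    """Mix two sources with a 2x2 rotation by theta (an assumed, idealized mixing)."""
    c, s = math.cos(theta), math.sin(theta)
    m1 = [c * a - s * b for a, b in zip(s1, s2)]
    m2 = [s * a + c * b for a, b in zip(s1, s2)]
    return m1, m2


rng = random.Random(0)  # fixed seed so repeated runs give the same numbers
s1 = laplace_samples(50000, rng)
s2 = laplace_samples(50000, rng)
m1, m2 = rotate_mix(s1, s2, math.pi / 4)

for name, sig in [("s1", s1), ("s2", s2), ("mix 1", m1), ("mix 2", m2)]:
    print(f"{name}: excess kurtosis = {excess_kurtosis(sig):.2f}")
```

In this toy setting the mixtures should come out with clearly lower excess kurtosis than either source, since summing independent non-Gaussian signals moves the result toward a Gaussian. That is the intuition behind using the kurtosis of the outputs as a proxy for independence; it is not a substitute for the mutual-information criterion shown on the slide.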