A Burst Scheduling Access Reordering Mechanism


Jun Shao and Brian T. Davis

Department of Electrical and Computer Engineering

Michigan Technological University

{jshao, btdavis}@mtu.edu

Abstract

Utilizing the nonuniform latencies of SDRAM devices,

access reordering mechanisms alter the sequence of main

memory access streams to reduce the observed access latency. Using a revised M5 simulator with an accurate

SDRAM module, the burst scheduling access reordering

mechanism is proposed and compared to conventional in order memory scheduling as well as existing academic and industrial access reordering mechanisms. With burst scheduling, memory accesses to the same rows of the same banks

are clustered into bursts to maximize bus utilization of the

SDRAM device. Subject to a static threshold, memory reads

are allowed to preempt ongoing writes for reduced read latency, while qualified writes are piggybacked at the end of

bursts to exploit row locality in writes and prevent write

queue saturation. Performance improvements contributed

by read preemption and write piggybacking are identified. Simulation results show that burst scheduling reduces

the average execution time of selected SPEC CPU2000

benchmarks by 21% over conventional bank in order memory scheduling. Burst scheduling also outperforms Intel’s

patented out of order memory scheduling and the row hit

access reordering mechanism by 11% and 6% respectively.

1. Introduction

Memory performance can be measured in two ways:

bandwidth and latency. Memory bandwidth can be largely

addressed by increasing resources, using increased frequency, wider busses, dual or quad channels. However, reducing memory latency often requires reducing the device

size. Although caches can hide the long main memory latency, cache misses may require hundreds of CPU cycles

and cause pipeline stalls. Therefore main memory access

latency remains a factor limiting system performance [21].

Due to the 3-D (bank, row, column) structure, modern

SDRAM devices have nonuniform access latencies [3, 4].

The access latency depends upon the location of requested

data and the state of SDRAM device. Two adjacent memory accesses directed to the same row of the same bank can

be completed faster than two accesses directed to different

rows because the accessed row data can be kept in an

active row, which speeds up subsequent accesses to that row.

In addition, accesses to unique banks can be pipelined, thus

two accesses directed to unique banks may have shorter latency than two accesses directed to the same bank.

With aggressive out of order execution processors and

non-blocking caches, multiple main memory accesses can

be issued and outstanding while the main memory is serving the previous access. Compared with conventional in

order access scheduling, memory access reordering mechanisms execute these outstanding memory accesses in an

order which attempts to reduce execution time. Through exploiting SDRAM row locality and bank parallelism, access

reordering mechanisms significantly reduce observed main

memory access latency and improve the effective memory

bandwidth. Access reordering mechanisms do not require

a large amount of chip area and only need modifications to

the memory controller.

This paper makes the following contributions:

• Studies and identifies performance contributions made

by access reordering mechanisms.

• Introduces burst scheduling which creates bursts by

clustering accesses directed to the same rows of the

same banks to achieve a high data bus utilization.

Reads are allowed to preempt ongoing writes while

qualified writes are piggybacked at the end of bursts.

• Compares burst scheduling with conventional bank in

order memory scheduling as well as existing access

reordering mechanisms, including published academic

and industrial out of order scheduling.

• Explores the design space of burst scheduling by using

a static threshold to control read preemption and write

piggybacking. Determines the threshold that yields the

shortest execution time by experiments.

The rest of the paper is organized as follows. Section 2 discusses the background of modern SDRAM devices, memory access scheduling and related work. Section 3 introduces burst scheduling. Section 4 and Section 5

present the experimental environment and simulation results.

Conclusions are explored in Section 6 based on the results.

Section 7 briefly discusses future work.

2. Background

Modern SDRAM devices store data in arrays (banks)

which can be indexed by row address and column address [3, 4]. An access to the SDRAM device may require

three transactions besides the data transfer: bank precharge,

row activate and column access [10]. A bank precharge

charges and prepares the bank. A row activate copies an

entire row data from the array to the sense amplifiers which

function like a cache. Then one or more column accesses

can access the row data.

Depending on the state of the SDRAM device, a memory

access could be a row hit, row conflict or row empty and

experiences different latencies [14]. A row hit occurs when

the bank is open and an access is directed to the same row

as the last access to the same bank. A row conflict occurs

when an access goes to a different row than the last access

to the same bank. If the bank is closed (precharged) then a

row empty occurs. Row hits only require column accesses,

while all three transactions are required for row conflicts;

therefore, row hits have shorter latencies than row conflicts.
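The three cases can be written as a small classifier. This is only an illustrative sketch: the function name `classify_access` and the convention that `open_row` is `None` for a closed (precharged) bank are assumptions made for the example, not part of the paper.

```python
from typing import Optional


def classify_access(open_row: Optional[int], target_row: int) -> str:
    """Classify an access against the state of its target bank.

    open_row is the row currently held in the sense amplifiers, or None
    when the bank is closed (precharged). Both names are hypothetical.
    """
    if open_row is None:
        return "row empty"      # bank precharged: row activate + column access
    if open_row == target_row:
        return "row hit"        # same row as the last access: column access only
    return "row conflict"       # different row: precharge + activate + column access


print(classify_access(None, 3))
print(classify_access(3, 3))
print(classify_access(3, 4))
```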

After completing a memory access, the bank can be left

open or closed by a bank precharge, subject to the memory controller policy. One of two static controller policies,

Open Page (OP) and Close Page Autoprecharge (CPA), typically makes this decision [20]. Table 1 summarizes possible SDRAM access latencies given SDRAM busses are idle,

where tRP , tRCD and tCL are the timing constraints associated with bank precharge, row activate and column access

respectively [10].

Table 1. Possible SDRAM access latencies

| Controller policy | Row hit | Row empty | Row conflict |
|---|---|---|---|
| OP | tCL | tRCD + tCL | tRP + tRCD + tCL |
| CPA | N/A | tRCD + tCL | N/A |
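The latencies in Table 1 can be expressed as a lookup, assuming idle SDRAM busses as the table does. The function name `idle_bus_latency` and the string labels are hypothetical; the 5-5-5 values in the usage lines are the timings of the simulated PC2-6400 device.

```python
def idle_bus_latency(policy: str, state: str, t_rp: int, t_rcd: int, t_cl: int) -> int:
    """Access latency in memory cycles for a controller policy and row state."""
    if policy == "CPA":
        # With Close Page Autoprecharge every access finds the bank precharged.
        if state != "row empty":
            raise ValueError("CPA only produces row empties")
        return t_rcd + t_cl
    if policy != "OP":
        raise ValueError("unknown policy: " + policy)
    latencies = {
        "row hit": t_cl,
        "row empty": t_rcd + t_cl,
        "row conflict": t_rp + t_rcd + t_cl,
    }
    return latencies[state]


for state in ("row hit", "row empty", "row conflict"):
    print(state, idle_bus_latency("OP", state, t_rp=5, t_rcd=5, t_cl=5))
```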

Multiple internal banks allow accesses to unique banks

to be executed in parallel. A set of SDRAM devices concatenated to populate the system memory bus is known as

a rank, which shares address bus and data bus with other

ranks. For systems that support dual channels, different

channels have unique busses. Therefore parallelism exists

in the memory subsystem between banks and/or channels.

Main memory access streams are comprised of cache

misses from the lowest level cache and have been shown

to have significant spatial and temporal locality even after

being filtered by cache(s) [16]. These characteristics of the

main memory create a design space where parallelism and

locality can be exploited by access reordering mechanisms

to reduce the main memory access latency.

Throughout the rest of this paper, the term access refers

to a memory read or write issued by the lowest level cache.

An access may require several transactions depending upon

the state of the SDRAM devices.

2.1. Memory Access Scheduling

The memory controller, usually located on the north

bridge or on the CPU die, generates the required transactions for each access and schedules them on the SDRAM

busses. SDRAM busses are split transaction busses; therefore, transactions belonging to different accesses can be interleaved.

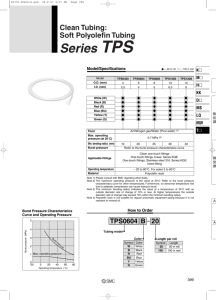

Figure 1. Memory access scheduling. (a) In order scheduling without interleaving (28 cycles). (b) Out of order scheduling with interleaving (16 cycles). Accesses: Access0 to bank0 row0, Access1 to bank1 row0, Access2 to bank0 row1, Access3 to bank0 row0. Legend: P = bank precharge, R = row activate, C = column access, Dx = data.

How the memory controller schedules transactions of accesses impacts the performance, as illustrated in Figure 1.

In this example four accesses are to be scheduled. Access0

and access1 are row empties; access2 and access3 are row

conflicts. The SDRAM device has timing constraints of 2-2-2 (tCL-tRCD-tRP) and a burst length of 4 (2 cycles with

double data rate). In Figure 1(a), the controller performs

these accesses strictly in order and does not interleave any

transactions. It takes 28 memory cycles to complete four

accesses. While in order scheduling is easy to implement,

it is inefficient because of the low bus utilization.

In Figure 1(b), the same four accesses are scheduled out

of order. Access3 is scheduled prior to access1, which

turns access3 from a row conflict into a row hit. The transactions of different accesses are also interleaved to maximize SDRAM bus utilization. As a result, only 16 memory

cycles are needed to complete the same four accesses. It is

possible that some accesses result in increased latency due

to access reordering, however the average access latency

should be reduced.
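The 28-cycle figure for in order scheduling can be reproduced with a short calculation. The sketch below assumes that, without interleaving, each transaction occupies its full timing constraint and nothing overlaps; the function name `serialized_cycles` and the `(bank, row)` tuple encoding are hypothetical. It does not model the interleaving of Figure 1(b).

```python
T_CL, T_RCD, T_RP = 2, 2, 2   # timing constraints from the example (2-2-2)
DATA_CYCLES = 2               # burst length 4 at double data rate

# (bank, row) of access0..access3 as listed in Figure 1
FIGURE_1_ACCESSES = [(0, 0), (1, 0), (0, 1), (0, 0)]


def serialized_cycles(accesses):
    """Total cycles when accesses run one after another with no overlap."""
    open_rows = {}            # bank -> currently open row; all banks start closed
    total = 0
    for bank, row in accesses:
        current = open_rows.get(bank)
        if current is None:               # row empty
            overhead = T_RCD + T_CL
        elif current == row:              # row hit
            overhead = T_CL
        else:                             # row conflict
            overhead = T_RP + T_RCD + T_CL
        total += overhead + DATA_CYCLES
        open_rows[bank] = row
    return total


print(serialized_cycles(FIGURE_1_ACCESSES))  # 28, matching Figure 1(a)
```

Under the same no-overlap assumption, moving access3 directly behind access0 turns it into a row hit and gives 24 cycles; the remaining reduction to the 16 cycles of Figure 1(b) comes from interleaving transactions, which this sketch leaves out.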

2.2. Related Work


Other access reordering mechanisms exist. They are usually designed for special applications or hardware. Scott

Rixner et al. exploited the features of Virtual Channel

SDRAM devices [11] and proposed various access reordering policies to achieve a high memory bandwidth and low

memory latency for modern web servers [12]. Proposed

by Ibrahim Hur et al., the adaptive history-based memory

scheduler tracks the access pattern of recently scheduled

accesses and selects memory accesses matching the program’s mixture of reads and writes [7]. Zhichun Zhu et

al. proposed fine-grain priority scheduling, which splits and

maps memory accesses into different channels and returns

critical data first, to fully utilize the available bandwidth

and concurrency provided by Direct Rambus DRAM systems [24]. Sally McKee et al. described a Stream Memory

Controller system for streaming computations, which combines compile-time detection of streams with execution-time access reordering [9]. SDRAM access reordering and

prefetching were also proposed by Jahangir Hasan et al. to

increase row locality and reduce row conflict penalty for

network processors [6].

Other SDRAM related techniques such as SDRAM address mapping change the distribution of memory blocks in

the SDRAM address space to exploit the parallelism [19,

5, 23, 16]. A dynamic SDRAM controller policy predictor proposed by Ying Xu reduces main memory access latency by using a history based predictor similar to branch

predictors to make the decision whether or not to leave the

accessed row open for each access [22].

3. Burst Scheduling

In a packet switching network, large packets can improve

network throughput because the fraction of bandwidth used

to transfer packet headers (overhead) is reduced. If the three

SDRAM transactions (bank precharge, row activate and column access) are considered the overhead and the data transfer the payload, the same idea can be used in access

scheduling to improve bus utilization.

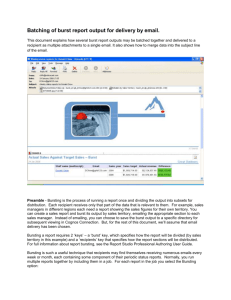

Figure 2. Burst scheduling. Transactions shown: P0, R0, C0, C1, C2, C3, C4 for Access0 to Access4. Labels: Overhead, Burst (payload).

As illustrated in Figure 2, the proposed burst scheduling clusters outstanding accesses directed to the same rows of the same banks into bursts. Accesses within a burst, except for the first one, are row hits and only require column access transactions. Data transfers of these accesses can be performed back to back on the data bus, resulting in a large payload and therefore improving data bus utilization. Increasing the row hit rate and maximizing the memory data bus utilization are the major design goals of burst scheduling.

Figure 3. Structure of burst scheduling. Components shown: memory accesses (read, write, write data), shared access pool, shared write data pool, per-bank arbiters (Bank0 to BankN), and the SDRAM transaction scheduler.
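The overhead-versus-payload argument can be made concrete with a deliberately simplified model: one fixed overhead per burst followed by back-to-back data transfers. The function name and the model itself are assumptions made for illustration; a real controller overlaps overhead with transfers from other banks, so this is not how the paper measures utilization.

```python
def burst_data_bus_utilization(n_accesses: int, overhead_cycles: int,
                               data_cycles_per_access: int) -> float:
    """Fraction of cycles spent transferring data for one isolated burst."""
    payload = n_accesses * data_cycles_per_access
    return payload / (overhead_cycles + payload)


# Example values: a row conflict on a 5-5-5 device (15 overhead cycles) and
# burst length 8 at double data rate (4 data cycles per access).
for n in (1, 2, 5, 10):
    print(n, round(burst_data_bus_utilization(n, 15, 4), 3))
```

In this model the utilization rises monotonically with the number of accesses in the burst, which is the intuition behind clustering row hits.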

Figure 3 shows the structure of burst scheduling. Outstanding accesses are stored in unique read queues and write

queues based on their target banks. The read and write

queues share a global access pool. A write data pool is used

to store the data associated with writes. The queues can be

implemented as linked lists. Depending upon the row index

of the access address, new reads will join existing bursts, or

new bursts containing single access will be created and appended at the end of the read queues. Bank arbiters select an

ongoing access from the read or write queue for each bank.

At each memory cycle a global transaction scheduler selects

an ongoing access from all bank arbiters and schedules the

next transactions of the access.

Newly arrived accesses can join existing bursts when

bursts are being scheduled. Bursts within a bank are sorted

based on the arrival time of the first access to prevent starvation to single access bursts or small bursts in the same bank.

However a large or an increasing burst can still delay small

bursts from other banks. Therefore burst scheduling interleaves bursts from different banks to give relatively equal

opportunity to all bursts. High data bus utilization can be

maintained during burst interleaving. However consideration must be taken to avoid bubble cycles due to certain

SDRAM timing constraints, i.e. rank to rank turnaround cycles introduced by DDR2 devices [8].

The following sections present the three subroutines of

the algorithm used in burst scheduling, which can be transformed into finite state machines for incorporation into the

SDRAM controller.

3.1. Access Enter Queue Subroutine

Figure 4 shows the access enter queue subroutine, which

is called when new accesses enter the queues. Because the

write queue serves as a write buffer, all reads must search

the write queue for possible hits, although write queue hits

happen infrequently due to the small queue size. When a

write queue hit occurs, a read is requesting the data at the

same location as a preceding write. The data from the latest

write (if there are multiple) will be forwarded to the read

such that the read can complete immediately. Missed reads

enter the read queue. If a read is directed to the same row

as an existing burst, the read will be appended to that burst.

Otherwise, a new burst composed of the read will be created

and appended to the read queue. All writes enter the write

queue in order and are completed from the view of the CPU.

    subroutine AccessEnterQueue(access)
        if access is a read then
            if hit in the write queue then
                forward the latest write data to access
                send response to access
            else if found an existing burst in read queue then
                append access to that burst
            else
                create a new burst
                append the new burst to read queue
            end if
        else
            append access to the write queue
            send response to access
        end if

Figure 4. Access enter queue subroutine
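A minimal Python sketch of the subroutine in Figure 4 follows, for a single bank. The class and field names (`Access`, `Burst`, `BankQueues`, `completed`) are hypothetical, plain lists stand in for the linked lists mentioned in the text, and matching a write queue hit on the pair (row, column) is an assumption about what "same location" means within a bank.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Access:
    is_read: bool
    row: int
    column: int
    data: Optional[bytes] = None
    completed: bool = False       # set once a response has been sent


@dataclass
class Burst:
    row: int
    reads: List[Access] = field(default_factory=list)


@dataclass
class BankQueues:
    read_queue: List[Burst] = field(default_factory=list)    # bursts, oldest first
    write_queue: List[Access] = field(default_factory=list)  # writes, oldest first


def access_enter_queue(bank: BankQueues, access: Access) -> None:
    if not access.is_read:
        # Writes enter the write queue in order and complete from the CPU's view.
        bank.write_queue.append(access)
        access.completed = True
        return
    # Reads search the write queue newest-first so the latest data is forwarded.
    for write in reversed(bank.write_queue):
        if (write.row, write.column) == (access.row, access.column):
            access.data = write.data
            access.completed = True
            return
    # Join an existing burst to the same row, if any.
    for burst in bank.read_queue:
        if burst.row == access.row:
            burst.reads.append(access)
            return
    # Otherwise create a single-access burst at the end of the read queue.
    bank.read_queue.append(Burst(row=access.row, reads=[access]))
```

For example, reads to rows 3, 4 and 3 arriving in that order produce two bursts, with both row 3 reads in the first one.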

3.2. Bank Arbiter Subroutine

Each bank has one ongoing access, which is the access for which transactions are currently being scheduled,

but have not yet been completed. The bank arbiter selects

the ongoing access from either the read queue or the write

queue, generally prioritizing reads over writes. Writes are

selected only when there are no outstanding reads in the

read queue, when the write queue is full or when doing write

piggybacking. The algorithm is given in Figure 5.

Two options, read preemption and write piggybacking,

are available to the bank arbiter. Read preemption allows a

newly arrived read to interrupt an ongoing write. The read

becomes the ongoing access and starts immediately, therefore reducing the latency to the read. Read preemption will

not affect the correctness of execution; the preempted write

will restart later.

    subroutine BankArbiter(ongoing access)
        if ongoing access == NULL then
            if write queue is full then
                ongoing access = oldest write in write queue
            else if write queue length > threshold and
                    last access was an end of burst and
                    any row hit in write queue then
                ongoing access = oldest row hit write
            else if write queue is not empty and
                    read queue is empty then
                ongoing access = oldest write in write queue
            else
                ongoing access = first read in next burst
            end if
        else if ongoing access is a write and
                read queue is not empty and
                write queue length < threshold then
            reset ongoing access
            ongoing access = first read in next burst
        end if

Figure 5. Bank arbiter subroutine

The major functionality of the write queue, besides hiding the write latency and reducing write traffic [17], is to

allow reads to bypass writes. When the write queue reaches

its capacity, the main memory cannot accept any new accesses, causing a possible CPU pipeline stall. Write piggybacking is designed to speed up the write process by appending

qualified writes at the end of bursts. The writes being appended must be directed to the same row as the burst, so

that they will not disturb the continuous row hits created by

the burst. If there are no qualified writes available, the next

burst will start. Write piggybacking reduces the probability

of write queue saturation and exploits the locality of row

hits from writes as well.

Read preemption and write piggybacking may conflict

with each other, i.e. a piggybacked write may be preempted

by a new read. A threshold is introduced to allow the bank

arbiter to switch dynamically between read preemption and

write piggybacking. When the write queue occupancy is

less than the threshold, read preemption is enabled. Otherwise, write piggybacking is enabled. Section 5.4 will have

a detailed study of the threshold.
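The arbitration rules of Figure 5, including the threshold that switches between read preemption and write piggybacking, can be sketched in Python as follows. All names (`Bank`, `read_bursts`, `last_was_end_of_burst`, `write_capacity`) are hypothetical. Two simplifications are assumed: the write queue capacity is tracked per bank rather than through the shared pool, and accesses stay in their queues while ongoing, so a preempted write can simply be selected again later.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Access:
    is_read: bool
    row: int


@dataclass
class Bank:
    read_bursts: List[List[Access]] = field(default_factory=list)  # oldest burst first
    write_queue: List[Access] = field(default_factory=list)        # oldest write first
    ongoing: Optional[Access] = None
    open_row: Optional[int] = None
    last_was_end_of_burst: bool = False


def bank_arbiter(bank: Bank, threshold: int, write_capacity: int) -> Optional[Access]:
    writes, bursts = bank.write_queue, bank.read_bursts
    if bank.ongoing is None:
        row_hit_writes = [w for w in writes if w.row == bank.open_row]
        if len(writes) >= write_capacity:
            bank.ongoing = writes[0]              # write queue full: drain oldest write
        elif len(writes) > threshold and bank.last_was_end_of_burst and row_hit_writes:
            bank.ongoing = row_hit_writes[0]      # write piggybacking
        elif writes and not bursts:
            bank.ongoing = writes[0]              # no reads outstanding
        elif bursts:
            bank.ongoing = bursts[0][0]           # first read in next burst
        # If both queues are empty there is nothing to select.
    elif not bank.ongoing.is_read and bursts and len(writes) < threshold:
        bank.ongoing = bursts[0][0]               # read preemption: the write restarts later
    return bank.ongoing
```

With a low threshold the arbiter favors piggybacking and rarely preempts; with a high threshold the opposite holds, which is the tradeoff the threshold study explores.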

3.3. Transaction Scheduler Subroutine

A transaction is considered as unblocked when all required timing constraints are met. At each memory cycle

the transaction scheduler selects from all banks one ongoing access for which the next transaction is unblocked and

schedules that transaction.

Table 2. Transactions priority table (1: the highest, 8: the lowest)

| Target relative to last scheduled access | Read: bank precharge | Read: row activate | Read: column access | Write: bank precharge | Write: row activate | Write: column access |
|---|---|---|---|---|---|---|
| Same bank | 5 | 5 | 1 | 6 | 6 | 3 |
| Same rank | 5 | 5 | 2 | 6 | 6 | 4 |
| Other ranks | 5 | 5 | 7 | 6 | 6 | 8 |

A static priority, as shown in Table 2, is used to select the ongoing access containing the next unblocked transaction to be scheduled. Among all unblocked transactions, column

accesses within the same rank as the last access scheduled

have the highest priorities. Column accesses from different banks but within the same ranks are interleaved, so that

bursts from different banks are equally served. The high

data bus utilization is maintained because interleaved accesses are still row hits. Bank precharge and row activate

have the next highest priorities as they do not require data

bus resources, and therefore can be overlapped with column

access transactions. The scheduler has priorities set to finish all bursts within a rank before switching to another rank

to avoid rank-to-rank turnaround cycles required by DDR2

devices [8]. Column accesses from different ranks thus have

the lowest priority. Within each category, read transactions

always have higher priorities than write transactions. An

oldest first policy is used to break ties.

Based on the scheduling priority in Table 2, the subroutine of the transaction scheduler is shown in Figure 6. When

there are no unblocked transactions from any accesses, the

scheduler will switch to the bank which has the oldest accesses and initiates an access from that bank in the next

memory cycle.

The priority table and transaction scheduler are the core of burst scheduling. They maintain the structure of bursts created by bank arbiters while maximizing the data bus utilization by aggressively interleaving transactions between accesses.
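The static priorities of Table 2, together with the oldest first tie-break, can be encoded as a sort key. The sketch below is illustrative only: the `Candidate` tuple, its field names and the string labels are hypothetical, and each candidate is assumed to be already unblocked.

```python
from typing import List, NamedTuple, Optional


class Candidate(NamedTuple):
    is_read: bool
    kind: str       # "precharge", "activate" or "column"
    locality: str   # "same bank", "same rank" or "other ranks" (vs. last scheduled access)
    arrival: int    # arrival time of the owning access; smaller is older


COLUMN_PRIORITY = {
    ("same bank", True): 1, ("same rank", True): 2,
    ("same bank", False): 3, ("same rank", False): 4,
    ("other ranks", True): 7, ("other ranks", False): 8,
}


def transaction_priority(c: Candidate) -> int:
    """Priority from Table 2 (1 is the highest, 8 the lowest)."""
    if c.kind in ("precharge", "activate"):
        return 5 if c.is_read else 6
    return COLUMN_PRIORITY[(c.locality, c.is_read)]


def pick_next(unblocked: List[Candidate]) -> Optional[Candidate]:
    """Select the highest-priority unblocked transaction, oldest first on ties."""
    if not unblocked:
        return None
    return min(unblocked, key=lambda c: (transaction_priority(c), c.arrival))
```

Keeping the priorities in a table rather than in nested conditionals mirrors the remark that the priority table is extensible: new timing-driven categories would only add entries.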

3.4. Validation

Burst scheduling will not affect program correctness.

Reads are checked in the write queues for hits before entering the read queues. If a read hits in the write queue, the

latest data will be forwarded from the write to the read, so

read after write (RAW) hazards are avoided. Within bursts,

writes are always piggybacked after reads which have previously checked the write queue for hits, avoiding write after

read (WAR) hazards. When performing write piggybacking, the oldest qualified write will be selected first. Writes

are scheduled in program order within the same rows, therefore write after write (WAW) hazards are also avoided.

    subroutine TransactionScheduler(last bank, last rank)
        if last bank has unblocked col access then
            schedule the unblocked col access
        else if any unblocked col access in last rank then
            schedule the oldest unblocked col access
        else if any unblocked precharge or row activate then
            schedule the oldest precharge or row activate
        else if any unblocked col access in other ranks then
            schedule the oldest unblocked col access
        end if
        if access scheduled then
            if scheduled access has completed then
                send response to that access
            end if
            last bank = scheduled access’s target bank
            last rank = scheduled access’s target rank
        else
            last bank = the bank having the oldest access
            last rank = the rank having the oldest access
        end if

Figure 6. Transaction scheduler subroutine
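One way to express the selection order of Figure 6 in Python is shown below. It is a sketch under stated assumptions: the `Transaction` record, the `oldest_pending` parameter (the rank and bank holding the oldest outstanding access) and the returned tuple are hypothetical, bank indices are taken to be local to a rank, and sending responses for completed accesses is omitted.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Transaction:
    kind: str      # "precharge", "activate" or "column"
    rank: int
    bank: int
    arrival: int   # arrival time of the owning access; smaller is older


def transaction_scheduler(
    unblocked: List[Transaction],
    last_rank: int,
    last_bank: int,
    oldest_pending: Optional[Tuple[int, int]],
) -> Tuple[Optional[Transaction], int, int]:
    """Return (scheduled transaction or None, new last rank, new last bank)."""
    columns = [t for t in unblocked if t.kind == "column"]
    groups = [
        [t for t in columns if (t.rank, t.bank) == (last_rank, last_bank)],
        [t for t in columns if t.rank == last_rank],
        [t for t in unblocked if t.kind != "column"],   # precharge or row activate
        [t for t in columns if t.rank != last_rank],
    ]
    for group in groups:
        if group:
            chosen = min(group, key=lambda t: t.arrival)  # oldest first
            return chosen, chosen.rank, chosen.bank
    # Nothing unblocked: point at the bank holding the oldest access.
    if oldest_pending is not None:
        return None, oldest_pending[0], oldest_pending[1]
    return None, last_rank, last_bank
```

The function would be called once per memory cycle, feeding the returned rank and bank back in as `last_rank` and `last_bank` on the next call.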

4. Experimental Environment

A revised M5 simulator [1] and SPEC CPU2000 benchmark suite [18] are used in the studies of access reordering mechanisms. The M5 simulator is selected mainly

because it supports a nondeterministic memory access latency. The revisions made to the M5 simulator include a

detailed DDR2 SDRAM module, a parameterized memory

controller as well as the addition of the access reordering

mechanisms described in this paper.

4.1. Benchmarks and Baseline Machine

Due to the page limitation, results from 16 of 26 SPEC

CPU2000 benchmarks are shown in Section 5 using the criterion that the benchmark selected exhibits more than 2%

performance difference between in order scheduling and

any out of order access reordering mechanisms studied in

this paper. While excluding non-memory intensive benchmarks provides a better illustration of impacts contributed

by access reordering mechanisms, results from the complete

suite can be found in [15]. Simulations are run through the

first 2 billion instructions with reference input sets and precompiled little-endian Alpha ISA SPEC2000 binaries [2].

Table 3 lists the configuration of the baseline machine,

which represents a typical desktop workstation in the near

future using a bank in order memory access scheduling

(BkInOrder). With BkInOrder, accesses within the same

banks are scheduled in the same order as they were issued,

while accesses from different banks are selected in a round

robin fashion.
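The BkInOrder baseline can be illustrated with a few lines of Python. This sketch only shows the selection order for a fixed set of queued accesses; it ignores new arrivals and all SDRAM timing, and the function name and the use of one `deque` per bank are assumptions made for the example.

```python
from collections import deque
from typing import Deque, List


def bk_in_order_schedule(bank_queues: List[Deque[str]]) -> List[str]:
    """Drain per-bank FIFO queues, visiting the banks in round robin order."""
    order = []
    remaining = sum(len(q) for q in bank_queues)
    bank = 0
    while remaining:
        if bank_queues[bank]:
            order.append(bank_queues[bank].popleft())  # oldest access of this bank
            remaining -= 1
        bank = (bank + 1) % len(bank_queues)
    return order


queues = [deque(["a0", "a1"]), deque(["b0"]), deque()]
print(bk_in_order_schedule(queues))  # ['a0', 'b0', 'a1']
```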

Table 3. Baseline machine configuration

| Component | Configuration |
|---|---|
| CPU | 4GHz, 8-way, 32 LSQ, 196 ROB |
| L1 I-cache | 128KB, 2-way, 64B cache line |
| L1 D-cache | 128KB, 2-way, 64B cache line |
| L2 cache | 2MB, 16-way, 64B cache line |
| FSB | 64bit, 800MHz (DDR) |
| Main memory | 4GB DDR2 PC2-6400 (5-5-5), 64-bit, burst length 8 |
| Channel/Rank/Bank | 2/4/4 (a total of 32 banks) |
| SDRAM row policy | Open Page |
| Address mapping | Page Interleaving |
| Access reordering | Bank in order (BkInOrder) |
| Memory access pool | 256 (maximal 64 writes) |

4.2. Simulated Access Reordering Mechanisms

Besides BkInOrder scheduling, three existing access reordering mechanisms, RowHit, Intel and Intel RP, are simulated and compared with burst scheduling. Table 4 summarizes all simulated access reordering mechanisms.

Table 4. Simulated access reordering mechanisms

| Mechanism | Description |
|---|---|
| BkInOrder | In order intra banks, round robin inter banks |
| RowHit | Row hit first intra bank, round robin inter banks [13] |
| Intel | Intel’s memory scheduling [14] |
| Intel RP | Intel’s scheduling with read preemption |
| Burst | Burst scheduling |
| Burst RP | Burst scheduling with read preemption |
| Burst WP | Burst scheduling with write piggybacking |
| Burst TH | Burst scheduling with threshold (52) |

Proposed by Scott Rixner et al., RowHit scheduling uses a unified access queue for each bank. A row hit first policy selects the oldest access directed to the same row as the last access to that bank. Accesses from different banks are performed in a round robin fashion [13].

Intel’s patented out of order memory scheduling features unique read queues per bank and a single write queue for all banks. Reads are prioritized over writes to minimize read latency. Once an access is started, it will receive the highest priority so that it can finish as quickly as possible to reduce the degree of reordering [14]. Not proposed in the patent, Intel scheduling with read preemption (Intel RP) allows reads to interrupt ongoing writes in a similar way to read preemption as described in Section 3.2.

RowHit and Intel’s scheduling both attempt to prioritize row hits; however, they employ a “best effort” mechanism in grouping row hit accesses. Without considerations of SDRAM timing constraints, bubble cycles could easily be introduced, leading to performance degradation. With the transaction priority table given in Table 2, which encompasses timing constraints and is extensible, burst scheduling guarantees that row hits within a burst are scheduled back to back, maximizing the bus utilization. Additionally, different information is employed to make scheduling decisions at the memory access level and the transaction level, making burst scheduling a two-level scheduler.

Burst scheduling with various optimizations, as discussed in Section 3, is also evaluated. Burst RP allows reads to preempt writes. Burst WP piggybacks writes at the end of bursts. Burst TH uses an experimentally selected static threshold to control read preemption and write piggybacking. A threshold of 52 obtains the best performance across simulated benchmarks, as will be shown in Section 5.4.

5. Simulation Results and Analysis

Increasing row hits and reducing access latency are the

major design goals of burst scheduling. Studies of access

latency and SDRAM row hit rate illustrate the impacts of

access reordering mechanisms and inspire further improvements to burst scheduling.

5.1. Access Latency

When a memory read access is issued to the main memory, all in-flight instructions dependent upon this read request are blocked until the requested data is returned. Write

accesses, however, can complete immediately because no

data needs to be returned. Therefore, one of the design

goals of access reordering mechanisms is to reduce the read

latency by postponing writes.

Figure 7 shows the averaged read latency and write latency obtained by all access reordering mechanisms simulated. All out of order access reordering mechanisms reduce the read latency by a range of 26% to 47% compared

to BkInOrder; while all write latencies except for RowHit

are increased.

RowHit treats reads and writes equally thus it reduces

both read and write latency and achieves the lowest write

latency among all access reordering mechanisms. Burst RP

has the lowest read latency because reads are not only prioritized over writes but also allowed to interrupt ongoing

writes. Read preemption helps Intel’s scheduling to reduce

read latency as well. Intel and Burst postpone writes thus

they both have long write latencies. Read preemption makes

the write latency of Intel and Burst even longer. On the

other hand, write latency is greatly reduced by write piggybacking because more row hits from writes are exploited.

To better understand the relationship between read and write latency, the distribution of outstanding memory accesses for the benchmark swim, which is defined as the percentage of time that a given number of accesses are outstanding in the main memory, is shown in Figure 8.

Figure 7. Access latency in memory cycles. (a) Read Latency; (b) Write Latency. Vertical axis: SDRAM Cycles. Mechanisms: BkInOrder, RowHit, Intel, Intel_RP, Burst, Burst_RP, Burst_WP, Burst_TH.

Figure 8. Distribution of outstanding memory accesses for benchmark swim. (a) Outstanding Reads; (b) Outstanding Writes. Axes: Number of Accesses versus Percentage of Time. Mechanisms: BkInOrder, RowHit, Intel, Burst_RP, Burst_WP, Burst_TH.

Figure 9. Average row hit, row conflict and row empty rate and SDRAM bus utilization. (a) Row Hit/Conflict/Empty (Percentage of Accesses); (b) Bus Utilization (Percentage of Time; address bus and data bus).

RowHit slightly increases the number of outstanding

accesses compared to BkInOrder to allow row hits to be

served first. Intel and Burst have a large number of outstanding writes in the write queue due to postponed writes. Burst

is more aggressive in prioritizing reads over writes than Intel. As a result, Intel and Burst cause write queue saturation

24% and 46% of time respectively for the swim benchmark.

Read preemption reduces the number of outstanding reads

but causes the write queue to saturate more frequently, e.g.

Burst RP causes write queue saturation 70% of time.

Prioritizing reads over writes can improve system performance. However, write queue saturation may result

in CPU pipeline stalls. Write piggybacking is employed

to empty writes from the write queue without causing an

undue increase in read latency. Burst WP only causes write

queue saturation 2% of time. Burst TH with a threshold of

52 achieves a tradeoff between reducing read latency and

preventing write queue saturation, resulting in a 9% write

queue saturation rate. Burst TH yields the best performance

as the following sections will show.

5.2. Row Hit Rate and Bus Utilization

Row hits require fewer transactions and have shorter latencies than row conflicts, as discussed in Section 2. Access

reordering mechanisms usually select row hits first and turn

potential row conflicts into row hits. Also more row hits

may become available as new accesses arrive. Figure 9(a)

shows row hit, row conflict and row empty rate averaged

across all simulated benchmarks.

Out of order access reordering mechanisms increase row

hit rate. Among them, RowHit, Burst WP and Burst TH

have the highest row hit rates. Intel and Burst without write

piggybacking have lower row hit rates although they are still

better than BkInOrder. The reason is that in contrast to Intel

and Burst, which only search the read queues for row hits,

RowHit, Burst WP and Burst TH seek row hits in both the

read queues and the write queues.

With static open page policy, most row empties happen

after SDRAM auto refreshes as banks are precharged. With

read preemption, an ongoing write interrupted by a read

may have precharged the bank while having not yet initiated the row activate. This causes the preempting read to be

a row empty. Therefore Intel RP, Burst RP and Burst TH

have increased row empty rates.

The SDRAM bus utilization, which is the percentage of

time that the bus is occupied, is shown in Figure 9(b). There

is only a 3% difference of address bus utilization among all

access reordering mechanisms, while the data bus utilization varies in a range from 31% to 42%. This confirms that

the data bus is more critical than the address bus. Given the

simulated DDR2 PC2-6400 SDRAM, Burst TH achieves

the highest data bus utilization of 42%. The effective memory bandwidth is increased from 2.0GB/s (BkInOrder) to

2.7GB/s (Burst TH), resulting in a 35% improvement.
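The bandwidth figures can be cross-checked with a short calculation. This is a sketch under stated assumptions: a single 64-bit DDR2 PC2-6400 channel with a peak of 6.4 GB/s, and the lower end of the reported utilization range (31%) taken as the baseline, which coincides with the 2.0GB/s quoted for BkInOrder. The function name is hypothetical.

```python
# Assumption: one 64-bit channel of DDR2 PC2-6400, i.e. a peak of 6.4 GB/s
# (400 MHz clock x 2 transfers per clock x 8 bytes per transfer).
PEAK_BANDWIDTH_GB_S = 400e6 * 2 * 8 / 1e9


def effective_bandwidth(data_bus_utilization: float) -> float:
    """Effective bandwidth in GB/s for a data bus utilization in [0, 1]."""
    return PEAK_BANDWIDTH_GB_S * data_bus_utilization


low = effective_bandwidth(0.31)   # lower end of the reported range
high = effective_bandwidth(0.42)  # Burst TH
improvement = high / low - 1.0    # relative gain in effective bandwidth

print(f"{low:.1f} GB/s -> {high:.1f} GB/s ({improvement:.0%} improvement)")
```

The rounded values agree with the 2.0GB/s, 2.7GB/s and 35% reported in the text.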

Figure 10. Execution time of access reordering mechanisms, normalized to BkInOrder (y-axis: Normalized Execution Time; x-axis: benchmarks and average; series: RowHit, Intel, Intel_RP, Burst, Burst_RP, Burst_WP, Burst_TH)

Figure 11. Outstanding accesses for benchmark swim under various thresholds: (a) Outstanding Reads, (b) Outstanding Writes (y-axis: Percentage of Time; x-axis: Number of Accesses; series: WP, TH8, TH16, TH24, TH32, TH40, TH48, TH52, TH56, RP)

5.3. Execution Time

Previous sections showed the access latency, row hit rate and bus utilization of various access reordering mechanisms. Results in this section examine the execution time of each individual benchmark with these access reordering mechanisms. Execution times are normalized to BkInOrder and shown in Figure 10.

RowHit achieves an average 17% reduction in execution time compared to BkInOrder. Intel and Burst reduce the execution time by 12% and 14% respectively. Read preemption alone contributes another 3% improvement on top of Intel and Burst. Write piggybacking alone contributes a 5% improvement on top of Burst, resulting in a total 19% reduction in execution time for Burst WP. Burst TH, which combines read preemption and write piggybacking using a static threshold of 52, yields the best performance among all access reordering mechanisms, achieving a 21% reduction in execution time across all simulated benchmarks. This corresponds to a 6% improvement over RowHit, and 11% and 7% improvements over Intel and Intel RP respectively.

Read preemption and write piggybacking have a varied impact depending on benchmark characteristics. For mcf, parser, perlbmk and facerec, read preemption contributes a much greater performance improvement than write piggybacking. For the remaining benchmarks, write piggybacking generally results in more improvement than read preemption. For gcc and lucas in particular, Burst WP achieves 14% and 18% reductions in execution time respectively. It is desirable to take advantage of both read preemption and write piggybacking to achieve the maximal performance improvement. A static threshold is employed to control read preemption and write piggybacking. The next section shows how this threshold affects performance and how the optimized threshold is determined.

5.4. Burst Threshold

Read preemption and write piggybacking have been

shown to perform well on some benchmarks but not on all

benchmarks. Which technique has greater impact on performance is largely dependent on the memory access patterns of benchmarks. For example, allowing a critical read

having many dependent instructions to preempt an ongoing

write may improve the performance. However, completing

the ongoing write may prevent CPU pipeline stalls due to a

saturated write queue.

When the write queue has low occupancy, read preemption is desired to reduce read latency by allowing reads to

bypass writes. When the write queue approaches capacity,

write piggybacking can keep the write queue from saturation. Read preemption and write piggybacking can be switched

dynamically based on the write queue occupancy. When the

write queue occupancy is less than a certain threshold, read

preemption is enabled; otherwise, write piggybacking is enabled.
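The switching rule described above can be sketched as follows. The names `Mode` and `select_mode` are hypothetical; only the comparison of write queue occupancy against a static threshold comes from the text.

```python
from enum import Enum


class Mode(Enum):
    READ_PREEMPTION = "read preemption"
    WRITE_PIGGYBACKING = "write piggybacking"


def select_mode(write_queue_occupancy: int, threshold: int) -> Mode:
    """Pick the active technique from the current write queue occupancy.

    Below the threshold, reads may preempt ongoing writes; at or above
    it, writes are piggybacked onto bursts to drain the write queue.
    """
    if write_queue_occupancy < threshold:
        return Mode.READ_PREEMPTION
    return Mode.WRITE_PIGGYBACKING


# Example with a threshold of 52, the value used by Burst TH.
print(select_mode(10, 52).value)  # read preemption
print(select_mode(60, 52).value)  # write piggybacking
```

With a threshold of 0 the rule never enables read preemption, and with a threshold equal to the queue size it enables read preemption whenever the queue is not full.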

Using the same example benchmark, swim, as in Section 5.1, the distributions of outstanding accesses under various thresholds are shown in Figure 11. Note that Burst RP and

Burst WP are equivalent to Burst TH64 and Burst TH0 respectively, given that the write queue size is 64.

From Figure 11, Burst RP has fewer outstanding reads

than other thresholds; however, the read latency of Burst RP

is slightly higher than the others. This is because when there

are fewer reads in the read queue, there are fewer opportunities for row hits to occur. In order for burst scheduling to

create larger bursts and increase row hits, the read queue

should contain a number of outstanding reads, and these

reads should be served at a rate that will not deplete the

read queue too quickly.

As the threshold increases from 0 to 64, the peak value

of outstanding writes increases as well. The write buffer

saturation rate is below 7% when the threshold is less than

48. The saturation rate increases to 14% at threshold 56

then jumps to 70% at threshold 64 (Burst RP). The earlier

write piggybacking is enabled, the less frequently the write

queue will be saturated.
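One way to read the saturation rate is as the fraction of sampled cycles in which the write queue is full. The sketch below assumes that interpretation and a hypothetical per-cycle occupancy trace as input; neither the function name nor the trace format comes from the simulator.

```python
from typing import Sequence


def saturation_rate(occupancy_trace: Sequence[int], queue_size: int = 64) -> float:
    """Fraction of samples in which the write queue is full.

    occupancy_trace holds one write queue occupancy sample per cycle
    (a hypothetical input format); queue_size defaults to the 64-entry
    write queue mentioned in the text.
    """
    if not occupancy_trace:
        return 0.0
    saturated = sum(1 for occupancy in occupancy_trace if occupancy >= queue_size)
    return saturated / len(occupancy_trace)
```

A trace in which the queue is full in one of every ten samples would give a rate of 0.1, i.e. 10% of the time.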

To determine the threshold that yields the best performance, simulations with various thresholds are performed

and the results are shown in Figure 12. The execution

times are averaged across all benchmarks and normalized to Burst. As the threshold increases, read latency first

decreases because more reads experience

shorter latencies by preempting writes. From threshold 40 onward,

read latency starts increasing, mainly due to CPU pipeline

stalls caused by increased occurrences of write queue saturation. Write latency increases as expected as the threshold increases. Execution time is determined by both read

latency and write latency. According to the results, a threshold of 52 yields the lowest execution time based upon the 16

SPEC CPU2000 benchmarks simulated.

6. Conclusions

Memory scheduling techniques improve system performance by changing the sequence of memory accesses to increase row hit rate and reduce memory access latency. Inspired by existing academic and industrial access reordering mechanisms, the burst scheduling access reordering mechanism is proposed with the goal of improving the performance of existing access reordering mechanisms and addressing their shortcomings.

Using the M5 simulator with a detailed SDRAM module, burst scheduling is examined and compared to row hit scheduling and Intel’s out of order memory scheduling. The performance contributions of read preemption and write piggybacking are studied and identified. The threshold that yields the best performance is determined by experiments. With selected SPEC CPU2000 benchmarks, burst scheduling with a threshold of 52 achieves an average 21% reduction in execution time across the 16 simulated benchmarks over in order scheduling. Burst scheduling also outperforms row hit scheduling and Intel’s out of order scheduling by 6% and 11% respectively.

As SDRAM devices evolve, timing parameters (tCL-tRCD-tRP) do not improve as rapidly as bus frequency. For instance, DDR PC-2100 devices (133MHz) have typical timings of 2-2-2 (15ns-15ns-15ns), while DDR2 PC2-6400 devices (400MHz) have typical 5-5-5 (12.5ns-12.5ns-12.5ns) timings. From DDR PC-2100 to DDR2 PC2-6400, the bus frequency and the bandwidth improve by 200%, whereas the timing parameters are reduced by only 17%. Access latency, in terms of memory cycles, therefore increases; for example, row conflict latency increases from 6 cycles to 15 cycles. Increased main memory access latency leads to more performance improvement opportunities for memory optimization techniques. As the number of cycles for timing parameters increases in the future, the performance improvement provided by access reordering mechanisms will be even more significant than the simulation results presented in this paper.

Access reordering mechanisms will play a more important role with chip-level multiprocessors, as the memory controller will have a larger number of outstanding main memory accesses from which to select. Access reordering mechanisms may also benefit from integrating the memory controller into the CPU die. Due to a tighter connection between the integrated memory controller and the CPU, more instruction level information, such as the number of dependent instructions, is available to the controller. This information may be utilized to make intelligent scheduling decisions. An integrated memory controller can also run at the same speed as the CPU, making complex scheduling algorithms feasible.

7. Future Work

Burst scheduling with a static threshold works well on average; however, benchmarks have unique access patterns

and therefore require different thresholds. A dynamic

threshold, calculated on the fly based on critical parameters such as read/write ratios, will match the access

patterns of different benchmarks for further performance

improvement.

Currently reads inside bursts are scheduled in the same

order as they were issued. Changing the order of accesses

within a burst will not affect the effective memory bandwidth and the total time to complete the burst. However,

critical accesses (i.e. having many dependent instructions)

may benefit from being scheduled first inside the burst. Integrating the SDRAM controller into the CPU die makes

more instruction level information available to the scheduler,

which can be used to perform intra burst scheduling.

Similarly, the sequence of bursts within banks can also be changed to reduce the latency of critical data. Bursts could be sorted by criteria other than the arrival time of the first access of each burst, as currently proposed, e.g. by the size of the bursts. However, care is required to prevent starvation when performing inter burst scheduling.

Figure 12. Access latency and execution time under various thresholds: (a) Execution Time (normalized to Burst), (b) Read Latency (SDRAM cycles), (c) Write Latency (SDRAM cycles); x-axis: Burst, WP, TH8, TH16, TH24, TH32, TH40, TH48, TH52, TH56, TH60, RP
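The two inter burst orderings mentioned in this section can be illustrated with a minimal sketch. All names here (`Access`, `cluster_into_bursts`, `order_by_first_arrival`, `order_by_size`) are hypothetical, the sketch is not the simulator's implementation, and it deliberately leaves out any starvation control.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class Access:
    arrival: int  # arrival time, in cycles
    bank: int
    row: int


Burst = List[Access]


def cluster_into_bursts(accesses: Iterable[Access]) -> Dict[int, List[Burst]]:
    """Group accesses to the same row of the same bank into bursts.

    Returns, per bank, a list of bursts; accesses inside a burst keep
    their arrival order.
    """
    per_bank: Dict[int, Dict[int, Burst]] = {}
    for acc in sorted(accesses, key=lambda a: a.arrival):
        per_bank.setdefault(acc.bank, {}).setdefault(acc.row, []).append(acc)
    return {bank: list(rows.values()) for bank, rows in per_bank.items()}


def order_by_first_arrival(bursts: List[Burst]) -> List[Burst]:
    """Order the bursts of one bank by the arrival of their first access."""
    return sorted(bursts, key=lambda b: b[0].arrival)


def order_by_size(bursts: List[Burst]) -> List[Burst]:
    """Alternative ordering: largest burst first, ties by first arrival."""
    return sorted(bursts, key=lambda b: (-len(b), b[0].arrival))
```

Ordering by size can delay a small, old burst indefinitely if larger bursts keep forming, which is the starvation concern raised above; a practical scheduler would need an aging or timeout rule on top of this.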

Other SDRAM techniques such as address mapping [19,

5, 23, 16] change the distribution of memory accesses to

increase row hit rate. Access reordering mechanisms will

benefit from the increased row hit rate. Studies of access reordering mechanisms working in conjunction with SDRAM

address mapping are ongoing [15].

8. Acknowledgments

The work of Jun Shao and Brian Davis was supported

in part by NSF CAREER Award CCR 0133777. We would

also like to thank the reviewers for their comments and suggestions.

References

[1] N. L. Binkert, E. G. Hallnor, and S. K. Reinhardt. Network-Oriented Full-System Simulation using M5. In Proceedings

of the Sixth Workshop on Computer Architecture Evaluation

using Commercial Workloads (CAECW), 2003.

[2] Chris Weaver. Pre-compiled Little-endian Alpha ISA SPEC

CPU2000 Binaries.

[3] V. Cuppu, B. Jacob, B. Davis, and T. Mudge. High-Performance DRAMs in Workstation Environments. IEEE

Trans. Comput., 50(11):1133–1153, 2001.

[4] B. T. Davis. Modern DRAM Architectures. PhD thesis, Department of Computer Science and Engineering, the University of Michigan, 2001.

[5] W. fen Lin. Reducing DRAM Latencies with an Integrated

Memory Hierarchy Design. In HPCA ’01: Proceedings

of the 7th International Symposium on High-Performance

Computer Architecture, page 301, Washington, DC, USA,

2001. IEEE Computer Society.

[6] J. Hasan, S. Chandra, and T. N. Vijaykumar. Efficient

Use of Memory Bandwidth to Improve Network Processor

Throughput. In ISCA ’03: Proceedings of the 30th Annual

International Symposium on Computer Architecture, pages

300–313, New York, NY, USA, 2003. ACM Press.

[7] I. Hur and C. Lin. Adaptive History-Based Memory Schedulers. In MICRO 37: Proceedings of the 37th annual International Symposium on Microarchitecture, pages 343–354,

Washington, DC, USA, 2004. IEEE Computer Society.

[8] J. Janzen. DDR2 Offers New Features and Functionality.

DesignLine, 12(2), Micron Technology, Inc., 2003.

[9] S. A. McKee, W. A. Wulf, J. H. Aylor, M. H. Salinas, R. H.

Klenke, S. I. Hong, and D. A. B. Weikle. Dynamic Access

Ordering for Streamed Computations. IEEE Trans. Comput.,

49(11):1255–1271, 2000.

[10] Micron Technology, Inc. Micron 512Mb: x4, x8, x16 DDR2

SDRAM Datasheet, 2006.

[11] NEC. 64M-bit Virtual Channel SDRAM, October 1998.

[12] S. Rixner. Memory Controller Optimizations for Web

Servers. In MICRO 37: Proceedings of the 37th annual

International Symposium on Microarchitecture, pages 355–

366, Washington, DC, USA, 2004. IEEE Computer Society.

[13] S. Rixner, W. J. Dally, U. J. Kapasi, P. Mattson, and J. D.

Owens. Memory Access Scheduling. In ISCA ’00: Proceedings of the 27th Annual International Symposium on Computer Architecture, pages 128–138, New York, NY, USA,

2000. ACM Press.

[14] H. G. Rotithor, R. B. Osborne, and N. Aboulenein. Method

and Apparatus for Out of Order Memory Scheduling. United

States Patent 7127574, Intel Corporation, October 2006.

[15] J. Shao. Reducing Main Memory Access Latency through

SDRAM Address Mapping Techniques and Access Reordering Mechanisms. PhD thesis, Department of Electrical and

Computer Engineering, Michigan Technological University,

2006.

[16] J. Shao and B. T. Davis. The Bit-reversal SDRAM Address

Mapping. In SCOPES ’05: Proceedings of the 9th International Workshop on Software and Compilers for Embedded

Systems, pages 62–71, September 2005.

[17] K. Skadron and D. W. Clark. Design Issues and Tradeoffs

for Write Buffers. In HPCA ’97: Proceedings of the 3rd

IEEE Symposium on High-Performance Computer Architecture, page 144, Washington, DC, USA, 1997. IEEE Computer Society.

[18] Standard Performance Evaluation Corporation. SPEC

CPU2000 V1.2, December 2001.

[19] R. Tomas. Indexing Memory Banks to Maximize Page

Mode Hit Percentage and Minimize Memory Latency. Technical Report HPL-96-95, Hewlett-Packard Laboratories,

June 1996.

[20] A. Wong. Breaking Through the BIOS Barrier: The Definitive BIOS Optimization Guide for PCs. Prentice Hall, 2004.

[21] W. A. Wulf and S. A. McKee. Hitting the Memory Wall: Implications of the Obvious. SIGARCH Comput. Archit. News,

23(1):20–24, 1995.

[22] Y. Xu. Dynamic SDRAM Controller Policy Predictor. Master’s thesis, Department of Electrical and Computer Engineering, Michigan Technological University, April 2006.

[23] Z. Zhang, Z. Zhu, and X. Zhang. A Permutation-based

Page Interleaving Scheme to Reduce Row-buffer Conflicts

and Exploit Data Locality. In MICRO 33: Proceedings of

the 33rd annual ACM/IEEE international symposium on Microarchitecture, pages 32–41, New York, NY, USA, 2000.

ACM Press.

[24] Z. Zhu, Z. Zhang, and X. Zhang. Fine-grain Priority

Scheduling on Multi-channel Memory Systems. In HPCA

’02: Proceedings of the 8th International Symposium on

High-Performance Computer Architecture, page 107, Washington, DC, USA, 2002. IEEE Computer Society.