IEEE TRANSACTIONS ON IMAGE PROCESSING, VOL. 23, NO. 12, DECEMBER 2014

Weakly Supervised Visual Dictionary Learning by Harnessing Image Attributes

Yue Gao, Senior Member, IEEE, Rongrong Ji, Senior Member, IEEE, Wei Liu, Member, IEEE,

Qionghai Dai, Senior Member, IEEE, and Gang Hua, Senior Member, IEEE

Abstract— The bag-of-features (BoF) representation has been extensively applied to various computer vision tasks. To extract a discriminative and descriptive BoF, one important step is to learn a good dictionary that minimizes the quantization loss between local features and codewords. While most existing visual dictionary learning approaches rely on unsupervised feature quantization, the latest trend has turned to supervised learning that harnesses the semantic labels of images or regions. However, such labels are typically too expensive to acquire, which restricts the scalability of supervised dictionary learning approaches. In this paper, we propose to leverage image attributes to weakly supervise the dictionary learning procedure without requiring any actual labels. As a key contribution, our approach establishes a generative hidden Markov random field (HMRF), which models the quantized codewords as the observed states and the image attributes as the hidden states. Dictionary learning is then performed by supervised grouping of the observed states, where the supervision stems from the hidden states of the HMRF. In this way, the proposed dictionary learning approach incorporates image attributes to learn a semantic-preserving BoF representation without any genuine supervision. Experiments on large-scale image retrieval and classification tasks corroborate that our approach significantly outperforms state-of-the-art unsupervised dictionary learning approaches.

Index Terms— Bag-of-features, visual dictionary, image attribute, weakly supervised learning, hidden Markov random field, image classification, image search.

Manuscript received August 4, 2013; revised January 31, 2014, June 12, 2014, and August 1, 2014; accepted August 26, 2014. Date of publication October 29, 2014; date of current version November 6, 2014. This work was supported in part by the National Natural Science Foundation of China under Grant 61422210 and Grant 61373076, in part by the Fundamental Research Funds for the Central Universities under Grant 2013121026, and in part by the 985 Project through Xiamen University, Xiamen, China. The work of W. Liu was supported by the Josef Raviv Memorial Post-Doctoral Fellowship. The associate editor coordinating the review of this manuscript and approving it for publication was Prof. Richard J. Radke. (Corresponding author: R. Ji.)

R. Ji is with the Department of Cognitive Science, School of Information Science and Engineering, Xiamen University, Xiamen 361005, China (e-mail: rrji@xmu.edu.cn).

Y. Gao and Q. Dai are with the Tsinghua National Laboratory for Information Science and Technology, Department of Automation, Tsinghua University, Beijing 100084, China.

W. Liu is with the IBM T. J. Watson Research Center, Yorktown Heights, NY 10598 USA.

G. Hua is with the Department of Computer Science, Stevens Institute of Technology, Hoboken, NJ 07030 USA.

Color versions of one or more of the figures in this paper are available online at http://ieeexplore.ieee.org.

Digital Object Identifier 10.1109/TIP.2014.2364536

I. INTRODUCTION

With the popularity of local features over the past decade, the Bag-of-Features (BoF) representation has been widely advocated in various computer vision applications, e.g., visual search [11], [14], [20], object recognition [18], [26], and image parsing and scene understanding [19], [34]. Its core idea is to use a visual dictionary to encode (a.k.a. quantize) the set of local features extracted from a given image into a BoF histogram. This representation is robust to photographic variations caused by changing backgrounds, viewpoints, illumination, and occlusions.
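As a concrete illustration of the encoding step described above, the following minimal NumPy sketch hard-assigns each local feature to its nearest codeword and counts the assignments into an L1-normalized BoF histogram. The function name `bof_histogram` and the toy data are hypothetical; real pipelines use SIFT-like descriptors, dictionaries with thousands of codewords, and usually an approximate nearest-neighbor search instead of the brute-force distance matrix used here.

```python
import numpy as np


def bof_histogram(features: np.ndarray, codebook: np.ndarray) -> np.ndarray:
    """Hard-assign each local feature to its nearest codeword and count.

    features: (n_features, d) local descriptors of one image.
    codebook: (n_words, d) visual dictionary, one codeword per row.
    Returns an L1-normalized histogram of length n_words (term frequencies).
    """
    # Squared Euclidean distance between every feature and every codeword.
    d2 = ((features[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2)
    nearest = d2.argmin(axis=1)  # index of the best-matched codeword per feature
    hist = np.bincount(nearest, minlength=codebook.shape[0]).astype(float)
    return hist / max(hist.sum(), 1.0)  # avoid dividing by zero for empty images


# Toy usage: two features fall near codeword 0 and one near codeword 1.
codebook = np.array([[0.0, 0.0], [10.0, 10.0]])
features = np.array([[0.5, -0.2], [9.0, 10.5], [0.1, 0.3]])
print(bof_histogram(features, codebook))  # approximately [0.667, 0.333]
```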

The visual vocabulary, also called the visual codebook, is a fundamental component in extracting the BoF representation. It is used to encode each local feature to its best-matched word(s) [19], [20]. This coding (or quantization) procedure inevitably introduces information loss, which causes a performance drop from the raw local features to the BoF histogram. Extensive research efforts have been devoted to reducing such information loss [17], [20], [21], [33], [34]. One solution is to improve the feature coding (quantization) stage, i.e., assigning one local feature to multiple codewords, for example by soft quantization [17], [20] and sparse coding [18], or alternatively to embed the spatial layout of local features into quantization, for example by spatial pyramid matching [34], feature bundling [33], average pooling [21], and max pooling [18]. Another solution is to directly optimize the dictionary learning procedure, which aims to minimize the quantization loss of individual BoFs [8], [23], [24]. Our work falls into the latter category; the learned dictionary can be combined with cutting-edge feature coding designs [17], [18], [20], [21], [33], [34] to jointly compensate for the quantization loss.
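The soft quantization and pooling options mentioned above can be sketched as follows. This is a minimal example assuming a Gaussian-kernel soft assignment with a hypothetical bandwidth `sigma`; it is not the exact formulation of [17] or [20]. The hypothetical helper `pool` then aggregates the per-feature codes of one image by average or max pooling.

```python
import numpy as np


def soft_assign(features: np.ndarray, codebook: np.ndarray, sigma: float = 1.0) -> np.ndarray:
    """Gaussian-kernel soft assignment; each row of the result sums to 1."""
    d2 = ((features[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2)
    # Shift by each feature's minimum distance so exp() cannot underflow to all zeros.
    logits = -(d2 - d2.min(axis=1, keepdims=True)) / (2.0 * sigma ** 2)
    weights = np.exp(logits)
    return weights / weights.sum(axis=1, keepdims=True)


def pool(codes: np.ndarray, mode: str = "average") -> np.ndarray:
    """Aggregate per-feature codes (n_features, n_words) into one image vector."""
    if mode == "average":
        return codes.mean(axis=0)
    if mode == "max":
        return codes.max(axis=0)
    raise ValueError(f"unknown pooling mode: {mode}")
```

As `sigma` shrinks, `soft_assign` approaches hard assignment to the nearest codeword, while a larger `sigma` spreads each feature over more codewords.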

Most existing methods for visual dictionary learning are unsupervised and focus on minimizing the quantization error incurred when coding each local feature to its nearest codeword(s) [5], [11], [13], [17], [18], [20]. However, in many visual search and recognition applications, the resulting BoFs are processed as a whole in subsequent steps, for instance similarity ranking or classifier training. Such applications place more emphasis on the semantic-level discriminability of the generated BoF histograms than on minimizing the quantization loss of individual local features.

Therefore, a natural idea is to use semantic cues such as region or image labels to supervise the dictionary learning process. The human visual system offers further inspiration: the inferotemporal (IT) cortex, which is responsible for visual recognition, sends feedback to cortical areas V1 to V4 [22], which generate local visual representations from the visual stimuli.

1057-7149 © 2014 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission.

See http://www.ieee.org/publications_standards/publications/rights/index.html for more information.

GAO et al.: WEAKLY SUPERVISED VISUAL DICTIONARY LEARNING

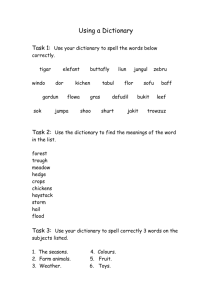

Fig. 1.

5401

The proposed weakly supervised dictionary learning framework harnessing image attributes.

The labels used in supervised dictionary learning are typically at the image or region level [8], [12], [15], [23], [24].¹ Such labels are typically leveraged to refine the similarity metric applied to local features, or alternatively to optimize the quantization procedure. Although significant performance gains have been reported [8], [12], [15], [18], [23], [24], [26], this kind of supervision does not come for free. On one hand, manually labeling images is labor intensive. On the other hand, image tags crawled from the Internet are often noisy [27]. Consequently, existing supervised dictionary learning approaches are limited in scalability and robustness, which hinders their adoption in scenarios such as million-scale image search, city-scale location recognition, and hyperspectral image classification.

Facilitated by recent progress on image attributes [28]–[32], is it possible to exploit such cheaply available cues to “supervise” dictionary learning without actual labels? Image attributes indicate the likelihood of visual cues such as “Round”, “Head”, “Torso”, “Label”, and “Feather”, and the attribute detectors are trained on other sources or even on different domains.² Importantly, attribute-based supervision scales well and thus enables large-scale applications such as image classification, clustering, and search.

Automatic attribute extraction is not guaranteed to be fully accurate, which necessitates special treatment when attributes are used to supervise dictionary learning. To this end, we propose a weakly supervised dictionary learning approach based on a Hidden Markov Random Field (HMRF) model. Different from the existing dictionary learning methods [5], [11], [13], [17], [18], [20], we model the image attributes as the hidden nodes of the HMRF and the quantized codewords as the observation nodes. Supervised dictionary learning is then conducted by iteratively optimizing the quantization process and the attribute-to-codeword mappings. In principle, the HMRF model seeks an optimal tradeoff between minimizing the quantization error and fitting the attribute responses of the produced BoF histograms. We further show that several existing unsupervised and supervised dictionary learning methods can be explained under the proposed framework.

¹ Pairwise correspondences of local features can also be used as supervision; they are typically derived from wide/narrow-baseline matching or near-duplicate image search. This kind of label is out of the scope of this paper.

² For example, we use the Classemes attributes [31] in our experiments, which were trained on web images crawled from the Bing search engine.

Fig. 1 outlines the proposed framework, which consists of three interdependent components:

• Attribute-based feature coding integrates the attributes extracted from a given image into the objective function of feature coding by using the proposed attribute-to-codeword mapping.

• Weakly supervised dictionary learning learns a visual dictionary through constrained local feature clustering. This component can be interpreted as an attribute-aware “Maximization” operation, while the preceding feature coding step can be interpreted as an “Expectation” operation.

• Attribute-to-codeword mapping maps non-zero attributes to particular codewords. The mapping is based on the co-occurrence statistics between attributes and words and is updated after each iteration of visual dictionary learning.

The main contributions of this paper are three-fold:

• We propose to model dictionary learning from a probabilistic perspective, i.e., using a generative Hidden Markov Random Field. This enables us to explicitly model the supervised dictionary learning procedure under an Expectation-Maximization formulation.

• We propose to exploit cheaply available semantic attributes extracted from images to supervise dictionary learning, which produces semantic-preserving BoF descriptors without any explicit labels.

• We demonstrate the generalization capability of the proposed approach, i.e., several existing dictionary learning and feature coding schemes can be explained using the proposed formulation.

The rest of this paper is organized as follows: Section II reviews related work on unsupervised and supervised dictionary learning. Section III introduces the proposed weakly supervised dictionary learning framework. Section IV presents experiments with comparisons to the state of the art in image search and image classification. Section V concludes the paper and discusses future work.

II. RELATED WORK

A. Unsupervised Dictionary Learning

Visual dictionary learning typically resorts to quantizing local features, i.e., subdividing the local feature space into disjoint partitions, each of which corresponds to a codeword. To this end, many vector quantization schemes have been introduced, for example, K-Means [14], Hierarchical K-Means (a.k.a. Vocabulary Tree) [11], Approximate K-Means [13], and their variations [6], [13], [17]. Based on the learned visual dictionary, the local features extracted from a given image are represented as a BoF histogram, in which each codeword is associated with a term frequency that counts how many local features of this image fall into the corresponding partition of the local feature space.

The most critical issue for BoF-based representation lies in its quantization loss, which is incurred by replacing each feature vector with its best-matched codeword(s) [11], [17], [19], [20]. To compensate, one potential solution is to improve the feature coding stage using methods such as soft assignment [13], [17], [20], sparse coding [18], or Hamming embedding [5]. Alternatively, a more straightforward solution is to learn a better dictionary, for example with a kernelized codebook [2] or hierarchical optimization [35]. These dictionary optimization approaches can also be combined with advanced feature coding schemes to further reduce the quantization loss.

For image search, the works in [3], [4], and [36] proposed to hash local features into a discrete set of bins, which are then indexed into their corresponding buckets in one or more hash tables. Online search can then be confined to only a few buckets, leading to sub-linear search time with provable accuracy guarantees. In this direction, methods such as Locality Sensitive Hashing (LSH) [3], Kernelized LSH [4], and Spectral Hashing [36] have been widely investigated.

B. Supervised Dictionary Learning

The supervised dictionary learning paradigm [8], [23], [24], [27], [37] exploits image/region labels or matching/non-matching feature pairs to supervise the codeword generation process. The targeted dictionary is supposed to produce semantic-preserving BoF histograms, e.g., images belonging to the same category should have similar BoFs and vice versa. Specifically, Mairal et al. [24] learned a dictionary in a supervised mode by discriminative sparse coding. Lazebnik et al. [8] learned a dictionary for object categorization by minimizing the mutual information loss of the codes generated from the training set. Moosmann et al. [23] proposed an ERC-Forest that incorporates semantic labels to build indexing trees. The works presented in [9] and [12] refined the initial codewords to derive class- or image-specific dictionaries for image categorization. To a certain degree, topic decomposition, including pLSA [40], [41] and LDA [42], can also be regarded as supervised dictionary learning, which learns a topic-level abstraction from codewords and yields a new image signature. It is worth noting that supervised vector quantization has been widely studied in data compression, with well-known methods such as self-organizing maps [38] and regression loss minimization [39].

C. Generative Dictionary Learning

In line with generative dictionary learning, Jaakkola et al. [43] exploited the presence/absence of class labels to supervise the generation of BoFs using Maximum a Posteriori estimation. Perronnin et al. [12] used the Gaussian Mixture Model with Fisher kernels for image categorization. In our previous work [27], a semantic embedding scheme was proposed to guide supervised dictionary learning, which used density-diversity purification to filter out imprecise patch labels fetched from the meta-data of web images. A Hidden Markov Random Field was then used to leverage the purified labels for dictionary learning.

D. Weakly Supervised Learning

Our work is inspired by an emerging machine learning direction named “weakly supervised learning” [44], [45], which learns jointly from multiple sources of labels, none of which is fully trusted. In practice, a variety of real-world problems can be formalized as this multi-labeler problem, where the reliabilities of different annotators may vary significantly. For example, an increasing number of works have explored Amazon’s Mechanical Turk for annotation [44]. How to simultaneously learn classifiers and evaluate label correctness in the absence of ground-truth labels was investigated in [44] and [45], where a robust learning procedure is devised to deal with multiple unreliable sources of labels.

Compared with the above, our work innovates in the following aspects:

• Dictionary learning is modeled from a novel probabilistic perspective by using a generative Hidden Markov Random Field. This enables us to explicitly model the supervised dictionary learning procedure with an Expectation-Maximization formulation.

• By exploiting cheaply available semantic attributes extracted from images, dictionary learning is “supervised” without any explicit labels. In this way, our approach is label-free yet still preserves semantic meaning in the BoF histogram.

III. WEAKLY SUPERVISED DICTIONARY LEARNING FRAMEWORK

A. Generative Dictionary Learning Formulation

This section introduces the proposed Hidden Markov Random Field (HMRF) for the formulation of generative dictionary learning. In this setting, the supervised labels may come from multiple sources, for example, image annotations, category labels, image query logs, as well as automatic image attribute detections (as adopted in this paper). To use supervised labels for dictionary learning, we need to link them, directly or indirectly, to local features or codewords. For example, labels may identify local feature correspondences (matching) or category identities. In the latter case, such labels are subsequently propagated to local features [27] or codewords.

Fig. 2. The graphical representation of the proposed weakly supervised dictionary learning with attributes.

In our case, we assume that these labels are automatically detected from images and then propagated to codewords. Fig. 2 shows the graphical representation of our supervised dictionary learning formulation, which consists of the following three components:

(1) A Hidden Layer that models the label supervision. It consists of K nodes $A = \{a_i\}_{i=1}^{K}$, each of which corresponds to a label. As discussed before, such labels may come from supervised category annotations, or alternatively from unsupervised image attribute scores [28]–[31]. In the unsupervised case, each $a_i$ denotes a unique attribute, which is mapped to the codewords $V = \{v_i\}_{i=1}^{M}$ in the Observed Layer with M mapping probabilities in [0, 1]. Here, K is the dimension of the detected semantic attributes, and M is the size of the learned codebook, i.e., the number of codewords (cluster centers) after quantization. We denote by A(i) the accumulated responses of the i-th attribute over all images in the dataset, and by $A_k(i)$ the response of the k-th attribute on the i-th image in the dataset.

(2) The Mapping represents the probabilistic co-occurrences between attributes in the Hidden Layer and codewords in the Observed Layer, as shown in Fig. 3. We name it the “Attribute-to-Codeword Mapping” and denote it by a many-to-many mapping matrix $\mathbf{M} \in \mathbb{R}^{K \times M}$. The entry at the k-th row (attribute) and the m-th column (codeword) of $\mathbf{M}$ is calculated as:

$$\mathbf{M}_{km} = \frac{1}{Z} \sum_{i=1}^{N} A_k(i) \times \mathrm{Fre}_m(i), \tag{1}$$

where Z is a normalization factor ensuring that each entry in $\mathbf{M}$ has a maximal response of 1 and a minimal response of 0, $A_k(i)$ is the response of the k-th attribute detector on the i-th image, and $\mathrm{Fre}_m(i)$ is the frequency of the m-th codeword on the i-th image.

Fig. 3. The proposed attribute-to-codeword mapping.

We multiply $A_k(i)$ and $\mathrm{Fre}_m(i)$ to derive the mapping probability between the k-th attribute and the m-th codeword for the i-th image. For each pair $(a_k, v_m)$, the probabilities from all reference images are summed to derive the final mapping score, which is then normalized over $\mathbf{M}$. After each round of dictionary learning, this mapping is updated to provide renewed supervision for both the dictionary learning and the feature coding steps, as detailed in Section III-C.

(3) An Observed Layer that contains the codewords $V = \{v_i\}_{i=1}^{M}$, which are updated after each iteration of dictionary learning.³ V is quantized from the local features extracted from the image dataset, $\{F(i)\}_{i=1}^{N} = \big\{\{f(i,l)\}_{l=1}^{N_i}\big\}_{i=1}^{N}$, where f(i, l) denotes the l-th local feature (out of $N_i$ in total) in the i-th image. After dictionary learning, we denote the learned BoF codes as $\{U(i)\}_{i=1}^{N}$.

³ Here, we do not restrict our model to a specific dictionary learning scheme. Methods such as K-Means clustering [14], Hierarchical K-Means clustering [11], or projected gradient descent [1], [18] can be readily adopted into our model.

We take a generative view of the Observed Field, using the labels from the Hidden Field through the Attribute-to-Codeword Mapping $\mathbf{M} \in \mathbb{R}^{K \times M}$. That is, for the i-th image, we treat its BoF U(i) as generated from its local feature set F(i), and this generation process is supervised by the attribute detection scores A(i) of this image:

$$P(U \mid A, F) = \prod_{i=1}^{N} P\big(U(i) \mid A(i), F(i)\big). \tag{2}$$

We assume conditional independence among individual images. Therefore, BoF coding is solved image by image, as detailed in Section III-C. In the graphical representation of Fig. 2, the BoF codes U and the attribute supervision A are conditionally dependent given the Observed Field V. In this manner, A is imposed to optimize the learning of the BoF codes U.

Indeed, the attribute scores are determined by the detector responses rather than by inference. However, the generation of the observed layer can still be treated as supervised (influenced) by the nodes in the hidden layer. The key change is that we do not infer the hidden variables; instead, at each iteration we infer the “mapping” between the learned codewords and the attributes. This mapping then supervises the codebook quantization in the next round.
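Equation (1) reduces to a single matrix product over the image dataset. The sketch below is an illustration under stated assumptions: attribute responses are non-negative, $\mathrm{Fre}_m(i)$ is read from each image's BoF histogram, and Z is chosen as the largest raw entry so that every entry lies in [0, 1] (the text does not spell out how Z is computed). The function name is hypothetical.

```python
import numpy as np


def attribute_codeword_mapping(attr: np.ndarray, freq: np.ndarray) -> np.ndarray:
    """Sketch of Eq. (1).

    attr: (N, K) attribute responses, attr[i, k] = A_k(i) (assumed non-negative).
    freq: (N, M) codeword frequencies, freq[i, m] = Fre_m(i), e.g. BoF histograms.
    Returns the K x M attribute-to-codeword mapping.
    """
    raw = attr.T @ freq  # raw[k, m] = sum_i A_k(i) * Fre_m(i)
    z = raw.max()        # assumed choice of Z: scale the largest entry to 1
    return raw / z if z > 0 else np.zeros_like(raw, dtype=float)
```

Following the text, this matrix would be recomputed from the new BoF histograms after every round of dictionary learning.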

B. Classemes Based Attribute Detection

1) Classemes Attribute Detector: In this paper, we use the Classemes attribute descriptor proposed by Torresani et al. [31]. The Classemes descriptor consists of the outputs of 2,659 offline-trained object category classifiers on a given image. The classifiers are trained separately on a different dataset, and the categories are selected from the Columbia LSCOM visual concept ontology (Lexicon Definitions and Annotations, http://www.ee.columbia.edu/ln/dvmm/lscom/). LSCOM is a professionally designed visual concept ontology describing an expanded multimedia concept lexicon. The LSCOM concepts relate to events, objects, locations, people, and programs, and were selected through a multi-step process involving input solicitation, expert critiquing, comparison with related ontologies, and performance evaluation.

2) Attribute Training Procedure: Classemes uses the LSCOM CYC ontology released on 2006-06-30, which contains 2,832 unique categories. Among them, 97 categories denoting abstract groups of other categories (marked in angle brackets) are removed. Other removed categories include plural categories that also occur as singulars, as well as some people-related categories that were effectively near-duplicates. This leaves 2,659 categories, for each of which a corresponding detector is trained.

For training, images labeled with each target category are crawled from image search engines such as Google Images and described with hybrid features, including color, texture, and local features. One-vs.-all linear SVMs are then trained. Online, given a test image, each category detector outputs a probabilistic response. The responses of all 2,659 individual detectors are then concatenated to produce the Classemes descriptor.
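To make the descriptor construction concrete, here is a schematic NumPy sketch of stacking the outputs of many one-vs.-all linear detectors into a single attribute vector. It is not the Classemes reference implementation: the image-level input feature `x`, the toy weights, and the logistic squashing that turns SVM decision values into probability-like responses are all assumptions made for illustration, and the function name is hypothetical.

```python
import numpy as np


def classemes_like_descriptor(x: np.ndarray, weights: np.ndarray, biases: np.ndarray) -> np.ndarray:
    """Schematic attribute descriptor built from one-vs.-all linear detectors.

    x:       (d,) image-level feature (stand-in for the hybrid color/texture/local features)
    weights: (C, d) one linear SVM weight vector per category (C = 2,659 in Classemes)
    biases:  (C,) SVM biases
    Returns a (C,) vector of responses in [0, 1].
    """
    scores = weights @ x + biases  # one decision value per category detector
    # Logistic squashing (assumed calibration), written via tanh so it cannot overflow.
    return 0.5 * (1.0 + np.tanh(0.5 * scores))
```

Because only the joint response pattern matters for the dictionary learning discussed below, the exact calibration of each detector is of secondary importance in this sketch.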

3) On the Detector Accuracy and Bag-of-Words Performance: As pointed out in [31], the base categories of Classemes are neither required nor expected to provide precise labels (of the form “water”, “sky”, “grass”, “beak”). On the contrary, [31] assumes that modern category recognizers are essentially quite dumb: a swimmer recognizer looks mainly for water texture, and the bomberplane recognizer contains some tuning for shapes corresponding to the airplane nose, and perhaps the shapes at the wing and tail [31]. Even if these recognizers are overspecialized for recognizing their nominal categories, they can still provide useful building blocks to a learning algorithm that learns to recognize a novel class such as duck.

Hence, we do not rely on the accuracy of each individual attribute detector. Instead, we exploit their joint response to a certain kind of image, i.e., images with similar semantics should have similar attribute responses, as demonstrated in [31] and its subsequent works. In this paper, we assume that these detector responses, although imprecise, provide a reasonable guess about the potential semantic labels. This is why we term our approach weakly supervised. Our experiments show that such imprecise, automatic labels significantly improve recognition and search accuracy. It is worth noting that other attribute detectors [28]–[30], [46] can also be used; our contribution is largely orthogonal to the choice of attribute detector.

C. Supervised Dictionary Learning With Attributes

Learning the dictionary V and the BoF codes U simultaneously is challenging, since the overall objective function is not convex. As demonstrated in [18] and [26], the problem is bi-convex: the feature coding step and the dictionary learning step are each convex when the other is fixed. Following this principle, we learn V and U alternately as follows:

1) Descriptor Coding: The Markov Random Field imposes a Markov probabilistic distribution to supervise the learning of V from $\{F(i)\}_{i=1}^{N}$. In principle, given the attribute score vector of the i-th image, the quantization of each local feature depends only on the other local features of the same image. More specifically, for the i-th image, the BoF code U(i) of its local features F(i) depends only on F(i) and A(i), and the dependency of U(i) on A(i) is measured via $\mathbf{M}$:

$$\begin{aligned} \arg\min_{U(i)} P\big(F(i) \to U(i) \mid A(i)\big) = \arg\min_{U(i)} \; & \sum_{l=1}^{N_i} \big\| V_{Q(f(i,l))} - f(i,l) \big\|_2^2 \\ & + \sum_{l=1}^{N_i} \sum_{k=1}^{K} \alpha \exp\big( -\mathbf{M}_{k,Q(f(i,l))} \, A_k(i) \big), \end{aligned} \tag{3}$$

where F(i) → U(i) denotes the quantization operation for the local features F(i) extracted from the i-th image, specified by quantizing each local feature f(i, l) as Q(f(i, l)), and $V_{Q(f(i,l))}$ denotes the codeword(s) of V selected for f(i, l). The objective in Equation (3) learns the bag-of-words code U(i) for the i-th image while the quantizer (codebook) V is kept fixed.

Q(f(i, l)) is the quantizer that encodes a local feature f(i, l) into one or more codewords in V and outputs the quantized codeword(s). The definition of the quantizer is very flexible, for instance hard quantization [11], [14], [27], soft quantization [13], [17], and sparse coding [18], [26].
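With a hard quantizer, the objective in Equation (3) decouples over the local features of an image: each f(i, l) independently picks the codeword that minimizes its squared distance plus the attribute penalty of that codeword. The following is a minimal sketch of this special case only, assuming the mapping matrix has shape K × M as in Equation (1); the function name and the default `alpha` are hypothetical, and soft quantization or sparse coding would need a different solver.

```python
import numpy as np


def attribute_aware_hard_coding(features, codebook, mapping, attr, alpha=1.0):
    """Hard-quantizer sketch of Eq. (3) for one image.

    features: (n, d) local features f(i, l) of image i
    codebook: (M, d) codewords V (held fixed in this step)
    mapping:  (K, M) attribute-to-codeword mapping from Eq. (1)
    attr:     (K,)   attribute responses A_k(i) of image i
    alpha:    weight of the attribute term (hypothetical default)
    Returns per-feature codeword indices and the BoF histogram U(i).
    """
    # Quantization term: squared distance from every feature to every codeword.
    d2 = ((features[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2)
    # Attribute term per codeword m: alpha * sum_k exp(-mapping[k, m] * attr[k]).
    # It is small when codeword m is strongly mapped to attributes the image exhibits.
    penalty = alpha * np.exp(-mapping * attr[:, None]).sum(axis=0)
    assign = (d2 + penalty[None, :]).argmin(axis=1)
    hist = np.bincount(assign, minlength=codebook.shape[0])
    return assign, hist
```

Setting `alpha = 0` recovers plain nearest-codeword quantization; a positive `alpha` lowers the cost of codewords that the mapping associates with the image's active attributes, which can change the assignment of features lying near a cell boundary.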

In the attribute supervision term of Equation (3), we add a violation penalty on $\mathbf{M}_{k,Q(f(i,l))} \times A_k(i)$ with an exponential loss to the descriptor coding loss $\|V_{Q(f(i,l))} - f(i,l)\|_2^2$. To obtain the attribute scores A(i) for each image, we adopt the Classemes attribute detector [31], which produces a 2,659-dimensional attribute vector for a given image. The time cost is typically less than 0.5 seconds for an image of 400×600 resolution on a regular PC.⁴

⁴ Without loss of generality, other visual attribute detectors, such as [28]–[30], [32], can also be used here. This choice is orthogonal to the core contribution of this paper.


a) Explanation: It is worth noting that a straightforward alternative is to re-weight the BoF matching scores using the attribute scores. This alternative can be treated as a “late fusion”, which combines the BoF-based and attribute-based scores at the similarity ranking stage. In contrast, our approach can be regarded as an “early fusion”, which combines the BoF code and the attribute scores at the feature extraction stage. In Section IV, we quantitatively show that the proposed approach outperforms this alternative.

The key of our approach is to introduce attribute-based automatic semantic annotation to “supervise” the coding process in Equation (3). This is reflected by the semantic loss term in the last line of Equation (3). To this end, two operations are designed: (1) attribute detection for individual images and (2) learning the overall attribute-to-codeword mapping function $\mathbf{M}$. In this manner, we embed semantics into the resulting BoF, so that images with related attributes are expected to have similar BoF histograms. This is beneficial in many applications. For instance, if this BoF histogram is used for image search, not only visually similar images but also semantically related images would be returned for a query. For another instance, if this BoF histogram is used for image categorization, images belonging to the same semantic category are more likely to be grouped together in the feature space. This makes it easier to train an accurate classifier, even with a linear model (e.g., the linear SVM tested in this paper).

2) Dictionary Learning: In this step, we aim to minimize the local feature quantization error. This is achieved by re-estimating the M codeword centroid vectors $\{v_m\}_{m=1}^{M}$ from the local feature assignments made in the previous coding step. For the k-th codeword, we update its centroid vector $v_k$ using the local features assigned to this codeword:

$$v_k = \frac{\sum_{f_i \in \mathcal{V}_k} f_i}{|\mathcal{V}_k|}, \quad \text{s.t.}\ \mathcal{V}_k = \{\, f_i \mid Q(f_i) = v_k \,\}. \tag{4}$$

Note that the condition $Q(f_i) = v_k$ describes the case in which each feature $f_i$ is assigned to a single codeword $v_k$; extending it to the case where a feature is assigned to multiple codewords is straightforward.
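Equation (4) is the centroid re-estimation step familiar from K-Means. The sketch below assumes hard assignments given as one codeword index per feature (for example, the output of the coding step); how codewords that receive no features are handled is not specified in the text, so keeping them unchanged is our assumption. The function name is hypothetical.

```python
import numpy as np


def update_centroids(features: np.ndarray, assign: np.ndarray, codebook: np.ndarray) -> np.ndarray:
    """Sketch of Eq. (4): move each codeword to the mean of its assigned features.

    features: (n, d) local features; assign: (n,) codeword index per feature;
    codebook: (M, d) current codewords. Empty codewords are left unchanged (assumption).
    """
    new_codebook = codebook.astype(float)  # astype returns a copy, input is not modified
    for k in range(codebook.shape[0]):
        members = features[assign == k]    # the set V_k of features quantized to v_k
        if len(members) > 0:
            new_codebook[k] = members.mean(axis=0)
    return new_codebook
```

Alternating this update with the attribute-aware coding step, and refreshing the attribute-to-codeword mapping after each round, follows the iteration the text attributes to Algorithm 1.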

Algorithm 1 describes the iterative procedure between the local feature coding and the dictionary learning steps. For simplicity, from now on we rewrite the local features and their indices as $F = \{f_i\}_{i=1}^{N}$ and $C = \{c_i\}_{i=1}^{N}$, which are identical to $\{F(i)\}_{i=1}^{N}$ and $\{C_i\}_{i=1}^{N}$, respectively. Here, N is the number of local features extracted from the entire image dataset.

D. Correlation to Existing Dictionary Learning Methods

In a simplified case, hard quantization [11], [14] assigns the i-th local feature $f_i$ to only one codeword, such that the following quantization loss is minimized:

$$\begin{aligned} \min_{V,C} \sum_{k=1}^{M} \sum_{Q(f_j)=v_k} \|f_j - v_k\|_2^2 &= \min_{V,C} \sum_{i=1}^{N} \|f_i - V c_i\|_2^2 \\ \text{s.t.}\ & \forall i,\ |c_i| = 1, \\ & \forall i, \forall j,\ c_{ij} \in \{0, 1\}, \\ & \forall i, \forall j,\ c_{ij} \ge 0, \end{aligned} \tag{5}$$

where the second to the fourth lines of Equation (5) constrain that only one word is assigned to the local descriptor $f_i$.

To reduce the one-to-one quantization error, this hard constraint is usually relaxed into a soft assignment [17], [20], e.g., by removing the constraint $|c_i| = 1$ in Equation (5). To further avoid overfitting, i.e., assigning each local feature to too many codewords, recent works [18], [26] have proposed imposing an $\ell_1$ regularizer on Equation (5), which results in a sparse coding based objective function:

$$\min_{V,C} \sum_{i=1}^{N} \big( \|f_i - V c_i\|_2^2 + \lambda \|c_i\|_1 \big) \quad \text{s.t.}\ \forall i, \forall j,\ c_{ij} \in [0, 1],\ c_{ij} \ge 0. \tag{6}$$

Without loss of generality, both Equation (5) and Equation (6) can be directly integrated into the proposed dictionary learning formulation in Equation (3). Taking sparse coding as an example, the following objective can be derived:

$$\begin{aligned} \min_{V,C}\ & \sum_{i=1}^{N} \big( \|f_i - V c_i\|_2^2 + \lambda \|c_i\|_1 \big) + \sum_{l=1}^{N_i} \sum_{k=1}^{K} \alpha \exp\big( -\mathbf{M}_{k,Q(f(i,l))} \, A_k(i) \big) \\ \text{s.t.}\ & \forall i, \forall j,\ c_{ij} \in [0, 1],\ c_{ij} \ge 0. \end{aligned} \tag{7}$$

Algorithm 1 The Bi-Convex Optimization Procedure of the Proposed Weakly Supervised Dictionary Learning With Attributes

IV. EXPERIMENTAL EVALUATIONS

We carried out two groups of experimental evaluations. First, in Section IV-C, we evaluate the proposed approach on image classification, with comparisons to the state-of-the-art works [18], [33], [34] on the Scene15, Caltech256, and ILSVRC2010 datasets. Second, in Section IV-D, we evaluate the proposed approach on image search, with comparisons to the state-of-the-art works [5], [18], [24], [25] on the Holiday and NUS-WIDE benchmarks, as well as on a one-million landmark image dataset.

A. Baselines and Evaluation Protocols

In the task of image classification, we compare our dictionary learning approach to ScSPM [18] and LScSPM [25], both


of which are closely related to the proposed approach. Since spatial cues are widely recognized as helpful for the BoF representation, we further combine our approach and the alternative approaches of [18] and [25] with the scheme proposed in [34], which adopts average pooling + Spatial Pyramid Matching (SPM) to integrate spatial cues. We compare our approach combined with [34] to two alternative solutions: (1) Weak Geometric Consistency + Hamming Embedding proposed in [5] and (2) Max Pooling with ScSPM proposed in [18]. For SPM, we use a 3-layer, 4-division setting with identical weights for each division in each layer. For ScSPM [18], we directly run the authors' code on Caltech256 with the default settings. On both Scene15 and Caltech256, we use the per-class classification precision as the evaluation protocol, which is identical to [18], [25], [34] and allows us to directly compare to the results reported in [18], [25], and [34].
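As an illustration of the pooling step described above, the following Python sketch pools per-feature codes over a spatial pyramid with either max or average pooling. It assumes the "3-layer, 4-division" setting means 1 × 1, 2 × 2, and 4 × 4 grids (each layer splitting every cell of the previous layer into four), that feature positions are normalized to [0, 1), and that empty cells contribute zeros; the function name `spm_pool` is hypothetical and this is not the code released with [18] or [34].

```python
from typing import List, Sequence, Tuple


def spm_pool(codes: Sequence[Sequence[float]],
             positions: Sequence[Tuple[float, float]],
             levels: int = 3,
             mode: str = "max") -> List[float]:
    """Pool per-feature codes over a spatial pyramid and concatenate the cells.

    Level l splits the image into a 2^l x 2^l grid, so levels=3 gives
    1 + 4 + 16 = 21 cells. Positions are (x, y) normalized to [0, 1).
    All cells get the same weight; empty cells contribute zeros (assumption).
    """
    if mode not in ("max", "avg"):
        raise ValueError("mode must be 'max' or 'avg'")
    dim = len(codes[0])
    pooled: List[float] = []
    for level in range(levels):
        g = 2 ** level
        for cy in range(g):
            for cx in range(g):
                # Codes whose feature falls inside grid cell (cx, cy) at this level.
                members = [c for c, (x, y) in zip(codes, positions)
                           if min(int(x * g), g - 1) == cx
                           and min(int(y * g), g - 1) == cy]
                if not members:
                    pooled.extend([0.0] * dim)
                elif mode == "max":
                    pooled.extend(max(c[d] for c in members) for d in range(dim))
                else:
                    pooled.extend(sum(c[d] for c in members) / len(members)
                                  for d in range(dim))
    return pooled
```

With a 1,024-word dictionary and three levels, this would yield a 21 × 1,024 = 21,504-dimensional image vector.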

In the task of image search, we use mean Average Precision (mAP) as the evaluation protocol, which is widely used in related work such as [6], [7], and [14]. mAP is defined as follows:

$$
\mathrm{mAP} = \frac{1}{L} \sum_{l=1}^{L} \frac{\sum_{r=1}^{N^{l}_{\mathrm{relevant}}} P(r)}{N^{l}_{\mathrm{relevant}}},
\tag{8}
$$

where $L$ is the total number of queries used for evaluation, $N^{l}_{\mathrm{relevant}}$ is the number of images relevant to the $l$-th query, $r$ denotes the $r$-th relevant image, and $P(r)$ is the precision at the cut-off rank of image $r$.
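Equation (8) can be computed with a short Python sketch such as the following. It assumes each query's result is a ranked list of image identifiers and that relevant images that are never retrieved contribute $P(r) = 0$, a common convention that the text does not state explicitly; the function names are illustrative.

```python
from typing import List, Sequence, Set


def average_precision(ranked: Sequence[str], relevant: Set[str]) -> float:
    """AP of one query: mean of P(r) over its N_relevant relevant images.

    P(r) is the precision at the cut-off rank of the r-th relevant image;
    relevant images that never appear in `ranked` contribute P(r) = 0 (assumption).
    """
    if not relevant:
        return 0.0
    hits = 0
    precision_sum = 0.0
    for rank, image_id in enumerate(ranked, start=1):
        if image_id in relevant:
            hits += 1
            precision_sum += hits / rank  # P(r) at this cut-off rank
    return precision_sum / len(relevant)


def mean_average_precision(rankings: Sequence[Sequence[str]],
                           relevants: Sequence[Set[str]]) -> float:
    """Eq. (8): average the per-query AP over the L evaluation queries."""
    aps: List[float] = [average_precision(r, rel)
                        for r, rel in zip(rankings, relevants)]
    return sum(aps) / len(aps)
```

For a query ranked `["a", "x", "b", "y"]` with relevant set `{"a", "b"}`, P(1) = 1 and P(3) = 2/3, so its AP is 5/6.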

The goal of the image search experiments is to validate that the proposed weakly supervised dictionary learning approach generates semantic-preserving BoF histograms. That is, if two images have similar or related semantic meanings, e.g., both contain similar scene layouts and compositions, their BoF histograms generated by our approach should be more similar than the original BoF histograms generated without semantic supervision. In this way, given a query image, semantically similar images are more likely to be found in the database. From another perspective, this benefit can be regarded as a way to address the so-called semantic gap, i.e., the gap between visual representations and high-level semantic meaning, which is a long-standing research challenge in content-based image retrieval.

It is worth noting that adding supervised information to facilitate image retrieval is an active research topic, with several directions such as visual reranking, pseudo relevance feedback, and query-dependent hashing. Our work differs from these approaches in that it directly embeds the supervised information into the feature representation. In this manner, subsequent procedures such as inverted indexing and distance calculation are retained unchanged. Therefore, no components of the standard search or indexing structure need to be changed or added, which is very helpful for large-scale applications.
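To illustrate why changing only the representation leaves the retrieval pipeline untouched, here is a minimal Python sketch of a standard inverted index over sparse BoF histograms. Scoring by a plain dot product, without tf-idf weighting or normalization, is an assumption made for brevity, and the function names are hypothetical; any BoF histogram, including one produced by the proposed dictionary, can be indexed this way.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Histogram = Dict[int, float]  # codeword index -> BoF weight (sparse)
Postings = Dict[int, List[Tuple[str, float]]]


def build_inverted_index(database: Dict[str, Histogram]) -> Postings:
    """Map each codeword to the (image_id, weight) pairs of images containing it."""
    index: Postings = defaultdict(list)
    for image_id, hist in database.items():
        for word, weight in hist.items():
            if weight != 0.0:
                index[word].append((image_id, weight))
    return index


def search(index: Postings, query: Histogram, top: int = 10) -> List[Tuple[str, float]]:
    """Score images by the dot product of BoF histograms (assumed scoring),
    visiting only the posting lists of codewords that occur in the query."""
    scores: Dict[str, float] = defaultdict(float)
    for word, q_weight in query.items():
        for image_id, weight in index.get(word, []):
            scores[image_id] += q_weight * weight
    # Highest score first; ties broken by image id for a deterministic order.
    return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))[:top]
```

Images that share no codeword with the query are never touched, which is what keeps this structure efficient at the million-image scale.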

B. Parameter Tuning and Implementation Details

TABLE I
CLASSIFICATION PRECISIONS ON SCENE15

1) Feature Sampling: In the local feature extraction stage, for a fair comparison, we follow the setting of [18] and densely sample each image. We first resize each image so that its longer side (width or height) is 300 pixels, preserving the aspect ratio. We then densely sample local patches from the resized image on a regular grid, with a step size of 8 pixels and a patch size of 16 × 16 pixels. Each sampled patch is described using SIFT [10], which results in a 128-dimensional feature vector.
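The sampling geometry above can be made concrete with a small Python sketch that computes the resized image size and the grid of patch positions. It assumes the step and patch sizes are in pixels, that every patch must lie fully inside the image, and that smaller images are upscaled to the 300-pixel target; SIFT extraction itself is not shown, and the function names are hypothetical.

```python
from typing import List, Tuple


def resized_shape(width: int, height: int, max_side: int = 300) -> Tuple[int, int]:
    """Scale so that the longer side equals max_side, preserving the aspect ratio.

    Assumption: images smaller than max_side are upscaled as well.
    """
    scale = max_side / max(width, height)
    return max(1, round(width * scale)), max(1, round(height * scale))


def dense_grid(width: int, height: int, step: int = 8,
               patch: int = 16) -> List[Tuple[int, int]]:
    """Top-left corners of patch x patch windows on a regular grid with the
    given step, keeping every window fully inside the image (assumption)."""
    xs = range(0, width - patch + 1, step)
    ys = range(0, height - patch + 1, step)
    return [(x, y) for y in ys for x in xs]
```

For example, a 600 × 400 image is resized to 300 × 200, which yields 36 × 24 = 864 patch positions, each later described by a 128-dimensional SIFT vector.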

2) Dictionary Size: Although optimal performance could be achieved by tuning the dictionary size via cross-validation, we prefer to fix this size so that the different dictionary learning approaches are compared on equal terms. Specifically, we fix the dictionary size for image classification at 1,024 codewords, learned from (1.0 to 1.2) × 10^5 randomly selected local features from each dataset. For the ILSVRC2010 dataset, we further train an 8,000-word dictionary to be comparable to the codebook size used in [48].

3) Sparsity Regularization: In both the sparse coding and the max/average pooling stages, we use the code released with [18]. The sparsity regularization parameter λ is set within the range 0.3 to 0.4, which is reported in [18] to achieve the best performance.
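For reference, the ℓ1-regularized objective of Equation (6) for a single feature can be solved with projected ISTA (iterative soft-thresholding), sketched below in Python. This is a swapped-in solver for illustration only: the code released with [18] uses its own solver, and the numerical meaning of λ depends on how that code scales the objective. The constraint $c_{ij} \in [0, 1]$ is handled by clipping after the shrinkage step, and the function name is hypothetical.

```python
from typing import List, Sequence


def sparse_code(f: Sequence[float], codebook: Sequence[Sequence[float]],
                lam: float = 0.3, iters: int = 500) -> List[float]:
    """Projected ISTA for  min_c ||f - V c||^2 + lam * ||c||_1,  0 <= c_j <= 1.

    codebook[j] is the j-th codeword v_j, i.e., the j-th column of V.
    Sketch only; not the solver used in the released code of [18].
    """
    dim, m = len(f), len(codebook)
    # The gradient 2 V^T (V c - f) is Lipschitz with constant 2 ||V||_2^2,
    # which 2 ||V||_F^2 upper-bounds, so 1 / (2 ||V||_F^2) is a safe step size.
    step = 1.0 / (2.0 * sum(x * x for v in codebook for x in v))
    c = [0.0] * m
    for _ in range(iters):
        residual = [sum(c[j] * codebook[j][d] for j in range(m)) - f[d]
                    for d in range(dim)]  # V c - f
        grad = [2.0 * sum(v[d] * residual[d] for d in range(dim)) for v in codebook]
        # Proximal step for lam * ||c||_1 on the box [0, 1]:
        # shift by step * lam (c is non-negative), then clip to [0, 1].
        c = [min(1.0, max(0.0, cj - step * (g + lam))) for cj, g in zip(c, grad)]
    return c
```

With an orthonormal codebook, the solution reduces to clip(f_j − λ/2, 0, 1) per codeword, so small responses are zeroed out; this is the sparsity that λ controls.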

4) Classifier: In the classifier training stage, following [18], [25], we adopt one-vs.-all linear SVMs, which have been shown to be effective and efficient when combined with max pooling [18], [25]. Note that, without loss of generality, other classifiers can also be used; the choice of classifier is orthogonal to the contribution of this paper.

C. Image Classification Performance

In the image classification task, we evaluate on the Scene15, Caltech256, and ILSVRC2010 datasets.

1) Scene15: Scene15 contains 4,485 images in 15 categories, with about 200 to 400 images per category (http://www-cvr.ai.uiuc.edu/ponce_grp/data/scene_categories/scene_categories.zip). The image content is diverse, ranging from indoor to outdoor scenes, e.g., bedroom, kitchen, building, and country. We randomly select 100 images per class for training and use all remaining images for testing.

Table I lists the performance of our approach and the alternative methods, and Fig. 4 shows the confusion matrix over the scene categories. Our weakly supervised dictionary learning scheme outperforms the state-of-the-art approaches by a large margin. It also outperforms an alternative approach we constructed, which trains linear SVMs separately on the BoF and Classemes vectors and then fuses their regression scores. This result indicates that, for jointly using attributes and BoF features, our "early fusion"-like combination is more suitable than the above "late fusion"-like combination.


Fig. 4. Confusion matrix of the proposed weakly supervised dictionary learning scheme on Scene15 (vertical: original label, horizontal: inferred label).

TABLE II
PERFORMANCE COMPARISONS ON CALTECH256

2) Caltech256: Caltech256 contains 29,780 images in 256 categories, plus a background class (www.vision.caltech.edu/Imagedatasets/Caltech256/). We evaluate our method under four settings, with 15, 30, 45, and 60 training images per category, respectively. The results are listed in Table II, which shows that our method outperforms these state-of-the-art approaches. Moreover, our method is more robust, as indicated by the lowest variance in accuracy. Finally, our approach is fully unsupervised in the feature extraction stage, i.e., it does not require any category labels. This is an important advantage over the alternative approaches of [18] and [25], whose performance is closest to ours.

In addition, we compare our approach, combined with a Radial Basis Function (RBF) kernel, to the Fisher Kernel based approach proposed in [47], which can be treated as a non-linear feature mapping followed by a linear SVM. The proposed feature is likewise a kind of non-linear feature mapping of the image. When combined with a linear SVM, the proposed feature performs worse than the Fisher Kernel based approach. However, when combined with a non-linear SVM, the proposed feature is effectively augmented with a non-linear mapping at the feature end, since a kernel SVM is equivalent to a linear SVM on implicitly mapped features. As shown in the second part of Table II, in this way our approach still outperforms this state-of-the-art alternative.

Other supervised dictionary learning approaches could also be included in Table II. However, LScSPM is already the state-of-the-art work in the literature; therefore, outperforming LScSPM already demonstrates the merit of our approach.

TABLE III
FLAT COST TEST ON 5 PREDICTIONS ON IMAGENET 2010 USING OUR SEMANTIC-PRESERVING BoF + STOCHASTIC SVM, WITH COMPARISONS TO STATE-OF-THE-ARTS OF LCC [48] AND FISHER KERNEL [47], AS WELL AS BASELINE BoF METHODS

3) ILSVRC2010: Furthermore, we have conducted a group of experiments to verify the performance of our semantic-preserving BoF in large-scale object recognition. To this end, we test our feature and several alternative state-of-the-art approaches on the ILSVRC2010 dataset (http://www.image-net.org/challenges/LSVRC/2010/download-public). This dataset was used for the ImageNet 2010 challenge and contains 1.2M images from 1,000 diverse categories. There are 1,261,406 training images in total, with 668 to 3,047 images per synset (category), and 50,000 validation images, with 50 images per synset.

We extracted several groups of features in this experiment. First, we use the dense SIFT features provided on the website, which are extracted using a VLFeat-based implementation with a spacing of 10 pixels and 20 × 20 overlapping patches. We further extract HoG + LBP features following the setting of [48], with three patch sizes for computing HoG + LBP, namely, 16 × 16, 24 × 24, and 32 × 32. We then concatenate the HoG and LBP features into a single feature vector. On these features, we train a codebook of 8,000 codewords, which approximates the codebook size of [48]. We use a stochastic SVM (i.e., a stochastic gradient descent SVM) as the classifier.⁵
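The stochastic SVM is not specified further in the text; one common instance of a stochastic gradient descent SVM is Pegasos, sketched below in Python together with a one-vs.-all wrapper. The choice of Pegasos, the regularized bias feature, the hyperparameter defaults, and the function names are assumptions for illustration, not the configuration used in these experiments.

```python
import random
from typing import Dict, Hashable, List, Sequence


def train_pegasos(xs: Sequence[Sequence[float]], ys: Sequence[int],
                  lam: float = 0.1, epochs: int = 200, seed: int = 0) -> List[float]:
    """Binary linear SVM trained with Pegasos-style SGD; ys are in {-1, +1}.

    Assumption: a constant 1.0 feature is appended to act as a (regularized) bias.
    """
    rng = random.Random(seed)
    w = [0.0] * (len(xs[0]) + 1)
    order = list(range(len(xs)))
    t = 0
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (lam * t)  # Pegasos step size
            x = list(xs[i]) + [1.0]
            margin = ys[i] * sum(wj * xj for wj, xj in zip(w, x))
            w = [(1.0 - eta * lam) * wj for wj in w]  # gradient of the L2 term
            if margin < 1.0:  # hinge loss is active: step toward y * x
                w = [wj + eta * ys[i] * xj for wj, xj in zip(w, x)]
    return w


def train_one_vs_all(xs, labels, classes, **kwargs) -> Dict[Hashable, List[float]]:
    """One binary SVM per class: that class is +1, every other class is -1."""
    return {c: train_pegasos(xs, [1 if lab == c else -1 for lab in labels], **kwargs)
            for c in classes}


def predict(models: Dict[Hashable, List[float]], x: Sequence[float]) -> Hashable:
    """Pick the class whose SVM gives the largest decision value."""
    xb = list(x) + [1.0]
    return max(models, key=lambda c: sum(wj * xj for wj, xj in zip(models[c], xb)))
```

Each update touches a single training example, which is what makes this family of solvers attractive for the 1.26M-image, 1,000-class setting.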

We then compare the learned semantic-preserving BoF feature with: (1) Descriptor Coding (HoG + LBP) + stochastic SVM [48], which is reported as the best-performing method in ImageNet 2010; (2) Fisher Kernel + SVM [47], which ranked second in the ImageNet 2010 challenge; (3) Semantic-preserving BoF (Dense SIFT) + stochastic SVM; and (4) BoF (Dense SIFT) + stochastic SVM. Table III shows the performance comparison between our approach (i.e., Semantic-preserving BoF (HoG + LBP) + stochastic SVM) and the above four alternatives. To directly compare with the results reported in the ImageNet 2010 challenge, we use the flat cost with 5 predictions as our evaluation protocol.
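Assuming the standard ILSVRC 2010 definition, the flat cost with 5 predictions counts an image as an error (cost 1) when its ground-truth class is not among the five predicted classes, and averages this cost over the test images, so lower is better. A minimal Python sketch, with hypothetical function names:

```python
from typing import List, Sequence


def top_k_labels(scores: Sequence[float], k: int = 5) -> List[int]:
    """Indices of the k highest-scoring classes (ties broken by lower index)."""
    return sorted(range(len(scores)), key=lambda j: (-scores[j], j))[:k]


def flat_cost(all_scores: Sequence[Sequence[float]], truths: Sequence[int],
              k: int = 5) -> float:
    """Fraction of images whose ground-truth class is not among the k predictions."""
    errors = [0 if truth in top_k_labels(s, k) else 1
              for s, truth in zip(all_scores, truths)]
    return sum(errors) / len(errors)
```

Because only membership in the top five matters, the cost ignores how the correct class is ranked within those five predictions.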

As shown in Table III, the proposed semantic-preserving BoF clearly outperforms the LCC based approach [48] with the same feature setting (HoG + LBP). Also, when only SIFT is used as the local feature, our semantic-preserving BoF outperforms the baseline BoF by a large margin (flat cost 0.47 to 0.78). It is worth noting that, in our case, the sparse coding step is very efficient.

⁵We use three servers, each with a 16-core CPU, to train the classifiers for these 1,000 categories, which takes approximately 4 days. The extraction of HoG features is also parallelized over these 48 cores and takes approximately one week for both HoG and LBP features.


Fig. 5. Performance comparisons on Holidays + Flickr1M, with codebook size = 20,000.

Fig. 6. Performance comparisons on Holidays + Flickr1M, with codebook size = 200,000.

TABLE IV
mAP COMPARISONS TO VOCABULARY TREE [11], SPM [34], AND ScSPM [18] ON NUS-WIDE

The performance of the proposed semantic-preserving BoF can be further improved by using non-linear SVMs, e.g., with the χ² kernel.

D. Image Search Performance

In the image search task, we evaluate on the Holidays + Flickr1M and NUS-WIDE benchmarks, as well as on a 1-million landmark image dataset.

1) Holidays + Flickr1M: We first evaluate our approach on the Holidays + Flickr1M dataset [5], which combines the Holidays near-duplicate image search benchmark with one million Flickr distractor images. On such a large dataset, label outliers are unavoidable, and they seriously degrade the performance of other supervised dictionary learning approaches [18], [24], [25]. In contrast, by exploiting only attribute-level weak supervision, our approach does not require any actual labels. Therefore, we do not compare with supervised dictionary learning approaches in the following image search experiments.

As shown in Fig. 5 and Fig. 6, our approach also significantly outperforms the unsupervised dictionary learning approaches, including Vocabulary Tree [11], SPM [34], and Weak Geometric Consistency + Hamming Embedding [5]. It is worth noting that the Holidays benchmark is designed for landmark retrieval, while the Classemes attributes used in our approach are designed for general-purpose image description. Even with this application bias, incorporating attributes into dictionary learning still achieves better performance, which we attribute to the generality of attribute-based detectors. This further supports the effectiveness and generality of the proposed approach.

Fig. 7. Performance comparison of the proposed approach to alternatives

[11], [34], [35] in the ten-million landmark image dataset.

2) NUS-WIDE: The second dataset is NUS-WIDE, which contains around 270,000 web images associated with 81 ground-truth concept tags (http://lms.comp.nus.edu.sg/research/NUS-WIDE.htm). Each image in NUS-WIDE has multiple tags, which serve as the ground-truth categories for supervised dictionary learning. In evaluation, we consider the 21 most frequent tags, such as "animal", "buildings", and "person", each of which has abundant relevant images, ranging from 5,000 to 30,000. We uniformly sample 100 images from each of the 21 selected tags to form a query set of 2,100 images, and the remaining images form the training set. Using the learned dictionary, each image is represented by a 1,024-dimensional feature vector, similar to LLC [26].

Table IV shows that our approach (weakly supervised dictionary learning + max or average pooling) significantly outperforms the baselines, including Vocabulary Tree [11], SPM [34], and ScSPM [18]. Compared with SPM [34] and ScSPM [18], our performance gain can be explained by the fact that only a small portion of the images in the NUS-WIDE dataset is manually labeled. Many other images in the NUS-WIDE dataset carry only their original meta tags, without any correctness checking. Indeed, most of these tags are noisy and degrade the supervised dictionary learning for ScSPM [18]. However, such noise does not affect our approach, since our approach does not require any actual labels.

Fig. 8. Examples in our 10 Million landmark image dataset, in which images are crawled from Flickr and Panoramia from the cities of Beijing, New York City, Barcelona, Singapore, and Florence.

Fig. 9. Confusion table of our approach (bottom) on the PASCAL VOC dataset compared to [15] (top).

3) 1M Landmarks: We also test the performance of our approach for image search on a 1-million landmark image dataset collected from Flickr (www.flickr.com) and Panoramia (www.Panoramia.com). We crawl images with their GPS tags from five metropolitan areas worldwide, namely Beijing, New York City, Barcelona, Singapore, and Florence, as shown in Fig. 8. We refer to this dataset as 1M Landmarks in the subsequent discussion.

We run k-means clustering on the GPS tags to partition the photos of each city into multiple regions. For each city, we select the 30 densest regions as well as 30 random regions. We then ask a group of volunteers to identify one or more dominant landmark views in each of these 60 regions. For a given dominant view, all of its near-duplicate photos are manually labeled in the region where it is located and in the nearby surrounding regions. Eventually, we obtain 300 queries together with their ground-truth labels (correct matches).

Since landmark-level identity is not suitable as supervised information, here we only compare our solution to unsupervised dictionary learning. Fig. 7 presents the mAP comparison between our approach and three unsupervised dictionary learning approaches: Vocabulary Tree [11], SPM [34], and Hierarchical Vocabulary Optimization [35]. To clarify the contributions of the different components of our approach, we derive two variants in terms of (1) max or average pooling + spatial pyramid and (2) sparse coding. The performance of these five methods is shown in Fig. 7. Our dictionary learning approach clearly outperforms the three baselines [11], [34], [35]. We also observe that SPM does not bring a significant performance gain, and for some dictionary sizes the mAP even degrades. This can be explained by the fact that, in an uncontrolled setting, photos of a landmark are not taken from a uniform distance. Using SPM is therefore inappropriate, as the spatial layouts of corresponding photos might vary considerably. Furthermore, max pooling performs better than average pooling. We explain this by the ability of max pooling to capture the salient response in each spatial subdivision, whereas average pooling only records the averaged response in each subdivision.

4) Comparison to Supervised Dictionary Learning: We further compare our approach to two supervised dictionary learning approaches [15], [27] on PASCAL VOC 2005 (http://www.PASCAL-network.org/challenges/VOC/). We adopt VOC 2005 rather than VOC 2008 so that we can directly compare our results to those of [15] and [27], which are most related to our approach. As in [15], we split PASCAL into two equal-sized sets for training and testing. The training set provides bounding boxes for annotated image regions, which give the purified patch-label correspondences needed to implement [27]. For [27], the correspondence set of local features is built from the bounding boxes with annotation labels, from which the supervised dictionary is learned. In classifier learning, each annotation is viewed as a class with a set of BoFs extracted from the corresponding bounding boxes. As in [15], each test bounding box is classified by nearest-neighbor search.
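A minimal sketch of the nearest-neighbor step in Python is shown below. The distance between BoF histograms is not specified in the text, so squared Euclidean distance is an assumption, as are the function name and the example labels.

```python
from typing import Hashable, Sequence, Tuple


def nearest_neighbor_label(query: Sequence[float],
                           train: Sequence[Tuple[Sequence[float], Hashable]]) -> Hashable:
    """Return the label of the training BoF histogram closest to the query.

    Assumption: squared Euclidean distance between histograms.
    """
    def dist(item: Tuple[Sequence[float], Hashable]) -> float:
        hist, _ = item
        return sum((a - b) ** 2 for a, b in zip(query, hist))

    return min(train, key=dist)[1]
```

Here `train` would hold one (histogram, annotation) pair per training bounding box, so the quality of the classification depends directly on how well the dictionary separates the BoFs of different annotations.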

As shown in Fig. 9, our method achieves better precision than [15] and [27] in almost all categories. Note that the supervised dictionary learning of [27] actually provides performance almost comparable to our dictionary learning scheme. However, our solution does not depend on any actual labels. This advantage is especially important as the number of categories grows. For instance, in the ImageNet evaluations, the number of categories could be tens of thousands (or even more). In such a case, label correlations would become a serious issue, which would largely challenge the performance of existing supervised dictionary learning schemes.

V. CONCLUSIONS AND FUTURE WORK

While most existing dictionary learning methods resort to unsupervised quantization, the recent trend favors supervised dictionary learning that incorporates manual annotations or category labels of images. However, the expensive labor of human labeling hampers the application of supervised dictionary learning to real-world, large-scale computer vision tasks. To the best of our knowledge, this paper is the first study of how to make use of cheaply available image attributes to help the visual dictionary learning process, formulated as a weakly supervised learning problem. We tackle dictionary learning from a generative learning perspective. We propose a Hidden Markov Random Field (HMRF) model to effectively and robustly exploit the error-containing supervision stemming from the image attributes. In the HMRF model, the attributes are modeled as a hidden layer, while the codewords are modeled as an observation layer. As such, the codewords are iteratively refined by updating an attribute-to-word mapping.

The proposed weakly supervised dictionary learning approach is generic, because several of its components (e.g., feature coding and attribute detection) can easily be replaced by other cutting-edge algorithms. Meanwhile, our formulation covers several existing unsupervised and supervised dictionary learning methods. In evaluation, we demonstrate the advantages of our approach in image classification and search experiments performed on several benchmark datasets.

REFERENCES

[1] J. Duchi, S. Shalev-Shwartz, Y. Singer, and T. Chandra, "Efficient projections onto the ℓ1-ball for learning in high dimensions," in Proc. 25th Int. Conf. Mach. Learn., 2008, pp. 272–279.

[2] J. C. van Gemert, C. J. Veenman, A. W. M. Smeulders, and J.-M. Geusebroek, "Visual word ambiguity," IEEE Trans. Pattern Anal. Mach. Intell., vol. 32, no. 7, pp. 1271–1283, Jul. 2010.

[3] A. Gionis, P. Indyk, and R. Motwani, "Similarity search in high dimensions via hashing," in Proc. Int. Conf. Very Large Data Bases, 1999, pp. 518–529.

[4] B. Kulis and K. Grauman, "Kernelized locality-sensitive hashing for scalable image search," in Proc. IEEE Int. Conf. Comput. Vis., 2009, pp. 2130–2137.

[5] H. Jegou, M. Douze, and C. Schmid, "Hamming embedding and weak geometric consistency for large scale image search," in Proc. 10th Eur. Conf. Comput. Vis., 2008, pp. 304–317.

[6] H. Jegou, M. Douze, C. Schmid, and P. Perez, "Aggregating local descriptors into a compact image representation," in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., Jun. 2010, pp. 3304–3311.

[7] R. Ji et al., "Location discriminative vocabulary coding for mobile landmark search," Int. J. Comput. Vis., vol. 96, no. 3, pp. 290–314, Jul. 2011.

[8] S. Lazebnik and M. Raginsky, "Supervised learning of quantizer codebooks by information loss minimization," IEEE Trans. Pattern Anal. Mach. Intell., vol. 31, no. 7, pp. 1294–1309, Jul. 2009.

[9] J. Liu, Y. Yang, and M. Shah, "Learning semantic visual vocabularies using diffusion distance," in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., Jun. 2009, pp. 461–468.

[10] D. G. Lowe, "Distinctive image features from scale-invariant keypoints," Int. J. Comput. Vis., vol. 60, no. 2, pp. 91–110, 2004.

[11] D. Nister and H. Stewenius, "Scalable recognition with a vocabulary tree," in Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit., vol. 2, Jun. 2006, pp. 2161–2168.

[12] F. Perronnin, C. Dance, G. Csurka, and M. Bressan, "Adapted vocabularies for generic visual categorization," in Proc. 9th Eur. Conf. Comput. Vis., May 2006, pp. 464–475.

[13] J. Philbin, O. Chum, M. Isard, J. Sivic, and A. Zisserman, "Object retrieval with large vocabularies and fast spatial matching," in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., Jun. 2007, pp. 1–8.

[14] J. Sivic and A. Zisserman, "Video Google: A text retrieval approach to object matching in videos," in Proc. 9th IEEE Int. Conf. Comput. Vis., Oct. 2003, pp. 1470–1477.

[15] J. Winn, A. Criminisi, and T. Minka, "Object categorization by learned universal visual dictionary," in Proc. IEEE Int. Conf. Comput. Vis., vol. 2, Oct. 2005, pp. 1800–1807.

[16] Z. Wu, Q. Ke, M. Isard, and J. Sun, "Bundling features for large scale partial-duplicate web image search," in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., Jun. 2009, pp. 25–32.

[17] J. Yang, Y.-G. Jiang, A. G. Hauptmann, and C.-W. Ngo, "Evaluating bag-of-visual-words representations in scene classification," in Proc. Int. Workshop Multimedia Inf. Retr., Sep. 2007, pp. 197–206.

[18] J. Yang, K. Yu, Y. Gong, and T. Huang, "Linear spatial pyramid matching using sparse coding for image classification," in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., Jun. 2009, pp. 1794–1801.

[19] O. Boiman, E. Shechtman, and M. Irani, "In defense of nearest-neighbor based image classification," in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., Jun. 2008, pp. 1–8.

[20] J. Philbin, O. Chum, M. Isard, J. Sivic, and A. Zisserman, "Lost in quantization: Improving particular object retrieval in large scale image databases," in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., Jun. 2008, pp. 1–8.

[21] Y. LeCun et al., "Handwritten digit recognition with a back-propagation network," in Advances in Neural Information Processing Systems. San Francisco, CA, USA: Morgan Kaufmann, Nov. 1989.

[22] T. Serre, L. Wolf, and T. Poggio, "Object recognition with features inspired by visual cortex," in Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit., Jun. 2005, pp. 994–1000.

[23] F. Moosmann, B. Triggs, and F. Jurie, "Fast discriminative visual codebooks using randomized clustering forests," in Advances in Neural Information Processing Systems. Cambridge, MA, USA: MIT Press, Dec. 2007, pp. 985–992.

[24] J. Mairal, F. R. Bach, J. Ponce, G. Sapiro, and A. Zisserman, "Supervised dictionary learning," in Advances in Neural Information Processing Systems. Red Hook, NY, USA: Curran Associates Inc., Dec. 2008.

[25] S. Gao, I. W. Tsang, L.-T. Chia, and P. Zhao, "Local features are not lonely – Laplacian sparse coding for image classification," in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., Jun. 2010, pp. 3555–2561.

[26] J. Wang, J. Yang, K. Yu, F. Lv, T. Huang, and Y. Gong, "Locality-constrained linear coding for image classification," in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., Jun. 2010, pp. 3360–3367.

[27] R. Ji, H. Yao, X. Sun, B. Zhong, and W. Gao, "Towards semantic embedding in visual vocabulary," in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., Jun. 2010, pp. 918–925.

[28] V. Ferrari and A. Zisserman, "Learning visual attributes," in Advances in Neural Information Processing Systems. Red Hook, NY, USA: Curran Associates Inc., Dec. 2007.

[29] A. Farhadi, I. Endres, D. Hoiem, and D. Forsyth, "Describing objects by their attributes," in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., Jun. 2009, pp. 1778–1785.

[30] Y. Wang and G. Mori, "A discriminative latent model of object classes and attributes," in Proc. 11th Eur. Conf. Comput. Vis., Sep. 2010, pp. 155–168.

[31] L. Torresani, M. Szummer, and A. Fitzgibbon, "Efficient object category recognition using classemes," in Proc. 11th Eur. Conf. Comput. Vis., Sep. 2010, pp. 776–789.

[32] B. Siddiquie, R. S. Feris, and L. S. Davis, "Image ranking and retrieval based on multi-attribute queries," in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., Jun. 2011, pp. 801–808.

[33] Z. Wu, Q. Ke, M. Isard, and J. Sun, "Bundling features for large scale partial-duplicate web image search," in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., Jun. 2009, pp. 25–32.

[34] S. Lazebnik, C. Schmid, and J. Ponce, "Beyond bags of features: Spatial pyramid matching for recognizing natural scene categories," in Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit., vol. 2, Jun. 2006, pp. 2169–2178.

[35] R. Ji, X. Xie, H. Yao, and W.-Y. Ma, "Vocabulary hierarchy optimization for effective and transferable retrieval," in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., Jun. 2009, pp. 1161–1168.

[36] Y. Weiss, A. Torralba, and R. Fergus, "Spectral hashing," in Advances in Neural Information Processing Systems. Red Hook, NY, USA: Curran Associates Inc., 2008.

[37] R. Ji, H. Yao, W. Liu, X. Sun, and Q. Tian, "Task-dependent visual-codebook compression," IEEE Trans. Image Process., vol. 21, no. 4, pp. 2282–2293, Apr. 2012.

[38] T. Kohonen, Self-Organizing Maps, 3rd ed. New York, NY, USA: Springer-Verlag, 2000.

[39] A. Rao, D. Miller, K. Rose, and A. Gersho, "A generalized VQ method for combined compression and estimation," in Proc. IEEE Int. Conf. Acoust., Speech, Signal Process., vol. 4, May 1996, pp. 2032–2035.

[40] L. Fei-Fei and P. Perona, "A Bayesian hierarchical model for learning natural scene categories," in Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit., vol. 2, Jun. 2005, pp. 524–531.

[41] R. Ji, J. Chen, L.-Y. Duan, and W. Gao, "Towards compact topical descriptors," in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., Jun. 2012, pp. 2925–2932.

[42] A. Bosch, A. Zisserman, and X. Munoz, "Scene classification using a hybrid generative/discriminative approach," IEEE Trans. Pattern Anal. Mach. Intell., vol. 30, no. 4, pp. 712–727, Apr. 2008.

[43] T. S. Jaakkola and D. Haussler, "Exploiting generative models in discriminative classifiers," in Advances in Neural Information Processing Systems. Cambridge, MA, USA: MIT Press, 1999.

[44] V. C. Raykar et al., "Supervised learning from multiple experts: Whom to trust when everyone lies a bit," in Proc. 26th Annu. Int. Conf. Mach. Learn., 2009, pp. 889–896.

[45] O. Dekel and O. Shamir, "Good learners for evil teachers," in Proc. 26th Annu. Int. Conf. Mach. Learn., 2009, pp. 233–240.

[46] L.-J. Li, H. Su, L. Fei-Fei, and E. P. Xing, "Object bank: A high-level image representation for scene classification & semantic feature sparsification," in Advances in Neural Information Processing Systems. Red Hook, NY, USA: Curran Associates Inc., 2010, pp. 1378–1386.

[47] F. Perronnin, J. Sánchez, and T. Mensink, "Improving the Fisher kernel for large-scale image classification," in Proc. 11th Eur. Conf. Comput. Vis., 2010, pp. 143–156.

[48] Y. Lin et al., "Large-scale image classification: Fast feature extraction and SVM training," in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., Jun. 2010, pp. 1689–1696.

Yue Gao (SM’14) received the B.S. degree from

the Harbin Institute of Technology, Harbin, China,

and the M.E. and Ph.D. degrees from Tsinghua

University, Beijing, China.

Rongrong Ji (SM’14) received the Ph.D. degree in computer science from the Harbin Institute of Technology, Harbin, China. He was a Post-Doctoral Research Fellow with Columbia University, New York, NY, USA, from 2011 to 2013. He has authored over 40 refereed journal and conference papers in the International Journal of Computer Vision, the IEEE TRANSACTIONS ON IMAGE PROCESSING, the IEEE TRANSACTIONS ON MULTIMEDIA, the ACM Transactions on Multimedia Computing, Communications and Applications, the IEEE MULTIMEDIA, Pattern Recognition, the Computer Vision and Pattern Recognition (CVPR) Conference, the ACM Multimedia Conference, the International Joint Conference on Artificial Intelligence, and the Conference on Artificial Intelligence. His research interests include image and video search, content understanding, mobile visual search and recognition, and interactive human–computer interfaces. He was a recipient of the Best Paper Award at ACM Multimedia 2011 and the Microsoft Fellowship 2007. He is a Guest Editor of the IEEE Multimedia Magazine, the IEEE NEUROCOMPUTING, the IEEE Signal Processing Magazine, the ACM Multimedia Systems, and Multimedia Tools and Applications. He was the Special Session Chair of the International Conference on Multimedia Retrieval 2014, the Visual Communications and Image Processing Conference 2013, the Magnetism and Magnetic Materials Conference 2013, and the Pacific-Rim Conference on Multimedia 2012. He was also a Program Committee Member of over 30 flagship international conferences, including CVPR 2013, the International Conference on Computer Vision 2013, and ACM Multimedia 2010 to 2014. He is currently a Professor with the Department of Cognitive Science, School of Information Science and Engineering, Xiamen University, Xiamen, China.

Wei Liu (M’14) received the Ph.D. degree from Columbia University, New York, NY, USA, in 2012. He was a recipient of the 2013 Jury Award for Best Thesis of Columbia University. He is currently a Research Staff Member with the IBM Thomas J. Watson Research Center, Yorktown Heights, NY, USA. His research interests include machine learning, computer vision, pattern recognition, and information retrieval.

Qionghai Dai (SM’05) received the B.S. degree in mathematics from Shaanxi Normal University, Xi’an, China, in 1987, and the M.E. and Ph.D. degrees in computer science and automation from Northeastern University, Shenyang, China, in 1994 and 1996, respectively. Since 1997, he has been on the faculty of Tsinghua University, Beijing, China, where he is currently a Professor and the Director of the Broadband Networks and Digital Media Laboratory. His research areas include signal processing, broadband networks, video processing, and communication.

Gang Hua (SM’11) received the B.S. degree in automatic control engineering and the M.S. degree in control science and engineering from Xi’an Jiaotong University (XJTU), Xi’an, China, in 1999 and 2002, respectively, and the Ph.D. degree from the Department of Electrical and Computer Engineering, Northwestern University, Evanston, IL, USA, in 2006. He was enrolled in the Special Class for the Gifted Young of XJTU in 1994. He is currently an Associate Professor of Computer Science with the Stevens Institute of Technology, Hoboken, NJ, USA. He was a Research Staff Member with the IBM Thomas J. Watson Research Center, Hawthorne, NY, USA, from 2010 to 2011, a Senior Researcher with the Nokia Research Center, Hollywood, CA, USA, from 2009 to 2010, and a Scientist with Microsoft Live Labs Research, Redmond, WA, USA, from 2006 to 2009. He has authored over 50 peer-reviewed publications in prestigious international journals and conferences. He holds three U.S. patents and has 17 more patents pending. He is an Associate Editor of the IEEE TRANSACTIONS ON IMAGE PROCESSING and the IAPR Journal of Machine Vision and Applications, and a Guest Editor of the IEEE TRANSACTIONS ON PATTERN ANALYSIS AND MACHINE INTELLIGENCE and the International Journal of Computer Vision. He was an Area Chair of the 2011 IEEE International Conference on Computer Vision and of ACM Multimedia 2011, and the Workshops and Proceedings Chair of the 2011 IEEE Conference on Face and Gesture Recognition. He is a member of the Association for Computing Machinery.