CSCI 4390/6390 Database Mining

Assignment 3

Instructor: Wei Liu

Due on Nov. 25th


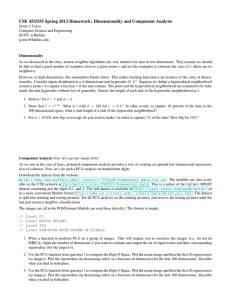

Problem 1. Sequential Pattern Mining (20 points)

For the database above, which contains 5 records (i.e., transactions), set the minimum support (frequency) to 3.

Find all frequent sequential patterns of length 2. (Hint: Use the Apriori property of sequential patterns, i.e., no super-sequence of an infrequent pattern is frequent. Start by finding all frequent length-1 patterns.)
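The actual database is given only in the image above, so the following is a minimal sketch on a hypothetical five-sequence toy database (not the assignment's data), with minimum support 3. It assumes the common convention that a length-2 pattern contains two items in total, either in two successive elements, ⟨(x)(y)⟩, or together in one element, ⟨(xy)⟩. All names are hypothetical. It follows the hint: count length-1 patterns first, then generate length-2 candidates only from the frequent items.

```python
from itertools import combinations

# Hypothetical toy database (NOT the assignment's data):
# each data sequence is an ordered list of itemsets.
DB = [
    [{"a"}, {"a", "b"}, {"c"}],
    [{"a", "b"}, {"c"}],
    [{"b"}, {"c"}],
    [{"a"}, {"c"}],
    [{"d"}],
]
MIN_SUP = 3


def contains(sequence, pattern):
    """True if `pattern` (a list of itemsets) is a subsequence of `sequence`."""
    pos = 0
    for itemset in pattern:
        # Greedily match the earliest remaining element that covers this itemset.
        while pos < len(sequence) and not itemset <= sequence[pos]:
            pos += 1
        if pos == len(sequence):
            return False
        pos += 1
    return True


def support(db, pattern):
    """Number of data sequences that contain `pattern`."""
    return sum(contains(seq, pattern) for seq in db)


def frequent_length2(db, min_sup):
    items = sorted({i for seq in db for itemset in seq for i in itemset})
    # Step 1: frequent length-1 patterns <(x)>.
    f1 = [x for x in items if support(db, [{x}]) >= min_sup]
    # Step 2 (Apriori): candidates are built only from frequent items.
    candidates = [[{x}, {y}] for x in f1 for y in f1]         # <(x)(y)>
    candidates += [[{x, y}] for x, y in combinations(f1, 2)]  # <(xy)>
    result = {}
    for pat in candidates:
        s = support(db, pat)
        if s >= min_sup:
            key = "<" + " ".join("(" + "".join(sorted(p)) + ")" for p in pat) + ">"
            result[key] = s
    return f1, result


if __name__ == "__main__":
    f1, f2 = frequent_length2(DB, MIN_SUP)
    print(f1)
    print(f2)
```

On this toy data the frequent items are a, b, and c, and only ⟨(a)(c)⟩ and ⟨(b)(c)⟩ reach support 3; ⟨(ab)⟩ occurs in only two sequences, so it is pruned.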

Problem 2. Principal Component Analysis (PCA) (30 points)

Suppose we have $N$ data samples $\{x_i \in \mathbb{R}^d\}_{i=1}^N$. The matrix $U \in \mathbb{R}^{d\times m}$ is the projection matrix obtained by PCA, so $m$ is the reduced dimensionality.

(a) The Mahalanobis distance between two arbitrary inputs $x_i, x_j$ is defined as

$$d(x_i, x_j) = \sqrt{(x_i - x_j)^\top M (x_i - x_j)}, \tag{1}$$

in which the matrix $M \in \mathbb{R}^{d\times d}$ is positive semi-definite (PSD). Show that performing dimensionality reduction via PCA (i.e., projecting the data onto the matrix $U$) and then computing the Euclidean distance in the reduced data space is equivalent to directly computing the Mahalanobis distance in the raw data space. Also derive the PSD matrix $M$ of the Mahalanobis distance induced by PCA. Express $M$ only in terms of $\{x_i\}_i$ and $U$.
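A quick numerical sanity check can help confirm a derivation. The sketch below assumes the PCA projection is $y = U^\top(x - \bar{x})$ with $\bar{x}$ the sample mean, and tests the candidate $M = UU^\top$ against Eq. (1); the random data and all function names are hypothetical, and the check does not replace the derivation itself.

```python
import numpy as np

# Hypothetical random data: columns of X are the samples x_i (d x N).
rng = np.random.default_rng(0)
d, N, m = 6, 40, 3
X = rng.normal(size=(d, N))
x_bar = X.mean(axis=1)

# Conventional PCA: top-m eigenvectors of the sample covariance matrix.
Xc = X - x_bar[:, None]
eigvals, eigvecs = np.linalg.eigh(Xc @ Xc.T / N)  # eigenvalues in ascending order
U = eigvecs[:, ::-1][:, :m]                        # d x m, orthonormal columns


def pca_euclidean(xi, xj):
    """Euclidean distance after projecting both points with y = U^T (x - x_bar)."""
    return np.linalg.norm(U.T @ (xi - x_bar) - U.T @ (xj - x_bar))


def mahalanobis(xi, xj, M):
    """Eq. (1): sqrt((xi - xj)^T M (xi - xj))."""
    diff = xi - xj
    return np.sqrt(diff @ M @ diff)


M = U @ U.T  # candidate matrix being checked (assumption to verify)
for i, j in [(0, 1), (2, 7), (5, 39)]:
    print(pca_euclidean(X[:, i], X[:, j]), mahalanobis(X[:, i], X[:, j], M))
```

Since both points are shifted by the same $\bar{x}$, the mean cancels in the difference of their projections.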

(b) If the original data dimensionality $d$ is very high and much larger than the sample number $N$ ($d \gg N$), $U$ is computationally expensive to obtain in the conventional way. There is an efficient method for high-dimensional PCA: it first computes the eigen-system of another matrix instead of the high-dimensional $d \times d$ covariance matrix,

$$C = J X^\top X J, \tag{2}$$

in which $X = [x_1, x_2, \cdots, x_N] \in \mathbb{R}^{d\times N}$ is the data matrix, and $J = I - \frac{\mathbf{1}\mathbf{1}^\top}{N} \in \mathbb{R}^{N\times N}$ is the centering matrix.

Denote by $v_1, v_2, \cdots, v_m$ the top-$m$ eigenvectors associated with the $m$ largest eigenvalues $\sigma_1, \sigma_2, \cdots, \sigma_m$ of the matrix $C \in \mathbb{R}^{N\times N}$. For convenience, define a matrix $V_m = [v_1, \cdots, v_m] \in \mathbb{R}^{N\times m}$ and a diagonal matrix $\Sigma_m = \mathrm{diag}(\sigma_1, \cdots, \sigma_m) \in \mathbb{R}^{m\times m}$. Show that the PCA projection matrix is

$$U = X J V_m \Sigma_m^{-1/2}. \tag{3}$$
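The two properties suggested in the hint for (b) can be checked numerically. The following is a minimal sketch on hypothetical random data with $d \gg N$: it eigendecomposes only the $N \times N$ matrix $C$ of Eq. (2), builds $U$ by Eq. (3), and then tests orthonormality and the eigenvector property against $XJX^\top$, which is $N$ times the sample covariance and therefore has the same eigenvectors.

```python
import numpy as np

# Hypothetical high-dimensional data: d >> N, samples as columns of X.
rng = np.random.default_rng(1)
d, N, m = 500, 20, 4
X = rng.normal(size=(d, N))

J = np.eye(N) - np.ones((N, N)) / N  # centering matrix J = I - 11^T / N
C = J @ X.T @ X @ J                  # Eq. (2): N x N instead of d x d
sig, V = np.linalg.eigh(C)           # eigenvalues in ascending order
top = np.argsort(sig)[::-1][:m]      # indices of the m largest eigenvalues
Sigma_m, V_m = sig[top], V[:, top]

# Eq. (3): U = X J V_m Sigma_m^{-1/2}. Dividing column k by sqrt(sigma_k)
# is the same as right-multiplying by the diagonal matrix Sigma_m^{-1/2}.
U = X @ J @ V_m / np.sqrt(Sigma_m)

# Property 1 from the hint: U has orthonormal columns.
print(np.allclose(U.T @ U, np.eye(m)))
# Property 2: each column of U is an eigenvector of X J X^T
# (N times the sample covariance), with eigenvalue sigma_k.
S = X @ J @ X.T
print(np.allclose(S @ U, U * Sigma_m))
```

Only $C$ is eigendecomposed here; the $d \times d$ matrix `S` is formed solely to verify the result.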

(c) Derive the time complexity of the method that solves high-dimensional PCA by operating on the smaller matrix $C$ in Eq. (2) and then applying the formula in Eq. (3). Express the time complexity only in terms of $d$, $N$, and $m$.

(Hint: For (b), you can show that such a matrix $U$ has orthonormal column vectors and that each of its columns is an eigenvector of the covariance matrix of the raw data.)

Problem 3. Kernel PCA (KPCA) (20 points)

Suppose we have $N$ data samples $\{x_i \in \mathbb{R}^d\}_{i=1}^N$. A valid kernel function $k(\cdot, \cdot)$ has been defined for arbitrary input pairs. We now want to solve Kernel PCA using the method introduced in Problem 2 (b).

In particular, we perform the eigen-decomposition of the following matrix

$$\tilde{K} = J K J, \tag{4}$$

where $K = \big[k(x_i, x_j)\big]_{1 \le i,j \le N}$ is the kernel matrix, and $J = I - \frac{\mathbf{1}\mathbf{1}^\top}{N} \in \mathbb{R}^{N\times N}$ is still the centering matrix. As a result, the top-$m$ eigenvectors of $\tilde{K}$ and their corresponding $m$ largest eigenvalues are collected into a matrix $V_m \in \mathbb{R}^{N\times m}$ and a diagonal matrix $\Sigma_m \in \mathbb{R}^{m\times m}$, respectively.

Please derive the $m$-dimensional KPCA embedding of any input sample $x \in \mathbb{R}^d$. Note that the embedding is a nonlinear mapping from $\mathbb{R}^d$ to $\mathbb{R}^m$, and it may be expressed only in terms of $x$, $\{x_i\}_i$, $k(\cdot,\cdot)$, $J$, $V_m$, and $\Sigma_m$.
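One way to sanity-check a derived embedding is numerically. The sketch below assumes the embedding takes the form $y(x) = \Sigma_m^{-1/2} V_m^\top J\,(k_x - \tfrac{1}{N} K\mathbf{1})$ with $k_x = [k(x_1,x), \ldots, k(x_N,x)]^\top$, which is what applying Eq. (3) in feature space to the centered feature vector of $x$ would give. The Gaussian (RBF) kernel and its `gamma` value are arbitrary illustrative choices, and all function names are hypothetical.

```python
import numpy as np


def rbf_kernel(a, b, gamma=0.5):
    """Illustrative kernel choice: k(a, b) = exp(-gamma * ||a - b||^2)."""
    return np.exp(-gamma * np.sum((a - b) ** 2))


def kernel_matrix(X, k):
    """K[i, j] = k(x_i, x_j); samples are the columns of X (d x N)."""
    N = X.shape[1]
    return np.array([[k(X[:, i], X[:, j]) for j in range(N)] for i in range(N)])


def fit_kpca(X, k, m):
    """Eigen-decompose K_tilde = J K J (Eq. (4)) and keep the top-m pairs."""
    N = X.shape[1]
    J = np.eye(N) - np.ones((N, N)) / N
    K = kernel_matrix(X, k)
    sig, V = np.linalg.eigh(J @ K @ J)  # eigenvalues in ascending order
    top = np.argsort(sig)[::-1][:m]
    return K, J, V[:, top], sig[top]


def kpca_embed(x, X, k, K, J, V_m, Sigma_m):
    """Assumed form: Sigma_m^{-1/2} V_m^T J (k_x - K 1 / N)."""
    k_x = np.array([k(X[:, i], x) for i in range(X.shape[1])])
    # Subtracting K.mean(axis=1) (= K 1 / N) centers the feature vector of x;
    # J centers the training feature vectors.
    return (V_m.T @ J @ (k_x - K.mean(axis=1))) / np.sqrt(Sigma_m)


if __name__ == "__main__":
    rng = np.random.default_rng(2)
    X = rng.normal(size=(3, 25))  # hypothetical data, d = 3, N = 25
    K, J, V_m, Sigma_m = fit_kpca(X, rbf_kernel, m=2)
    print(kpca_embed(rng.normal(size=3), X, rbf_kernel, K, J, V_m, Sigma_m))
```

Two properties make useful checks: for a training sample $x_i$ this form reduces to row $i$ of $V_m \Sigma_m^{1/2}$, and with the linear kernel $k(a, b) = a^\top b$ it coincides with ordinary PCA projections up to the sign of each component.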
