Revisiting Memory Errors in Large-Scale Production Data Centers:

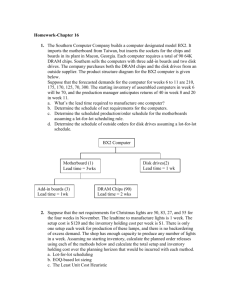
