Document 10414704

advertisement

IEEE TRANSACTIONS ON COMPUTER-AIDED DESIGN OF INTEGRATED CIRCUITS AND SYSTEMS, VOL. 29, NO. 6, JUNE 2010

977

Diagnosis of Integrated Circuits With Multiple

Defects of Arbitrary Characteristics

Xiaochun Yu, Student Member, IEEE, and Ronald DeShawn Blanton, Fellow, IEEE

Abstract—This paper describes a multiple-defect diagnosis

methodology that is flexible in handling various defect behaviors

and arbitrary failing pattern characteristics. Unlike some other

approaches, the search space of the diagnosis method does not

grow exponentially with the number of defects. Results from

extensive simulation experiments and real failing integrated

circuits show that this method can effectively diagnose circuits

that are affected by a large (>20) or small number of defects

of various types. Moreover, this method is capable of accurately

estimating the number of defective sites in the failing circuit.

Index Terms—Candidate isolation, failure diagnosis, integrated

circuit testing, multiple-defect, physical failure analysis.

I. Introduction

D

IAGNOSIS is the process of finding the locations and

nature of defects within a failing circuit by analyzing/comparing the observed circuit response and the intended

functionality of the circuit. It is a critical step for understanding the failure mechanisms that can adversely affect

circuit yield and quality. A circuit affected by a single line

permanently fixed at some logic value can be effectively

diagnosed using stuck-at fault dictionaries [1]. However, due

to increasing device and interconnect density, coupled with

more pronounced variability, some failing chips are affected

by multiple-defects. (We define a multiple-defect as a set

of faulty sites, where each faulty site si is affected by a

different defect. For example, a two-line bridge defect is

not a multiple-defect, but two physically-separated powersupply bridges does constitute one under this definition.) For

example, in our analysis of over 7000 failing chips from LSI

Corporation, we found that 23% of them exhibited behavior

that indicated the existence of multiple-defects. Moreover, it is

likely the case that the sheer number of defective sites can be

significant [2]. However, existing fault models cannot capture

all possible defect behaviors. Therefore, diagnosis of multipledefects is an important, yet challenging task.

There have been many explorations in this area. One approach is to develop new automatic test pattern generation

Manuscript received June 22, 2009; revised October 29, 2009. Date of current version May 21, 2010. This work was supported by the National Science

Foundation, under Award No. CCF-0427382. This paper was recommended

by Associate Editor S. Hellebrand.

The authors are with the Department of Electrical and Computer Engineering, Carnegie Mellon University, Pittsburgh, PA 15213 USA (e-mail:

xyu1@ece.cmu.edu; blanton@ece.cmu.edu).

Color versions of one or more of the figures in this paper are available

online at http://ieeexplore.ieee.org.

Digital Object Identifier 10.1109/TCAD.2010.2048352

approaches for enhancing the accuracy of diagnosis [3], [4].

Generating new patterns for diagnosis may not be economically viable in practice however. Typically, diagnosis uses

the production test patterns for defect detection. Diagnosis

methodologies for multiple-defects assumed to behave as a

collection of stuck-at faults have been described in [5]–[7].

A method for diagnosing defects that are either bounded or

region based were described in [8]. In [9], the author proposed

a diagnosis method for multiple bridge defects using IDDQ

information. A common shortcoming of the aforementioned

approaches is that they are all limited to the types of misbehaviors assumed. Alternatively, other work performs diagnosis

based on the idea of single-location-at-a-time (SLAT) test

patterns [2], [10]. [A SLAT pattern [2] is a test pattern that:

1) fails on the tester for the considered circuit under test; and

2) produces a circuit response that exactly matches the fault

simulation response of some stuck-at fault.] Such methods fail

when no SLAT patterns exist, or when the SLAT patterns are

actually created by multiple, simultaneously-active defects.

We have developed a multiple-defect diagnosis methodology that easily copes with failing patterns of arbitrary

characteristics (SLAT, SO-SLAT [3], non-SLAT, etc.), while

making no assumptions concerning the particular defect behavior. This method can even effectively diagnose circuits that

have a significant number (>20) of defects. The methodology

is composed of a defect site identification and elimination

(DSIE) procedure [11], and a candidate1 selection and ranking

heuristic. The search space of this diagnosis method does

not grow exponentially with the number of defects in the

circuit under diagnosis (CUD), since combinations of defect

sites are not explicitly considered. Another outcome of our

diagnosis is an estimate of the number of defective sites.

Simulation experiments show a strong correlation between the

number of defective sites estimated and the actual number of

defective sites in the CUD. This paper subsumes and extends

our previous work [11], [12] in that: 1) it proposes a forest

structure to organize the diagnosis candidates according to

their effect on the failing circuit, which can be used to effectively guide diagnosis; and 2) our candidate selection process

now encompasses the entire forest, instead of only the root

nodes in the forest, which leads to improved diagnosis results.

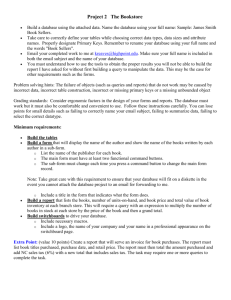

An overview of our diagnosis methodology is given in

Fig. 1. First, defect candidates are identified through a

1 If a signal line s potentially behaves as stuck-at-v for failing pattern t ,

i

k

i

where vi is the expected or correct value driven onto the line by tk when the

CUD is defect free, then si /vi is referred to as a candidate.

c 2010 IEEE

0278-0070/$26.00 © 2010 IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media,

including reprinting/republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution

to servers or lists, or reuse of any copyrighted component of this work in other works.

978

IEEE TRANSACTIONS ON COMPUTER-AIDED DESIGN OF INTEGRATED CIRCUITS AND SYSTEMS, VOL. 29, NO. 6, JUNE 2010

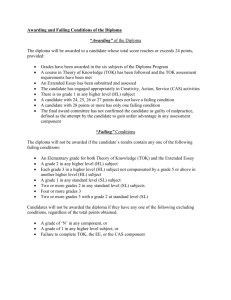

Algorithm 1 DSIE Algorithm

Fig. 1.

Overview of the multiple-defect diagnosis methodology.

defect site identification and elimination procedure (Section

II), which consists of a path-tracing procedure [13] and a site

elimination approach for conservatively eliminating some of

the candidates implicated by path-tracing. Then, the effect of

those candidates are analyzed and organized in a candidate

forest (Section III). Analysis of the candidate forest leads to

the selection of a near minimal group of candidates that jointly

produce the failing responses. The selected candidates are then

ranked and reported as the final output of diagnosis (Section

IV).

II. Defect Site Identification Elimination

In this section, we describe the method for conservatively

identifying potential defect sites using path-tracing [13] followed by a novel approach for conservatively eliminating some

of these sites. These two steps are referred to as DSIE. A highlevel description of DSIE is given in Algorithm 1.

The example CUD of Fig. 2 is used to illustrate DSIE.

An OR-type bridge defect involving signal lines j and k, and

a defect that causes signal h to be stuck-at-1 are assumed

to exist in the CUD. Also assume there are only two failing

test patterns, namely, t1 (abcdefg) = 0010001 [Fig. 2(a)] and

t2 (abcdefg) = 0001010 [Fig. 2(b)]. In Fig. 2, the output values

without (within) boxes are produced by the fault-free (faulty)

CUD for the two failing patterns.

For a given failing test pattern tk , the path-tracing procedure

[13] traces back through the circuit from every failing observable point (scanned memory element or primary output). An

observable point is failing if its observed response is different

from its response when the CUD is defect free. Otherwise,

the observable point is said to be passing. Lines encountered

during the trace are implicated as potential defect sites.2 Pathtracing processes internal lines encountered while tracing back

through the CUD in the following manner. When a gate output

is reached and all gate inputs have noncontrolling values

2 We will use the term “site” to refer to a signal line that is stuck-at value

v ∈ {0, 1} for some test pattern tk .

for each failing test pattern tk do

Path-trace to identify potential defect sites (Dk ) implicated by tk

end for

D = union of all Dk s

while first iteration or at least one site eliminated in last iteration do

for each failing test pattern tk do

Simulate tk

Inject the unknown value X at each potential defect site in D

Propagate all X values to the outputs

Assign fault-free logic values to all passing outputs

Queue = all lines with values changing from X to v ∈ {0, 1}

while Queue = ∅ do

si = line popped from Queue

Forward imply from si

FIi = lines with values X and implied to v ∈ {0, 1}

FAi = FIi − D

Lines in FAi are assigned to implied values

Queue = Queue ∪ FAi

if si ∈

/ D then

Backward imply from si

BIi = lines with values X and implied to v ∈ {0, 1}

Lines in BIi are assigned to implied values

Queue = Queue ∪ BIi

BPi = sites implicated by path-tracing only through BIi

Dk = Dk − BIi − BPi

D = union of all Dk s

end if

end while

end for

end while

applied, path-trace implicates all the gate inputs and continues

tracing from each input. If one or more inputs have controlling

values applied, only those inputs are implicated and the trace

continues only from those controlling inputs. If a fanout branch

is reached, it is implicated and tracing continues from the corresponding stem. In the example of Fig. 2, the set of potential

defect sites resulting from path-tracing the first failing pattern

include {m, i, a, b1 , b, h1 , h, c, n, h2 , j1 , e, f , j, j2 , p, q, j3 },

and the set of potential defect sites resulting from path-tracing

the second pattern are {m, n, q, r, i, h1 , h2 , k1 , k2 , l1 , a, b1 , b,

h, d1 , d, k, g, b3 , l, b2 }. We track the behavior of the potential

defect sites implicated, a modification first used in [14].

Path-tracing [13] is conservative in that it identifies defect

sites that can cause the failing outputs observed. However,

some sites may only impact outputs that never fail. In other

words, some sites may only prevent error propagation from

other sites to the outputs. Such sites cannot be found by

path-tracing. We do not deal with this shortcoming of pathtracing, but instead assume every defect site propagates an

error originating from itself to at least one failing output. This

assumption is reasonable since any defect site that does not

aid in establishing a failing output is very difficult to identify

without explicitly considering all lines within the CUD.

Path-tracing typically implicates many defect-free sites.

Given this reality, our goal is to eliminate defect-free sites

identified by path-tracing without removing any true defect

sites. If this can be accomplished, the eliminated sites do

not have to be further analyzed in the subsequent stages

of diagnosis, which alleviates the complexity of diagnosis

without degrading diagnosis accuracy.

Consider some site si implicated by path-tracing a failing

test pattern tk . We have observed cases where si will cause a

YU AND BLANTON: DIAGNOSIS OF INTEGRATED CIRCUITS WITH MULTIPLE DEFECTS OF ARBITRARY CHARACTERISTICS

979

a defective circuit. It is based on the fact that the logic value

of a defect-free line is a function of its upstream logic values,

while the value of a defective line is not. The following rules

are used to perform conservative implication.

A. Implication Rule

Consider a signal line si that has a value vi ∈ {0, 1} for

a failing pattern tk . If si is not a potential defect site for

any failing pattern (either by path-tracing or through earlier

elimination), both forward and backward implications are

performed from si . Otherwise, only forward implication is

performed from si .

B. Assignment Rule

Fig. 2. Fault-free signal values (no boxes) and observed responses (boxed

values) for an example CUD having two failing patterns: (a) t1 (abcdefg) =

0010001; and (b) t2 (abcdefg) = 0001010.

passing output to actually fail for tk no matter what other sites

implicated by path-tracing actually exist. For this situation, si

can be eliminated from the set of potential defect sites for tk ,

since its existence establishes an inconsistency not observed

in the test response. We use the following method to identify

and eliminate such sites.

For a failing pattern tk , we first determine the signal values

in the fault-free CUD. Then, we implicitly consider all possible

defect combinations by injecting the unknown logic value

X = {0, 1} at each potential defect site deduced from all

failing patterns. The X values are propagated to observable

points to represent the circuit state under all possible defect

combinations. The use of X values here is different from

previous diagnosis methods [8], [15]–[17], where X values are

used to model the unknown behavior of a logically-bounded

defect. We use X values instead to represent the simultaneous

interactions among all potential sites.

Next, fault-free values are assigned to all the passing outputs

whose values have changed during X propagation. A “conservative” implication is applied beginning with these passing

outputs. Conservative implication is a form of implication for

Consider a signal line si that is implied to a value vi ∈ {0, 1}

for a failing pattern tk . If si is implied to be vi through forward

implication, si is actually assigned to value vi only if it is not

a potential defect site for any failing pattern. If, on the other

hand, si is implied to vi through backward implication, then

value vi is assigned to si regardless of whether it is a potential

defect site or not. This rule is justified since si must be vi for

its downstream passing points to have the observed values.

If a site si is assigned to a value vi through conservative

backward implication in tk , si must have value vi in order

for its defect-free downstream logic to have correct values. si

is, therefore, eliminated if it is in the set of potential defect

sites identified by path-tracing pattern tk . Sites eliminated

through this analysis are likely to be near the observable

points. Whenever a site si is removed, other sites that were

implicated by path-tracing only through si are also removed.

This transitive removal of other sites leads to the elimination

of sites that are farther away from the observable points.

Since interactions among all potential defect sites are considered in conservative implication, the elimination of sites

in one pattern may lead to changes in circuit states deduced

from conservative implication in other patterns. Thus, alreadyeliminated sites can lead to the elimination of other sites, as

described in the outermost while loop of Algorithm 1. The

procedure terminates when no more sites are eliminated in a

given iteration in the outermost while loop of Algorithm 1.

All possible defect-site combinations produced by pathtracing and all possible maskings are taken into account

when eliminating sites. Moreover, no assumption is made

about defect behavior in DSIE. Therefore, if every defect site

propagates its error to at least one failing observable point,

then DSIE is conservative, that is, it cannot remove any defect

site that contributes to the failing responses.

Subsequent work reported in [7] applies a similar idea

to limited defect behaviors, namely, multiple stuck-at faults.

Defects whose behavior deviates from stuck-at behavior are,

therefore, likely eliminated incorrectly by their approach. In

addition, work in [7] uses simulation to identify and eliminate

sites, as opposed to conservative implication. Simulation is

less efficient since each site has to be evaluated separately

and the entire circuit is considered. On the other hand, when

using conservative implication, all possible sites that can be

eliminated are evaluated by determining all signal values only

once.

980

IEEE TRANSACTIONS ON COMPUTER-AIDED DESIGN OF INTEGRATED CIRCUITS AND SYSTEMS, VOL. 29, NO. 6, JUNE 2010

pattern, b2 is also removed from the set of potential defect sites

for the second failing pattern.

For the example of Fig. 2, in the first iteration of conservative implication and site elimination, no sites can be eliminated

for the first failing pattern. For the second failing pattern, l and

b2 are removed from the set of potential defect sites for the

second pattern. No more sites can be eliminated in the second

iteration, so the procedure terminates. Therefore, considering

the possible errors on each site recorded during path-tracing,

the set of candidates after DSIE include {m/1, i/1, a/1, b1 /1,

b/1, h1 /1, h/1, c/0, n/1, h2 /1, j1 /1, e/1, f/1, j/1, j2 /1, p/0,

q/1, j3 /1, d1 /0, d/0, k1 /1, k/1, g/1, b3 /1, r/1, k2 /1, l1 /1}.

III. Candidate Forest

The number of candidates after DSIE is applied can still

be significant. Thus, a smaller group of candidates should be

selected, if possible. In this section, we first analyze the effects

of each individual candidate on the CUD using path-based

behavior evaluation (Section III-A). Then, a candidate forest

is constructed based on the results of this analysis to better

guide diagnosis (Section III-B). The candidate forest is used

to perform candidate selection in Section IV.

A. Path-Based Behavior Evaluation

Fig. 3. Signal values for the example of Fig. 2 after fault-free simulation,

unknown value injection, X value propagation, and fault-free value assignment

to the passing outputs, for two failing patterns: (a) t1 (abcdefg) = 0010001;

and (b) t2 (abcdefg) = 0001010.

For the example CUD of Fig. 2, the signal values after

executing fault-free simulation, unknown value injection, Xvalue propagation, and fault-free value assignment to the

passing outputs are shown in Fig. 3. To illustrate conservative

implication and site elimination, we use the second failing

pattern of Fig. 3 as an example. Since l2 is not a potential

defect site for either of the failing patterns, both backward

and forward implication can be performed from l2 according

to the implication rule. Since l is implied to a value 0

through backward implication from l2 , it is assigned to value 0

according to the assignment rule. According to the implication

rule, only forward implication can be performed for l since it

is a potential defect site for the second failing pattern. Thus,

l1 is implied to a value 0 through forward implication from l.

Since l1 is a potential defect site, l1 is not assigned to value 0

according to the assignment rule. Since l is assigned to value

0 through backward implication, it is eliminated from the set

of potential defect sites identified by path-tracing the second

failing pattern. Since signal line b2 is identified as a potential

defect site when path-tracing only from l for the second failing

Given a test pattern tk , if a defect at site si is activated,

then si has an erroneous value in pattern tk . The resulting

error may propagate to some other signal lines in the CUD

along paths that are properly sensitized, which is the effect si

has on the CUD for tk . The side inputs of the gates on the

error propagation path must have noncontrolling values for the

path to be sensitized. In some cases, the error from site si can

propagate to outj when all the side inputs of the gates on the

error propagation path have fault-free values. In other cases,

the error from site si can propagate to an observable point outj

only when some side inputs have erroneous values, which is

possible in the context of reconvergent fanouts or multipledefective sites. In other words, when there are multipledefective sites in the CUD, some observable points may fail

due to the combined effects of several defective sites. In order

to avoid explicitly analyzing the effects of combinations of

candidates on the CUD, it is necessary to capture the effects

of each individual candidate for every failing pattern. The only

effects that can be captured are those that produce errors at

the observable points, which can be represented using the error

propagation environment of a candidate. To properly describe

this situation, we first introduce the following definitions.

Definition 1: Candidate si /vi is said to output-explain an

observable point outj for a failing pattern tk if the fault-free

value on site si is vi and an error from si propagates to outj in

tk when all the side inputs of the on-path gates have fault-free

values.

Definition 2: Candidate si /vi is said to partially outputexplain an observable point outj for a failing pattern tk if the

fault-free value on site si is vi and an error from si propagates

to outj in tk only when some side inputs of the on-path gates

have erroneous values.

YU AND BLANTON: DIAGNOSIS OF INTEGRATED CIRCUITS WITH MULTIPLE DEFECTS OF ARBITRARY CHARACTERISTICS

TABLE I

Per-Test Path Sets for Candidates From Fig. 2

Candidate

ID

f0

f1

f2

f3

f4

f5

f6

f7

f8

f9

f10

f11

f12

f13

f14

f15

f16

f17

f18

f19

Stuck-At Fault

Equivalence Class

m/1

i/1

a/1

b1 /1

b/1

h1 /1

h/1

c/0

n/1

h2 /1

j1 /1

e/1, f/1, j/1

j2 /1, p/0

q/1

j3 /1

d1 /0

d/0

k1 /1

k/1, g/1, b3 /1

r/1, k2 /1, l1 /1

Per-Test Path Set

Failing Pattern 1

Failing Pattern 2

{m}

{m}

{m}

{m}

{a−m}

{a−m}

{b1 −m}

{b1 −m}

{b1 −m}

{b1 −m, q, r}

{m}

{m}

{m, h2 −n}

{m, n}

{m, h2 −n}

∅

{n}

{n}

{h2 −n}

{n}

{j1 −n}

∅

{j1 −n, p, q}

∅

{p}

∅

{q}

{q}

{q}

∅

∅

{m, n}

∅

{m, n}

∅

{q}

∅

{q, r}

∅

{r}

Definition 3: Let pijk be the path along which an error from

site si propagates to an observable point outj for a failing

pattern tk . Let si −Ji be the longest subpath in pijk such that

an error from si can propagate to Ji when all the side inputs

of on-path gates have fault-free values, where Ji is an on-path

gate input or the observable point outj . The remaining subpath

of pijk (i.e., Ji −outj ) is called the under-explained subpath

of pijk .

If candidate si /vi output-explains outj , the under-explained

subpath from site si to outj is simply outj , a path of length 0.

In the case where si /vi partially output-explains outj , the length

of the corresponding under-explained subpaths is at least one.

In the latter case, there can be more than one under-explained

subpath since there could be multiple paths from site si to

outj that can be properly sensitized. It is possible however

that some paths cannot be properly sensitized. Consider the

CUD in Fig. 2, for example. There is one path from site d

to output r, namely d−d2 −l−l1 −r. However, in the second

failing pattern, since signal line b2 has controlling value 0 and

signal line d2 has noncontrolling value 1, it is impossible for

an error at d to flip the value at gate-output l. Therefore, there

is no path that can be properly sensitized to propagate the error

from d to r for the second failing pattern. Identifying all paths

that can be properly sensitized to enable error propagation to

a set of failing observable points can be easily accomplished

using an approach similar to path-tracing.

Given a failing observable point outj that candidate si /vi

(partially) output-explains, the corresponding under-explained

subpaths capture the error propagation environment from site

si to outj , which is the effect of the defect at site si on outj .

In order to capture the error propagation environment of all

candidates, a path-set is recorded for each candidate si /vi . For

each of si /vi ’s (partially) output-explained failing observable

points outj in failing pattern tk , the under-explained subpath

981

Algorithm 2 Path-Set Creation

for each candidate si /vi do

Initialize Pi to be empty

for each failing test tk do

Initialize Pik to be empty

for each failing output outj that si /vi output-explains do

Add outj to set Pik

end for

for each failing output outj that si /vi partially output-explains do

Add Ji −outj to set Pik

end for

Add Pik , which is the per-test path set of si /vi for tk , to set Pi

end for

Pi is the path set for candidate si /vi

end for

Ji −outj is added to the per-test path set Pik for tk . The path

set Pi for si /vi is a set composed of all Pik ’s. Pseudo-code

describing path set creation is given in Algorithm 2. However,

path sets can be more efficiently deduced for all candidates

using a procedure similar to path-tracing.

The CUD in Fig. 2 is used to illustrate path set creation.

For each failing pattern tk , per-test path sets are derived for

candidates surviving DSIE (Table I). For example, for the first

failing pattern, the error from site h can propagate to failing

output m when all the side inputs along the error propagation

path h−h1 −m have fault-free values. On the other hand, the

error from h can propagate to failing output n only when side

input j1 has an erroneous value. There is no error propagation

path from site h to output p or q. Therefore, the per-test path

set of h/1 for the first failing pattern is {m, h2 −n}.

Path-based behavior evaluation is able to capture the combined effects of multiple-defects, as well as effects of a single

defect. Thus, we avoid simulating combinations of candidates,

which prevents the complexity of diagnosis from growing exponentially with the number of defects in the CUD. Moreover,

this path-based behavior evaluation method does not rely on

the existence of any particular failing pattern characteristics

and is not based on any assumptions of defect behavior.

B. Candidate Forest Formation

In order to effectively guide diagnosis, we construct a candidate forest based on the path-sets according to the following

rules.

1) Rule 1: Candidates with exactly the same path-sets are

grouped to form a node.

2) Rule 2: Consider two nodes A and B with candidate

fa ∈ A and fb ∈ B. By comparing their corresponding

path-sets, node B is deemed to be parent of node A in

the forest if: 1) the path-set of fa is a proper subset of

the path-set of fb ; and 2) the path-set of fa is not a

proper subset of any candidate in the child node(s) of

B. This proper subset relationship is formally stated in

Definition 4.

3) Rule 3: There is a directed edge from node A to node

B if A is a parent of B.

Definition 4: The per-test path set Pak of candidate fa for

failing pattern tk is a proper subset of the per-test path set

Pbk of candidate fb for tk if Pak = Pbk and for every path

pak ∈ Pak , there exists a path pbk ∈ Pbk such that pak = pbk

982

IEEE TRANSACTIONS ON COMPUTER-AIDED DESIGN OF INTEGRATED CIRCUITS AND SYSTEMS, VOL. 29, NO. 6, JUNE 2010

or pbk is a subpath of pak . The path set Pa of candidate fa is

a proper subset of the path set Pb of candidate fb if Pa = Pb

and for every per-test path set Pak ∈ Pa , there exists a per-test

path set Pbk ∈ Pb such that Pak = Pbk or Pak is a proper subset

of Pbk .

Fig. 4 shows an example candidate forest that is constructed

based on the path-sets shown in Table I. For example, candidates f15 and f16 have exactly the same per-test path sets for

the two failing patterns of the CUD of Fig. 2. Therefore, f15

and f16 are in the same node according to Rule 1. Candidate

f6 has the same per-test path set as f15 and f16 for the second

failing pattern. However, for the first failing pattern, the pertest path set of f6 is {m, h2 −n}, while the per-test path set

of f15 and f16 is empty. Therefore, the path set of f15 and

f16 is a proper subset of the path set of f6 . Since there is

no candidate whose path set is both a proper subset of f6 ,

and a proper superset of the path set of f15 and f16 , the node

consisting of f15 and f16 is a child of the node consisting

of f6 according to Rule 2. As a result, there is a direct edge

from the node consisting of f6 (S0 ) to the node consisting

of f15 and f16 (S6 ) according to Rule 3. Candidates f4 and

f6 have different path sets, and there is no set subsumption

relationship between the per-test path sets of f4 and f6 for

failing pattern 2. So, f4 and f6 are in different, isolated nodes

with no directed path between the two nodes.

The candidate forest is a structured representation of the

effects of all possible candidates on a failing CUD. According

to the way a candidate forest is constructed, the candidates in

the same node have the same effects on the failing observable

points. For example, in Fig. 4, candidates f15 and f16 are in

the same node S6 . For the second failing pattern of Fig. 2, an

error from either f15 or f16 can propagate to outputs m and

n when all the side inputs have fault-free values. More, it is

impossible for any of them to produce an error at output q and

r. Neither f15 nor f16 have an effect on the CUD for the first

failing pattern. Therefore, the error propagation requirements

for f15 and f16 are the same under the test set. So, they have

the same effect on the failing observable points.

Candidates in different forest nodes, of course, have different effects on the failing observable points. Consider two

nodes A and B with candidate fa ∈ A and candidate fb ∈ B.

If B is a parent of A, then the effects that fa has on the

failing observable points are subsumed by the effects of

fb . Specifically, for every failing test tk and every failing

point outj that fa (partially) output-explains in tk , the set of

erroneous side inputs that can propagate an error from fa to

outj can also propagate the error from fb to outj . However, the

converse is not true. In other words, fb can (partially) outputexplain more failing observable points, or requires a smaller

set of erroneous side inputs to propagate its error to a failing

observable point that is partially output-explained by fa . More

generally, the effects of any candidate in node B subsume the

effects of any candidate in node A on failing points if there

is a directed path from B to A in the candidate forest. For

example, there is a directed path from the node that contains

f8 to the node containing f9 (see Fig. 4). f8 output-explains

output n for both failing patterns, while f9 partially outputexplains n for the first failing pattern and output-explains n for

the second failing pattern. Neither of them (partially) outputexplains any other failing outputs. Therefore, the effects of f8

subsumes the effects of f9 .

If there is no directed path between forest nodes A and B,

the candidates in A and B have unique impact on the failing

observable points, although their overall effects may overlap

for some of the logic cones. Consider nodes S2 and S3 in

Fig. 4 for example. Both of them output-explain output q for

the first failing pattern. However, f13 output-explains q for the

second failing pattern, while f11 does not. On the other hand,

f11 output-explains p and partially output-explains n for the

first failing pattern, but f13 (partially) output-explains neither

of them. So, f11 and f13 have their own unique effects on the

failing observable points, even though the effects of f11 and

f13 on failing point q overlap for the first failing pattern.

IV. Candidate Selection and Ranking

In this section, our candidate selection and ranking that

utilizes the candidate forest is described. We first select a nearminimal group of candidates that can jointly output-explain the

observed failing responses. Candidates that have an equivalent

effect are then identified. Among the selected candidates that

have the equivalent effect on the failing points, we identify

and rank those candidates that produce the least mismatch at

passing points for the failing patterns. The details of each step

are described in the following sections.

A. Candidate Selection

The candidate selection step greedily selects a near-minimal

group of nodes such that the candidates in the selected nodes

jointly output-explain all the observed failing points. Similar

to what is analyzed in [2], deriving the smallest group of candidates may lead to incorrect diagnosis, but this shortcoming is

difficult to overcome since examining all combinations would

greatly increase the complexity of diagnosis.

According to Section III-B, if there is a directed path

from B to A in the candidate forest, then the effects of the

candidates in node B subsume the effects of the candidates in

A. Therefore, the effects of the candidates in a root node of the

candidate forest subsume the effects of any candidates in its

decedent nodes. Therefore, the candidate selection procedure

selects one root node A at a time such that candidates in

A output-explain the most failing points that are not outputexplained by candidates already selected. The pseudo-code

for candidate selection is given in Algorithm 3. The number

of nodes selected is an estimation of the number of actual

defective sites in the CUD since candidates in different nodes

have unique effects on the CUD.

For the example CUD of Fig. 2, there are five root nodes

in the candidate forest formed from the candidates surviving

DSIE (Fig. 4). Candidates in the root nodes include f4 , f6 ,

f8 , f11 , and f13 . Table II shows the nodes and their outputexplained points. S0 is selected in the first iteration since

the candidates in S0 output-explain more failing points than

the candidates in nodes S1 , S2 , S3 , and S4 . In the second

iteration, the combined effects of the candidates in S0 and

S3 output-explain three of the remaining failing points that

YU AND BLANTON: DIAGNOSIS OF INTEGRATED CIRCUITS WITH MULTIPLE DEFECTS OF ARBITRARY CHARACTERISTICS

Fig. 4.

983

Candidate forest corresponding to candidates shown in Table I.

Algorithm 3 Candidate Selection

Algorithm 4 Equivalent Candidate Identification

Sr = {roots in the candidate forest}

Ssel = {the selected nodes}

FO = {failing observable points not output-explained by Ssel }

Initialize Ssel = ∅ and FO = all failing observable points

while FO = ∅ do

Select node A ∈ Sr that leads to maximal reduction in FO

Ssel = Ssel ∪ {A}

FO = FO− {points jointly output-explained by candidates in Ssel }

end while

TABLE II

Ssel = {selected nodes from candidate selection}

for each node A ∈ Ssel do

Initialize Queue = {A’s child nodes} ∪ {root nodes} − Ssel

while Queue = ∅ do

N=pop front(Queue)

Se = Ssel ∪ N − A

if candidates in Se jointly explain all failing points then

Queue = Queue∪ {N’s child nodes}

A=A∪N

end if

end while

end for

Candidates in the Roots of the Candidate Forest of Fig. 4 and

Their Output-Explained Points for the CUD of Fig. 2

Node

S0

S1

S2

S3

S4

= {f6 }

= {f4 }

= {f13 }

= {f11 }

= {f8 }

Observed Failing Outputs

Failing Pattern 1

Failing Pattern 2

m

n

p

q

m

n

q

r

✓

✓

✓

✓

✓

✓

✓

✓

✓

✓

✓

are not output-explained by candidates in S0 alone, including

output n for the first failing pattern. However, the combined

effect of the candidates in S0 and S1 , S0 and S2 , S0 and S4

output-explains fewer than three of the failing points that are

not output-explained by the candidate in S0 alone. Therefore,

S3 is selected in the second iteration. The combined effect of

the candidates in S0 , S1 , and S3 output-explains all the failing

points. However, the combined effect of the candidates in S0 ,

S2 , and S3 does not output-explain all the failing points, nor

does the combined effect of the candidates in S0 , S3 , and S4 .

So, S1 is selected in the third iteration. Candidate selection

terminates after three iterations since the combined effects

of sites f4 , f6 , and f11 output-explain all failing observable

points. The total number of nodes selected is three, so the

estimated number of defective sites in the CUD is three, which

happens to be correct.

B. Equivalent Candidate Identification and Ranking

Candidates in the unselected nodes have different effects

on the CUD as compared to those from the selected nodes.

However, given some selected nodes, candidates in different

nodes A and B may be able to produce the same failing

response. In this situation, candidates in node A and B are

said to have equivalent effects on the failing points given the

selected nodes. After the candidate selection step, we identify

nonselected candidates that have equivalent effects on the

failing points as the selected. Specifically, let Ssel be the set of

nodes selected in the candidate selection step. For each node

A ∈ Ssel , if some other node N has the property that candidates

in the node set Ssel ∪ {N} − {A} jointly output-explain all

failing points, then candidates in node N have the equivalent

effect on the failing observable points as the candidates in

node A given the selected group of nodes. N is, therefore,

selected in this equivalent-candidate identification step. Since

node A is selected due to the effects of its candidate elements

on the failing observable points, the nodes whose candidate elements have equivalent effects as A must be among:

1) the root nodes; 2) the tree rooted at the node A; or

3) the trees rooted at the nodes whose candidate elements have

the equivalent effects as A. The pseudo-code for equivalent

candidate identification is given in Algorithm 4. In a real

application of diagnosis, it is necessary to set an upper limit for

reporting equivalent candidates since physical failure analysis,

a process for determining the real defect location, is very time

consuming. It is, therefore, applicable only when there are few

candidates reported for a defect site. As a result, the estimated

number of defective sites, which is derived in the candidate

selection step, is a good guidance for setting this upper limit.

Among the candidates that have an equivalent effect on the

failing points, we prefer those that cause the least number of

passing points to fail for the failing patterns. This step is based

on the observation that an actual defect site will probably

have weaker evidence for supporting passing-point failure.

The candidates identified constitute our diagnosis output and

they are ranked according to their number of output-explained

failing observable points, since sites that output-explain more

failing points are more preferred.

For the example CUD of Fig. 2, the candidate forest for the

sites surviving DSIE is shown in Fig. 4. Nodes S0 , S1 , and S3

984

IEEE TRANSACTIONS ON COMPUTER-AIDED DESIGN OF INTEGRATED CIRCUITS AND SYSTEMS, VOL. 29, NO. 6, JUNE 2010

are selected in the candidate selection step. Apart from node

S1 , only node S8 has the property that the combined effects of

its candidates and the candidates in S0 and S3 output-explain

all the failing points. Therefore, f18 has the equivalent effect

as f4 given the selected nodes. f4 fails one passing observable

point, while f18 causes no the passing-point failure. So, f18 is

identified as a final diagnosis callout. No candidate in any of

the unselected nodes has the equivalent effect as a candidate

in either S0 or S3 . So, f6 and f11 are also identified as final

diagnosis candidates. Therefore, the output of diagnosis for the

example CUD of Fig. 2 is f6 , f11 , and f18 , which corresponds

to faults h/1, j/1, and k/1. h/1 is ranked first since it outputexplains more failing points than j/1 and k/1. Since there are

three defective sites h, j, and k in the example CUD of Fig. 2,

this diagnosis output is correct.

V. Experiment Results

In our experiments, we use the larger ISCAS’89 benchmarks

[18]. A test set generated by Atalanta [19] that achieves near

100% stuck-at fault coverage is used for diagnosis. Permanent

stuck-at, two-line (AND and OR-type) bridge, and transition

faults are used in this simulation experiment. For each circuit,

each fault type, and multiplicity, we consider 60 random fault

injections3 for a total of 5700 different faulty circuits. For

each of the 5700 faulty circuits, our diagnosis consisting of

DSIE, candidate forest formation, and candidate selection and

ranking was performed.

On average, applying DSIE leads to 30% fewer candidates

than applying only path-trace. For all 5700 faulty circuits,

there was not one case where an actual defect site was removed

by conservative implication when all defective sites propagated

their errors to at least one of the failing observable points. In

Section III, we showed the construction of a candidate forest

and that those candidates whose effects cannot be subsumed

by others were in forest roots. In this experiment, the average

percentage of actual defect sites being in one of the forest

root nodes is 91%, while the candidates in the roots only

constitute 13% of the candidates surviving DSIE. This shows

that candidate forest is effective in guiding diagnosis.

Figs. 5–7 report results for multiple stuck-at, two-line

bridge, and transition faults, respectively. The diagnosis quality

is evaluated using two metrics, namely, diagnosability and

resolution. Diagnosability and resolution are two important

measures of effectiveness of a diagnosis algorithm. Diagnosability is defined as SD /SI , where SD is the number of actual

defect sites identified by diagnosis and SI is the number of

defect sites injected. Resolution is defined as SI /ST , where ST

(≥SD ) is the total number of sites reported by diagnosis. For

any diagnosis approach, the ideal outcome is for resolution

and diagnosability to simultaneously both be one, that is,

SD /SI = SI /ST = 1.0.

3 Multiple faults are injected by randomly selecting a site and randomly

deciding its behavior (i.e., stuck-at-0 or stuck-at-1 for stuck-at faults, ANDtype or OR-type for bridge faults, and slow-to-rise or slow-to-fall for transition

faults) The fault is injected into the circuit unless a fault has already been

injected at that site. The injection repeats until the number of faults injected

reaches the required multiplicity.

Fig. 5. (a) Diagnosability and (b) resolution for a varying number of

randomly-injected stuck-at faults (averaged over 60 injections in five benchmarks).

When diagnosing CUDs affected by multiple stuck-at or

transition faults of size five or less, the average diagnosability

and resolution is 88% and 59%, respectively. Moreover, CUDs

affected by >20 multiple stuck-at or transition faults are

successfully diagnosed, with an average diagnosability of

79% and resolution of 78%. The average diagnosability and

resolution when diagnosing CUDs affected by a single twoline bridge fault are 88% and 47%, respectively. Moreover,

when diagnosing CUDs affected by >20 multiple two-line

bridge faults, our diagnosis methodology achieves an average

diagnosability and resolution of 63% and 93%, respectively.

The maximum standard deviation for diagnosabiltiy in Figs. 5–

7 is 0.03 and the maximum standard deviation for resolution

in Figs. 5–7 is 0.07.

It is shown that our diagnosis approach is able to effectively

diagnose circuits affected by a small and large number of

randomly-injected defects for all three types with good diagnosability and resolution. To the best of our knowledge,

the number of random defects injected in the experiments

of existing papers for other approaches are no more than

five. Moreover, this approach does especially well for a

large number of bridge faults. The reason that this proposed

approach performs better on bridge faults is that no assumption

is made about the defect behavior.

The sites selected by our diagnosis methodology are ranked

using the approach described in Section IV. Percentage first hit

YU AND BLANTON: DIAGNOSIS OF INTEGRATED CIRCUITS WITH MULTIPLE DEFECTS OF ARBITRARY CHARACTERISTICS

985

Fig. 6. (a) Diagnosability and (b) resolution for a varying number of

randomly-injected bridge faults (averaged over 60 injections).

Fig. 7. (a) Diagnosability and (b) resolution for a varying number of

randomly-injected transition faults (averaged over 60 injections).

is defined as the average percentage that an injected defect site

is top ranked (i.e., ranked number one). Percentage first hit in

this experiment is 85%. This means among the 5700 different

faulty circuits in this experiment, there are 4845 CUDs whose

top ranked candidate site is an actual defect site.

Fig. 8 shows the relationship between the number of defect

sites estimated by our diagnosis methodology and the actual

number of defect sites injected in the simulation experiment.

Defective sites are injected at multiplicities of x ∈ {2, 4, 5, 7,

10, 14, 15, 20, 21}. The dotted line represents the ideal case

where the estimated number of defective sites always agrees

with the actual number injected. The solid line represents

the estimated number of defective sites versus the actual

number of injected defective sites in this experiment. Data

points on the solid line are the means of the estimations. The

plot shows the means of the estimations are within 20% of

the ideal cases. The vertical bar across a mean represents

the standard deviation of the estimations above and below the

mean. It shows that the estimated numbers of defective sites

for a certain number of injected defective sites cluster closely

around the mean. For small numbers of injected defects, there

is very good agreement between injected and estimated. For

example, when diagnosing circuits affected by two defective

sites, in 83% of the cases, our method estimates there are

two defective sites. The amount of mis-prediction increases

however for the larger numbers. We believe there are two

reasons for this phenomenon. First, when many defects exist

within the CUD, it is likely that some are never activated

by the applied test set. Second, due to the greedy nature of

Algorithm 3, it is likely that some actual defect sites are

not selected. For both reasons, our prediction would underestimate the number of actual defective sites when the CUD is

affected by a large number of defective sites. This explanation

is supported by the downward error bias shown in Fig. 8.

Several CUDs that had no SLAT patterns are encountered

during our simulation experiments. Table III shows the performance of our methodology in diagnosing these CUDs. For

many of these circuits, the resolution and diagnosability are

outstanding. This is a very good result given that many techniques would simply abort for these CUDs [2], [14]. Moreover,

it is likely that techniques that rely on SLAT patterns would

also have considerable difficulties for the remaining CUDs

since many of the SLAT patterns produced are actually due to

interacting faulty lines.

Since no assumption is made about the defect behavior under diagnosis, this method is expected to perform consistently

for defects with arbitrary behavior. To demonstrate this point,

we randomly-inject three stuck-at, three two-line bridge, and

three transition faults into the larger ISCAS’89 benchmarks

[18], and apply diagnosis. This experiment is repeated 60 times

for each of the five benchmark circuits. Table IV shows the

resulting (average) diagnosability, resolution, and percentage

986

IEEE TRANSACTIONS ON COMPUTER-AIDED DESIGN OF INTEGRATED CIRCUITS AND SYSTEMS, VOL. 29, NO. 6, JUNE 2010

TABLE IV

Diagnosability, Resolution, and Percentage First-Hit Rate for

Simultaneous, Randomly-Injected Stuck-At, Two-Line Bridge,

and Transition Faults of Multiplicity Three for Each Type

(Twelve Defective Sites) Averaged Over 60 Injections

Circuit

s13207

s15850

s35932

s38417

s38584

Diagnosability

(SD /SI )

0.77

0.75

0.75

0.90

0.82

Resolution

(SI /ST )

0.83

0.77

0.64

0.83

0.96

% First

Hit

91%

77%

92%

97%

93%

Fig. 8. Relationship between the estimated and actual number of defect sites

injected.

TABLE III

Diagnosis Performance on CUDs With No SLAT Patterns

Circuit

s13207

s15850

s35932

s38417

s38584

No. of

CUDs With

no SLAT

Patterns

63

42

71

10

11

Diagnosability

(SD /SI )

Resolution

(SI /ST )

%

First

Hit

0.72

0.72

0.83

0.90

0.90

0.89

0.87

0.61

0.87

0.95

81%

71%

89%

90%

100%

first hit. The results indicate that our methodology can effectively diagnose circuits that are simultaneously affected by

multiple failing mechanisms.

Finally, we perform diagnosis on 131 failing arithmetic

logic unit (ALU) chips provided by LSI. These chips are

manufactured in 0.11 µm technology. Since there are no

physical failure analysis (PFA) results for these chips, the

defects actually affecting the chips are unknown. These ALUs

have some non-SLAT patterns, indicating that each has at

least two simultaneously defective lines. We report a total

of 456 sites for these failing ALUs, while the traditional

diagnosis technique of [20] reports 1372 sites in total. Thus,

the diagnosis resolution of our methodology is 3x better

than the traditional approach. Although the diagnosability of

the two methods cannot be directly compared since PFA

results are not available for these failures, the accuracy of our

methodology has already been demonstrated in the simulation

experiments.

Fig. 9 shows the diagnosis time of s35932 for a varying

number of injected faults. It indicates that the proposed

methodology scales well with the number of “defects” in the

CUD. The average CPU time for diagnosing a failing pattern is

6.5 seconds for failing ALU chips affected by multiple-defects.

We expect to improve the execution time dramatically however

by exploiting the inherent parallelism that exists within the

various tasks that constitute DSIE, candidate forest creation,

and candidate selection.

Fig. 9. Diagnosis time of s35932 for a varying number of randomly-injected

candidates (averaged over 20 injections).

Fig. 10. Memory usage for diagnosing s35932 for a varying number of

randomly-injected candidates (averaged over 20 injections).

We use s35932 as an example to show the memory consumption of our method. Fig. 10 shows the memory usage for

diagnosing s35932 for a varying number of injected faults of

different types. The memory used for creating the candidate

forest constitutes less than 10% of the total memory used.

Memory can likely be reduced by exploring improved data

structures.

VI. Conclusion

In this paper, we described a multiple-defect diagnosis

methodology that is flexible in handling a large number of

defects, various defect behaviors, and arbitrary failing pattern

YU AND BLANTON: DIAGNOSIS OF INTEGRATED CIRCUITS WITH MULTIPLE DEFECTS OF ARBITRARY CHARACTERISTICS

characteristics. As shown by the simulation experiments, the

methodology is also able to accurately estimate the number of

defective sites in the CUD. Moreover, it has the characteristic

that it scales well with the number of defects in the CUD.

This methodology can be used to diagnose CUDs that cannot

be diagnosed by existing diagnosis methods.

References

[1] M. Abramovici, M. A. Breuer, and A. D. Friedman, “Logic-level

diagnosis,” in Digital Systems Testing and Testable Design. Piscataway,

NJ: IEEE, 1990, pp. 541–568.

[2] T. Bartenstein, D. Heaberlin, L. Huisman, and D. Sliwinski, “Diagnosing

combinational logic designs using the single location at-a-time (SLAT)

paradigm,” in Proc. Int. Test Conf., 2001, pp. 287–296.

[3] Y.-C. Lin, F. Lu, and K.-T. Cheng, “Multiple-fault diagnosis based on

adaptive diagnostic test pattern generation,” IEEE Trans. Comput.-Aided

Design Integr. Circuits Syst., vol. 26, no. 5, pp. 932–942, May 2007.

[4] I. Pomeranz, S. Venkataraman, S. Reddy, and B. Seshadri, “Z-sets and zdetections: Circuit characteristics that simplify fault diagnosis,” in Proc.

Design Autom. Test Eur., 2004, pp. 68–75.

[5] M. Abramovici and M. A. Breuer, “Multiple fault diagnosis in combinational circuits based on an effect-cause analysis,” IEEE Trans. Comput.,

vol. 29, no. 6, pp. 451–460, Jun. 1980.

[6] H. Takahashi, K. O. Boateng, K. K. Saluja, and Y. Takamatsu, “On diagnosing multiple stuck-at faults using multiple and single fault simulation

in combinational circuits,” IEEE Trans. Comput.-Aided Design (CAD)

Integr. Circuits Syst., vol. 21, no. 3, pp. 362–368, Mar. 2002.

[7] M.-J. Tasi, M. C.-T. Chao, J.-Y. Jou, and M.-C. Wu, “Multiple-fault

diagnosis using faulty-region identification,” in Proc. Very-Large-Scale

Integr. (VLSI) Test Symp., 2009, pp. 123–128.

[8] V. Boppana, R. Mukherjee, J. Jain, M. Fujita, and P. Bollineni, “Multiple

error diagnosis based on Xlists,” in Proc. Design Autom. Conf. (DAC),

Jun. 1999, pp. 660–665.

[9] C. Thibeault, “On the adaptation of viterbi algorithm for diagnosis of

multiple bridging faults,” IEEE Trans. Comput., vol. 49, no. 6, pp. 575–

587, Jun. 2000.

[10] D. B. Lavo, I. Hartanto, and T. Larrabee, “Multiplets, model, and the

search for meaning: Improving per-test fault diagnosis,” in Proc. Int.

Test Conf., 2002, pp. 250–259.

[11] X. Yu and R. D. Blanton, “Multiple-defect diagnosis using no assumptions on failing pattern characteristics,” in Proc. Design Autom. Conf.

(DAC), 2008, pp. 361–366.

[12] X. Yu and R. D. Blanton, “An effective and flexible multiple-defect

diagnosis methodology using error propagation analysis,” in Proc. Int.

Test Conf., 2008, pp. 1–9.

[13] S. Venkataraman and W. Fuchs, “A deductive technique for diagnosis

of bridging faults,” in Proc. Int. Conf. Comput.-Aided Design (CAD),

1997, pp. 562–567.

[14] R. Desineni, O. Poku, and R. D. Blanton, “A logic diagnosis methodology for improved localization and extraction of accurate defect behavior,” in Proc. Int. Test Conf., 2006, pp. 1–10.

[15] V. Boppana and M. Fujita, “Modeling the unknown! Toward modelindependent fault and error diagnosis,” in Proc. Int. Test Conf., 1998,

p. 1094.

[16] A. L. D’Souza and M. S. Hsiao, “Error diagnosis of sequential circuits

using region-based model,” in Proc. Int. Conf. Very-Large-Scale Integr.

(VLSI) Design, Jan. 2001, p. 103.

[17] N. Sridhar and M. S. Hsiao, “On efficient error diagnosis of digital

circuits,” in Proc. Int. Test Conf., Oct.–Nov. 2001, pp. 678–687.

[18] F. Brglez, D. Bryan, and K. Dozminski, “Combinational profiles of

sequential benchmark circuits,” in Proc. Int. Symp. Circuits Syst., 1989,

pp. 1929–1934.

[19] H. Lee and D. Ha, “On the generation of test patterns for combinational

circuits,” Dept. Electr. Eng., Virginia Polytechnic Inst. State Univ.,

Middleburg, Tech. Rep. 12-93, 1993.

[20] J. A. Waicukauski and E. Lindbloom, “Failure diagnosis of structured

VLSI,” IEEE Design Test Comput. (DAC), vol. 6, no. 4, pp. 49–60, Aug.

1989.

987

Xiaochun Yu (S’08) received the B.S. degree in

microelectronics from Fudan University, Shanghai,

China, in 2005, and the M.S. degree in electrical

and computer engineering from the Department of

Electrical and Computer Engineering, Carnegie Mellon University, Pittsburgh, PA, in 2008, where she is

currently working toward the Ph.D. degree.

Her current research interests include the area of

very large scale integration testing, diagnosis, and

defect characterization.

Dr. Yu is a recipient of an Intel Ph.D. Fellowship,

and a Bertucci Graduate Fellowship.

Ronald DeShawn Blanton (S’93–M’95–SM’03–

F’09) received the B.S. degree in engineering from

Calvin College, Grand Rapids, MI, in 1987, the M.S.

degree in electrical engineering from the University

of Arizona, Tucson, in 1989, and the Ph.D. degree

in computer science and engineering from the University of Michigan, Ann Arbor, in 1995.

He is currently a Professor with the Department

of Electrical and Computer Engineering, Carnegie

Mellon University, Pittsburgh, PA, where he serves

as the Director of the Center for Silicon System

Implementation, an organization consisting of 18 faculty members and over

80 graduate students focusing on the design and manufacture of siliconbased systems. His current research interests include the test and diagnosis

of integrated, heterogeneous systems and design-, manufacture-, and testinformation extraction from tester measurement data.