Solving Inverse Problems in Partial Differential

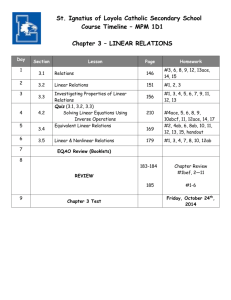

advertisement

Solving Inverse Problems in Partial Differential Equations using higher degree Fréchet derivatives

William Rundell

Department of Mathematics

Texas A&M University

Joint work with Frank Hettlich (Karlsruhe)

Introduction

What were some of the hot mathematical topics at the end of the seventeenth century?

Halley (1694) was impressed by a result published a few years earlier by Thomas de Lagney:

$$a + \frac{ab}{3a^3 + b} \;<\; \sqrt[3]{a^3 + b} \;<\; \frac{a}{2} + \sqrt{\frac{a^2}{4} + \frac{b}{3a}} \qquad (1)$$

for $a^3 \gg b > 0$, calling these the rational formula and the irrational formula. Out of these ideas evolved a numerical approximation scheme now known as Halley’s method.

For the standard example from school calculus, calculate $\sqrt[3]{28}$ (so $a = 3$, $b = 1$), a single step of Newton’s method applied to $g(x) := x^3 - (a^3 + b)$, started at $x = a$, gives the approximation $\sqrt[3]{28} \approx 3.037037$.

In comparison, (1) gives the estimates

$$3.036585 < \sqrt[3]{28} < 3.036591$$
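The numbers quoted above can be checked directly. The following is a minimal Python sketch, assuming $a = 3$, $b = 1$; the function names are illustrative and not part of the talk.

```python
def lagny_bounds(a, b):
    """Rational (lower) and irrational (upper) estimates of (a^3 + b)^(1/3)."""
    rational = a + a * b / (3 * a**3 + b)
    irrational = a / 2 + (a * a / 4 + b / (3 * a)) ** 0.5
    return rational, irrational


def newton_estimate(a, b):
    """One Newton step on g(x) = x^3 - (a^3 + b), started at x = a."""
    g = a**3 - (a**3 + b)   # value of g at x = a, i.e. -b
    dg = 3 * a**2           # derivative of g at x = a
    return a - g / dg


lower, upper = lagny_bounds(3.0, 1.0)
newton = newton_estimate(3.0, 1.0)
exact = 28.0 ** (1.0 / 3.0)
print(f"{lower:.6f} < {exact:.6f} < {upper:.6f}; Newton: {newton:.6f}")
```

Both bounds of (1) bracket the cube root far more tightly than the single Newton step does.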

Brook Taylor recognised the derivatives implicit in Halley’s method in 1714 (this should not be surprising since it was not until 1740 that Simpson showed the connection between derivatives and Newton’s method) so, let’s follow that lead . . . .

End of Introduction

Halley’s method

In the linear equation $f(x + h) = f(x) + f'(x)h$ set $x_n = x$, $h = x_{n+1} - x_n$, with target $f(x + h) = 0$, to give

$$x_{n+1} - x_n = h = -\frac{f(x_n)}{f'(x_n)} \qquad \text{(Newton’s Method)}$$

If we want greater accuracy the next step should be obvious

$$f(x + h) = f(x) + f'(x)h + \tfrac{1}{2} f''(x)h^2 .$$

With the same target $f(x + h) = 0$ this is a quadratic equation in $h$ and the quadratic formula gives

$$h = \frac{-f'(x) \pm \sqrt{f'(x)^2 - 2f(x)f''(x)}}{f''(x)}$$

where the sign is chosen to give the root of smaller magnitude (evaluate $g(x)$ at $x = a$, taking the $+$ sign, to obtain the irrational formula).

Halley’s method

An alternative method might be

$$f(x + h) = f(x) + f'(x)h + \tfrac{1}{2} f''(x)\, h\,\tilde{h}$$

where $\tilde{h}$ is predicted using Newton’s method, $\tilde{h} = -\dfrac{f(x_n)}{f'(x_n)}$.

Inserting this prediction into the above gives the correction

$$f(x_n + h) = f(x_n) + f'(x_n)h - \frac{1}{2}\,\frac{f''(x_n)f(x_n)}{f'(x_n)}\,h = f(x_n) + \frac{1}{2}\,\frac{2f'(x_n)^2 - f''(x_n)f(x_n)}{f'(x_n)}\,h$$

With target $f(x_n + h) = 0$ this is again a linear equation in $h$ and can be easily solved

$$x_{n+1} - x_n = h = \frac{-2f'(x_n)f(x_n)}{2f'(x_n)^2 - f(x_n)f''(x_n)} \qquad \text{(Halley’s Method)}$$

(Apply this to $g(x)$, evaluate at $x = a$ to obtain the rational formula.)

Halley’s Method can be viewed as a predictor-corrector scheme that uses Newton’s method as the predictor. Both predictor and corrector steps require solving only linear equations.
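The predictor-corrector reading can be written out in a few lines. This is a minimal Python sketch for a scalar function; the function names and the choice of $g(x) = x^3 - 28$ as test problem are illustrative assumptions.

```python
def newton_step(f, df, x):
    """Predictor: solve f + f' h = 0 for h."""
    return -f(x) / df(x)


def halley_step(f, df, d2f, x):
    """Corrector: solve f + (f' + 0.5 f'' h_pred) h = 0, a linear equation in h."""
    fx, dfx, d2fx = f(x), df(x), d2f(x)
    h_pred = -fx / dfx
    return -fx / (dfx + 0.5 * d2fx * h_pred)


def iterate(step, x0, n_steps):
    """Apply x <- x + step(x) a fixed number of times."""
    x = x0
    for _ in range(n_steps):
        x += step(x)
    return x


a, b = 3.0, 1.0


def g(x):
    return x**3 - (a**3 + b)


def dg(x):
    return 3 * x**2


def d2g(x):
    return 6 * x


x_newton = a + newton_step(g, dg, a)        # one Newton step from x = a
x_halley = a + halley_step(g, dg, d2g, a)   # one Halley step from x = a
x_limit = iterate(lambda x: halley_step(g, dg, d2g, x), a, 4)
print(x_newton, x_halley, x_limit)
```

Dividing by `dfx + 0.5 * d2fx * h_pred` is algebraically the same as the closed form $-2f'f/(2f'^2 - ff'')$, so a single step from $x = a$ reproduces the rational formula.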

End of Section

Nonlinear Mappings

What about solving a nonlinear equation in function space?

The paradigm is: let $X$, $Y$ be Banach spaces and $x \in U \subseteq X$, then for $g \in Y$ we wish to solve

$$F(x) = g$$

There are many possible approaches; we can attempt to solve this “pointwise” in $X$, or as a minimisation problem

$$\min_{x \in U} r(x), \qquad r(x) := \|F(x) - g\|_Y .$$

We are interested in iterative solution methods

$$x_{n+1} = x_n + A_n (F(x_n) - g), \qquad A_n = \begin{cases} -(F')^{-1}(x_n) & \text{(Newton)} \\ -\mu\,(F')^{*}(x_n) & \text{(Landweber)} \end{cases}$$

What about using higher order derivatives in $A$?

$$A = A(F', F'', \ldots)$$
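To make the two choices of $A_n$ concrete, here is a minimal Python sketch in which $X = Y = \mathbb{R}^2$ stands in for the Banach spaces, so that $(F')^{*}$ is simply the transposed Jacobian. The map `F`, the step size `mu = 0.5` and the iteration counts are illustrative assumptions, not taken from the talk.

```python
def F(x):
    """A mildly nonlinear map R^2 -> R^2 (hypothetical test problem)."""
    return [x[0] + 0.1 * x[1] ** 2, x[1] + 0.1 * x[0] ** 2]


def jacobian(x):
    """Derivative F'(x) as a 2x2 matrix."""
    return [[1.0, 0.2 * x[1]], [0.2 * x[0], 1.0]]


def residual(x, g):
    Fx = F(x)
    return [Fx[0] - g[0], Fx[1] - g[1]]


def newton_update(x, g):
    """x + A (F(x) - g) with A = -(F')^{-1}(x); 2x2 solve by Cramer's rule."""
    J, r = jacobian(x), residual(x, g)
    det = J[0][0] * J[1][1] - J[0][1] * J[1][0]
    d0 = (r[0] * J[1][1] - J[0][1] * r[1]) / det
    d1 = (J[0][0] * r[1] - J[1][0] * r[0]) / det
    return [x[0] - d0, x[1] - d1]


def landweber_update(x, g, mu):
    """x + A (F(x) - g) with A = -mu (F')^*(x); the adjoint is the transpose here."""
    J, r = jacobian(x), residual(x, g)
    return [x[0] - mu * (J[0][0] * r[0] + J[1][0] * r[1]),
            x[1] - mu * (J[0][1] * r[0] + J[1][1] * r[1])]


def iterate(update, x0, n_steps):
    x = list(x0)
    for _ in range(n_steps):
        x = update(x)
    return x


g = F([1.0, 1.0])   # data generated from the known solution x = (1, 1)
x_newton = iterate(lambda x: newton_update(x, g), [0.0, 0.0], 8)
x_landweber = iterate(lambda x: landweber_update(x, g, 0.5), [0.0, 0.0], 200)
print(x_newton, x_landweber)
```

Newton needs a linear solve per step but converges in a handful of iterations; Landweber only applies the adjoint and needs many more steps for comparable accuracy.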

Nonlinear Mappings

$$F(x) = g, \qquad x_{n+1} = x_n + A_n (F(x_n) - g)$$

Traditional wisdom would say the following:

Computationally, it is not worthwhile to invoke methods that utilise second derivatives of F since . . . .

1. Near convergence (residual r(x) near zero) the quadratic term is exceedingly small and no benefit is obtained from including higher derivatives.

2. Calculation of the Hessians of F is very computationally intensive. In fact, even brute force computation of a full first derivative might be infeasible.

3. Have to deal with quadratic equations in function space.

Here is the response:

Nonlinear Mappings

1. Near convergence (residual r(x) near zero) the quadratic term is exceedingly small and no benefit is obtained from including higher derivatives.

• For ill-posed problems r(x) is never going to approach zero.

2. Calculation of the Hessians of F is very computationally intensive. In fact, even brute force computation of a full first derivative might be infeasible.

• For certain PDEs the second is not a valid criticism. Once F has been obtained, derivatives can often be computed with a relatively small additional cost.

3. Have to deal with quadratic equations in function space.

• Our derivation of Halley’s method gave a hint of how to deal with this item.

Let us look at each of these responses in turn . . . .

End of Section

Ill-Posed Problems

We wish to solve the (first kind) integral equation

$$\int_0^1 K(x, t) f(t)\, dt = g(x), \qquad Kf = g$$

for the function f given the data g.

Two common approaches use the Nyström or the Galerkin method:

$$g_i = \sum_{j=1}^{N} A_{ij} f_j, \qquad A_{ij} = \begin{cases} K(x_i, t_j)\, w_j, & f_j = f(x_j) \quad \text{(Nyström)} \\[4pt] \displaystyle\int_0^1 K(x_i, t)\, \phi_j(t)\, dt, & f_j = \langle f, \phi_j \rangle \quad \text{(Galerkin)} \end{cases}$$

Example:

$$Kf = \int_0^1 x e^{-xt} f(t)\, dt = g(x), \qquad x \in [0, 1]$$

[Figure: The Data. Plot of g(x) for 0 ≤ x ≤ 1.]

Here g(x) is an approximation to $1 - e^{-x}$. The solution should be f = 1.

[Figure: The reconstruction. Plot of f(x) for 0 ≤ x ≤ 1, vertical axis from −100 to 100.]

Clearly, something has gone horribly wrong.

Ill-Posed Problems

Let’s take a more “pde-example”,

$$K(x, t) = \begin{cases} t(1 - x) & \text{if } t < x \\ x(1 - t) & \text{if } t > x \end{cases}$$

Green’s function for $Ly = -y''$

$$g(x) = \frac{\sin(\pi x)}{\pi^2}, \qquad (f = \sin \pi x)$$

Using the Nyström method, trapezoid rule:

[Figures: one plot over 0 ≤ x ≤ 1 for each N, vertical axis from 0 to 1. The values reported on each panel are listed below.]

N = 4: $\|R_4\|_2 = 0.042$, $\|f - f_{comp}\|_2 = 0.018$

N = 8: $\|R_8\|_2 = 0.028$, $\|f - f_{comp}\|_2 = 0.003$

N = 16: $\|R_{16}\|_2 = 0.019$, $\|f - f_{comp}\|_2 = 0.004$

N = 32: $\|R_{32}\|_2 = 0.014$, $\|f - f_{comp}\|_2 = 0.014$

N = 48: $\|R_{48}\|_2 = 0.011$, $\|f - f_{comp}\|_2 = 0.026$

N = 64: $\|R_{64}\|_2 = 0.01$, $\|f - f_{comp}\|_2 = 0.041$
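The loss of accuracy as N grows can be imitated in a small experiment. The Python sketch below is not a reproduction of the figures above: it assumes interior trapezoid nodes only (the kernel vanishes at $t = 0$ and $t = 1$), a dense Gaussian elimination, and an artificial alternating data perturbation of size `delta`. All names are hypothetical.

```python
import math


def green_kernel(x, t):
    """Green's function of Ly = -y'' with y(0) = y(1) = 0."""
    return t * (1.0 - x) if t < x else x * (1.0 - t)


def nystrom_matrix(n):
    """A_ij = K(x_i, t_j) w_j on n interior nodes with trapezoid weights w_j = h."""
    h = 1.0 / (n + 1)
    nodes = [(i + 1) * h for i in range(n)]
    A = [[green_kernel(x, t) * h for t in nodes] for x in nodes]
    return nodes, A


def solve(A, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for k in range(n):
        p = max(range(k, n), key=lambda r: abs(M[r][k]))
        M[k], M[p] = M[p], M[k]
        for r in range(k + 1, n):
            m = M[r][k] / M[k][k]
            for c in range(k, n + 1):
                M[r][c] -= m * M[k][c]
    x = [0.0] * n
    for k in range(n - 1, -1, -1):
        s = sum(M[k][c] * x[c] for c in range(k + 1, n))
        x[k] = (M[k][n] - s) / M[k][k]
    return x


def reconstruction_error(n, delta):
    """Max error in f when g = sin(pi x)/pi^2 is perturbed by +-delta."""
    nodes, A = nystrom_matrix(n)
    g = [math.sin(math.pi * x) / math.pi**2 + delta * (-1) ** i
         for i, x in enumerate(nodes)]
    f = solve(A, g)
    return max(abs(fi - math.sin(math.pi * x)) for fi, x in zip(f, nodes))


for n in (4, 16, 64):
    print(n, reconstruction_error(n, 0.0), reconstruction_error(n, 1e-3))
```

With exact data the error decreases as N grows, while the same small perturbation of g is amplified more and more strongly, which is the behaviour the unbounded inverse predicts.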

Ill-Posed Problems

The problem is that the operator K is compact, so even if it is one-to-one its inverse is not bounded.

Here are the leading singular values of K when $K(x, t) = xe^{-xt}$:

$0.188$, $0.090$, $1.62 \times 10^{-3}$, $1.45 \times 10^{-6}$, $7.82 \times 10^{-9}$, $2.77 \times 10^{-11}$, $9.41 \times 10^{-13}$, $2.63 \times 10^{-13}$, $1.67 \times 10^{-13}$, $1.32 \times 10^{-13}$, $8.34 \times 10^{-14}$

There are some common resolutions:

• Truncate the Fourier series for f: $f_N(x) = \sum_{k=0}^{N} f_k \cos k\pi x$.

• Replace $Kf = g$ by $\tilde{K}f = g$, $\tilde{K} = \epsilon I + KK^{*}$ (Tichonov)

• Stop iterating when the residual increases (stopping condition).
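As an illustration of the second resolution, the following Python sketch applies Tichonov regularisation in its usual normal-equation form $(\alpha I + A^{T}A)f = A^{T}g$ to a Nyström discretisation of the Green’s function kernel $K(x,t) = \min(x,t)\,(1 - \max(x,t))$. The noise model (an alternating perturbation of size `delta`), the interior-node grid and all function names are assumptions made for this example; only N = 64 and α = 0.001 echo values used elsewhere in the talk.

```python
import math


def green_kernel(x, t):
    """Green's function of Ly = -y'' with y(0) = y(1) = 0."""
    return t * (1.0 - x) if t < x else x * (1.0 - t)


def solve(A, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for k in range(n):
        p = max(range(k, n), key=lambda r: abs(M[r][k]))
        M[k], M[p] = M[p], M[k]
        for r in range(k + 1, n):
            m = M[r][k] / M[k][k]
            for c in range(k, n + 1):
                M[r][c] -= m * M[k][c]
    x = [0.0] * n
    for k in range(n - 1, -1, -1):
        s = sum(M[k][c] * x[c] for c in range(k + 1, n))
        x[k] = (M[k][n] - s) / M[k][k]
    return x


def tikhonov(A, g, alpha):
    """Solve the regularised normal equations (alpha I + A^T A) f = A^T g."""
    n = len(g)
    cols = list(zip(*A))  # columns of A
    normal = [[sum(p * q for p, q in zip(cols[i], cols[j]))
               + (alpha if i == j else 0.0) for j in range(n)]
              for i in range(n)]
    rhs = [sum(p * q for p, q in zip(cols[i], g)) for i in range(n)]
    return solve(normal, rhs)


n, alpha, delta = 64, 1e-3, 1e-3
h = 1.0 / (n + 1)
nodes = [(i + 1) * h for i in range(n)]
A = [[green_kernel(x, t) * h for t in nodes] for x in nodes]
f_true = [math.sin(math.pi * x) for x in nodes]
g_noisy = [fi / math.pi**2 + delta * (-1) ** i for i, fi in enumerate(f_true)]

f_naive = solve(A, g_noisy)            # unregularised solve of A f = g
f_tik = tikhonov(A, g_noisy, alpha)    # regularised solve
err_naive = max(abs(a - b) for a, b in zip(f_naive, f_true))
err_tik = max(abs(a - b) for a, b in zip(f_tik, f_true))
print(err_naive, err_tik)
```

The regularised solution is slightly damped, since α biases even the smooth component, but the amplified noise that dominates the unregularised solve is suppressed.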

Ill-Posed Problems

[Figure: three singular value diagrams. The original problem $Kf = g$ (with $\|K\|_2$ marked) leads either to Tichonov, $(\alpha I + K^{*}K)f = K^{*}g$ (with $\alpha$ marked), or to SV cut-off, $\bar{K}f = g$ (with $\epsilon$ marked).]

Ill-Posed Problems

Need for regularisation.

[Figure: N = 64, Tichonov, α = 0.001. Plot over 0 ≤ x ≤ 1, vertical axis from 0 to 1.]

[Figure: N = 64, SVD cut-off, ε = 0.001. Plot over 0 ≤ x ≤ 1, vertical axis from 0 to 1.]

[Figure: Singular values on a logarithmic vertical axis from 1 down to $10^{-16}$, plotted against index 0 to 20, for K = Green’s function and for $K = e^{-xt}$.]

Ill-Posed Problems

What have we learned?

• It is essential to regularise an ill-posed problem.

• The type and degree of regularisation depends on the error and the problem.

• A common strategy combines two regularisation methods; one such as Tichonov together with a “stopping condition”.

• Experience shows it is better to terminate the iteration process before the residual $\|r\|$ reaches its minimum value.

• Despite an extensive theory on the topic, choosing the correct regularisation parameters can be an art in itself.

• The residual will never go to zero.

End of Ill-Posed Problems

Computing Derivatives

Suppose we are interested in recovering the coefficient q in

$$-\Delta u + qu = f(x) \ \text{ in } \Omega, \qquad u = 0 \ \text{ on } \partial\Omega$$

where we have the additional measured data $\dfrac{\partial u}{\partial \nu} = g$ on $\partial\Omega$ so that F is the map $F : q \to \left.\dfrac{\partial u}{\partial \nu}\right|_{\partial\Omega}$.

Any numerical scheme designed to solve this equation will come down to solving a matrix equation of the form

$$Ax = b$$

where the matrix A will depend on the domain Ω and the coefficient q; the vector b will depend on the right hand side.

• The majority of the effort is in setting up and “inverting” A

The derivative of the map F in the direction $h_1$, $\dfrac{\partial F}{\partial q}.h_1$, is given by the normal derivative on $\partial\Omega$ of $u'$, where

$$-\Delta u' + qu' = -u\,h_1 \ \text{ in } \Omega, \qquad u' = 0 \ \text{ on } \partial\Omega .$$

To solve this we have the equation $Ax = c$ where c depends on $h_1$ and the previously computed u.

For the second derivative $\dfrac{\partial^2 F}{\partial q^2}.(h_1, h_2)$ we obtain

$$-\Delta u'' + qu'' = -u'(h_2)\,h_1 - u'(h_1)\,h_2 \ \text{ in } \Omega, \qquad u'' = 0 \ \text{ on } \partial\Omega$$

leading to $Ax = d$ where d depends on $(h_1, h_2)$ and the computed $u$, $u'$.

• Most of the effort in computing both $\dfrac{\partial F}{\partial q}.h$ and $\dfrac{\partial^2 F}{\partial q^2}.(h_1, h_2)$ has already been done!
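The reuse of A can be sketched on a one-dimensional model problem. The following is a minimal illustration under assumptions that are not from the talk: Ω = (0, 1), a second-order finite difference grid, f = 1, the sample coefficient q and directions h1, h2, and a one-sided difference for the boundary derivative at x = 1. All function names are hypothetical.

```python
import math

def factor_tridiagonal(lower, diag, upper):
    """LU-factor a tridiagonal matrix without pivoting (Thomas algorithm).
    lower[i] = A[i][i-1] (lower[0] unused), upper[i] = A[i][i+1] (last unused)."""
    n = len(diag)
    mult = [0.0] * n
    piv = list(diag)
    for i in range(1, n):
        mult[i] = lower[i] / piv[i - 1]
        piv[i] -= mult[i] * upper[i - 1]
    return mult, piv, list(upper)

def solve_factored(factors, rhs):
    """Solve A x = rhs using a factorization from factor_tridiagonal."""
    mult, piv, upper = factors
    n = len(piv)
    y = list(rhs)
    for i in range(1, n):                      # forward substitution
        y[i] -= mult[i] * y[i - 1]
    x = [0.0] * n
    x[n - 1] = y[n - 1] / piv[n - 1]
    for i in range(n - 2, -1, -1):             # back substitution
        x[i] = (y[i] - upper[i] * x[i + 1]) / piv[i]
    return x

def assemble(q, dx):
    """Factor the matrix of -u'' + q u with zero Dirichlet data (assumed model)."""
    n = len(q)
    off = -1.0 / dx**2
    return factor_tridiagonal([off] * n, [2.0 / dx**2 + qi for qi in q], [off] * n)

def flux_at_one(u, dx):
    """One-sided approximation of the derivative at x = 1, using u(1) = 0."""
    return -u[-1] / dx

n = 50
dx = 1.0 / (n + 1)
xs = [(i + 1) * dx for i in range(n)]
q = [1.0 + x for x in xs]                      # assumed sample coefficient
f = [1.0] * n                                  # assumed right-hand side
h1 = [math.sin(math.pi * x) for x in xs]       # assumed directions
h2 = [x * (1.0 - x) for x in xs]

A = assemble(q, dx)                            # set up and "invert" A once
u = solve_factored(A, f)                       # A x = b
du_h1 = solve_factored(A, [-ui * a for ui, a in zip(u, h1)])   # A x = c
du_h2 = solve_factored(A, [-ui * b for ui, b in zip(u, h2)])
d2u = solve_factored(A, [-(v2 * a + v1 * b)                    # A x = d
                         for v1, v2, a, b in zip(du_h1, du_h2, h1, h2)])

F = flux_at_one(u, dx)
dF_h1 = flux_at_one(du_h1, dx)
d2F = flux_at_one(d2u, dx)
print(F, dF_h1, d2F)
```

Only the right-hand sides change between the three solves; the factorization of A is computed a single time.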

Computing Derivatives

Schemes with frozen derivatives

Suppose that the previous benign situation was not possible. Perhaps we can compute the derivative of F about a known solution and hold this fixed during each iteration.

Using the previous notation and freezing about the case q = 0, this would compute $\dfrac{\partial F}{\partial q}(0).h$ as

$$-\Delta u' = -u\,h \ \text{ in } \Omega, \qquad u' = 0 \ \text{ on } \partial\Omega.$$

If, for example, Ω is a ball in $\mathbb{R}^n$ then we can use standard representations to compute $u'$ explicitly in terms of h.

• The “folk theorem” that solutions of the metaharmonic equation behave very much like harmonic functions gives credence to the belief that the frozen derivative will retain many of the relevant features of the full derivative.

The second derivative $F''$ can be handled similarly.

End of Computing Derivatives

Halley’s Method

Consider the nonlinear equation

$$F(x) = g. \qquad (1)$$

With starting guess $x_0$ let $\tilde h$ be computed by a Newton step,

$$F'[x_n]\,\tilde h = g - F(x_n). \qquad (2)$$

Then the next iterate $x_{n+1} = x_n + h$ is defined from the 2nd degree Taylor remainder by the solution of the linear equation

$$F'[x_n]\,h + \tfrac12 F''[x_n](\tilde h, h) = g - F(x_n), \qquad (3)$$

or

$$\tilde h = \big(F'[x_n]\big)^{-1}\big(g - F(x_n)\big) \qquad \text{(Predictor)}$$

and

$$h = \big(F'[x_n] + \tfrac12 F''[x_n](\tilde h, \cdot)\big)^{-1}\big(g - F(x_n)\big), \qquad x_{n+1} = x_n + h \qquad \text{(Corrector)}$$
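As a small check of the predictor and corrector steps, the sketch below applies them to the scalar example from the introduction, the cube root of 28 with starting guess 3. It assumes F(x) = x³ and g = 28 (so a = 3, b = 1); the function names are illustrative only.

```python
def newton_step(F, dF, x, g):
    """One Newton step: solve F'[x] h = g - F(x) and return x + h."""
    return x + (g - F(x)) / dF(x)

def halley_step(F, dF, d2F, x, g):
    """One predictor-corrector step in the scalar case."""
    residual = g - F(x)
    h_pred = residual / dF(x)                             # predictor, equation (2)
    h = residual / (dF(x) + 0.5 * d2F(x) * h_pred)        # corrector, equation (3)
    return x + h

F = lambda x: x**3
dF = lambda x: 3.0 * x**2
d2F = lambda x: 6.0 * x

x_newton = newton_step(F, dF, 3.0, 28.0)
x_halley = halley_step(F, dF, d2F, 3.0, 28.0)
exact = 28.0 ** (1.0 / 3.0)
print(x_newton, x_halley, exact)
```

In the scalar case the corrector reduces to the classical Halley formula; here the predictor gives 3 + 1/27 and the corrector gives 3 + 3/82.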

Halley’s Method

Theorem. Let $\hat x \in U \subseteq X$ denote a solution of (1). Assume $F'[\hat x]$ admits a bounded inverse and $F'$ and $F''$ are uniformly bounded in $U$. Then there exists $\delta > 0$ such that the iteration (2), (3) with starting guess $x_0 \in B(\hat x, \delta) = \{x \in X : \|\hat x - x\| < \delta\}$ converges quadratically to $\hat x$.

Additionally, if the second derivative is Lipschitz continuous, i.e.

$$\|F''[x](h, \tilde h) - F''[y](h, \tilde h)\| \le L\,\|x - y\|\,\|h\|\,\|\tilde h\|$$

for all $x, y \in U$ with $h, \tilde h \in X$ and a constant $L > 0$, then

$$\|x_{n+1} - \hat x\| \le c\,\|x_n - \hat x\|^3$$

holds for n = 0, 1, 2, . . . with a constant c > 0.
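The cubic bound can be observed numerically on a scalar model problem. This is only a sketch under assumptions not taken from the talk: F(x) = x³, g = 28, starting guess 3, and 120-digit decimal arithmetic so that three iterations stay above rounding level.

```python
from decimal import Decimal, getcontext

getcontext().prec = 120                      # assumed working precision
target = Decimal(28)
root = target ** (Decimal(1) / Decimal(3))   # reference solution x-hat

def halley_step(x):
    """Predictor-corrector step for F(x) = x^3, g = 28."""
    residual = target - x**3
    d1 = 3 * x * x                           # F'[x]
    d2 = 6 * x                               # F''[x]
    h_pred = residual / d1                   # predictor
    return x + residual / (d1 + d2 * h_pred / 2)   # corrector

x = Decimal(3)
errors = [abs(x - root)]
for _ in range(3):
    x = halley_step(x)
    errors.append(abs(x - root))

# Ratios |x_{n+1} - root| / |x_n - root|^3 should stay bounded (the constant c).
ratios = [float(errors[k + 1] / errors[k] ** 3) for k in range(3)]
print(ratios)
```

For this example the ratios settle near a fixed constant, which is the behaviour the estimate predicts.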

To prove convergence under noise we need conditions that are often very difficult to check in practice, for example

$$\|F(y) - F(x) - F'[x](y - x)\| \le C\,\|y - x\|\,\|F(y) - F(x)\|.$$

End of Halley’s Method

Inverse Sturm Liouville Problem

The inverse Sturm-Liouville problem

Reconstruct the potential q(x) from the equation

$$-y_n'' + q(x)\,y_n = \lambda_n y_n, \qquad y_n(0) = y_n(1) = 0,$$

given the eigenvalue sequence $\{\lambda_n\}$.

The result of Borg is that the sequence $\{\lambda_n\}$ is sufficient to recover a symmetric function q, that is, q(x) = q(1 − x).

(A second spectrum $\{\mu_n\}$ arising from the boundary conditions $y_n(0) = y_n'(1) = 0$ will determine a general q.)

For simplicity, we will assume that q is symmetric, q(x) = q(1 − x).

Inverse Sturm Liouville Problem

The Gel’fand-Levitan equation gives

$$y(x) = \frac{1}{\sqrt{\lambda}}\left(\sin\sqrt{\lambda}\,x + \int_0^x u(x,t)\,\sin\sqrt{\lambda}\,t\;dt\right)$$

where u(x, t; q) satisfies

$$u_{tt} - u_{xx} + q(x)\,u = 0 \quad \text{for } 0 < t \le x < 1$$

with

$$u(x, 0) = 0, \qquad u(x, x) = \frac12\int_0^x q(s)\,ds.$$

The function y(x) satisfies the differential equation, the condition y(0) = 0 (and the normalisation $y'(0) = 1$).

We use the “additional” condition y(1) = 0 to determine q.
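The role of the condition y(1) = 0 can be illustrated on the forward problem. The sketch below is not the Gel’fand-Levitan construction: it simply integrates the differential equation with y(0) = 0, y'(0) = 1 by a classical Runge-Kutta scheme and bisects on λ until y(1) = 0. The step count, the brackets and the test potentials are assumptions made for the illustration.

```python
import math

def y_at_one(q, lam, n=1000):
    """Integrate -y'' + q(x) y = lam * y with y(0) = 0, y'(0) = 1 (RK4); return y(1)."""
    dx = 1.0 / n
    x, y, v = 0.0, 0.0, 1.0

    def acc(xx, yy):
        return (q(xx) - lam) * yy            # y'' = (q - lam) y

    for _ in range(n):
        k1y, k1v = v, acc(x, y)
        k2y, k2v = v + 0.5 * dx * k1v, acc(x + 0.5 * dx, y + 0.5 * dx * k1y)
        k3y, k3v = v + 0.5 * dx * k2v, acc(x + 0.5 * dx, y + 0.5 * dx * k2y)
        k4y, k4v = v + dx * k3v, acc(x + dx, y + dx * k3y)
        y += dx * (k1y + 2 * k2y + 2 * k3y + k4y) / 6.0
        v += dx * (k1v + 2 * k2v + 2 * k3v + k4v) / 6.0
        x += dx
    return y

def dirichlet_eigenvalue(q, lo, hi, tol=1e-10):
    """Bisect on lam in [lo, hi] for a root of lam -> y(1; lam)."""
    f_lo, f_hi = y_at_one(q, lo), y_at_one(q, hi)
    if f_lo * f_hi > 0:
        raise ValueError("bracket does not contain a sign change of y(1; lam)")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = y_at_one(q, mid)
        if f_lo * f_mid <= 0:
            hi = mid
        else:
            lo, f_lo = mid, f_mid
    return 0.5 * (lo + hi)

lam_zero = dirichlet_eigenvalue(lambda x: 0.0, 5.0, 15.0)    # q = 0
lam_const = dirichlet_eigenvalue(lambda x: 3.0, 8.0, 18.0)   # q = 3
print(lam_zero, lam_const)
```

For q = 0 the first eigenvalue is π², and a constant potential shifts every eigenvalue by that constant, which gives two easy checks of the routine.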

Inverse Sturm Liouville Problem

The inverse problem can be reduced to:

• Use the eigenvalues and x = 1 in the Gel’fand-Levitan equation

$$y(x) = \frac{\sin\sqrt{\lambda}\,x}{\sqrt{\lambda}} + \int_0^x u(x,t)\,\frac{\sin\sqrt{\lambda}\,t}{\sqrt{\lambda}}\;dt$$

to obtain

$$\int_0^1 g(t)\,\sin\sqrt{\lambda_n}\,t\;dt = -\sin\sqrt{\lambda_n}, \qquad n = 1, 2, \ldots$$

The asymptotics of the $\lambda_n$ guarantee this is uniquely solvable.

• Recover q from u(1, t; q) = g(t) where u(x, t; q) satisfies

$$u_{tt} - u_{xx} + q(x)\,u = 0 \quad \text{for } 0 < t \le x < 1$$

with

$$u(x, 0) = 0, \qquad u(x, x) = \frac12\int_0^x q(s)\,ds.$$

Inverse Sturm Liouville Problem

Choose the map $F : L^2[0,1] \to L^2[0,1]$ defined by

$$F[q](t) := u_t(1, t; q) - g'(t).$$

[Figure: the characteristic triangle $|t| \le x \le 1$ in the (x, t)-plane, in which $u_{tt} - u_{xx} + qu = 0$. On the side t = x, $u(x, x) = \frac12\int_0^x q(s)\,ds$; on the side t = −x, $u(x, -x) = -\frac12\int_0^x q(s)\,ds$; on the side x = 1, $u(1, t) = g(t)$ $\{u_x(1, t) = h(t)\}$.]

Note: u(x, t; 0) = 0.

Inverse Sturm Liouville Problem

$F'[q].h$ is the solution of the Goursat problem

$$u'_{tt} - u'_{xx} + q(x)\,u' = -h\,u$$

for $0 < |t| \le x < 1$, with

$$u'(x, \pm x) = \pm\frac12\int_0^x h(s)\,ds,$$

evaluated at x = 1.

[Figure: the characteristic triangle for the Goursat problem $u'_{tt} - u'_{xx} + qu' = -u\,h$, with $u'(x, x) = \frac12\int_0^x h(s)\,ds$ on t = x, $u'(x, -x) = -\frac12\int_0^x h(s)\,ds$ on t = −x, and $F'[q]h = u'(1, t)$ on the side x = 1.]

Now u(x, t; 0) = 0 and so $u'(x, t; 0; h)$ must satisfy

$$u'_{tt} - u'_{xx} = 0, \qquad 0 < |t| \le x < 1.$$

Thus

$$u'(1, t; 0; h) = \frac12\int_{(1-t)/2}^{(1+t)/2} h(s)\,ds$$

and it follows that

$$u'_t(1, t; 0; h) = \frac12\left(h\!\left(\frac{1+t}{2}\right) + h\!\left(\frac{1-t}{2}\right)\right)$$

and the symmetry assumption on q gives

$$u'_t(1, t; 0; h) = h\!\left(\frac{1+t}{2}\right).$$

Inverse Sturm Liouville Problem

The “frozen” Newton scheme now becomes

$$q_{n+1}(s) - q_n(s) = \tilde g'(2s - 1) \quad \text{for } s \in [0, 1],$$

where $\tilde g(t) = \frac12\big(g(2t - 1) - u(1, 2t - 1; q_n)\big)$.

As an initial approximation we have $\tilde g(t) = \frac12\,g(2t - 1)$.

We can compute the second derivative in a similar manner.

Implementing the Halley scheme with derivatives evaluated at q = 0 gives the predictor step as a Newton update,

$$h_1(t) = \tilde g'(t).$$

Our corrector formula is

$$q_{n+1}(t) - q_n(t) = h(t)$$

where h(t) is the solution of the Volterra equation

$$\tilde g'(t) = h(t) + \frac14\int_0^t \Big(\tilde g(1 - t - s) + \tilde g(t - s) - \tilde g(1 + s - t) - \tilde g(t + s)\Big)\,h(s)\,ds.$$

This step is almost trivial to solve.
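A Volterra equation of the second kind, φ(t) = h(t) + ∫₀ᵗ K(t, s) h(s) ds, can be solved by marching forward in t, because the value h(t) only couples to earlier values. The sketch below uses the trapezoidal rule with a generic kernel; in the corrector above φ would be g̃' and K the bracketed combination of g̃ values times 1/4. The test kernel K = 1, the right-hand side φ = 1 and the function name are assumptions chosen because the exact solution e^(−t) is known.

```python
import math

def solve_volterra_second_kind(phi, kernel, t_end, n):
    """Solve h(t) + int_0^t K(t, s) h(s) ds = phi(t) on [0, t_end]
    by trapezoidal-rule marching with n equal steps."""
    dt = t_end / n
    ts = [i * dt for i in range(n + 1)]
    h = [phi(ts[0])]                          # the integral vanishes at t = 0
    for i in range(1, n + 1):
        t = ts[i]
        acc = 0.5 * kernel(t, ts[0]) * h[0]   # trapezoid weight 1/2 at s = 0
        for j in range(1, i):
            acc += kernel(t, ts[j]) * h[j]    # interior weights 1
        # The unknown h(t_i) enters with weight dt/2; solve for it directly.
        h.append((phi(t) - dt * acc) / (1.0 + 0.5 * dt * kernel(t, t)))
    return ts, h

ts, h = solve_volterra_second_kind(lambda t: 1.0, lambda t, s: 1.0, 1.0, 200)
max_err = max(abs(hi - math.exp(-ti)) for ti, hi in zip(ts, h))
print(max_err)
```

Each step costs one division and a sum over earlier values, so no linear system has to be assembled or factored.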

Inverse Sturm Liouville Problem

Reconstruction of $50\sin(3\pi x)\,e^{-5x}$, $x \in [0, 1/2]$, from the first 10 Dirichlet eigenvalues

[Figure: plot of the reconstruction; vertical axis ticks −10, 0, 10, 20, 30; horizontal axis ending at 1.]

Using the predictor only (single iteration step):

$$\left(1 - \frac{\|q_1 - \tilde q\|_2}{\|\tilde q\|_2}\right) = 0.83$$

Inverse Sturm Liouville Problem

Reconstruction of $50\sin(3\pi x)\,e^{-5x}$, $x \in [0, 1/2]$, from the first 10 Dirichlet eigenvalues

[Figure: plot of the reconstruction; vertical axis ticks −10, 0, 10, 20, 30; horizontal axis ending at 1.]

Using the corrector (single iteration step):

$$\left(1 - \frac{\|q_1 - \tilde q\|_2}{\|\tilde q\|_2}\right) = 0.97$$

End of Inverse Sturm Liouville Example

Inverse Obstacle Scattering

An Inverse Scattering Problem

A time-harmonic acoustic or electromagnetic plane wave $u^i = e^{i\kappa\, x\cdot d}$ or point source $u^i = \frac{i}{4}H_0(k|x - y|)$ is fired at a cylindrical obstacle D of unknown shape and location.

The wave $u^s$ scattered from this object is measured at “infinity”: the far field pattern $u_\infty$.

[Figure: the incident wave $u^i = e^{i\kappa\, x\cdot d}$ or $u^i = \frac{i}{4}H_0(k|x - y|)$ striking the obstacle D, with u = 0 on its boundary and $\Delta u + \kappa^2 u = 0$ outside; the scattered wave $u^s$ travels out to the far field $u_\infty$.]

The total wave $u = u^i + u^s$ is modeled by an exterior boundary value problem for the Helmholtz equation:

$$\Delta u + \kappa^2 u = 0 \quad \text{in } \mathbb{R}^2 \setminus \bar D$$

with positive wave number κ and Dirichlet boundary condition

$$u = 0 \quad \text{on } \partial D \qquad \text{(“sound soft object”)}.$$

Inverse Obstacle Scattering

The scattered wave $u^s$ is required to satisfy the Sommerfeld radiation condition uniformly in all directions $\hat x = x/|x|$:

$$\frac{\partial u^s}{\partial r} - i\kappa\,u^s = o\!\left(\frac{1}{\sqrt r}\right), \qquad r = |x| \to \infty.$$

Inverse Obstacle Scattering

An Inverse Scattering Problem

A time-harmonic acoustic or

electromagnetic plane wave

ui = eiκ x.d or point source

i