Regression Addendum Robert DeSerio

advertisement

Regression Addendum

Robert DeSerio

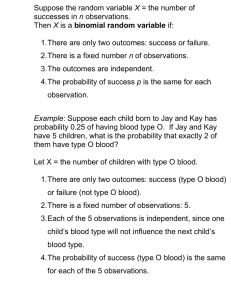

Linear algebra is used to demonstrate two important assertions in the main text: (1) that the

expectation value of the chi-square statistic χ2 is equal to the degrees of freedom—the number

of data points less the number of fit parameters and (2) the “∆χ2 = 1” rule—which states

that if any fit parameter is offset one standard deviation from its best-fit value, the minimum

χ2 (with that parameter fixed there) will increase by one.

Summary of linear regression formulas

The input

h idata is a set of N independent yi , i = 1...N and their N × N diagonal covariance

matrix σy2 . The yifit , i = 1...N are the best fit values based on the M best-fit parameters ak ,

k = 1...M (where M < N ) according to the linear fitting function:

y fit = [Jay ] a

(1)

where the Jacobian

∂yifit

(2)

∂ak

is independent of all fitting parameters. The means µi of the distributions for the yi are defined

by

µ = hyi

(3)

[Jay ]ik =

and are assumed to be determined according to that same fitting function using the true

parameters αk in place of the best-fit ak

µ = [Jay ] α

(4)

The least-squares solution for the best-fit parameters in terms of the yi is given by

h

i†

a = Jya y

h

where Jya

i†

(5)

is M × N weighted Moore-Penrose pseudo-inverse of [Jay ] given by

h

Jya

i†

h

= [X]−1 [Jay ]T σy2

1

i−1

(6)

2

and

h

[X] = [Jay ]T σy2

i−1

[Jay ]

(7)

is an M × M matrix and [X]−1 is the parameter covariance matrix

h

h

Jya

i†

i

σa2 = [X]−1

(8)

is called the pseudo-inverse of [Jay ] having the following inverse-like property.

h

i†

h

Jya [Jay ] = [X]−1 [Jay ]T σy2

i−1

[Jay ]

= [X]−1 [X]

= [I]

(9)

where [I] is the M × M identity matrix.

Taking the expectation value of the right side of Eq. 5 gives

h

i†

Jya y

=

h

i†

=

h

i†

=

h

i†

Jya hyi

Jya µ

Jya [Jay ] α

= [I] α

= α

(10)

where Eq. 4 was used in the third line above and Eq. 9 was used in the fourth line. This

demonstrates that

hai = α

(11)

and thus that the ak will be unbiased estimates of the αk .

The expectation value of χ2

The χ2 is based on the N data values yi , their variances σi2 , and the best fit values yifit .

χ2 =

N

X

i=1

yi − yifit

σi2

2

(12)

Before evaluating the expectation value of this χ2 , sometimes called the “best-fit” χ2 , let’s

evaluate the expectation value of the “true” χ2 defined with the true means µi in place of the

yifit .

N

X

(yi − µi )2

2

χ =

(13)

σi2

i=1

3

This evaluation will start from the linear algebra form of this equation

χ2 = y T − µT

h

σy2

i−1

(y − µ)

(14)

and will demonstrate techniques that will be useful when evaluating the expectation value of

the best-fit χ2 . The derivation proceeds from Eq. 14 as follows.

D

χ

2

E

=

T

T

y −µ

h

i−1

σy2

(y − µ)

(15)

Expanding the product, factoring out constants, and using Eq. 3 and its transpose gives

D

χ

2

E

=

y

=

=

T

i−1

σy2

h

h

y T σy2

h

y T σy2

i−1

i−1

D

y − yT

Eh

σy2

h

i−1

h

i−1

y − µT σy2

y − µT σy2

D

i−1

h

µ − µT σy2

h

µ − µT σy2

i−1

i−1

h

hyi + µT σy2

h

µ + µT σy2

i−1

i−1

µ

µ

µ

(16)

E

The expectation value y T [Q] y , where [Q] is any N by N matrix, can be simplified by

examining the implicit multiplications of such a vector-matrix-vector product.

T

y [Q] y =

N

N X

X

yi [Q]ij yj

(17)

i=1 j=1

Noting that hyi yj i = µi µj + σi2 δij gives

D

y T [Q] y

E

=

N X

N D

X

yi [Q]ij yj

E

i=1 j=1

=

N X

N

X

[Q]ij hyi yj i

i=1 j=1

=

N X

N

X

[Q]ij µi µj + σi2 δij

i=1 j=1

=

N X

N

X

µi [Q]ij µj +

i=1 j=1

N

X

[Q]ii σi2

(18)

i=1

or

D

E

h

y T [Q] y = µT [Q] µ + Tr [Q] σy2

i

(19)

Where the trace of any N × N square matrix [B] is the sum of its diagonal elements

Tr ([B]) =

N

X

i=1

[B]ii

(20)

4

Applying Eq. 19 to the first term on the right side of Eq. 16 gives

h

y T σy2

i−1

h

i−1

h

i−1

h

i−1

= µT σy2

y

= µT σy2

= µT σy2

h

µ + Tr σy2

i−1 h

σy2

i

µ + Tr [I]

µ+N

(21)

where the identity matrix

h i [I] above is of the N × N variety as it is formed by the product of

the N × N matrix σy2 and its inverse. In the last step, the trace of this identity matrix is

taken.

Substituting Eq. 21 into Eq. 16 then gives the expected result

D

E

χ2 = N

(22)

Finding the expectation value of the best-fit χ2 will be based on Eq. 12 in linear algebra

form:

h i−1 χ2 = y T − y fitT σy2

y − y fit

(23)

and begins

D

χ

2

E

=

T

y −y

fitT

h

i−1

σy2

y−y

fit

(24)

Substituting Eq. 1 and its transpose in Eq. 24 gives

D

χ

2

E

=

T

y −a

T

[Jay ]T

h

i−1

σy2

(y −

[Jay ] a)

(25)

Substituting Eq. 5 and its transpose then gives

D

χ

2

E

=

=

T

y −y

T

h

i†T

Jya

h

y T 1 − Jya

i†T

[Jay ]T

[Jay ]T

h

h

i−1

σy2

σy2

i−1

y−

[Jay ]

h

i†

Jya

h

1 − [Jay ] Jya

y

i† y

(26)

Expanding the product gives

D

E

χ2 =

yT

(27)

h

σy2

i−1

h

− σy2

i−1

h

[Jay ] Jya

i†

h

− Jya

i†T

h

[Jay ]T σy2

i−1

h

+ Jya

i†T

h

[Jay ]T σy2

i−1

h

[Jay ] Jya

i† y

Substituting Eq. 6 and its transpose in the last three matrix products, substituting [X]

h i−1

for the combination [Jay ]T σy2

[Jay ] (Eq. 7), and then canceling the product [X] [X]−1 shows

that, aside from their

signs, the last threeh matrix

products inside the parentheses are all the

h i−1

i−1

−1

2

y

y T

2

same and given by σy

[Ja ] [X] [Ja ] σy .

5

Taking account of the signs and using the form of the first matrix product, the resulting

expression is thus

D

2

χ

E

= y

i−1

σy2

h

T

y − y

T

h

i−1

σy2

h

[Jay ]

i†

Jya

y

(28)

Applying Eq. 19 to the first term in Eq. 28 gives

y

i−1

σy2

h

T

y

h

i−1

h

i−1

h

i−1

= µT σy2

= µT σy2

= µT σy2

h

µ + Tr σy2

i−1 h

σy2

i

µ + Tr [I]

µ+N

(29)

Applying Eq. 19 to the second term in Eq. 28 gives

y

T

h

i−1

σy2

[Jay ]

h

i†

Jya

h

y = µT σy2

i−1

i†

h

h

[Jay ] Jya µ + Tr σy2

i−1

h

[Jay ] Jya

i† h

σy2

i

(30)

Substituting Eq. 10 and then Eq. 4 provides the simplification of the first term on the right.

h

µT σy2

i−1

h

i†

h

[Jay ] Jya µ = µT σy2

h

= µ σy2

i−1

i−1

[Jay ] α

µ

(31)

h

Simplification of the second term in Eq. 30 begins by substituting Eq. 6 for Jya

proceeds as follows

h

Tr σy2

i−1

h

[Jay ] Jya

i† h

σy2

i

h

i−1

[Jay ] [X]−1 [Jay ]T σy2

h

i−1

[Jay ] [X]−1 [Jay ]T

h

i†T

[Jay ]T

= Tr σy2

= Tr σy2

= Tr Jya

h

h

= Tr [Jay ] Jya

h

i−1 h

σy2

i†

and then

i

i†

i†

= Tr Jya [Jay ]

= Tr [I]

= M

h

In the second line σy2

i−1 h

(32)

i

σy2 = [I] is used and in the third line the transpose of Eq. 6 is

h

i†

used. In the fourth line the equality between the trace of a square matrix ([Jay ] Jya ) and its

h

transpose ( Jya

i†T

h

h

[Jay ]T ) is used. But note that [Jay ] Jya

i†

i†

appearing in line four is an N × N

matrix whereas Jya [Jay ] appearing in line five is an M × M matrix. Consequently, the two

6

matrices cannot be equal. However, their traces are equal and only that fact is used in line

five. The substitution is valid because for any two matrices [A] of shape N × M and [B] of

shape M × N , both [A] [B] and [B] [A] exist and the rules for multiplication and trace give

Tr [A] [B] =

N X

M

X

[A]ij [B]ji

i=1 j=1

=

M X

N

X

[B]ji [A]ij

j=1 i=1

= Tr [B] [A]

(33)

In the sixth line of Eq. 32, Eq. 9 is substituted and in line 7 its trace is taken. Finally, using

Eq. 31 and Eq. 32 in Eq. 30 and combining this with Eq. 29 in Eq. 28 then gives

D

E

χ2 = N − M

(34)

Note the familiar result that the expectation value of χ2 is its number of degrees of freedom.

The true χ2 based on Eq. 14 has no adjustable parameters and the number of degrees of freedom

is the number of data points. The best-fit χ2 , on the other hand, is minimized by adjusting the

M fitting parameters a, thereby reducing the number of degrees of freedom by M . Note that

the best-fit χ2 is not just smaller than the true χ2 on average. It is, in fact, always smaller.

It is not always smaller by the amount M —the reduction can be smaller or larger than this.

The reduction is, in fact, a χ2 random variable with M degrees of freedom.

∆χ2 = 1 Rule

The ∆χ2 = 1 rule is based on the dependence of the χ2 on the fitting parameters as derived

in the main text and given by:

h i−1

∆χ2 = ∆aT σa2

∆a

(35)

where ∆χ2 is the increase in the χ2 from its best-fit minimum value that would occur if the

fitting parameters are changed by ∆a from their best-fit values while keeping all σi fixed at

their best-fit values.

The ∆χ2 = 1 rule can be stated:

If a fitting parameter is offset from its best-fit value by its standard deviation

e.g., from ak to ak + σk and then fixed there while all other fitting parameters

are readjusted to minimize the χ2 , the new χ2 will be one higher than its best-fit

minimum.

In the very rare case where all parameter covariances are zero, the covariance matrix [σa2 ] is

−1

−1

diagonal and its inverse [σa2 ] is also diagonal with elements [σa2 ]kk = 1/σk2 . The χ2 variation

given by Eq. 35 then becomes

∆χ

2

(∆ak )2

=

σk2

k=1

M

X

(36)

7

This equation gives ∆χ2 = 1 when any single parameter is moved one standard deviation σk

from its best-fit value. Changing any other parameters independently increases the ∆χ2 in a

like manner—quadratically, in ∆ak /σk .

While the independent increases in ∆χ2 given above for uncorrelated parameters is consistent with the ∆χ2 = 1 rule, the rule requires the re-minimization caveat for the general—and

vastly more likely—case of correlated parameters. With correlated parameters, an increase in

∆χ2 from one parameter change can be partially canceled by appropriate changes in the other

parameters. Offsetting any parameter one standard deviation from its best-fit value (keeping

the others at their best-fit values) generally gives a ∆χ2 ≥ 1. However, the ∆χ2 value always

decreases back to one if the other parameters are then re-optimized for the smallest possible χ2

holding the offset parameter fixed. This second χ2 minimization is the caveat to the ∆χ2 = 1

rule.

To see how the rule arises,1 the parameter vector ∆a is partitioned into a vector ∆a1 of

length K and another vector ∆a2 of length L = M − K.

∆a =

∆a1

∆a2

(37)

where the horizontal line represents the partitioning and does not represent a division operation. The parameters are ordered so those in the second group will be offset from their

best-fit values to see how the χ2 changes if the parameters in the first group are automatically

re-optimized for any variations in that second group. At the end of the derivation, the second

group will be taken to consist of a single parameter to prove the ∆χ2 = 1 rule.

−1

The weighting matrix [σa2 ] is then partitioned into an K × K matrix A, an L × L matrix

C, a K × L matrix B and its L × K transpose B T

h

σa2

i−1

=

[B]

[B]T [C]

[A]

(38)

−1

Note that the self-transpose symmetry of [σa2 ] implies that same symmetry for [σa2 ] and for

the square matrices [A] and [C]. It is also why the two off-diagonal matrices [B] and [B]T are

transposes of one another.

Equation 35 then becomes

h

∆χ2 = ∆aT σa2

i−1

∆a

=

1

∆aT1 ∆aT2

[B]

[B]T [C]

[A]

∆a1

∆a2

This derivation is from R. A. Arndt and M. H. MacGregor, Nucleon-Nucleon Phase Shift Analysis by

Chi-Squared Minimization in Methods of Mathematical Physics, vol. 6, pp. 253-296, Academic Press, New

York (1966).

8

=

∆aT1 ∆aT2

[A] ∆a1 + [B] ∆a2

T

[B] ∆a1 + [C] ∆a2

= ∆aT1 [A] ∆a1 + ∆aT1 [B] ∆a2 + ∆aT2 [B]T ∆a1 + ∆aT2 [C] ∆a2

(39)

Now we consider ∆a2 to be fixed and adjust ∆a1 to minimize ∆χ2 for this fixed ∆a2 . The

minimization proceeds as usual; finding this value of ∆a1 requires solving the set of K equations obtained by setting to zero the derivative of ∆χ2 with respect to each ∆ak , k = 1...K in

∆a1 . The equation for index k becomes

0 =

d∆χ2

d∆ak

= δkT [A] ∆a1 + ∆aT1 [A] δk + δkT [B] ∆a2 + ∆aT2 [B]T δk

(40)

where δk = d∆a1 /d∆ak is the unit column-vector with a single 1 in the kth row, and δkT is its

transposed row vector. All terms are scalars and the first two and second two are identical—as

the expressions are simply transposes of one another. Dividing both sides by two, Eq. 40 can

then be taken to be

0 = δkT [A] ∆a1 + δkT [B] ∆a2

(41)

and the full set of all K of these equations is the vector equation

0 = [A] ∆a1 + [B] ∆a2

(42)

which has the solution

∆a1 = − [A]−1 [B] ∆a2

(43)

where [A]−1 is the inverse of [A] and is also a self-transpose matrix. Inserting this equation

and its transpose in Eq. 39 then gives

∆χ2 = ∆aT2 [B]T [A]−1 [A] [A]−1 [B] ∆a2 − ∆aT2 [B]T [A]−1 [B] ∆a2

−∆aT2 [B]T [A]−1 [B] ∆a2 + ∆aT2 [C] ∆a2

(44)

After canceling an [A]−1 [A] = [I] in the first term, the first three terms are all identical (aside

from their signs) and the equation can be rewritten

∆χ2 = ∆aT2 [C] − [B]T [A]−1 [B] ∆a2

(45)

Note that this equation gives ∆χ2 as a function of the parameters in a subset of its parameter

space without specifying the values for the other parameters. Whenever this is done the

re-optimization of all unspecified parameters for a minimum χ2 is implied.

9

Now we must consider the covariance matrix [σa2 ]. It can be put in the same submatrix

form

h i

[A0 ] [B 0 ]

σa2 =

(46)

0 T

0

[B ] [C ]

h

i

The equation σy2 [σa2 ]

−1

= [I] then gives

[I] =

=

[A0 ]

[B 0 ]

[B 0 ]T [C 0 ]

[B]

[B]T [C]

[A]

[A0 ] [A] + [B 0 ] [B]T

0 T

0

[B ] [A] + [C ] [B]

T

[A0 ] [B] + [B 0 ] [C]

0 T

0

[B ] [B] + [C ] [C]

(47)

Consequently the K × K and L × L matrices in the top left and lower right must be identity

matrices and the L×K and K ×L matrices in the upper right and lower left must be identically

zero. That is,

[A0 ] [A] + [B 0 ] [B]T = [I]

(48)

[A0 ] [B] = − [B 0 ] [C]

T

[B 0 ] [A] = − [C 0 ] [B]T

T

[B 0 ] [B] + [C 0 ] [C] = [I]

(49)

(50)

(51)

Multiplying Eq. 50 by [A]−1 on the right gives

[B 0 ] = − [C 0 ] [B]T [A]−1

(52)

[B 0 ] [B] = − [C 0 ] [B]T [A]−1 [B]

(53)

T

or

T

which, when inserted into Eq. 51 gives

[C 0 ] [C] − [B]T [A]−1 [B] = [I]

(54)

Multiplying both sides of Eq. 54 by [C 0 ]−1 (the inverse of the L × L covariance submatrix [C 0 ])

on the left gives

−1

[C] − [B]T [A]−1 [B] = [C 0 ]

(55)

which can be substituted into Eq. 45 to get

∆χ2 = ∆aT2 [C 0 ]

−1

∆a2

(56)

The transpose of Eq. 52 is

[B 0 ] = − [A]−1 [B] [C 0 ]

(57)

10

(since [A]−1 and [C 0 ] are self-transpose matrices). Multiplying on the right by [C 0 ]−1 gives

[B 0 ] [C 0 ]

−1

= − [A]−1 [B]

(58)

Substituting this in Eq. 43 then gives

−1

∆a1 = [B 0 ] [C 0 ]

∆a2

(59)

Now we can consider the case that ∆a2 consists of a single parameter, say the very last

one, ∆aM . The rest are in ∆a1 and consist of the set {∆aj }, j = 1...M − 1. From Eq. 46, [C 0 ]

2

would then be a 1 × 1 submatrix of the covariance matrix and would be given by [C 0 ] = σM

2

2

and thus [C 0 ]−1 = 1/σM

. Solving Eq. 56 for σM

then gives

2

σM

=

(∆aM )2

∆χ2

(60)

Furthermore, the submatrix [B 0 ] would be the last column of the covariance matrix (less the

2

last element, σM

). Thus, it is a 1 × (M − 1) matrix whose elements are the covariances of the

2

other variables with aM ; [B 0 ]1j = σjM . Each element of Eq. 59 is then ∆aj = σjM ∆aM /σM

.

Eliminating σM using Eq. 60 and solving for σjM then gives

σjM =

∆aj ∆aM

∆χ2

(61)

If we further restrict ∆χ2 = 1, these last two equations give ∆aM = ±σM , and σjM =

∆aj ∆aM . This is the complete ∆χ2 = 1 rule.

Equations 60 and 61 are a more general form of the ∆χ2 = 1 rule that can be used

with such programs as Microsoft Excel’s Solver that perform minimizations but do not return

the parameter variances or covariances. The procedure consists of first finding the best-fit

parameters {aj } and the corresponding χ20 via a minimization including all parameters. Next

a parameter of interest aM is changed by an arbitrary amount ∆aM (no larger than a few

standard deviations σM ) and the program is used again to minimize the χ2 , but this time with

the parameter of interest kept fixed, i.e., aM is not included in the parameter list. The new χ2

and the new parameter values {a0j } are recorded. ∆χ2 = χ2 − χ20 and all parameter changes

∆aj = a0j − aj are calculated and used with Eqs. 60 and 61 to find the parameter’s variance

2

σM

and its covariances σjM .

The ∆χ2 = 1 rule answers the question: “What are the possible values for parameter ak

such that the χ2 can still be kept within one of its minimum?” Taking the partitioned set

{a2 } to include only that one variable, Eq. 56 gives the answer: inside the interval ak ± σk .

Taking the partitioned set {a2 } to consist of two elements, that same equation can also be

used to answer the question: “What are the possible pairs of values for parameters aj and ak

such that the χ2 can be kept within one of its minimum?” The answer turns out to be those

values inside an ellipse in the space of aj and ak on which ∆χ2 = 1.

11

To find this ellipse from Eq. 56, the inverse [C 0−1 ] of a 2 × 2 submatrix of the covariance

matrix will be required. The covariance submatrix for two parameters, say, a and b is expressed

[C 0 ] =

σa2

σab

σab

σb2

(62)

and its inverse is given by

0 −1

[C ]

−σab

σb2

1

= 2 2

2

2

σa σb − σab

− σab σa

(63)

Equation 56 then gives

∆χ

2

−σab

σb2

1

∆a ∆b

=

2

2

2

σa σb − σab

− σab σa2

∆a

∆b

!

!

1

σb2 ∆a − σab ∆b

∆a

∆b

=

2

−σab ∆a + σa2 ∆b

σa2 σb2 − σab

h

i

1

2

2

2

2

=

σ

(∆a)

−

2σ

∆a∆b

+

σ

(∆b)

ab

b

a

2

2

σa2 σb − σab

(64)

With the correlation coefficient ρab defined by

ρab =

σab

σa σb

(65)

and defining each parameter offset in units of its standard deviation by

∆a

σa

∆b

=

σb

δa =

(66)

δb

(67)

Eq. 64 becomes

∆χ2 =

i

1 h 2

2

δ

−

2ρ

δ

δ

+

δ

ab a b

b

1 − ρ2ab a

(68)

With no correlation, ρab = 0 and the ∆χ2 = 1 contour is given by

1 = δa2 + δb2

(69)

This is the equation for the unit circle.

The effect of non-zero correlation is clearer in the space of the variables δa0 and δb0 rotated

◦

45 counterclockwise from those of δa and δb and defined by

δa + δb

√

2

−δa + δb

√

=

2

δa0 =

δb0

(70)

12

δb

δa'

δ' b

1

ρab

where δa =1,

δb = ρab

-1

δa

1

ρab

-1

Figure 1: Three ∆χ2 = 1 ellipses are inscribed in a unit square, each with principal axes along

the square’s diagonals. The green circle is for uncorrelated parameters, the red ellipse is for a

medium positive correlation and the cyan ellipse is for a strong negative correlation.

The inverse transformation is then

δa

δb

δa0 − δb0

√

=

2

δa0 + δb0

√

=

2

(71)

Figure 1 shows these axes along with various ∆χ2 = 1 ellipses.

Using the transformation to δa0 and δb0 in Eq. 68 for the ∆χ2 = 1 contour gives

1 δa0 − δb0

√

1 =

1 − ρ2ab

2

!2

− 2ρab

δa0 − δb0

√

2

!

δa0 + δb0

√

2

1

δa02 δb02

02

02

=

δ

+

δ

−

2ρ

−

ab

b

1 − ρ2ab a

2

2

i

h

1

=

δa02 (1 − ρab ) + δb02 (1 + ρab )

(1 + ρab )(1 − ρab )

δa02

δb02

=

+

(1 + ρab ) (1 − ρab )

"

!

δ0 + δ0

+ a√ b

2

!2

!#

(72)

13

With correlation, the ∆χ2 = 1 contour is an ellipse with its principal axes along the δa0

0

and

45◦ to the

δa and δb axes and intersecting the δa0 -axis at

√ δb directions, i.e., oriented at

√

± 1 + ρab and intersecting the δb0 -axis at ± 1 − ρab . The correlation coefficient is limited√to

−1 < ρab < 1 and so the semimajor axis (long radius) must be between one (ρab = 0) and 2

(ρab = ±1) and the semiminor axis (short radius) must

be between zero (ρq

ab = ±1) and one

q

(ρab = 0). Notice that the ellipse area is given by π (1 + ρab )(1 − ρab ) = π 1 − ρ2ab and goes

from a maximum of π for ρab = 0 down to zero as ρab goes toward ±1.

When |ρab | 1, the ellipse is only slightly squashed along one axis and slightly expanded

along the other. When ρab is positive, the major axis is along δa0 and the ellipse has extra area

in the two quadrants where δa and δb have the same sign. If one parameter turns out to be

above its best estimate, the other is a more likely to be above its best estimate. When ρab is

negative, the major axis is along δb0 and the ellipse has extra area in the two quadrants where

δa and δb have the opposite sign. If one parameter turns out to be above its best estimate, the

other is more likely to be below its best estimate.

With nonlinear fitting functions, the linearity with fitting parameters (first order Taylor

expansion) is assumed to be valid over several standard deviations of those parameters. If the

data set is small or has large errors, the fitting parameter standard deviations may be large

enough that this condition is violated. It may also be violated if the fitting function derivatives

depend too strongly on the parameters. Consequently, it is a good idea to test this linearity

over an appropriate range. (For linear fitting functions, the formulation is exact and such a

test is unnecessary.)

Finding the covariance matrix elements using Eq. 60 for the variances and Eq. 61 for

the covariances should use parameter offsets that are small and large. Below some level of

∆χ2 , they should all give the same results. This would give relatively small ∆aM where the

first-order Taylor expansion should be accurate. However, high values for ∆χ2 —up to about

10—should also be tried. To get a ∆χ2 = 9, Eq. 60 predicts that aM will need to be offset

by ±3σM . If the same results are obtained with this value of ∆χ2 , the first-order Taylor

expansion must still be accurate out to aM ± 3σM , which is large enough to cover most of the

probability distribution for aM . For values of ∆χ2 much larger than this, the fitting function

will be evaluated for parameters where the first-order Taylor expansion need not and may

not be valid. On the other hand, variance and covariance calculations that differ significantly

between ∆χ2 values of 0.1 and 1 would be an indication that the first-order Taylor expansion

is already failing when aM is only one standard deviation from the best fit.