The Split Bregman Method for L1-Regularized Problems Tom Goldstein May 22, 2008


Some Common L1 Regularized Problems

TV Denoising: $\min_u \|u\|_{BV} + \frac{\mu}{2}\|u - f\|_2^2$

De-Blurring/Deconvolution: $\min_u \|u\|_{BV} + \frac{\mu}{2}\|Ku - f\|_2^2$

Basis Pursuit/Compressed Sensing MRI: $\min_u \|u\|_{BV} + \frac{\mu}{2}\|Fu - f\|_2^2$

What Makes These Problems Hard?

- Some “easy” problems:

  $$\arg\min_u \|Au - f\|_2^2 \quad \text{(differentiable)}$$

  $$\arg\min_u |u|_1 + \|u - f\|_2^2 \quad \text{(solvable by shrinkage)}$$

- Some “hard” problems:

  $$\arg\min_u |\Phi u|_1 + \|u - f\|_2^2$$

  $$\arg\min_u |u|_1 + \|Au - f\|_2^2$$

- What makes these problems hard is the “coupling” between the L1 and L2 terms.
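The “solvable by shrinkage” claim can be checked numerically. The sketch below assumes the easy problem is read componentwise as $\min_u |u|_1 + \|u - f\|_2^2$, for which soft thresholding with threshold 1/2 gives the minimizer (the 1/2 arises because the quadratic term carries no factor of 1/2). The names `shrink` and `energy` are illustrative and do not come from the talk.

```python
import numpy as np

def shrink(x, gamma):
    """Soft thresholding: sign(x) * max(|x| - gamma, 0), elementwise."""
    return np.sign(x) * np.maximum(np.abs(x) - gamma, 0.0)

def energy(u, f):
    """Energy |u|_1 + ||u - f||_2^2 of the 'easy' L1 problem."""
    return np.sum(np.abs(u)) + np.sum((u - f) ** 2)

f = np.array([2.0, 0.3, -1.0, -0.4, 0.0])

# Setting the (sub)derivative sign(u) + 2(u - f) to zero per component
# yields soft thresholding of f with threshold 1/2.
u_star = shrink(f, 0.5)
```

Entries of `f` with magnitude below the threshold are set exactly to zero, which is the sparsifying behaviour that makes the L1 term attractive.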

A Better Formulation

- We want to solve the general L1 regularization problem:

  $$\arg\min_u |\Phi u| + \|Ku - f\|^2$$

- We need to “split” the L1 and L2 components of this energy.

- Introduce a new variable: let $d = \Phi u$.

- We wish to solve the constrained problem

  $$\arg\min_{u,d} \|d\|_1 + H(u) \quad \text{such that } d = \Phi(u)$$

  where $H(u)$ stands for the L2 term, here $\|Ku - f\|^2$.

Solving the Constrained Problem

$$\arg\min_{u,d} \|d\|_1 + H(u) \quad \text{such that } d = \Phi(u)$$

- We add an L2 penalty term to get an unconstrained problem

  $$\arg\min_{u,d} \|d\|_1 + H(u) + \frac{\lambda}{2}\|d - \Phi(u)\|^2$$

- This splitting was independently introduced by Wang and Dr. Yin Zhang (FTVd).

- We need a way of modifying this problem to get exact enforcement of the constraint.

- The most obvious way is to use continuation: let $\lambda_n \to \infty$.

- Continuation degrades the condition number of the sub-problems as $\lambda_n$ grows.

A Better Solution: Use Bregman Iteration

- We group the first two energy terms together:

  $$\arg\min_{u,d} \underbrace{\|d\|_1 + H(u)}_{E(u,d)} + \frac{\lambda}{2}\|d - \Phi(u)\|^2$$

- to get...

  $$\arg\min_{u,d} E(u,d) + \frac{\lambda}{2}\|d - \Phi(u)\|^2$$

- We now define the “Bregman Distance” of this convex functional as

  $$D_E^p(u,d,u^k,d^k) = E(u,d) - E(u^k,d^k) - \langle p_u^k, u - u^k\rangle - \langle p_d^k, d - d^k\rangle$$

A Better Solution: Use Bregman Iteration

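- Rather than solve $\min E(u,d) + \frac{\lambda}{2}\|d - \Phi(u)\|^2$, we recursively solve

  $$(u^{k+1}, d^{k+1}) = \arg\min_{u,d} D_E^p(u,d,u^k,d^k) + \frac{\lambda}{2}\|d - \Phi(u)\|_2^2$$

  or, written out (up to a constant that does not affect the minimizer),

  $$\arg\min_{u,d} E(u,d) - \langle p_u^k, u - u^k\rangle - \langle p_d^k, d - d^k\rangle + \frac{\lambda}{2}\|d - \Phi(u)\|_2^2$$

- Here $p_u^k$ and $p_d^k$ are subgradients of $E$ with respect to the variables $u$ and $d$, taken at $(u^k, d^k)$.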

Why does this work?

- Because of the convexity of the functionals we are using, it can be shown that

  $$\|d^k - \Phi u^k\| \to 0 \quad \text{as } k \to \infty$$

- Furthermore, it can be shown that the limiting values $u^* = \lim_{k\to\infty} u^k$ and $d^* = \lim_{k\to\infty} d^k$ satisfy the original constrained optimization problem

  $$\arg\min_{u,d} \|d\|_1 + H(u) \quad \text{such that } d = \Phi(u)$$

- It therefore follows that $u^*$ is a solution to the original L1-regularized problem

  $$u^* = \arg\min_u |\Phi u| + \|Ku - f\|^2$$

Don’t Worry! This isn’t as complicated as it looks

- As is done for Bregman iterative denoising, we can get explicit formulas for $p_u$ and $p_d$, and use them to simplify the iteration.

- This gives us the simplified iteration

  $$(u^{k+1}, d^{k+1}) = \arg\min_{u,d} \|d\|_1 + H(u) + \frac{\lambda}{2}\|d - \Phi(u) - b^k\|^2$$

  $$b^{k+1} = b^k + (\Phi(u^{k+1}) - d^{k+1})$$

- This is the analog of “adding the noise back” when we use Bregman for denoising.

Summary of what we have so far

- We began with an L1-regularized problem

  $$u^* = \arg\min_u |\Phi u| + \|Ku - f\|^2$$

- We form the “Split Bregman” formulation

  $$\min_{u,d} \|d\|_1 + H(u) + \frac{\lambda}{2}\|d - \Phi(u) - b\|^2$$

- For some optimal value $b = b^*$ of the Bregman parameter, these two problems are equivalent.

- We solve the optimization problem by iterating

  $$(u^{k+1}, d^{k+1}) = \arg\min_{u,d} \|d\|_1 + H(u) + \frac{\lambda}{2}\|d - \Phi(u) - b^k\|^2$$

  $$b^{k+1} = b^k + (\Phi(u^{k+1}) - d^{k+1})$$

Why is this better?

- We can break this algorithm down into three easy steps:

  Step 1: $u^{k+1} = \arg\min_u H(u) + \frac{\lambda}{2}\|d^k - \Phi(u) - b^k\|_2^2$

  Step 2: $d^{k+1} = \arg\min_d |d|_1 + \frac{\lambda}{2}\|d - \Phi(u^{k+1}) - b^k\|_2^2$

  Step 3: $b^{k+1} = b^k + \Phi(u^{k+1}) - d^{k+1}$

- Because of the decoupled form, Step 1 is now a differentiable optimization problem; we can solve it directly with tools like the Fourier transform, Gauss-Seidel, CG, etc.

- Step 2 can be solved efficiently using shrinkage:

  $$d^{k+1} = \mathrm{shrink}(\Phi(u^{k+1}) + b^k, 1/\lambda)$$

- Step 3 is explicit and easy to evaluate.
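The three steps can be sketched on a small 1D problem. This is a minimal illustration under stated assumptions, not the talk's implementation: $\Phi$ is taken to be a forward-difference matrix `D`, $H(u) = \frac{\mu}{2}\|u - f\|_2^2$, and Step 1 is solved exactly with a dense linear solve rather than Gauss-Seidel or an FFT. The function name `split_bregman_tv1d` and the parameter defaults are hypothetical.

```python
import numpy as np

def shrink(x, gamma):
    """Soft thresholding: sign(x) * max(|x| - gamma, 0), elementwise."""
    return np.sign(x) * np.maximum(np.abs(x) - gamma, 0.0)

def split_bregman_tv1d(f, mu=5.0, lam=1.0, n_iter=200):
    """Sketch: minimize |D u|_1 + (mu/2) ||u - f||_2^2 by Split Bregman."""
    n = f.size
    D = np.diff(np.eye(n), axis=0)          # (D u)_i = u_{i+1} - u_i
    A = mu * np.eye(n) + lam * D.T @ D      # normal equations of Step 1
    u = f.copy()
    d = np.zeros(n - 1)
    b = np.zeros(n - 1)
    for _ in range(n_iter):
        # Step 1: differentiable sub-problem in u, solved exactly here
        u = np.linalg.solve(A, mu * f + lam * D.T @ (d - b))
        # Step 2: shrinkage with threshold 1/lambda
        d = shrink(D @ u + b, 1.0 / lam)
        # Step 3: explicit Bregman update
        b = b + D @ u - d
    return u, d, b
```

Calling `split_bregman_tv1d(f)` on a noisy piecewise-constant signal `f` returns the denoised signal together with the auxiliary variables `d` and `b`. The dense solve is only sensible for small `n`; it is used here to keep the sketch short.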

Example: Fast TV Denoising

- We begin by considering the anisotropic ROF denoising problem

  $$\arg\min_u |\nabla_x u| + |\nabla_y u| + \frac{\mu}{2}\|u - f\|_2^2$$

- We then write down the Split Bregman formulation

  $$\arg\min_{d_x,d_y,u} |d_x| + |d_y| + \frac{\mu}{2}\|u - f\|_2^2 + \frac{\lambda}{2}\|d_x - \nabla_x u - b_x\|_2^2 + \frac{\lambda}{2}\|d_y - \nabla_y u - b_y\|_2^2$$

Example: Fast TV Denoising

- The TV algorithm then breaks down into these steps:

  Step 1: $u^{k+1} = G(u^k)$

  Step 2: $d_x^{k+1} = \mathrm{shrink}(\nabla_x u^{k+1} + b_x^k, 1/\lambda)$

  Step 3: $d_y^{k+1} = \mathrm{shrink}(\nabla_y u^{k+1} + b_y^k, 1/\lambda)$

  Step 4: $b_x^{k+1} = b_x^k + (\nabla_x u^{k+1} - d_x^{k+1})$

  Step 5: $b_y^{k+1} = b_y^k + (\nabla_y u^{k+1} - d_y^{k+1})$

  where $G(u^k)$ represents the result of one Gauss-Seidel sweep for the corresponding L2 optimization problem.

- This is very cheap: each step is only a few operations per pixel.

Isotropic TV

- This method can do isotropic TV using the following decoupled formulation

  $$\arg\min_{u,d_x,d_y} \sqrt{d_x^2 + d_y^2} + \frac{\mu}{2}\|u - f\|_2^2 + \frac{\lambda}{2}\|d_x - \nabla_x u - b_x\|_2^2 + \frac{\lambda}{2}\|d_y - \nabla_y u - b_y\|_2^2$$

- We now have to solve for $(d_x, d_y)$ using the generalized shrinkage formula (Yin et al.)

  $$d_x^{k+1} = \max(s^k - 1/\lambda, 0)\,\frac{\nabla_x u^k + b_x^k}{s^k}$$

  $$d_y^{k+1} = \max(s^k - 1/\lambda, 0)\,\frac{\nabla_y u^k + b_y^k}{s^k}$$

  where

  $$s^k = \sqrt{(\nabla_x u^k + b_x^k)^2 + (\nabla_y u^k + b_y^k)^2}$$
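The generalized shrinkage formula translates directly into array code. In this sketch, `vx` and `vy` stand for $\nabla_x u^k + b_x^k$ and $\nabla_y u^k + b_y^k$; the function name `shrink2` and the small constant `eps`, which guards the division where $s^k = 0$, are assumptions added for illustration.

```python
import numpy as np

def shrink2(vx, vy, gamma, eps=1e-12):
    """Generalized (isotropic) shrinkage of the vector field (vx, vy).

    Each vector keeps its direction while its length s is reduced to
    max(s - gamma, 0).
    """
    s = np.sqrt(vx ** 2 + vy ** 2)
    # eps avoids 0/0 where s == 0; the numerator is zero there anyway.
    scale = np.maximum(s - gamma, 0.0) / np.maximum(s, eps)
    return scale * vx, scale * vy
```

With `gamma = 1/lam`, the call `dx, dy = shrink2(gx + bx, gy + by, 1.0 / lam)` would replace the two independent scalar shrinkage steps of the anisotropic algorithm.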

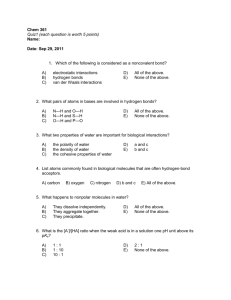

Time Trials

- Time trials were done on an Intel Core 2 Duo desktop (3 GHz)

- Linux platform, compiled with g++

Anisotropic

| Image | Time/cycle (sec) | Total time (sec) |
|---|---|---|
| 256 × 256 Blocks | 0.0013 | 0.068 |
| 512 × 512 Lena | 0.0054 | 0.27 |

Isotropic

| Image | Time/cycle (sec) | Total time (sec) |
|---|---|---|
| 256 × 256 Blocks | 0.0018 | 0.0876 |
| 512 × 512 Lena | 0.011 | 0.55 |

This can be made even faster...

- Most of the denoising takes place in the first 10 iterations.

- “Staircases” form quickly, but then take some time to flatten out.

- If we are willing to accept a “visual” convergence criterion, we can denoise in about 10 iterations (0.054 sec) for Lena, and 20 iterations (0.024 sec) for the blocky image.

Compressed Sensing for MRI

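- Many authors (Donoho, Yin, etc.) get superior reconstruction using both TV and Besov regularizers.

- We wish to solve

  $$\arg\min_u |\nabla u| + |Wu| + \frac{\mu}{2}\|RFu - f^k\|_2^2$$

  where $F$ is the Fourier transform, $R$ comprises a subset of rows of the identity, and $W$ is an orthogonal wavelet transform (Haar). Here $f^k$ is the measured data $f$, possibly updated by the outer iteration introduced later in the talk.

- Apply the “Split Bregman” method: let $w \leftarrow Wu$, $d_x \leftarrow \nabla_x u$, and $d_y \leftarrow \nabla_y u$

  $$\arg\min_{u,d_x,d_y,w} \sqrt{d_x^2 + d_y^2} + |w| + \frac{\mu}{2}\|RFu - f\|_2^2 + \frac{\lambda}{2}\|d_x - \nabla_x u - b_x\|_2^2 + \frac{\lambda}{2}\|d_y - \nabla_y u - b_y\|_2^2 + \frac{\gamma}{2}\|w - Wu - b_w\|_2^2$$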

Compressed Sensing for MRI

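- The optimality condition for $u$ is circulant:

  $$(\mu F^T R^T R F - \lambda\Delta + \gamma I)\,u^{k+1} = \mathrm{rhs}^k$$

- The resulting algorithm is

  Unconstrained CS Optimization Algorithm

  $u^{k+1} = F^{-1} K^{-1} F\,\mathrm{rhs}^k$

  $(d_x^{k+1}, d_y^{k+1}) = \mathrm{shrink}(\nabla_x u^{k+1} + b_x^k, \nabla_y u^{k+1} + b_y^k, 1/\lambda)$

  $w^{k+1} = \mathrm{shrink}(W u^{k+1} + b_w^k, 1/\gamma)$

  $b_x^{k+1} = b_x^k + (\nabla_x u^{k+1} - d_x^{k+1})$

  $b_y^{k+1} = b_y^k + (\nabla_y u^{k+1} - d_y^{k+1})$

  $b_w^{k+1} = b_w^k + (W u^{k+1} - w^{k+1})$

  where $K = \mu R^T R - \lambda F \Delta F^{-1} + \gamma I$ is diagonal in the Fourier domain.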
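The $u$ update is the only step that needs a linear solve, and its circulant structure reduces it to two FFTs and a pointwise division. The sketch below assumes a unitary 2D DFT for $F$ (`norm="ortho"`), a periodic 5-point Laplacian for $\Delta$, and a 0/1 array `mask` on the Fourier grid representing $R^T R$. The names `solve_u` and `apply_operator` are hypothetical, and `apply_operator` exists only to check the solve.

```python
import numpy as np

def solve_u(rhs, mask, mu, lam, gamma):
    """Solve (mu F^T R^T R F - lam*Laplacian + gamma*I) u = rhs with FFTs."""
    n, m = rhs.shape
    wy = 2.0 * np.pi * np.fft.fftfreq(n)
    wx = 2.0 * np.pi * np.fft.fftfreq(m)
    # Fourier symbol of the negative periodic 5-point Laplacian
    neg_lap = (2.0 - 2.0 * np.cos(wy))[:, None] + (2.0 - 2.0 * np.cos(wx))[None, :]
    K = mu * mask + lam * neg_lap + gamma   # diagonal of K, positive if gamma > 0
    return np.fft.ifft2(np.fft.fft2(rhs, norm="ortho") / K, norm="ortho")

def apply_operator(u, mask, mu, lam, gamma):
    """Apply the same operator directly, without using K (for checking)."""
    data = np.fft.ifft2(mask * np.fft.fft2(u, norm="ortho"), norm="ortho")
    lap = (np.roll(u, 1, 0) + np.roll(u, -1, 0)
           + np.roll(u, 1, 1) + np.roll(u, -1, 1) - 4.0 * u)
    return mu * data - lam * lap + gamma * u
```

The result is complex in general, because an arbitrary sampling mask is not conjugate-symmetric. Applying `apply_operator` to the output of `solve_u` should reproduce `rhs` up to rounding.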

Compressed Sensing for MRI

- To solve the constrained problem

  $$\arg\min_u |\nabla u| + |Wu| \quad \text{such that } \|RFu - f\|_2 < \sigma$$

  we use “double Bregman”.

- First, solve the unconstrained problem

  $$\arg\min_u |\nabla u| + |Wu| + \frac{\mu}{2}\|RFu - f^k\|_2^2$$

  by performing “inner” iterations.

- Then, update

  $$f^{k+1} = f^k + f - RFu^{k+1}$$

  This is an “outer” iteration.

Compressed Sensing

- 256 × 256 MRI of phantom, 30%

Bregman Iteration vs Continuation

- As $\lambda \to \infty$, the condition number of each sub-problem goes to $\infty$.

- This is okay if we have a direct solver for each sub-problem (such as FFT).

- Drawback: direct solvers are slower than iterative solvers, or may not be available.

- With Bregman iteration, the condition number stays constant, so we can use efficient iterative solvers.
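The growth of the condition number under continuation can be seen on a small model problem. The sketch below assumes the sub-problem operator has the form $\mu I - \lambda\Delta$, as in the TV examples of this talk, and uses a 1D periodic Laplacian on 64 points. The function name and the chosen values of $\lambda$ are illustrative.

```python
import numpy as np

def subproblem_condition_number(mu, lam, n=64):
    """2-norm condition number of mu*I - lam*Laplacian (1D, periodic)."""
    I = np.eye(n)
    lap = np.roll(I, 1, axis=0) + np.roll(I, -1, axis=0) - 2.0 * I
    return np.linalg.cond(mu * I - lam * lap)

# Continuation drives lam upward; Bregman iteration keeps lam fixed.
lams = [1.0, 10.0, 100.0, 1000.0]
conds = [subproblem_condition_number(1.0, lam) for lam in lams]
```

For even `n` the eigenvalues of $-\Delta$ span $[0, 4]$, so the condition number equals $1 + 4\lambda/\mu$ and grows linearly in $\lambda$. With a fixed $\lambda$ comparable to $\mu$ it stays small, which is what keeps iterative solvers such as Gauss-Seidel effective.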

Example: Direct Solvers May be Inefficient

- TV-L1:

  $$\arg\min_u |\nabla u| + \mu|u - f|$$

- Split-Bregman formulation

  $$\arg\min_{u,d,v} |d| + \mu|v - f| + \frac{\lambda}{2}\|d - \nabla u - b_d\|_2^2 + \frac{\gamma}{2}\|u - v - b_v\|_2^2$$

- We must solve the sub-problem

  $$(\mu I - \lambda\Delta)u = \mathrm{RHS}$$

- If $\lambda \approx \mu$, then this is strongly diagonally dominant: use Gauss-Seidel (cheap).

- If $\lambda \gg \mu$, then we must use a direct solver: 2 FFTs per iteration (expensive).

Example: Direct Solvers May Not Exist

- Total-variation based inpainting:

  $$\arg\min_u \int_\Omega |\nabla u| + \mu \int_{\Omega/D} (u - f)^2$$

  $$\arg\min_u |\nabla u| + \mu\|Ru - f\|^2$$

  where $R$ consists of rows of the identity matrix.

- The optimization sub-problem is

  $$(\mu R^T R - \lambda\Delta)u = \mathrm{RHS}$$

- Not circulant! We have to use an iterative solver (e.g. Gauss-Seidel).

Generalizations

- Bregman iteration can be used to solve a wide range of non-L1 problems

  $$\arg\min_u J(u) \quad \text{such that } A(u) = 0$$

  where $J$ and $\|A(\cdot)\|^2$ are convex.

- We can use a Bregman-like penalty function

  $$u^{k+1} = \arg\min_u J(u) + \frac{\lambda}{2}\|A(u) - b^k\|^2$$

  $$b^{k+1} = b^k - A(u^{k+1})$$

- Theorem: Any fixed point of the above algorithm is a solution to the original constrained problem.

- Convergence can be proved for a broad class of problems: if $J$ is strictly convex and twice differentiable, then $\exists \lambda_0 > 0$ such that the algorithm converges for any $\lambda < \lambda_0$.
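A small quadratic example makes the generalized iteration concrete. This sketch assumes $J(u) = \frac{1}{2}\|u - c\|^2$ and an affine constraint $A(u) = Mu - y$, so the $u$ update reduces to a linear solve. The function name, the test data, and the value of `lam` are illustrative choices, not taken from the talk.

```python
import numpy as np

def bregman_constrained(c, M, y, lam=10.0, n_iter=50):
    """Sketch: minimize 0.5*||u - c||^2 subject to A(u) = M u - y = 0."""
    b = np.zeros(y.size)
    lhs = np.eye(c.size) + lam * M.T @ M
    u = c.copy()
    for _ in range(n_iter):
        # u-update: set the gradient (u - c) + lam * M^T (M u - y - b) to zero
        u = np.linalg.solve(lhs, c + lam * M.T @ (y + b))
        # b-update: b^{k+1} = b^k - A(u^{k+1})
        b = b - (M @ u - y)
    return u, b

c = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
M = np.array([[1.0, 1.0, 1.0, 1.0, 1.0],
              [1.0, -1.0, 0.0, 0.0, 0.0]])
y = np.array([0.0, 1.0])
u, b = bregman_constrained(c, M, y)
```

For this data the iterates approach the projection of `c` onto the constraint set $\{u : Mu = y\}$, which can be computed in closed form as $c - M^T (M M^T)^{-1}(Mc - y)$ for comparison.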

Conclusion

- The Split Bregman formulation is a fast tool that can solve almost any L1 regularization problem

- Small memory footprint

- This method is easily parallelized for large problems

- Easy to code

Acknowledgment

We thank Jie Zheng for his helpful discussions regarding MR image processing. This publication was made possible by the support of the National Science Foundation’s GRFP program, as well as ONR grant N000140710810 and the Department of Defense.