CONTINUOUS IMPROVEMENT PLAN

advertisement

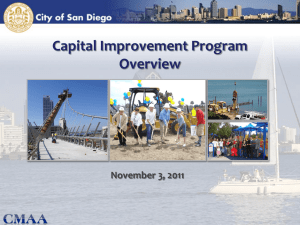

PAST PRACTICES

Assessment in the College was relatively dynamic during the period between accreditation visits. In the past, accreditation was largely the responsibility of an external consultant hired before the site visit. This practice, coupled with a number of changes in leadership, not surprisingly yielded a 2007 NCATE visit in which the College was cited for deficiencies in assessment. A number of faculty worked to improve assessments for the focused visit that occurred the following year, but the changes were made only at the programmatic level. The ongoing assessments that were conducted were largely compliance-driven: Title II, the Professional Education Data System (PEDS), the Colorado Department of Education (CDE), and Specialized Professional Associations (SPAs). The identification of assessment as an area needing improvement and the College's recognition that better assessments and a unit-wide assessment plan are needed to move forward are the primary factors that led to assessment becoming the focus of the Continuous Improvement Plan.

The College is currently undergoing a number of important transitions related to assessment. The most important is a movement from compliance-driven assessment with little long-term vision to an internally directed, program-improvement framework. The second is a movement from NCATE to CAEP, which brings not only a new use of assessment data but also new assessments and audiences. The third is a shift from the College's use of internally validated assessments to more nationally and externally validated measures. Understandably, the College is working to adjust to these differences and to integrate substantial cultural and practical shifts. Departments often functioned as autonomous units, with limited integration of assessment, limited data sharing, and few concrete steps toward shared goals.
This culture has begun to shift visibly under a newly revitalized Assessment and Accreditation Committee, supported by improved business practices, dedicated personnel and program support, and increased availability of data and new tools. Among these important steps are the creation of an integrated database capable of tracking students from inquiry to the workplace (Cherwell) and the addition of an Assessment and Operations Specialist who supports data flow and assessment needs. Finally, the University has begun to realize some of the benefits of its multi-million-dollar, system-wide student record system (ISIS), which is capable of supporting advanced business analytics. As a result of this evolution, the Assessment and Accreditation Committee and the College as a whole have begun to identify the key needs that must be met to support this change. Among these needs are regular reports and new assessment tools that can answer vital questions in a more timely and transparent manner. More importantly, they have begun to address broader questions of candidate achievement and program improvement.

These needs were highlighted by the convergence of two key events over the past 14 months. The first was the preparation of SPA reports. As part of the first step toward national program recognition and CAEP accreditation, the majority of programs submitted SPA reports. Because of the multitude of loosely integrated data streams and a limited assessment framework, the work proved exceptionally taxing. The difficulty in producing strong SPA reports highlighted the need for better tools, planning, and reporting to departments and program leaders. Nearly simultaneously, the University of Colorado Board of Regents initiated a program prioritization process based largely on the work of Dickeson (2010). As with the SPA process, the campus struggled to provide the required data and was not positioned to provide the program-specific metrics needed to benefit fully from the prioritization process. Although the College was able to provide program-specific data, doing so was a cumbersome and time-consuming process.

CURRENT STATE

Several key drivers of change accompany this shift in how data are collected and used. The most important is Colorado's entry into the use of value-added measures (VAM) tied to teacher effectiveness. While the legitimacy of tying student growth data to individual teachers continues to be researched, the State's data collection will provide critical data to Educator Preparation Programs (EPPs). An outgrowth of Senate Bill 191, this system not only requires districts to use measures of student growth for teacher retention but also, for the first time, links these data back to EPPs. When fully available, the data will allow EPPs to track the workforce participation and results of program completers longitudinally, in a systematic way never before possible. With this increase in data quality and availability will surely come increased scrutiny of EPPs to account for differences in workforce outcomes. As in other states, implementation with the Colorado Department of Education will take multiple iterations before it is complete.

The wealth of information provided through this framework is hard to overstate. These data will help inform the College about teachers' impact on student growth. Unlike other measures such as employer surveys, portfolios, or alumni surveys, the data returned to the College will provide a view of teachers as their districts see them. The data will shift the foundation of conversations between the College and school district superintendents and human resource directors toward a more outcome-centered view. Given the diversity of districts the College serves, from high-risk urban districts to exceptionally small rural ones, the College will be better able to address the needs of regional employers. The data will serve as a lever for measuring and improving program efficacy.
The second key driver is the rapid growth of both UCCS and the College. A number of new programs are entering the assessment system, often bringing new perspectives on the role and nature of assessment. These include UCCSTeach (a UTeach replication site), which had its first program completer in fall 2013; a new, cross-disciplinary Bachelor of Innovation in Inclusive Early Childhood Education (BI:IECE), started in fall 2013; a proposed Bachelor of Arts in Inclusive Elementary Education, to begin in 2015; and school-district-level cohorts in principal preparation and educational leadership, begun in 2013. The leaders of all these programs are eager for feedback on how to improve and grow them. As a result, there is a renewed and pressing need for candidate- and course-level data to inform questions about programs, as well as for an infrastructure that can support unprecedented levels of growth.

The college-level conversations that emerged over the past three years were another driver of change. Their impact is visible in departmental meetings, cross-department discussions, and the creation of new programs. The lessons from the SPA and program prioritization processes also contributed to this change. Together, these experiences highlighted the demand for quality, timely data and the need to build the requirements of accreditation into the regular operations of programs and departments. The first step in this process was identifying regular reports to inform practice. In conjunction with this need, the College has taken tangible steps to automate processes. The combination of the revitalized Assessment and Accreditation Committee and new data streams has laid the foundation for departments to work collaboratively on strategic investigations for regular program improvement.

A final critical component in the move toward an integrated assessment framework is the reconstitution of the College Advisory Board (CAB).
The CAB has members from a number of stakeholder groups beyond the College, including district and community representatives. As a result, the CAB provides an authentic audience for the work of the assessment committee in addition to serving as a source of feedback. Fully integrating the CAB into the assessment framework is a key component in the shift from predominantly internally generated assessments with largely internal audiences to a variety of internal and external assessments reported to a larger audience.

FUTURE STATE

To accomplish the critical changes needed to move assessment and accreditation initiatives forward and to institutionalize practices, the College has already taken a number of concrete steps. The first is regular reporting of data on key internal measures. These data will provide new and more complete final measures of program impact and will serve as a springboard for more detailed conversations and investigations into candidate quality and content knowledge. Because a large number of programs use a cohort model, the College is able to link course-taking patterns, year of completion, and instructor to groups as well as to individual students. Thus, if a program makes a change in a given year, it is reasonably easy to trace the effects through cohorts.

The biggest changes include incorporating new, externally validated measures into programs. Educator preparation programs will implement a number of new instruments in the near future. These include:
● Student Perception Survey (SPS): jointly developed by the Gates Foundation, Tripod Project, and Colorado Legacy Foundation. The instrument is directly aligned to the Colorado Teacher Quality Standards and measures multiple domains of student perceptions, including learning environment and classroom management.
● edTPA: a performance-based assessment of teaching developed at Stanford to predict candidates' classroom readiness. COE educators will also score candidates' work so that their predicted scores can be compared with those of nationally certified examiners. UCCSTeach, TELP, and ALP are piloting edTPA in 2014.
● Classroom Assessment Scoring System (CLASS): developed by the University of Virginia's Curry School of Education. The instrument's domains target the craft of teaching, and it will be administered multiple times to measure candidate growth. Both Special Education and UCCSTeach are currently piloting CLASS.
● Novice Teacher Project (The Novice Teacher Core Competencies: Impacts on Student Achievement and Effectiveness of Teacher Preparation): a collaborative statewide effort led by the University of Denver and piloted by a number of institutions. The participating EPPs have received data from the 2013 administration of the survey and anticipate second-year data in summer 2014.

Internally developed measures will remain an important part of the College's assessment framework. The College already uses an exit survey for program completers. Drawing on workforce data from SB 191, the College is building a number of new instruments, including a post-employment survey to be administered annually for the first five years after completers exit a program and an employer survey to be administered annually. The post-employment survey is being developed jointly with the Office of Alumni Relations to provide feedback for the University's strategic plan without creating redundant surveys. Paramount among the internally developed measures are the key assignments linked to SPA standards in each course throughout the College. Departments and programs are understandably at different places, culturally and technically, in this process. Leadership and Special Education are front-runners, having already implemented initial rounds of key assignments. These assignments are aligned to ELCC and CEC standards as well as the appropriate Colorado standards.
To facilitate this process, the key assignments and rubrics are incorporated into the Blackboard platform faculty already use. This also allows reporting to be automated and rubrics to be shared easily among faculty members teaching the same course. In the case of TELP and ALP, assignments are linked to the Colorado standards in both Blackboard and TaskStream.

Reflecting the collaborative nature of educator preparation, the College has initiated an annual dialogue with faculty in the College of Letters, Arts and Sciences (LAS) who teach in the relevant content areas. This dialogue is designed to serve several functions: disseminating SPA requirements regarding content knowledge; establishing a framework for sharing internally and externally collected data about candidates and completers; and identifying areas for improved articulation between the two Colleges.

The College has used a modified version of the 'Plan/Do/Study/Act' cycle outlined in the CAEP Evidence Guide to introduce its major College-wide initiatives. These include a multitude of departmental projects such as key assignment reporting, new courses, online offerings, and the implementation and adoption of external measures such as CLASS.

WHEN / WHAT / WHAT'S NEXT

Spring 2014 and 2014-15 Academic Year
● Full implementation of the student exit survey. What's next: departments use the data in reports to the A&A Committee, College Advisory Board, and Dean, and recommend changes to the instrument if needed.
● edTPA piloted. What's next: programs analyze the data to determine program weaknesses and strengths and make programmatic adjustments based on the findings.
● CLASS piloted. What's next: programs analyze the scores and process; cross-departmental faculty make recommendations for "next steps."
● CAEP BOE on-site visit, November 16-18. What's next: consider lessons learned from the visit and make changes if needed.
● Pilot of the Student Perception Survey (SPS). What's next: programs use the data to inform candidates of their performance and analyze the data for programmatic use.
● Full implementation of CLASS and edTPA. What's next: analyze and disseminate findings; make programmatic adjustments if necessary.
● Pilot employer survey (TELP, ALP, SELP, LEAD). What's next: analyze and disseminate findings; adjust the instrument and process; consider programmatic adjustments based on results.

2015-16 and 2016-17 Academic Years
● Pilot alumni survey (TELP, ALP, SELP, LEAD, school counselors). What's next: analyze and disseminate findings; adjust the instrument and process; consider programmatic adjustments based on results.
● First wave of SB 191 data. What's next: determine the accuracy of the data for each program; establish a process for analyzing and disseminating the data; hold a COE discussion of the data's implications.
● Initial analysis of and reflection on CLASS and edTPA. What's next: analyze and disseminate findings; consider programmatic adjustments based on results.
● Full implementation of the Student Perception Survey (SPS). What's next: analyze and disseminate findings; consider programmatic adjustments based on results.
● Unified COE employer survey (TELP, ALP, SELP, LEAD). What's next: finalize the instrument and conduct surveys; analyze and disseminate findings; adjust the instrument and process; consider programmatic adjustments based on results.
● Unified COE alumni survey (TELP, ALP, SELP, LEAD, school counselors). What's next: finalize the instrument and conduct surveys; analyze and disseminate findings; adjust the instrument and process; consider programmatic adjustments based on results.
● Second wave of SB 191 data; first year to begin examining growth. What's next: continue to monitor the data's accuracy; analyze and disseminate findings; determine patterns that need to be addressed; consider programmatic adjustments based on results.

2017-18 Academic Year
● Continuous improvement adjustments for CLASS and edTPA. What's next: continue to collect and analyze findings, disseminate results, and consider programmatic adjustments if needed.
● Initial analysis of and reflection on SB 191 data. What's next: continue to monitor the data's accuracy; analyze and disseminate findings; determine patterns that need to be addressed; consider programmatic adjustments based on results.
● Initial analysis of and reflection on the Student Perception Survey (SPS). What's next: analyze and disseminate findings; consider programmatic adjustments based on results.
● Full implementation of surveys (TELP, ALP, SELP, LEAD, school counselors). What's next: finalize the instruments and conduct surveys; analyze and disseminate findings; adjust the instruments and process; consider programmatic adjustments based on results.
● Ongoing monitoring of CLASS and edTPA. What's next: analyze and disseminate findings; consider programmatic adjustments based on results.

Ongoing Reporting
● Title II
● CAEP annual report
● SPA updates
● PLACE, PRAXIS, edTPA, course grades, enrollment, degree completions, placements for internships, faculty course loads, Faculty Report of Professional Activities (FRPA), budget data

CONCEPTUAL DIAGRAM OF THE ASSESSMENT FRAMEWORK

Figure 1 is a visual representation of the College's assessment and accreditation framework. It shows the flow of aggregated data from the College's support personnel to departments. Departments, and the programs within them, use the data internally, to generate an annual report to the Assessment and Accreditation Committee, and externally for reporting purposes such as SPA reports. The annual report is framed around the CAEP, CACREP, and SPA standards and is presented to the Assessment and Accreditation Committee at its February meeting.
The Assessment and Accreditation Committee provides feedback to departments, and the Associate Dean aggregates the reports into an annual report for the College. This report is presented to the CAB and the Dean at their April meeting. The Dean and Associate Dean are integral to this process at a number of junctures, providing feedback to departments on the Colorado, CAEP, CACREP, and SPA standards as well as on the College's own initiatives, such as its mission and vision and candidate learning outcomes. The Associate Dean also plays a central role in providing feedback on data quality and collection to support personnel throughout the process. The values and mission of the College are infused throughout all our work, from program design to courses and assessments, and ensure that innovation, equity, and inquiry are woven throughout programs. They are also considered when determining whether program completers are competent representatives of the College of Education as well as of their chosen professions. Among these internal measures are specific visions regarding equity, diversity, and social justice.

I. Preliminary Response to the Continuous Improvement Plan (CIP)

Use the Rubric for Evaluating the Capacity and Potential in the CIP to provide feedback on:

a. The EPP's capacity for initiating, implementing, and completing the CIP.
The EPP acknowledges the importance of assessment leadership in the process of continuous improvement and outlines basic information in the form of five key features related to the success of the CIP: the Assessment and Accreditation Committee, improved business practices, dedicated personnel, program support, and increased availability of data and tools. While the CIP provides a timetable for implementing assessments, collecting assessment data, and subsequently reviewing those data, it demonstrates only a moderate level of commitment by the EPP. The CIP stresses the importance of assessment and offers a narrative explaining how the EPP's culture of assessment has evolved since the previous accreditation visit, but more detailed information about the key personnel and resources needed to carry out the CIP is needed. The EPP's capacity for initiating, implementing, and completing the CIP is emerging.

b. The potential of the CIP to have a positive impact on the EPP and its candidates.
While the EPP emphasizes a shift in assessment culture, CIP goals are very loosely defined in the plan. The EPP is dedicated to moving from a compliance-driven to a program-improvement-driven framework with more nationally and externally validated measures, but the CIP offers limited information on how the EPP will achieve this goal. Specific goals for EPP programs would add focus and clarity to the plan. The potential of the CIP to have a positive impact on the EPP and its candidates is emerging.

c. The proposed use of data and evidence.
As evidenced by the CIP, the EPP plans to implement assessments and collect data systematically. The CIP does not, however, mention collecting and analyzing baseline and final data. Plans for baseline data and yearly objectives are needed beyond the planned implementation of assessments. Further, the CIP should include a plan to gather final evidence based on processes and outcomes and to move beyond piloted assessments. The proposed use of data and evidence is undefined.

d. The potential of the EPP to demonstrate a higher level of excellence beyond what is required in the standards.
The CIP offers a clear plan to implement current and piloted assessments and to collect assessment data regularly and systematically. By the conclusion of the 2017 academic year, the EPP will have implemented more than five assessment measures, allowing the collection of data related to candidate performance, impact on P-12 learners, and EPP operations.
The EPP includes planned instances of internal and external stakeholder review of assessment data and indicates that it will respond with program enhancements accordingly. The CIP should include a statement of how the CIP will lead to a higher level of excellence beyond what is required for most of the CIP's focal areas.

The CIP shows some promise; there are more than two elements at the emerging level and two elements that are undefined, needing clarification and/or enhancement. The CIP offers basic information on the personnel and resources expected to support successful implementation. Further, the CIP outlines basic plans to implement multiple measures. Assessment implementation appears to be planned, but no yearly indicators are identified. CIP goals are very loosely defined and do not address specific programs. There does not appear to be any mention of baseline or final data; while the CIP would not yet include final data because it projects multiple academic terms into the future, it does not mention any plans for comparing multiple data sets beyond statements about reviewing data annually. The EPP identifies its commitment to an evolving culture of assessment; it does not, however, include a statement of how the CIP will lead to a higher level of excellence beyond what is required for most of the CIP's focal areas.

FIGURE 1 Assessment and Accreditation Visual Representation [diagram not reproduced; elements: Dean; Associate Dean; A & A Committee (generates the annual report aligned to CAEP, CACREP, and SPA standards; provides structure and guidance for departments); CAB; departments of Curriculum & Instruction, Counseling, Leadership, and Special Education; Regular Reporting (Title II, CAEP Annual Report, CACREP, SPA updates, and program data); Data support (COE Technology Support, Finance and Budget Specialist, Office of Assessment and Accreditation, Faculty)]