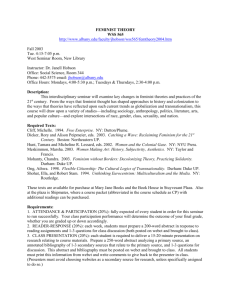

EECS 556 – Image Processing – W 09: Spectral Estimation and Wiener Filtering (Random processes)


• Random processes

• Properties of random processes

What is image processing?

Image processing is the application of 2D signal processing methods to images

• Image representation and modeling

• Image enhancement

• Image restoration/image filtering

• Compression

(computer vision)

Filtering methods: Wiener filter

• The Wiener filter (and its relatives) is formulated based on random process principles

• random variables, random vectors, random processes

• 2nd‐order properties, through LSI systems

• Wiener filter

Random variables

• A random variable can be thought of as a function mapping the sample space of a random process to the real numbers

• Discrete Random variables

– Bernoulli; binomial; Poisson

• probability mass function (PMF)

Poisson distribution

Random variables

• Continuous random variables:

– Uniform; Gaussian

• probability density function (pdf)

Properties of random variables

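• Mean or expected value: $\mu_X = E[X] = \int x\, f_X(x)\, dx$ (continuous case) or $\sum_k x_k\, p_X(x_k)$ (discrete case)

• Expectation of a function of a RV: $E[g(X)] = \int g(x)\, f_X(x)\, dx$

• Variance: $\sigma_X^2 = E[(X - \mu_X)^2] = E[X^2] - \mu_X^2$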
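The mean and variance can be checked numerically by replacing expectations with sample averages. The sketch below is only an illustration: the helper name `sample_moments`, the seed, and the distribution parameters are assumptions chosen for the example, not part of the lecture.

```python
import numpy as np

def sample_moments(samples):
    """Return the sample mean and the (biased) sample variance of a 1-D array."""
    samples = np.asarray(samples, dtype=float)
    mean = samples.mean()
    var = np.mean((samples - mean) ** 2)
    return mean, var

rng = np.random.default_rng(0)   # fixed seed, chosen only for reproducibility
lam = 4.0                        # hypothetical Poisson rate
poisson_draws = rng.poisson(lam, size=200_000)
gauss_draws = rng.normal(loc=1.0, scale=2.0, size=200_000)

# For a Poisson RV the mean and the variance both equal lam.
print(sample_moments(poisson_draws))
# For this Gaussian RV the mean is 1.0 and the variance is 2.0**2 = 4.0.
print(sample_moments(gauss_draws))
```

With this many draws the sample moments should land close to the theoretical values, which is why a Poisson random variable is described by a single parameter.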

2nd‐order properties of random variables

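• Correlation (summarizes information about the joint behavior of two random variables X and Y): $E[XY]$

• Covariance: $\mathrm{cov}(X,Y) = E[(X - \mu_X)(Y - \mu_Y)] = E[XY] - \mu_X \mu_Y$

• X, Y are independent ⇒ cov(X,Y) = 0

• If cov(X,Y) = 0, X and Y are uncorrelated

Random Vectors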

• A finite collection of random variables (over a common probability space) is called a random vector

• X = (X1, . . . ,Xn), where each Xi is a random variable

Random Vectors

• Cumulative distribution function (CDF) for a random vector:

• Probability density function (pdf)

Random vectors

• Mean or expected value

• Expectation of a function of a RV:

Random vectors

• n × n correlation matrix (length‐n RV)

• The n × n covariance matrix (length‐n RV)
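As a rough illustration of the two matrices, they can be estimated from a set of draws of the random vector. This sketch assumes the usual definitions (correlation matrix E[x x'] and covariance matrix E[(x − μ)(x − μ)'], with ' the Hermitian transpose); the function name and the test data are hypothetical.

```python
import numpy as np

def correlation_and_covariance(samples):
    """samples has shape (num_draws, n): one draw of the length-n random vector per row."""
    samples = np.asarray(samples)
    num_draws = samples.shape[0]
    mean = samples.mean(axis=0)
    # Entry [a, b] averages x_a * conj(x_b): an estimate of E[x x'].
    corr = samples.T @ samples.conj() / num_draws
    centered = samples - mean
    # Same average after removing the mean: an estimate of E[(x - mu)(x - mu)'].
    cov = centered.T @ centered.conj() / num_draws
    return mean, corr, cov

rng = np.random.default_rng(1)
mixing = np.array([[1.0, 0.0, 0.0],
                   [0.5, 1.0, 0.0],
                   [0.2, 0.3, 1.0]])          # hypothetical mixing matrix
offset = np.array([1.0, -2.0, 0.5])           # hypothetical mean vector
samples_demo = rng.standard_normal((5000, 3)) @ mixing + offset

mean, corr, cov = correlation_and_covariance(samples_demo)
# The two estimates differ exactly by the outer product of the sample mean.
print(np.allclose(cov, corr - np.outer(mean, mean.conj())))
```

When the mean is zero the two matrices coincide, which is why the zero-mean case is so convenient in later derivations.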

Example

• X has a Gaussian or normal distribution

Random processes

• An infinite collection of random variables is called a random process.

• In the context of imaging problems, random processes are often called random fields

• If x[n,m] denotes a random field, then for all [n,m], x[n,m] is a random variable defined over a common probability space.

Random processes

• The behavior of a random process is specified completely by all of its joint distribution functions.

• Gaussian random processes are specified completely by the first two moment functions

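• For Poisson random processes, a single moment function (the mean) suffices

• mean function: $\mu_x[n,m] = E\big[x[n,m]\big]$

• auto‐correlation function: $R_x[n,m;k,l] = E\big[x[n,m]\, x^*[k,l]\big]$

• auto‐covariance function: $K_x[n,m;k,l] = E\big[(x[n,m] - \mu_x[n,m])\,(x[k,l] - \mu_x[k,l])^*\big]$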

Wide‐sense stationary (WSS)

• A random process x[n,m] is called wide‐sense stationary (WSS) if:

– the mean function is a constant

– the auto‐correlation function depends only on the difference in spatial coordinates: $E\big[x[n,m]\, x^*[k,l]\big] = R_x[n-k,\, m-l]$

Wide‐sense stationary (WSS)

– A more compact notation:

Examples

• A zero‐mean WSS white random process with variance σ² has the following auto‐correlation: $R_x[n,m] = \sigma^2\, \delta[n,m]$

There is correlation only if the shift is 0
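This behavior can be seen on a single realization of white noise by estimating the auto-correlation with a spatial average. The sketch below assumes Gaussian white noise and periodic (wrap-around) boundaries so that the FFT can be used; the function name, image size, and σ are illustrative choices.

```python
import numpy as np

def circular_autocorrelation(x):
    """Estimate R_x[n, m] by averaging x[k+n, l+m] * conj(x[k, l]) over all (k, l),
    with periodic wrap-around at the image borders."""
    spectrum = np.fft.fft2(x)
    # The inverse DFT of |X|^2 is the circular auto-correlation sum.
    return np.fft.ifft2(np.abs(spectrum) ** 2) / x.size

rng = np.random.default_rng(2)
sigma = 2.0                                    # hypothetical standard deviation
x = sigma * rng.standard_normal((256, 256))    # one realization of zero-mean white noise

R = circular_autocorrelation(x).real
print(R[0, 0])                     # close to sigma**2
print(np.abs(R[0, 1]), np.abs(R[5, 7]))   # close to 0 for any nonzero shift
```

The estimate is large only at zero shift; the small residual values at other shifts come from using a finite image.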

Examples

Realization of the random process

Examples

Realization of the random process

Example: Random process X(t):

Is X(t) WSS?

Random processes in freq domain

• We are interested in representing the behavior of WSS random processes in the frequency domain

• DSFT of the auto‐correlation function

= power spectrum or power spectral density of the random process: $P_x(\omega_X,\omega_Y) = \sum_{n}\sum_{m} R_x[n,m]\, e^{-j(\omega_X n + \omega_Y m)}$

Random processes in freq domain

• Property: the auto‐correlation function of a WSS random process is Hermitian symmetric: $R_x[-n,-m] = R_x^*[n,m]$

• Is the power spectrum real or complex? Real
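A quick numerical check of why Hermitian symmetry gives a real spectrum, written in 1D for brevity. This is a sketch under assumptions: a hypothetical complex test signal, a circular sample auto-correlation, and the DFT standing in for the DSFT.

```python
import numpy as np

rng = np.random.default_rng(3)
# Hypothetical complex-valued 1-D test signal.
x = rng.standard_normal(64) + 1j * rng.standard_normal(64)

# Circular sample auto-correlation r[n] = mean over k of x[k+n] * conj(x[k]).
r = np.fft.ifft(np.abs(np.fft.fft(x)) ** 2) / x.size

# r_reversed[n] = r[-n], with the index taken modulo the signal length.
r_reversed = np.roll(r[::-1], 1)
is_hermitian = np.allclose(r_reversed, np.conj(r))

# The DFT of a Hermitian-symmetric sequence has a vanishing imaginary part.
spectrum = np.fft.fft(r)
max_imag = np.max(np.abs(spectrum.imag))

print(is_hermitian, max_imag)
```

The imaginary part is zero up to rounding error, and for an auto-correlation the real part is also non-negative.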

Random processes in freq domain

• Example: a zero‐mean WSS white random process with variance σ²

constant (flat) power spectrum

• WSS white random process with mean μ and variance σ²
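Both cases can be illustrated with an averaged periodogram, used here as a simple stand-in for the power spectrum. The sketch assumes Gaussian white noise on a small periodic grid; the function name and all parameter values are hypothetical.

```python
import numpy as np

def averaged_periodogram(fields):
    """fields has shape (num_realizations, N, M).
    Returns the average over realizations of |DFT|^2 / (N * M)."""
    fields = np.asarray(fields)
    n_pix = fields.shape[-2] * fields.shape[-1]
    # fft2 transforms the last two axes, so each realization is handled separately.
    return np.mean(np.abs(np.fft.fft2(fields)) ** 2, axis=0) / n_pix

rng = np.random.default_rng(4)
sigma, mu = 1.5, 0.5                         # hypothetical standard deviation and mean
zero_mean = sigma * rng.standard_normal((400, 32, 32))

P0 = averaged_periodogram(zero_mean)         # roughly flat at sigma**2
P1 = averaged_periodogram(zero_mean + mu)    # same, plus a spike at zero frequency

print(P0.mean(), sigma ** 2)
print(P1[0, 0], P0[0, 0])
```

Adding a nonzero mean changes only the zero-frequency bin, which matches the idea that a constant offset contributes an impulse at DC on top of the flat spectrum.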

Non‐negativity & non‐negative definiteness

• random variables:

– variances are non‐negative, because: integral of nonnegative quantities

Non‐negativity & non‐negative definiteness

• random vectors:

– The covariance matrix is non‐negative definite

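• A square N × N matrix A is called nonnegative definite iff $x' A x \ge 0$ for all $x \in \mathbb{C}^N$, where $x'$ is the Hermitian transpose of $x$ (the quadratic form $x' A x$ is a scalar)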
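One practical way to test this property is through the eigenvalues, since a Hermitian symmetric matrix is nonnegative definite exactly when none of its eigenvalues is negative. The sketch below is an assumption-laden illustration: the helper name, the tolerance, and the test matrices are not from the lecture.

```python
import numpy as np

def is_nonnegative_definite(a, tol=1e-10):
    """True if a is Hermitian symmetric and all of its eigenvalues are >= -tol."""
    a = np.asarray(a)
    if not np.allclose(a, a.conj().T):
        return False
    return bool(np.all(np.linalg.eigvalsh(a) >= -tol))

rng = np.random.default_rng(5)
draws = rng.standard_normal((1000, 5)) @ rng.standard_normal((5, 5))
K = np.cov(draws.T, bias=True)      # sample covariance matrix (5 x 5)

v = rng.standard_normal(5)
quad_form = v @ K @ v               # the scalar v' K v

not_nnd = np.array([[1.0, 2.0],
                    [2.0, 1.0]])    # symmetric, but its eigenvalues are -1 and 3

print(is_nonnegative_definite(K), quad_form >= 0)
print(is_nonnegative_definite(not_nnd))
```

The second matrix shows that symmetry alone is not enough: it cannot be the covariance matrix of any random vector.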

Why is this important?

Suppose x is a random vector with covariance matrix cov{x} = I

Goal: synthesize a random vector y with cov{y} = A

→ It turns out that y = S′x yields cov{y} = A, where S is a square root of A:

• Any (Hermitian symmetric) non‐negative definite matrix A can be decomposed as A = S′S

S is called a square root of A
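The synthesis step can be sketched numerically. This example assumes the decomposition is written A = S′S and uses a Cholesky factor as one possible square root (it requires A to be positive definite; other square roots exist). The target matrix A below is a made-up example.

```python
import numpy as np

rng = np.random.default_rng(6)
A = np.array([[4.0, 1.2, 0.5],
              [1.2, 2.0, 0.3],
              [0.5, 0.3, 1.0]])        # hypothetical target covariance (positive definite)

L = np.linalg.cholesky(A)              # lower triangular, with A = L @ L.conj().T
S = L.conj().T                         # so that A = S' S in the notation used here

x = rng.standard_normal((3, 100_000))  # each column is a draw with cov{x} = I
y = S.conj().T @ x                     # y = S' x

A_hat = np.cov(y, bias=True)           # sample covariance of the synthesized vectors
print(np.round(A_hat, 2))
```

The reason this works is that cov{S′x} = S′ cov{x} S = S′S = A, so the sample covariance should approach A as the number of draws grows.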

Non‐negativity & non‐negative definiteness

• random processes (1D):

– The autocorrelation function is a non‐negative definite function

• A function a[n,m] : Z × Z → C is called nonnegative definite iff $\sum_{n}\sum_{m} g^*[n]\, a[n,m]\, g[m] \ge 0$

for all functions g[n] for which the above summation converges

Non‐negativity & non‐negative definiteness

• random processes (2D):

– The autocorrelation function is a non‐negative definite function

• A function a[n,m;k,l] : (Z × Z) × (Z × Z) → C is called nonnegative definite iff $\sum_{n,m}\sum_{k,l} g^*[n,m]\, a[n,m;k,l]\, g[k,l] \ge 0$

for all functions g[n,m] for which the above summation converges

Why is this important?

• A nonnegative definite function also has a square root

• One can generate WSS random processes with any such autocorrelation function by filtering white noise
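A minimal sketch of this idea: filter unit-variance white noise with a filter h and compare the auto-correlation of the result with the correlation of h with itself. The 2 × 2 filter, the image size, and the periodic boundary handling are assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(7)
h = np.array([[1.0, 0.5],
              [0.5, 0.25]])              # hypothetical impulse response
N = 256
white = rng.standard_normal((N, N))      # zero-mean, unit-variance white noise

# Circular convolution via the FFT (periodic boundaries, for simplicity).
H = np.fft.fft2(h, s=(N, N))             # h zero-padded to N x N before the transform
colored = np.fft.ifft2(np.fft.fft2(white) * H).real

# Predicted auto-correlation for unit-variance input: h correlated with itself.
R_pred = np.fft.ifft2(np.abs(H) ** 2).real
# Sample (circular) auto-correlation of the filtered field.
R_est = np.fft.ifft2(np.abs(np.fft.fft2(colored)) ** 2).real / colored.size

print(R_pred[0, 0], R_est[0, 0])
print(R_pred[0, 1], R_est[0, 1])
```

In the frequency domain this says the output power spectrum is |H|² times the flat input spectrum, so choosing h as a "square root" of the desired auto-correlation shapes the noise accordingly.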

Pairs of random processes

• Consider both input and output images for LSI systems

• Analyze multiple random processes simultaneously

• Cross‐correlation function:

Properties of cross‐correlation function

• Linearity:

• Autocorrelation:

Properties of cross‐correlation function

• Hermitian symmetry:

• x[n,m] and w[n,m] are jointly WSS iff

– x[n,m] and w[n,m] are individually WSS

– their cross‐correlation function depends only on the difference in spatial coordinates

cross power spectrum

• DSFT of the cross‐correlation function:

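• Properties of cross power spectrum:

– Linearity

– Hermitian symmetry: $P_{xw}(\omega_X,\omega_Y) = P_{wx}^*(\omega_X,\omega_Y)$

Note: This doesn’t imply that the cross power spectrum is real, because the symmetry relates two different functions, $P_{xw}$ and $P_{wx}$.

Uncorrelated random processes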

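• x[n,m] and y[n,m] are uncorrelated random processes iff: $E\big[x[n,m]\, y^*[k,l]\big] = E\big[x[n,m]\big]\, E\big[y^*[k,l]\big]$ for all [n,m] and [k,l]

• If in addition at least one of the processes is zero mean: $E\big[x[n,m]\, y^*[k,l]\big] = 0$

• If both are zero mean: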

Random processes through LSI systems

• What happens to the cross‐correlation of jointly distributed random processes w[n,m] and x[n,m] after being passed through filters?

Random processes through LSI systems

• Let’s compute:

Random processes through LSI systems

• If the inputs x[n,m] and w[n,m] are jointly WSS

Spectral domain:

Special case 1

• Relate behavior of output to behavior of input.

• Thus: If zero‐mean input, then zero‐mean output
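The statement about the mean can be checked numerically. For a WSS input with constant mean, the output mean of an LSI filter is the input mean times the sum of the impulse response (the frequency response at zero frequency). The sketch assumes real-valued signals and circular convolution; the filter and the numbers are hypothetical.

```python
import numpy as np

def filter_field(x, h):
    """Circularly convolve a real 2-D field x with a real impulse response h via the FFT."""
    H = np.fft.fft2(h, s=x.shape)
    # Taking the real part is valid because x and h are assumed real.
    return np.fft.ifft2(np.fft.fft2(x) * H).real

rng = np.random.default_rng(8)
h = np.array([[0.5, 0.25],
              [0.25, 0.25]])                     # hypothetical filter, sums to 1.25
mu_x = 3.0                                       # hypothetical input mean
x = mu_x + rng.standard_normal((128, 128))

y = filter_field(x, h)
predicted_mean = mu_x * h.sum()                  # input mean times the sum of h
print(y.mean(), predicted_mean)

# Removing the mean from the input gives a zero-mean output.
y0 = filter_field(x - x.mean(), h)
print(abs(y0.mean()))
```

The second print shows a value at rounding-error level, in line with "zero-mean input, zero-mean output".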

Special case 2

Assume:


Next lecture

• Spectral Estimation

• Wiener Filtering