Estimating the Empirical Lorenz Curve in the Presence of Error

advertisement

Estimating the Empirical Lorenz Curve in the Presence of Error

Chaya S. Moskowitz

∗

Colin B. Begg

Department of Epidemiology and Biostatistics

Memorial Sloan-Kettering Cancer Center

307 East 63rd Street, 3rd Floor, New York, NY 10021 U.S.A.

∗

e-mail: moskowc1@mskcc.org

1

Abstract

It is frequently the case that the measure of interest used to rank experimental units when

estimating the empirical Lorenz curve is subject to random error. This error can result in an

incorrect ranking of experimental units which inevitably leads to a curve that exaggerates the

degree of variation in the population. We will explore this bias and discuss several widely available statistical methods that have the potential to reduce or remove the bias in the empirical

Lorenz curve. The properties of these methods will be examined and compared in a simulation study in order to determine which analytic strategies have sufficiently good performance

characteristics to be recommended for widespread use.

2

1

Introduction

Both the Lorenz curve and Gini coefficient were originally proposed as methods for studying the

concentration of income in a population. While both tools have been primarily utilized in the

economic and social sciences over the last century, in recent years these methods have also seen

application in other areas such as medical and health services research. As use of the Lorenz curve

and Gini coefficient has expanded into these other fields, the tools have been applied to data that

are increasingly complex. With this added level of complexity, the possibility of error or bias in

the estimation of the Lorenz curve and Gini coefficient may arise. This potential bias is frequently

ignored in the applied literature.

As is described in further detail below, the estimation of both the Lorenz curve and the Gini

coefficient involves ranking the units of observation on the basis of some quantity of interest and

then estimating cumulative proportions. When there is error or variation in the measurement of the

quantity of interest, an analysis that does not account for this error or variation may incorrectly rank

the units and result in biased estimates for the Lorenz curve and Gini coefficient. This situation can

arise in many different circumstances. For instance, the Lorenz curve has traditionally been used

to study the distribution of income in a population. In this case depending upon the study design,

there may be variation in the measurement of income from a number of possible sources including

error in the reported income (i.e. measurement error) and variation attached to estimating income

if the income must be estimated for each member of the population.

To our knowledge, this bias has been explicitly recognized by a limited number of investigators.

Lee (1999) compared the potential for bias in this setting with the inherent bias in estimating

predictive accuracy for a model using the same data that was used to construct the model, an

issue that has been studied widely by statistical theorists. Suggesting bootstrapping to correct

the bias, Lee proposed using the bootstrap samples to reorder the experimental components while

3

using the original data to estimate the Gini coefficient. Pham-Gia and Turkkan (1997) proposed a

parametric approach when using a Lorenz curve to study inequality in income distributions when

the incomes are measured with error, and in principle this has the potential to resolve the bias

issue. In their formulation, they reason that true income = observed income + error and model

the observed income with a parametric distribution and the error with a separate parametric

distribution. Their paper contains theoretical results for obtaining the distribution of the true

income and the corresponding Lorenz curve.

In this manuscript we are particularly interested in the bias that may occur in a specific type

of study design that can occur frequently in practice. This design is in some sense a two-stage or

nested design where the members of the population, or experimental units, are the primary units

of analysis, but within each experimental unit there are multiple observations that are aggregated

to form the outcome whose distribution is of interest. For example, using Lorenz curves and

Gini coefficients Praksam and Murthy (1992) look at couples within states in India to explore the

acceptance of family planning methods for different levels of literacy. Tickle (2001) divides the

North West Region of England into market areas and studies children in these areas who were

included in the 1995/6 NHS epidemiology survey to examine the distribution of dental caries using

Lorenz curves and Gini coefficients. Elliot et al. (2002) study geographical variations of sexually

transmitted diseases across regions of Manitoba, Canada by looking at individuals within each

region who have a reported infection.

We are interested in “nonparametric” methods for estimating a Lorenz curve and Gini coefficient

in this type of data configuration, where the methods are nonparametric in the sense that the

ranking of the experimental units is unconstrained. A parametric approach such as that suggested

by Pham-Gia and Turkkan may be too restrictive in practice as well as difficult to easily apply to

complex data. While the bootstrap approach is a nonparametric method, we are skeptical that it

4

will adequately correct for the bias in the types of data often encountered in practice. We will,

however, discuss and evaluate this approach in more detail below. In addition to the bootstrap,

we consider two other approaches using random effects models for estimating the Lorenz curve and

Gini coefficient.

In the next section, we introduce a health services application that was our motivation for

undertaking this work. In addition to motivating the problem, we will use this dataset to illustrate

the different methods discussed below. In Section 3, we define the Lorenz Curve and Gini coefficient

and discuss the potential bias in more detail. In Section 4, we present several different analytic

strategies that can be used to estimate the Lorenz Curve and Gini coefficient. Section 5 contains

the results of a simulation study evaluating these methods. In Section 6 we return to the health

services application. We make our concluding remarks in Section 7.

2

Motivating Application

In a recently published paper, Bach et al. (2004) explore whether the quality of health care received

by patients in the United States is associated with race. They study a sample of Medicare patients

together with the patients’ primary care physicians in order to assess if black patients receive a

lower quality of care than do white patients. Their analysis involved several different components,

including a Lorenz curve type analysis studying whether patient visits made by black patients to

their physicians were concentrated among a select group of physicians. Specifically, they examine

a sample of 5% of black and white Medicare beneficiaries who were treated during 2001 by 4,355

primary care physicians who participated in the 2000-2001 Community Tracking Study survey.

The distribution of the black patients across these physicians was characterized by a Lorenz curve

in which the cumulative proportion of black patient visits was plotted against the cumulative

proportion of physicians in the population. They constructed a curve by ranking the physicians on

5

the basis of the proportion of blacks in the physician’s profile.

In order to understand the potential problem, notice that an empirical ranking of these observed

proportions tends to exaggerate the level of concentration or mal-distribution in the curve, since

random variation in the “observed” profile of each physician would lead to the result that the

relative rankings of some physicians would be incorrect, yet the empirical ranking chosen for the

curve necessarily maximizes the empirical mal-distribution. In this context, an “incorrect” ranking

would occur under the following circumstances. Let us say physician A typically treats patients of

whom 10% are black while physician B typically has 7% black patients in his/her practice. However,

the actual proportions of their patients who made office visits during 2001, submitted a suitable

claim to Medicare, and were included in the 5% Medicare sample, were 9% and 11% respectively.

That is, physician A had slightly fewer blacks than normal and physician B had slightly more, and

as a result physician A is incorrectly ranked lower. In these circumstances random error in the

outcome (proportion of black patients) for each experimental unit (physician) necessarily leads to

an incorrect ordering of the units which alters the curve in the direction of an exaggerated estimate

of the degree of concentration. Our goal in this manuscript is to carefully study the properties of

several analytic methods that could be used to correct this bias.

3

Lorenz Curve and Gini Coefficient

To study the issue further we first establish some notation and define the empirical Lorenz curve

and Gini coefficient. We suppose that N observations are dispersed among n experimental units

and represent the number of observations for each experimental unit as m k , k = 1, ..., n. Interest lies

in studying the concentration or distribution of a feature of the N observations, say Y , across the

n members of the population. Let Xk denote the value of some summary of the Y jk , j = 1, ..., mk ,

for the k th experimental unit. In terms of our example, N is the number of patients in the sample

6

seen by the n primary care physicians. Y is a binary indicator of whether or not a patient is black,

and Xk =

P mk

j=1 Yjk

is the number of black patients in the profile of the k th physician.

We obtain the empirical Lorenz curve by first ranking the n units using the X k . We write these

ordered values as X(1) , ..., X(i) , ....X(n) , using i to index the experimental units after they have

Pi

X(l)

. The empirical Lorenz curve is then a plot of the set of points

been ranked. Let Li = Pl=1

n

X

l=1

(l)

{ ni , Li ; i = 1, ..., n}. The horizontal axis represents the cumulative proportion of the population

while the vertical axis represents the cumulative proportion of X. In the context of our motivating

example where the mk vary, a more appropriate choice might be to plot the cumulative proportion

of the {mk }, that is the cumulative proportion of patients seen by the physicians, along the x-axis.

This approach is somewhat different that the traditional empirically estimated Lorenz curve. For

the purposes of simplicity in this paper, we plot { ni } along the x-axis but we note that in some

cases obtaining a meaningful measure of inequality may require careful consideration of the quantity

plotted.

The Gini coefficient is estimated from the same quantities as GC = 1−

Pn−1

i=0

i

( i+1

n − n )(Li+1 +Li ).

It is a measure of the distance of the Lorenz curve from the 45 ◦ degree line and often accompanies

the Lorenz curve when analysis results are presented.

In the situation where there is perfect equality in the distribution so that Y is evenly dispersed

among the experimental units, X1 = Xk ∀k, the Lorenz curve will fall on the 45 ◦ degree line

connecting the origin (0,0) of the unit square to the top right corner (1,1), and GI = 0. On the

other end of the spectrum when all of the outcome is contained in a single member of the population

(maximum inequality in the distribution), the Lorenz curve will lie along the bottom and right side

of the unit square forming an equilateral triangle with the 45 ◦ degree line and GI = 1. In practice,

the Lorenz curve lies somewhere between these two extremes.

The bias about which we are concerned arises because the quantities used to calculate the

7

curve must be estimated from the data. Specifically, in many applications we observe only an

estimate, X̂k , of the true unobserved Xk , where each of these estimates is based on a limited

sample and/or limited time period of study, characterized by m k . Thus, while the empirical Lorenz

curve is estimated as described above using the { X̂k }, the true curve involves a ranking of the

data according to the Xk . The problem is that when X̂k 6= Xk not only will the estimated relative

frequencies {Xk } be subject to random error, but also their ordering in the construction of {L i }

will be redistributed erroneously in some cases. That is, the ranking of the data which is now based

on X̂k may be incorrect. Members of the population with values of X̂k that are larger than their

corresponding true Xk will be ranked higher than they should be while members of the population

with values of X̂k that are smaller than their corresponding true X k will be ranked lower than they

should be. Consequently the empirically estimated Lorenz curve will fall below the true Lorenz

curve and the Gini coefficient will be overestimated, causing the distribution of X to appear more

concentrated than it truly is. In more precise terms, if { L̂i } are calculated using an ordering based

X̂k

} then we are saying that L̂i ≤ Li ∀i.

on the denotes ordered according to { m

k

Because of this systematic bias, we are interested in methods that will produce bias-corrected

Lorenz curves. We next propose several methods that could be used for this purpose and describe

them in detail. In these approaches we focus on adjusting the estimates of the proportions p k =

Xk

mk ,

the proportion of observations with Y = 1 that belong to the k th experimental unit in recognition

of the fact that the distribution of emprical proportion will always have greater variance that

the distribution of true proportion from which they are generated. The estimates X̂k = p̂k mk ,

k = 1, ..., n, are then used to construct the Lorenz curve and estimate the Gini coefficient.

8

4

Analytic strategies for unbiased estimation

4.1

Random effects with logistic regression

The first method we consider is a random effects model. By using programs that are available in

standard statistical software packages, we can estimate a random effect for each experimental unit

and use this random effect to estimate the p k . In this approach we consider the model:

E[Yjk ] = logit(pk ) = θ + γk ,

j = 1, ..., mk ; k = 1, ..., n

Here the Yjk are assumed to be independent Bernoulli variables conditional on the experimental

unit, denoted by the subscript k. θ is a fixed effect common to all experimental units, and the {γ k }

are the random effects. In order for the random effects to be identifiable, we need to postulate a

model for their distribution. We follow convention and assume that γ k , k = 1, ..., n, are independent

observations from a Normal(0,σ 2 ) distribution. A random effects model of this nature induces

“shrinkage” in the random effects estimators for the empirical estimates. The effect is to reduce

the spread of the proportions between physicians and consequently reduce the Gini coefficient and

raise the height of the Lorenz Curve toward the 45 o line. The degree of shrinkage should be inversely

related to the sample size of the individual experimental units.

The parameters in this model can be estimated using previously developed methods for nonlinear

mixed models. A number of such methods have been proposed and are available in statistical

software packages. (Pinheiro and Bates (2000) and Davidian and Giltinan (1995) are two references

that contain overviews of these methods.) In this manuscript we use a numerical quadrature

approach to integrating the likelihood of the data over the random effects. This approach is easily

implemented for reasonably sized datasets in the software SAS through the PROC NLMIXED

program.

9

4.2

Normal random effects analysis

Although the preceding approach is conceptually appealing, it becomes computationally burdensome in large datasets. Indeed, it is not feasible to complete this analysis using the entire 80,000

physicians in the Community Tracking Study. Historically, it has often been shown that analyses

of binary data in large samples can be accomplished by simply using the more available and computationally less burdensome normal mixed models, in effect treating each binary outcome as if it

were a normal variate. We include this model for its accessibility and computational speed, and

compare its results with the preceding logistic regression model in our simulations. In this model

yjk = θ + γk + jk

where γk represents the independent normal random effect for the k th physician and the jk are the

error terms for the j th patient of the k th physician. Since the variance of this term for the patients

of the k th physician really reflects the variance of the binomial variate X k , we must perform a

weighted regression, with weights

1

mk

to more appropriately characterize the contributions of each

patient.

Multiple standard statistical software packages provide programs that can be used to estimate

the parameters in this model. These packages use restricted maximum likelihood to estimates the

variance parameters and solve the mixed model equations to obtain estimates of the fixed and

random effects.

4.3

Bootstrap

The bootstrap (Efron and Tibshirani, 1993) is a nonparametric method that is frequently employed

by statisticians. Very generally, data elements are randomly sampled with replacement from the

entire dataset to create a “bootstrap” sample, and the statistic of interest is calculated using this

bootstrap sample. This process is repeated numerous times in order to determine the distribution

10

of the statistic and associated information (such as standard errors, confidence intervals, and bias

for example).

In the context of the Lorenz curve, Lee (1999) suggests using the bootstrap. Considering a

data configuration that is simpler than the one in which we are interested, when there is only one

observation per experimental unit (not multiple observations that need to be aggregated within

unit) and the observation is continuous Lee proposes drawing bootstrap samples of experimental

units, reordering the data based on the bootstrap samples, and then using the original data to

calculate summary measures of the Lorenz curve. We plan to evaluate a slight modification of

this method, where we adapt Lee’s approach to our more complex data configuration by drawing

a separate bootstrap sample of observations for each experimental unit, reordering the experimental units based on the estimated {Xk } from the bootstrapped samples, and then estimating the

components of the Lorenz curve and Gini coefficient using the original data.

Specifically, for this approach we draw bootstrap samples of observations within experimental

(b)

units. With this bootstrap sample we compute p k , the proportion of observations in the bootstrap

sample for the k th unit that have the feature Y . We rank the experimental units according to

(b)

(b)

the pk . We then use the original data to calculate {L i }, arranged in the order specified by the

(b)

bootstrap sample. We repeat this process B times to obtain B values of L i . Separately for each

i, we take the mean of these values to obtain L B

i =

1

B

PB

b=1

(b)

Li , the bootstrap estimate of the ith

ranked contribution to the Lorenz curve. Plotting { ni , LB

i ; i = 1, ..., n} gives the bootstrap estimate

of the Lorenz curve and the Gini coefficient is similarly estimated using the L B

i .

A potential problem that we forsee with this approach is that it will not work well when there

are a large number of zeros in the data for experimental units with possibly small but nonzero

probabilities. In this case the bootstrap samples would continually estimate these probabilities as

zero and not correct for the bias.

11

5

Simulation Study

We conducted a simulation study to (1) assess and characterize the magnitude of bias present in

the empirically estimated Lorenz curve and Gini coefficient and (2) to evaluate the ability of the

previously discussed methods to produce bias-corrected estimates of the Lorenz curve and Gini

coefficient. We first describe how we generated data and then follow this description with the

simulation results.

5.1

Simulation of Data

We specified N and n and studied equal cluster sizes, m 1 = mk ∀k, and unequal cluster sizes

generated from a multinomial distribution with probabilities α k ranging from 1/n, ..., 1, but scaled

by the sum of these probabilities,

P

k

αk , so they added to one, in order to study potential bias

when there is wide variation in cluster sizes. We generated a variable Z from a Normal(µ, 1)

distribution where µ was prespecified, and then obtained probabilities p ∗k = Φ(z) where Φ is the

cumulative distribution function for the standard normal distribution. We further specified a desired

π = P (Y = 1) and obtained the pk = P (Yk = 1) by scaling the p∗k so that the overall proportion of

observations with Y = 1 across all simulated experimental units was equal to a chosen π. The {X k }

were then generated from a Binomial(m k , pk ) distribution. By generating data in this fashion, we

were able to control the degree of mal-distribution/concentration by changing the value of µ.

In the simulations shown here, we fixed N = 5000 and π = 0.1. We varied µ so as to obtain

distributions with very little inequality, GC = 0.05, with moderate inequality, GC = 0.50, and with

high inequality, GC = 0.9. We also varied the parameter n using values n = 50, 100, 500 which

resulted in average cluster sizes of 100, 50, and 10. For each scenario we simulated 1000 datasets

and report the mean values of the estimated Gini coefficients across the 1000 simulations.

In running these simulations, we found that in order to obtain convergence of the PROC

12

NLMIXED program for the logistic regression, we needed to constrain the estimate of the variance of the random effects to be greater than zero.

5.2

Results

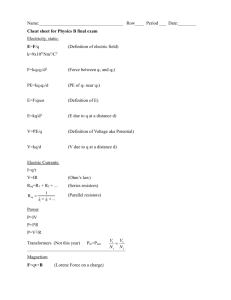

Figure 1 gives examples of the Lorenz curves generated from data simulated with equal cluster sizes.

In each graph a single simulated dataset is plotted together with curves produced from the different

analytic strategies below. Figure 1(a) depicts data with little inequality. The curve drawn using

the “true” probabilities (which would be unobserved in a real application), lies very close to the 45 ◦

degree line. The empirically estimated Lorenz curve falls substantially below this line suggesting

even greater inequality. The curve drawn using estimates from the logistic regression approach

lies almost directly on top of the true curve, producing the best estimate in this dataset. The

normal regression approach is also close to the true curve, but appears to slightly underestimate

it. The curve resulting from using the bootstrap estimates lies in between the true curve and the

empirically estimated curve, not fully correcting the bias. Figure 1(b) shows data with a moderate

degree of inequality. Here the empirically estimated curve again lies well below the true curve. In

this dataset, though, both random effects approaches clearly overestimate the curve suggesting less

inequality than in truth. The Lorenz curve drawn using the bootstrap approach is the closest to

the true curve and appears to provide a relatively reasonable approximation. Figure 1(c) illustrates

the situation when there is a high degree of inequality in the data. Here all the estimated curves

lie along the true curve indicating little bias in this case. Overall, these plots show that there is

the potential for bias when estimating the Lorenz curve and suggest that the bias decreases as

the inequality in the data increases. It is difficult to draw conclusions, however, based on a single

simulation, so to further characterize the bias and different methods of estimation we turn to results

summarizing the Gini coefficient across multiple simulations.

13

0.8

0.6

0.4

0.0

0.2

cumulative proportion of X

0.8

0.6

0.4

0.2

0.0

cumulative proportion of X

1.0

(b) GI=0.5

1.0

(a) GI=0.05

0.0

0.2

0.4

0.6

0.8

1.0

0.0

cumulative proportion of population

0.2

0.4

0.6

0.8

1.0

cumulative proportion of population

0.8

0.6

0.2

0.4

Truth

Empirical

Logistic regression

Normal regression

Bootstrap

0.0

cumulative proportion of X

1.0

(c) GI=0.9

0.0

0.2

0.4

0.6

0.8

1.0

cumulative proportion of population

Figure 1: Examples of Lorenz curves for data simulated with equal cluster sizes and n = 500.

Table 1 and Table 2 contain these results. Looking first at the empirically estimated Gini

coefficients in both tables, we see that the magnitude of bias depends at least partially on the

cluster size. The bias can be determined by comparing the estimated GC with the known value in

the first column. Thus, in the first row, the logistic and normal GC estimates are 0.03 and 0.02,

respectively, lower than the true value of 0.05. By contrast the emprical (0.18) and bootstrap (0.13)

values clearly overestimate the true GC. The larger the cluster size, the less bias there is. The bias

is always smallest when the average cluster size is 100 and largest when the average cluster size is

ten. For the case when GC=0.05, the difference in bias between the situations where the average

14

cluster size is 100 and where the average cluster size is 10 is quite considerable (ie, for estimating

GC=0.05 we find an empirically estimated GC=0.18 versus 0.50 from Table 1 and GC=0.37 versus

0.58 from Table 2). We also see that there is a very large degree of bias corresponding to a small

degree of inequality in the population. The bias gets smaller as the inequality increases. If we

compare the degree of bias for a fixed average cluster size across the different scenarios this trend

becomes evident. For example, from Table 1 where the cluster size is 100, the bias is approximately

0.13 (0.18-0.05) for data with little inequality and GC=0.05, approximately 0.02 (0.52-0.50) for

data with moderate inequality and GC=0.50, and approximately 0.00 (0.90-0.90) for data with

high inequality and GC=0.90. Similarly, in Table 2 where the average cluster size is 100, the bias

is approximately 0.32 (0.37-0.05) for data with little inequality and GC=0.05, approximately 0.10

(0.60-0.50) for data with moderate inequality and GC=0.50, and approximately 0.02 (0.92-0.90)

for data with high inequality and GC=0.90. The pattern of bias at the other cluster sizes is the

same. To understand why the bias decreases as the inequality increases, consider the following

small example illustrated in Table 3.

Suppose there are n = 25 experimental units and N = 200 equally distributed observations

across the experimental units (ie. equal cluster sizes with 8 observations each) with π = 0.1. We

consider first the situation where there is very little inequality in the distribution of the p k ’s across

the population. This scenario is represented in the first four columns of Table 3 where GC is

approximately 0.05. Because the cluster sizes are equal and there is little inequality in the data,

we expect all the pk s to have similar values reflecting that about 10% of the observations for each

experimental unit have Y = 1. The ranking for what would be the true, unobserved Lorenz curve

ranks the units based on the Xk = mk pk , which in this case is equivalent to ranking the units based

on the pk since the mk are identical across all units. In this example we have four distinct values

of pk and the order in which the units would be ranked based on those values is shown in the third

15

column of the table. The empirically estimated Lorenz curve requires ranking the experimental

units based on the X̂k which in this case is equivalent to ranking the units based on the p̂ k . There

are a relatively large number of experimental units that have p̂ k equal to zero. Notice that because

there are a small number of observations for each unit and on average 10% of these observations

have Y = 1, simply by chance many of the experimental units will have no observations with Y = 1

yielding p̂k = 0. The ranking of the units based on the p̂ k is shown in the fourth column. It is

clearly very different from the ranking based on the p k . The rank correlation coefficient comparing

{pk } with {p̂k } is 0.06. This difference is due primarily to the variation in estimating values of {p k }

that are very similar to one another when the estimate is based on a small number of observations.

Consider now a similar data structure except with a high degree of inequality in the data. This

scenario is represented in the last four columns of Table 3. Here most experimental units will have

no observations with Y = 1. The 10% of the observations with Y = 1 will be concentrated in a

few experimental units which is reflected by the vast majority of the values of p k equal to zero

with only three exceptions, two of which have only observations with Y = 1 (p k = 1). When the

observations for an experimental unit are consistently Y = 0 (p k = 0) or Y = 1 (pk = 1), then

essentially there is no room for error in the estimate of the probability. In these cases we will

always have p̂k = pk which is exactly what occurs in this dataset. In fact, we see only one unit with

p̂k 6= pk , but the differences in the probabilities does not affect the ranking. Looking at the last two

columns in the table, we see that the rankings are identical. Although this example is a somewhat

simplified situation of what occurs in larger datasets, the general idea is the same. When there

is little inequality in the population, there is the potential for variation in the estimates of the p k

and Xk and even the slightest bit of variation can change the ranking of the experimental units.

When there is a large degree of inequality in the populations, there is less potential for variation in

the estimates of the pk and Xk . Most of the Xk will take values at the two extremes. Most of the

16

variation in estimating these quantities will occur in the few experimental units that do not have

extreme values, but these units will be few in number and so the correct ranking will still be fairly

evident.

Returning to Table 1 and Table 2, also notice that the magnitude of the bias is larger for unequal

cluster sizes, i.e. when there is variation in the m k . Variation in the number of observations for

each experimental unit is associated with greater bias because some experimental units may have

very small mk leading to potentially large error in estimating the p k .

Looking now at the different methods proposed to correct the bias, we look first at Table 1. In

this situation, not surprisingly the bootstrap does not work well when there is very little inequality in

the distribution. Recall from the example in Table 3 that in this situation the observed values, { X̂k },

may contain many zeros when the {Xk } are small but not equal to zero. Drawing a bootstrap sample

for an experimental unit with no observations that have Y = 1 will consistently yield bootstrap

samples with X̂k = 0. The bootstrap approach is limited by this fact. For moderate inequality and

a large degree of inequality in the data, it appears to work better. For the situation with equal

cluster sizes, however, the logistic regression with random effects, while slightly underestimating

the true Gini coefficient, appears to be the most reliable estimate across all levels of inequality in

the data. The normal regression with random effects yields estimates that are very close to the

logistic regression approach and so also appears to be a viable option.

In Table 2, however, we see that when there is variation in the m k , none of the estimators

produce an unbiased estimate. The random effects approaches, which work reasonably well when

the cluster sizes are equal, do very little for correcting the bias. In the scenarios we considered

here, there can be many experimental units with extremely small cluster sizes. Recall that we

incorporate the estimates of the random effects in computing the Lorenz curve. It is possible that

when the sample sizes are small the estimates of the random effects are not very good resulting in

17

the poor properties seen in Table 2. The bootstrap approach seems to have the least amount of

bias in this situation, and works fairly well when GC=0.50 and GC=0.90. However, the Lorenz

curve estimated using the bootstrap when GC = 0.05 is still highly biased for the same reasons

explained in the preceding paragraph. We point out that in this set of simulations we have looked

at a rather extreme situation when there is a very high degree of variation in the cluster sizes which

may exaggerate what is typically seen in practice, particularly for the larger average cluster sizes..

For example, when the average cluster size is 100 we have cluster sizes ranging from 1 to over 250.

In future work we plan to explore the properties of these estimates when the variation in cluster

sizes is not so large.

6

Health Care Data

For this example we analyzed data on a random sample of 500 primary care physicians. There were

4892 total patient visits made to these 500 physicians. Three hundred and fifteen of the visits (6%)

were made by black patients. The number of patient visits in the profile for a physician ranged from

one to 58. The average number of patient visits to each physician was approximately 10 visits with

a variance of around 77. Figure 2 shows the estimated Lorenz curves for these data. Because of the

large number of zeros observed for the X̂k , as evidenced by the flat line in the empirically estimated

curve along the x-axis, we suspect that the empirical estimate is may be exaggerating the degree

of inequality. The empirically estimated Gini coefficient is 0.83. The other three analytic methods

yield Gini coefficients near 0.75 which does reduce the estimated degree of inequality somewhat.

18

1.0

0.8

0.6

0.4

0.2

0.0

cumulative proportion of black patient visits

Empirical

Logistic

Normal

Bootstrap

0.0

0.2

0.4

0.6

0.8

1.0

proportion of physicians

Figure 2: Lorenz curves showing the concentration of black patient visits within a random sample

of 500 physicians.

7

Discussion

In this manuscript we have demonstrated that ignoring variation in the outcome may lead to substantial bias in the empirically estimated Lorenz curve and Gini coefficient causing overestimation

of the degree of concentration. This bias is most profound when there is relative equality in the

distribution.

When cluster sizes are equal, a random effects approach appears to produce estimates of the

Lorenz Curve and Gini coefficient that are relatively close to the true values. While in many

applications cluster sizes vary, equal cluster sizes could arise in a designed study where it is specified

a priori to take the same number of measurements for each experimental unit or when there are

19

only a limited number of sources from which to make observations (such as annual survey results

for a five year period).

Unfortunately, when cluster sizes are not equal, none of the approaches results in satisfactory

estimates of these quantities. There are certainly other methods that could be used for this purpose

which might produce better estimates. For example, estimating the probabilities from a Betabinomial hierarchy might work well here. A Bayesian random effects model might also perform

well. Further work in this area is warranted.

20

References

[1] P. B. Bach, H. H. Pham, D. Schrag, R. C. Tate, and J. L. Hargaves. Primary care physicians

who treat whites and blacks. New England Journal of Medicine, 351:575–584, 2004.

[2] R.-K. R. Chang and N. Halfon. Geographic distribution of pediatricians in the United States:

an analysis of the fifty states and Washington, DC. Pediatrics, 100(2):172–179, 1997.

[3] M. Davidian and D. M. Giltinan. Nonlinar Models for Repeated Measurement Data. Chapman

and Hall, 1995.

[4] B. Efron and R. J. Tibshirani. An Introduction to the Bootstrap. Chapman and Hall, New

York, 1993.

[5] L. J. Elliot, J. F. Blanchard, C. M. Beaudoin, C. G. Green, D. L. Nowicki, P. Matusko, and

S. Moses. Geographical variations in the epidemiology of bacterial transmitted infections in

manitoba, canada. Sexually Transmitted Infections, 78(Suppl 1):i139–i144, 2002.

[6] J. L. Gastwirth. A general definition of the Lorenz curve. Econometrica, 39:1037–1039, 1971.

[7] C. Gini. Sulla misura della concetrazione e della variability dei cartteri. Atti del Reale Instituto

Veneto di Scienze, LXXII:1203–1248, 1914. lettere ed Arti.

[8] Y. Kobayashi and H. Takaki. Geographic distribution of physicians in Japan. Lancet, 340:1391–

1393, 1992.

[9] W. C. Lee. Probabilistic analysis of global performances of diagnostic tests: interpreting the

Lorenz curve-based summary measures. Statistics in Medicine, 18:455–471, 1999.

[10] M. O. Lorenz. Methods of measuring the concentration of wealth. Publications of the American

Statistical Association, 9(70):209–219, 1905.

21

[11] T. Pham-Gia and N. Turkkan. Change in income distribution in the presence of reporting

errors. Mathematical and Computer Modelling, 25(7):33–42, 1997.

[12] J. C. Pinheiro and D. M. Bates. Mixed-Effects Models in S and S-Plus. Springer, 2000.

[13] C. P. Prakasam and P. K. Murthy. Couple’s literacy levels and acceptance of family planning

methods: Lorenz curve analysis. Journal of Institute of Economic Research, 27(1):1–11, 1992.

[14] M. Tickle. The 80:20 phenomenon: help or hindrance to planning caries prevention programmes? Community Dental Health, 19:39–42, 2001.

Table 1: Equal cluster sizes: Average Gini coefficients across 1000 simulations when the m k are

equal. Data were generated with N = 5000 observations, π = P (Y = 1) = 0.1 and GC=0.05 for

data with little inequality, GC=0.50 for data with moderate inequality, and GC=0.90 for data with

high inequality.

GC

0.05

0.50

0.90

n

Cluster size

Estimated GC

Empirical

Logistic

Normal

Bootstrap

50

100

0.18

0.03

0.02

0.13

100

50

0.24

0.03

0.02

0.18

500

10

0.50

0.02

0.02

0.38

50

100

0.52

0.48

0.47

0.50

100

50

0.62

0.47

0.45

0.52

500

10

50

100

0.90

0.90

0.90

0.90

100

50

0.90

0.90

0.90

0.90

500

10

0.90

0.90

0.90

0.90

22

Table 2: Unequal cluster sizes: Average Gini coefficients across 1000 simulations when the m k

vary. Data were generated with N = 5000 observations, π = P (Y = 1) = 0.1 and GC=0.05 for

data with little inequality, GC=0.50 for data with moderate inequality, and GC=0.90 for data with

high inequality. Also shown are average cluster size, range in cluster sizes, and average variation

in cluster sizes across the simulations.

GC

0.05

0.50

0.90

n

Cluster size

Estimated GC

Avg.

Range

Variation

Empirical

Logistic

Normal

Bootstrap

50

100

1-251

3298.4

0.37

0.33

0.34

0.15

100

50

1-133

839.6

0.41

0.33

0.36

0.21

500

10

1-38

35.9

0.58

0.32

0.43

0.44

50

100

1-246

3304.2

0.60

0.58

0.57

0.50

100

50

1-136

840.3

0.62

0.57

0.57

0.52

500

10

50

100

1-237

3298.5

0.92

0.92

0.92

0.91

100

50

1-133

841.5

0.93

0.93

0.93

0.92

500

10

1-37

35.9

0.93

0.93

0.93

0.92

23

Table 3: Example of potential error in ranking experimental units. Data generated with N = 200

and n = 25.

GC=0.05

pk

p̂k

GC=0.90

Ranked by

Xk

X̂k

pk

p̂k

Ranked by

Xk

X̂k

0.11

0.125

4

2

0

0

1

1

0.11

0.125

4

2

0

0

1

1

0.09

0

2

1

0

0

1

1

0.10

0

3

1

0

0

1

1

0.09

0

2

1

1

1

3

3

0.10

0.125

3

2

0.5

0.75

2

2

0.10

0.125

3

2

0

0

1

1

0.10

0

3

1

0

0

1

1

0.11

0

4

1

0

0

1

1

0.09

0.125

2

2

0

0

1

1

0.08

0

1

1

0

0

1

1

0.11

0

4

1

1

1

3

3

0.10

0.375

3

4

0

0

1

1

0.10

0.125

3

2

0

0

1

1

0.10

0

3

1

0

0

1

1

0.10

0

3

1

0

0

1

1

0.10

0.125

3

2

0

0

1

1

0.10

0.250

3

2

0

0

1

1

0.09

0

2

1

0

0

1

1

0.10

0

3

1

0

0

1

1

0.09

0

2

1

0

0

1

1

0.10

0

3

1

0

0

1

1

0.11

0

4

1

0

0

1

1

0.11

0

4

1

0

0

1

1

0.11

0

4

1

0

0

1

1

24