IDPM DISCUSSION PAPER SERIES R S

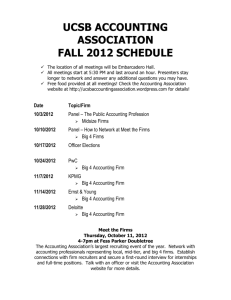
