Public Opinion

American Government

Public Opinion

Public opinion is an important topic in American politics

• Since we define ourselves as a democratic society, what the

people want matters --they are the ultimate source of

governmental legitimacy

• On the other hand, the founders explicitly and extensively

sought to limit the impact of public opinion on politics in the

U.S.

• As we've noted earlier, there are numerous checks on majority rule:

the founders feared that the majority could -- and probably would -- act unwisely

• Founders were more interested in fulfilling the goals outlined in

the preamble to the Constitution.

• Therefore, they created a system in which public opinion could

translate itself into public policy, but not one in which it

necessarily would translate itself into public policy.

3 Facts about Public Opinion

Important Facts to Know

1. Public opinion may conflict with other

important values (Madison's old majority

faction problem)

• Most notably, public opinion may conflict

with fundamental, constitutionally protected

rights

• potentially -- and sporadically -- a serious

problem

• in actuality, though, our political culture is mild

Public Opinion is Hard to Figure

2. Public opinion is very difficult to interpret

• Often there is no one "public," but rather many publics

• Many people in the public are:

• uninformed

• unconstrained -- want mutually exclusive things

• As a result, while several preferences are

stable, many are fickle

• stable: peace, prosperity

• fickle: energy, environment, nuclear freeze

• N.B. ... stable on ideals; fickle on

implementation/details

Public Opinion is Hard…

• Public Opinion Polls are sensitive to the

wording of questions and, therefore,

easily manipulated

• options/tradeoffs -- taxes for deficit

• order of alternatives

• loaded questions

• so called "astroturf" campaigns

• result = we must be careful when

asked to evaluate options based on

what "the public" feels.

Public Opinion & Elites

3. Public opinion is mediated by political

elites

• not everyone's opinion has the same

political weight

• opinion of more politically active people

is more politically important

• political elites drive the wheel of

politics

Origins of Public Opinion

• Family

• party ID is inherited

• issue positions are generally not transmitted

• Reference groups

• churches -- different traditions and social bases

• Jews: persecuted; generally liberal views on both social and

economic matters

• Catholics: lower SES class often, some persecution, group

focus; economically liberal, socially conservative

• Protestants: dominant group, personal focus; more

conservative on both economic and social issues

• Other groups -- we are a nation of joiners

The Biology of Politics

Biological factors in Public Opinion

• Gender gap

• because they bear a greater responsibility for bearing/raising children, women tend to be more interested in and liberal on issues concerning social welfare

• Age

• younger people tend to be more liberal

• less invested in the system

• more flexible (old dogs and all that)

Socioeconomic Factors in Public Opinion

Economic class

• wealthy have more

• human nature to think it is deserved

• favor policies that let them keep their well-deserved wealth

--- much invested in the system

Education

• generally, education leads to more liberal views (liberal

arts)

• A great example of cross-cutting cleavages, however.

• income tends to rise with education

• therefore, cross pressures

• Examples of “Big Tent”

• GOP composed of Wall Street types, farmers, blue collar

Reaganites, Bible thumpers;

• Democrats composed of poor, limousine liberals, labor, pro-choice, Catholics

Cleavages in Comparative Perspective

Contrast with fragmented societies like

Northern Ireland

• Catholics: poorer, Celtic, nationalist

• Protestants: richer, Anglo-Saxon, loyalist

• result = on-going, intractable violence

Ideology & Public Opinion

Liberal vs. Conservative can be confusing

• Classically

• liberals = proponents of limited government

• conservatives = opponents of French Revolution

• socially conservative -- believed in a larger role for government to protect the traditional social fabric

• Changed in the U.S. during the Progressive Era (1890-1910)

and the New Deal (1930s)

• FDR described his efforts to have federal government take a larger

responsibility for social welfare as "liberal"

• conservatives opposed this --- called for smaller government

• Formed the basis for the so-called "New Deal Realignment"

• Democrats became the party of larger government

• Republicans became the party of smaller government

U.S. Politics: Centrist

Important to note --- in US, ideology is bounded

• both liberals and conservatives hail from classical

liberal roots - split between them is small

• even the most virulent liberals in the US believe in a

basically free market

• few anarchists

• few revolutionary Maoists

• even the most virulent conservatives believe in

basic human rights, civil liberties, equality

• few monarchists

• few theocrats

• few Nazis

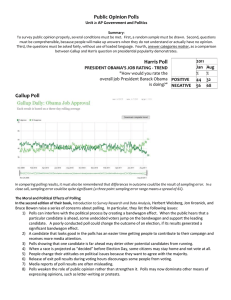

Polling Defined

• Opinion polls are surveys of public opinion

using sampling.

• Polls are usually designed to represent the

opinions of a population by asking a small

number of people a series of questions and

then extrapolating the answers to the larger

group.

• Day after day polls dealing with questions

about public affairs and private business are

being conducted throughout the United

States.

• Opinion polling has also spread to England,

Canada, Australia, Sweden, and France.

Polling: History & Perspective

• Polls are used by businesses, government,

universities, and a host of other organizations

to answer a variety of questions.

• The first known example of an opinion poll

was a local straw vote conducted by The

Harrisburg Pennsylvanian in 1824, showing

Andrew Jackson leading John Quincy Adams

by 335 votes to 169 in the contest for the

United States Presidency.

• This poll was unscientific – as were all

attempts to systematically identify public

opinion then.

• A scientific poll must be representative.

The Onset of Modern Polling

• In 1916, the Literary Digest embarked on a national

survey (partly as a circulation-raising exercise) and

correctly predicted Woodrow Wilson's election as

President. Mailing out millions of postcards and simply

counting the returns, the Digest correctly called the

following four presidential elections.

• In 1936, however, the Digest conducted another poll. Its

2.3 million "voters" constituted a huge sample; however

they were generally more affluent Americans who tended

to have Republican sympathies.

• The Literary Digest did nothing to correct this bias. The

week before election day, it reported that Alf Landon was

far more popular than Franklin D. Roosevelt.

• At the same time, George Gallup conducted a far smaller,

but more scientifically-based survey, in which he polled a

demographically representative sample. Gallup correctly

predicted Roosevelt's landslide victory.
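The Digest-versus-Gallup contrast can be illustrated with a small simulation. This is only a sketch: the electorate below is hypothetical (the group shares and support rates are made-up assumptions, not historical figures), and `run_poll` is an illustrative helper. It shows that a very large sample drawn mostly from one group can miss badly, while a much smaller sample that mirrors the population lands close to the true value.

```python
import random

# Hypothetical electorate (all numbers are illustrative assumptions):
# 30% of voters are "affluent" and 35% of them back the candidate;
# the remaining 70% of voters back the candidate at a 70% rate.
AFFLUENT_SHARE = 0.30
SUPPORT_AFFLUENT = 0.35
SUPPORT_OTHER = 0.70
TRUE_SUPPORT = (AFFLUENT_SHARE * SUPPORT_AFFLUENT
                + (1 - AFFLUENT_SHARE) * SUPPORT_OTHER)


def run_poll(n, affluent_share_in_sample, rng):
    """Return the share of n simulated respondents who back the candidate."""
    supporters = 0
    for _ in range(n):
        is_affluent = rng.random() < affluent_share_in_sample
        p_support = SUPPORT_AFFLUENT if is_affluent else SUPPORT_OTHER
        if rng.random() < p_support:
            supporters += 1
    return supporters / n


rng = random.Random(1936)  # fixed seed so the sketch is repeatable

# Huge sample, but 90% of respondents come from the affluent group.
biased_estimate = run_poll(200_000, 0.90, rng)

# Far smaller sample whose mix matches the electorate.
representative_estimate = run_poll(2_000, AFFLUENT_SHARE, rng)

print(f"true support:          {TRUE_SUPPORT:.3f}")
print(f"large biased sample:   {biased_estimate:.3f}")
print(f"small representative:  {representative_estimate:.3f}")
```

The point of the sketch is that sample size cannot compensate for an unrepresentative sample: the bias stays no matter how many more postcards are counted.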

Polling in Politics

Functions of Polls in Politics

• Used by candidates to determine strategy

• Used by interest groups to decide whom to

support and with how many resources

• Used by election observers to track the

‘horse race’

• Used by leaders to determine will of the

people (ex. Newt Gingrich and the Contract

With America)

• Polls are also useful where leaders want to

‘manipulate’ public opinion (though the

extent to which they do this is often difficult to

determine)

Modern Media & Poll Proliferation

What to Know about a Poll

• 1. Who paid for the poll?

• 2. What does the poll’s sponsor have to gain by

particular results?

• 3. What were the exact questions?

• 4. How and when was the poll administered?

• 5. How many people were polled?

• 6. Who analyzed or interpreted the poll?

Polling Nuts & Bolts: Sampling

• Polls require taking samples from populations. In

the case of, say, a Newsweek poll from the 2004

Presidential election, the population of interest is

the population of people who will vote.

• Since it is impractical to poll everyone who will vote,

pollsters take smaller samples that are intended to

be representative, that is, a random sample of the

population.

• It is possible that pollsters happen to sample 1,013

voters who happen to vote for Bush when in fact the

population is split, but this is very, very unlikely

given that the sample is representative.

• A good sample MUST be a representative sample

and it must be a random sample.
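To put a rough number on "very, very unlikely," the sketch below computes the chance that every one of 1,013 respondents names Bush when the population is evenly split. It assumes each respondent is an independent random draw, which is an idealization of real sampling; the variable names are illustrative.

```python
import math

SAMPLE_SIZE = 1013   # sample size from the slides' example
P_BUSH = 0.5         # assumption: the population is evenly split

# Work with logarithms so the tiny probability is easy to read.
log10_prob = SAMPLE_SIZE * math.log10(P_BUSH)

print(f"P(all {SAMPLE_SIZE} respondents pick Bush) is about 10^{log10_prob:.0f}")
```

Under these assumptions the probability is on the order of 10^-305, which is why a truly random sample of this size is trusted to reflect the population within the margin of error.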

Internet Polling: Non-Scientific

Polling: A Concrete Example

• Recall our example from the 2004

Presidential Race.

• According to an October 2, 2004 survey by

Newsweek, 46% of registered voters would

vote for John Kerry/John Edwards if the

election were held then.

• 42% would vote for George W. Bush/Dick

Cheney, and 2% would vote for Ralph

Nader/Peter Camejo.

• The size of the sample is 1,013, and the

reported margin of error is ±4%. The 99%

level of confidence was used to determine

the bound.
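The reported ±4% can be checked with the standard normal-approximation formula for a sample proportion. The sketch below assumes a simple random sample and the worst-case proportion p = 0.5 (a common convention, not something the slides state); `margin_of_error` is an illustrative helper name.

```python
import math
from statistics import NormalDist


def margin_of_error(n, confidence=0.99, p=0.5):
    """Normal-approximation margin of error for a sample proportion."""
    # Two-sided critical value, e.g. about 2.576 for 99% confidence.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return z * math.sqrt(p * (1 - p) / n)


moe = margin_of_error(1013)
print(f"margin of error: ±{moe * 100:.1f} percentage points")
```

With n = 1,013 and 99% confidence this comes out at roughly 4 percentage points, in line with the figure reported for the poll.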

Poll Results

| Candidate Choices | % Will Vote for Candidate in 2004 Election |
|---|---|
| Bush/Cheney | 42% |
| Kerry/Edwards | 46% |
| Nader | 2% |

N = 1,013

MoE: 4%

Poll Results w/ Bounds

| Candidate Choices | % Will Vote for Candidate in 2004 Election | Bounds on Estimates |
|---|---|---|
| Bush/Cheney | 42% | 38 – 46% |
| Kerry/Edwards | 46% | 42 – 50% |
| Nader | 2% | 0 – 6% |

N = 1,013

MoE: 4%

Interpreting Polls with Bounds

• Who is ahead in this race, according to

the poll?

• Because the bounds of the two

estimates for Bush & Kerry overlap,

this is a “statistical tie.”

• It is possible, given the margin of error

and the results, that either Bush or

Kerry may have been ahead.

• Nader, however, is definitely behind.
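The overlap reasoning can be written out as a short check, using the percentages and the 4-point margin from the bounds table. The helper names `bounds` and `overlaps` are illustrative, and the lower bound is clipped at 0% because a vote share cannot be negative.

```python
MOE = 4  # margin of error in percentage points, from the poll

poll = {"Bush/Cheney": 42, "Kerry/Edwards": 46, "Nader": 2}


def bounds(estimate, moe):
    """Return (low, high) bounds, clipped to the 0-100% range."""
    return (max(0, estimate - moe), min(100, estimate + moe))


def overlaps(a, b):
    """True if the two (low, high) intervals share at least one point."""
    return a[0] <= b[1] and b[0] <= a[1]


intervals = {name: bounds(pct, MOE) for name, pct in poll.items()}

statistical_tie = overlaps(intervals["Bush/Cheney"],
                           intervals["Kerry/Edwards"])
nader_behind = (not overlaps(intervals["Nader"], intervals["Bush/Cheney"])
                and not overlaps(intervals["Nader"],
                                 intervals["Kerry/Edwards"]))

for name, (low, high) in intervals.items():
    print(f"{name}: {low}-{high}%")
print("Bush/Kerry statistical tie:", statistical_tie)
print("Nader clearly behind:", nader_behind)
```

The overlap rule is the heuristic used in the slides; a more formal comparison would look at the margin of error of the difference between the two candidates rather than at each estimate separately.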

Sample Size & Margin of Error

• The size of the bound (or the margin of

error) is directly tied to the size of the

sample.

• The larger the sample, the smaller the

bound, the more confident we are in

the estimate.

Sample Size & Estimates

| Candidate Choices | % Will Vote for Candidate in 2004 Election | Bounds on Estimates |
|---|---|---|
| Bush/Cheney | 42% | 41 – 43% |
| Kerry/Edwards | 46% | 45 – 47% |
| Nader | 2% | 1 – 3% |

N = 6,243

MoE: 1%

Choosing your Sample

• The relationship between the sample size

and the margin of error is NOT 1:1.

• Diminishing returns are obtained as the size

of the sample increases.

• Hence you need an ever larger and larger

increase in your sample to get the same

reduction in the margin of error.

• Most pollsters strike a balance between cost

of the sample and reduction in the margin of

error, resulting in commonly used sample

sizes of 1,000 to 2,000.
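The diminishing returns follow from the fact that, under the usual normal approximation, the margin of error shrinks with the square root of the sample size. The sketch below assumes a simple random sample, 99% confidence, and the worst-case proportion p = 0.5; the function names are illustrative.

```python
import math
from statistics import NormalDist

Z_99 = NormalDist().inv_cdf(0.995)  # two-sided 99% critical value


def margin_of_error(n, z=Z_99, p=0.5):
    """Normal-approximation margin of error for a sample of size n."""
    return z * math.sqrt(p * (1 - p) / n)


def required_sample_size(moe, z=Z_99, p=0.5):
    """Smallest n whose margin of error is at most `moe` (as a fraction)."""
    return math.ceil(z ** 2 * p * (1 - p) / moe ** 2)


for target in (0.04, 0.02, 0.01):
    n = required_sample_size(target)
    print(f"target ±{target * 100:.0f}% -> sample size of about {n:,}")
```

Halving the margin of error requires roughly four times as many respondents, which is why most polls stop at a thousand or two. Under these same assumptions, `margin_of_error(6243)` works out to about 1.6%, so the 1% shown for the N = 6,243 table is best read as illustrative.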

Problems in Polling: Response Bias

• Not all error in polling is statistical.

• Survey results may be affected by response bias, where the

answers given by respondents do not reflect their true beliefs.

• This may be deliberately engineered by unscrupulous pollsters

in a push poll, but more often is a result of the detailed

wording or ordering of questions.

• Respondents may deliberately try to manipulate the outcome

of a poll by e.g. advocating a more extreme position than they

actually hold in order to boost their side of the argument or

give rapid and ill-considered answers in order to hasten the

end of their questioning.

• Respondents may also feel under social pressure not to give an

unpopular answer. If the results of surveys are widely

publicized this effect may be magnified - the so-called spiral of

silence.

Response Bias: Problems on Our End

• Observer Effects – poll changes attitude or

behavior

• Presence of an observer – polls can be taken on a

telephone, in person (door to door), etc.

Problems – ex. a white man asking a black man

about his racial attitudes. A woman asking a

man about his position on sexual behavior.

• Taking poll changes attitude

• Ex. Nielsen poll – you’re a Celebrity Death Match

/ WWF kind of guy, but during the poll suddenly

you are a PBS / CNN kind of guy.

• Timing

• Question Wording

Response Bias: Timing

• Doing a poll on Sept. 12th, 2001 on

immigration or feelings about Arabs

• Daytime/Nighttime

• Most polls are taken from 6pm – 10pm…thus

short term effects (news program on poverty)

can have effects on the responses

• What if we took polls at 1pm instead?

• Thermometer scores – correlation with

actual temperature outside (giving higher

thermometer scores in the summer)

Response Bias: Question Wording

• It is well established that the wording of the

questions, the order in which they are asked

and the number and form of alternative

answers offered can influence results of

polls. Thus comparisons between polls often

boil down to the wording of the question.

• One way in which pollsters attempt to

minimize this effect is to ask the same set of

questions over time, in order to track

changes in opinion.

Push Polling & Question Wording

• A push poll is a political campaign technique

in which an individual or organization

attempts to influence or alter the view of

respondents under the guise of conducting a

poll. Push polls are generally viewed as a

form of negative campaigning.

• The mildest forms of push polling are

designed merely to remind voters of a

particular issue.

• For instance, a push poll might ask respondents

to rank candidates based on their support of

abortion in order to get voters thinking about

that issue.

Push Polling & Astro-Turf Campaigns

• The main advantage of push polls is that they are an

effective way of maligning an opponent ("pushing" voters

away) while avoiding responsibility for the distorted or

false information used in the push poll. They are risky for

the same reason: if credible evidence emerges that the

polls were ordered by a campaign, it would do serious

damage to that campaign.

• Push polls are also used by interest groups to generate

the appearance of grassroots support for their positions.

Hence they may ask respondents questions designed to

elicit the preferred responses:

• Pro-Choice Push Poll Question: Do you believe women should have

a right to privacy?

• Pro-Life Push Poll Question: Do you approve of the laws permitting

abortion on demand, resulting in the murder of 1.5 million babies

a year?

Question Wording Example 1956: Subtle “Push” Polling

Statement: The U.S. should keep soldiers overseas where they can help countries that oppose communism.

| Response | Percent |
|---|---|
| Agree Strongly | 49% |
| Agree | 24% |
| Neutral | 10% |
| Disagree | 7% |
| Disagree Strongly | 10% |

Question Wording Example 1964: Subtle

“push” polling

Question: Some people think that we should sit down and talk to the leaders of the communist countries and try to settle our differences, while others think we should refuse to have anything to do with them. What do you think?

| Response | Percent |
|---|---|
| Should Talk | 62% |
| Depends on Situation | 15% |
| Should Refuse to Talk | 13% |

Response Bias: Problems on Their End

• Neutral effects – people ‘tend’ to choose the middle category.

• folks tend to go for that middle category no matter what their

actual feelings are.

• Because they don’t want to appear extreme

• Because they don’t know

• Avoidance of honesty – people will lie (use terminological

inexactitudes) when being polled.

• They don’t want to admit they don’t vote

• They don’t want to admit they are bigots…etc.

• Non-Attitudes – people will choose a choice even when they

don’t have an opinion on either of them

• Who would you rather have as president?

• Beavis

• Butthead

• Eric Cartman

• Frylock

• Master Shake

Respondent Problems: Neutral Effect Example

QUESTION:

Where would you place YOURSELF on this scale, or haven't

you thought much about this?

VALID CODES:

01. Extremely liberal

02. Liberal

03. Slightly liberal

04. Moderate; middle of the road

05. Slightly conservative

06. Conservative

07. Extremely conservative

Neutral Effects: Helping to Solve them

PRE-ELECTION SURVEY:

IF R'S PARTY PREFERENCE IS INDEPENDENT, NO PREFERENCE, OTHER:

QUESTION:

Do you think of yourself as CLOSER to the Republican

Party or to the Democratic party?

VALID CODES:

1. Closer to Republican

3. Neither {VOL}

5. Closer to Democratic

Avoiding Honesty: Helping to Solve them

• The age-old problem of asking about age.

• How do you ask it:

• What is your age?

• What is your date of birth?