Document

advertisement

8

Probability Distributions and Statistics

Distributions of Random Variables

Expected Value

Variance and Standard Deviation

The Binomial Distribution

The Normal Distribution

Applications of the Normal Distribution

8.1

Distributions of Random Variables

.03

.02

.01

0

1

2

3

4

5

6

7

8

x

Random Variable

A random variable is a rule that assigns

a number to each outcome of a chance

experiment.

Example

A coin is tossed three times.

Let the random variable X denote the number of heads

that occur in the three tosses.

✦ List the outcomes of the experiment; that is, find the

domain of the function X.

✦ Find the value assigned to each outcome of the

experiment by the random variable X.

✦ Find the event comprising the outcomes to which a value

of 2 has been assigned by X.

This event is written (X = 2) and is the event consisting of

the outcomes in which two heads occur.

Example 1, page 418

Example

Solution

As discussed in Section 7.1, the set of outcomes of this

experiment is given by the sample space

S = {HHH, HHT, HTH, THH, HTT, THT, TTH, TTT}

The table below associates the outcomes of the

experiment with the corresponding values assigned to

each such outcome by the random variable X:

Outcome

X

HHH HHT HTH THH HTT THT TTH

3

2

2

2

1

1

1

TTT

0

With the aid of the table, we see that the event (X = 2) is

given by the set

{HHT, HTH, THH}

Example 1, page 418

Applied Example: Product Reliability

A disposable flashlight is turned on until its battery runs

out.

Let the random variable Z denote the length (in hours)

of the life of the battery.

What values can Z assume?

Solution

The values assumed by Z can be any nonnegative real

numbers; that is, the possible values of Z comprise the

interval 0 Z < ∞.

Applied Example 3, page 419

Probability Distributions and Random Variables

Since the random variable associated with an

experiment is related to the outcome of the experiment,

we can construct a probability distribution associated

with the random variable, rather than one associated

with the outcomes of the experiment.

In the next several examples, we illustrate the

construction of probability distributions.

Example

Let X denote the random variable that gives the sum of the

faces that fall uppermost when two fair dice are thrown.

Find the probability distribution of X.

Solution

The values assumed by the random variable X are

2, 3, 4, … , 12, correspond to the events E2, E3, E4, … , E12.

Next, the probabilities associated with the random variable

X when X assumes the values 2, 3, 4, … , 12, are precisely

the probabilities P(E2), P(E3), P(E4), … , P(E12),

respectively, and were computed as seen in Chapter 7.

Thus,

213

P( X …3)

2)

4)and

P(soE324on.

))

36

36

Example 5, page 420

Applied Example: Waiting Lines

The following data give the number of cars observed

waiting in line at the beginning of 2-minute intervals

between 3 p.m. and 5 p.m. on a given Friday at the Happy

Hamburger drive-through and the corresponding

frequency of occurrence.

Find the probability distribution of the random variable X,

where X denotes the number of cars found waiting in line.

Cars

0

1

2

3

4

5

6

7

8

Frequency

2

9

16

12

8

6

4

2

1

Applied Example 6, page 420

Applied Example: Waiting Lines

Cars

0

1

2

3

4

5

6

7

8

Frequency

2

9

16

12

8

6

4

2

1

Solution

Dividing each frequency number in the table by 60 (the

sum of all these numbers) give the respective probabilities

associated with the random variable X when X assumes the

values 0, 1, 2, … , 8.

For example,

12

16

92

…and

P( X

1)

3)

0)

2) so on.

.15

.20

.03

.27

60

60

Applied Example 6, page 420

Applied Example: Waiting Lines

Cars

0

1

2

3

4

5

6

7

8

Frequency

2

9

16

12

8

6

4

2

1

Solution

The resulting probability distribution is

x

0

1

2

3

4

5

6

7

8

P(X = x)

.03

.15

.27

.20

.13

.10

.07

.03

.02

Applied Example 6, page 420

Histograms

A probability distribution of a random variable may be

exhibited graphically by means of a histogram.

Examples

The histogram of the probability distribution from the last

example is

.30

.20

.10

0

1

2

3

4

5

6

7

8

x

Histograms

A probability distribution of a random variable may be

exhibited graphically by means of a histogram.

Examples

The histogram of the probability distribution for the sum

of the numbers of two dice is

6/36

5/36

4/36

3/36

2/36

1/36

2

3

4

5

6

7

8

9 10 11 12

x

8.2

Expected Value

18

P( E )

3818

1 P( E ) 1 38

18

38

20

38

18 38 18 9

38 20 20 10

1 P( E ) 1 18

18 38

P( E )

38

20

38

18

38

20 38 20 10

38 18 18 9

Average, or Mean

The average, or mean, of the n numbers

x1 , x2 , … , x n

is x (read “x bar”), where

x1 x2 xn

x

n

Applied Example: Waiting Lines

Find the average number of cars waiting in line at the

Happy Burger’s drive-through at the beginning of each

2-minute interval during the period in question.

Cars

0

1

2

3

4

5

6

7

8

Frequency

2

9

16

12

8

6

4

2

1

Solution

Using the table above, we see that there are all together

2 + 9 + 16 + 12 + 8 + 6 + 4 + 2 + 1 = 60

numbers to be averaged.

Therefore, the required average is given by

0 2 1 9 2 16 3 12 4 8 5 6 6 4 7 2 8 1

3.1

60

Applied Example 1, page 428, refer Section 8.1

Expected Value of a Random Variable X

Let X denote a random variable that assumes

the values x1, x2, … , xn with associated

probabilities p1, p2, … , pn, respectively.

Then the expected value of X, E(X), is given by

E ( X ) x1 p1 x2 p2 xn pn

Applied Example: Waiting Lines

Use the expected value formula to find the average

number of cars waiting in line at the Happy Burger’s

drive-through at the beginning of each 2-minute interval

during the period in question.

x

0

1

2

3

4

5

6

7

8

P(X = x)

.03

.15

.27

.20

.13

.10

.07

.03

.02

Solution

The average number of cars waiting in line is given by the

expected value of X, which is given by

E ( X ) (0)(.03) (1)(.15) (2)(.27) (3)(.20) (4)(.13)

(5)(.10) (6)(.07) (7)(.03) (8)(.02)

3.1

Applied Example 2, page 428, refer Section 8.1

Applied Example: Waiting Lines

The expected value of a random variable X is a measure of

central tendency.

Geometrically, it corresponds to the point on the base of

the histogram where a fulcrum will balance it perfectly:

.20

.10

x

0

1

4

2

3.1

E(X)

Applied Example 2, page 428

5

6

7

8

Applied Example: Raffles

The Island Club is holding a fundraising raffle.

Ten thousand tickets have been sold for $2 each.

There will be a first prize of $3000, 3 second prizes of

$1000 each, 5 third prizes of $500 each, and 20

consolation prizes of $100 each.

Letting X denote the net winnings (winnings less the cost

of the ticket) associated with the tickets, find E(X).

Interpret your results.

Applied Example 5, page 431

Applied Example: Raffles

Solution

The values assumed by X are (0 – 2), (100 – 2), (500 – 2),

(1000 – 2), and (3000 – 2).

That is –2, 98, 498, 998, and 2998, which correspond,

respectively, to the value of a losing ticket, a consolation

prize, a third prize, and so on.

The probability distribution of X may be calculated in

the usual manner:

x

–2

98

498

998

2998

P(X = x)

.9971

.0020

.0005

.0003

.0001

Using the table, we find

E ( X ) ( 2)(.9971) 98(.0020) 498(.0005)

Applied Example 5, page 431

998(.0003) 2998(.0001)

0.95

Applied Example: Raffles

Solution

The expected value of E(X) = –.95 gives the long-run

average loss (negative gain) of a holder of one ticket.

That is, if one participated regularly in such a raffle by

purchasing one ticket each time, in the long-run, one

may expect to lose, on average, 95 cents per raffle.

Applied Example 5, page 431

Odds in Favor and Odds Against

If P(E) is the probability of an event E

occurring, then

✦ The odds in favor of E occurring are

P( E )

P( E )

1 P( E ) P( E c )

[ P( E ) 1]

✦ The odds against E occurring are

1 P( E ) P( E c )

P( E )

P( E )

[ P( E ) 0]

Applied Example: Roulette

Find the odds in favor of winning a bet on red in American

roulette.

What are the odds against winning a bet on red?

Solution

The probability that the ball lands on red is given by

18

P

38

Therefore, we see that the odds in favor of winning a bet

on red are

18

P( E )

3818

1 P( E ) 1 38

Applied Example 8, page 433

18

38

20

38

18 38 18 9

38 20 20 10

Applied Example: Roulette

Find the odds in favor of winning a bet on red in American

roulette.

What are the odds against winning a bet on red?

Solution

The probability that the ball lands on red is given by

18

P

38

The odds against winning a bet on red are

1 P( E ) 1 18

18 38

P( E )

38

Applied Example 8, page 433

20

38

18

38

20 38 20 10

38 18 18 9

Probability of an Event (Given the Odds)

If the odds in favor of an event E occurring are

a to b, then the probability of E occurring is

a

P( E )

ab

Example

Consider each of the following statements.

✦ “The odds that the Dodgers will win the World Series

this season are 7 to 5.”

✦ “The odds that it will not rain tomorrow are 3 to 2.”

Express each of these odds as a probability of the event

occurring.

Solution

With a = 7 and b = 5, the probability that the Dodgers will

win the World Series is

a

7

7

P( E )

.58

a b 7 5 12

Example 9, page 434

Example

Consider each of the following statements.

✦ “The odds that the Dodgers will win the World Series

this season are 7 to 5.”

✦ “The odds that it will not rain tomorrow are 3 to 2.”

Express each of these odds as a probability of the event

occurring.

Solution

With a = 3 and b = 2, the probability that it will not rain

tomorrow is

a

3

3

P( E )

.6

a b 3 2 5

Example 9, page 434

Median

The median of a group of numbers arranged

in increasing or decreasing order is

a. The middle number if there is an odd

number of entries or

b. The mean of the two middle numbers if

there is an even number of entries.

Applied Example: Commuting Times

The times, in minutes, Susan took to go to work on nine

consecutive working days were

46 42 49 40 52 48 45 43 50

What is the median of her morning commute times?

Solution

Arranging the numbers in increasing order, we have

40 42 43 45 46 48 49 50 52

Here we have an odd number of entries, and the middle

number that gives us the required median is 46.

Applied Example 10, page 435

Applied Example: Commuting Times

The times, in minutes, Susan took to return from work on

nine consecutive working days were

37 36 39 37 34 38 41 40

What is the median of her evening commute times?

Solution

If we include the number 44 for the tenth work day and

arrange the numbers in increasing order, we have

34 36 37 37 38 39 40 41

Here we have an even number of entries so we calculate

the average of the two middle numbers 37 and 38 to find

the required median of 37.5.

Applied Example 10, page 435

Mode

The mode of a group of numbers is the

number in the set that occurs most

frequently.

Example

Find the mode, if there is one, of the given group of

numbers.

a. 1 2 3 4 6

b. 2 3 3 4 6 8

c. 2 3 3 3 4 4 4 8

Solution

a. The set has no mode because there isn’t a number that

occurs more frequently than the others.

b. The mode is 3 because it occurs more frequently than the

others.

c. The modes are 3 and 4 because each number occurs three

times.

Example 11, page 436

8.3

Variance and Standard Deviation

x (15.8)(.1) (15.9)(.2) (16.0)(.4)

(16.1)(.2) (16.2)(.1) 16

Var( X ) (.1)(15.8 16) (.2)(15.9 16) (.4)(16.0 16)

(.2)(16.1 16) (.1)(16.2 16) 0.012

x Var( X ) 0.012 0.11

Variance of a Random Variable X

Suppose a random variable has the probability

distribution

x

x1

x2

x3

···

xn

P(X = x)

p1

p2

p3

···

pn

and expected value

E(X ) =

Then the variance of the random variable X is

Var ( X ) p1 ( x1 )2 p2 ( x2 ) 2 pn ( xn ) 2

Example

Find the variance of the random variable X whose

probability distribution is

x

1

2

3

4

5

6

7

P(X = x)

.05

.075

.2

.375

.15

.1

.05

Solution

The mean of the random variable X is given by

x 1(.05) 2(.075) 3(.2) 4(.375) 5(.15)

6(.1) 7(.05)

4

Example 1, page 442

Example

Find the variance of the random variable X whose

probability distribution is

x

1

2

3

4

5

6

7

P(X = x)

.05

.075

.2

.375

.15

.1

.05

Solution

Therefore, the variance of X is given by

Var ( X ) (.05)(1 4) 2 (.075)(2 4) 2 (.2)(3 4) 2

(.375)(4 4) 2 (.15)(5 4) 2

(.1)(6 4) 2 (.05)(7 4) 2

1.95

Example 1, page 442

Standard Deviation of a Random Variable X

The standard deviation of a random variable X,

(pronounced “sigma”), is defined by

Var( X )

p1 ( x1 )2 p2 ( x2 )2 pn ( xn )2

where x1, x2, … , xn denote the values assumed by

the random variable X and

p1 = P(X = x1), p2 = P(X = x2), … , pn = P(X = xn).

Applied Example: Packaging

Let X and Y denote the random variables whose values are

the weights of brand A and brand B potato chips,

respectively.

Compute the means and standard deviations of X and Y

and interpret your results.

x

15.8

15.9

16.0

16.1

16.2

P(X = x)

.1

.2

.4

.2

.1

y

15.7

15.8

15.9

16.0

16.1

16.2

16.3

P(Y = y)

.2

.1

.1

.1

.2

.2

.1

Applied Example 3, page 443

Applied Example: Packaging

x

15.8

15.9

16.0

16.1

16.2

P(X = x)

.1

.2

.4

.2

.1

y

15.7

15.8

15.9

16.0

16.1

16.2

16.3

P(Y = y)

.2

.1

.1

.1

.2

.2

.1

Solution

The means of X and Y are given by

x (.1)(15.8) (.2)(15.9) (.4)(16.0)

(.2)(16.1) (.1)(16.2)

16

y (.2)(15.7) (.1)(15.8) (.1)(15.9) (.1)(16.0)

(.2)(16.1) (.2)(16.2) (.1)(16.3)

16

Applied Example 3, page 443

Applied Example: Packaging

x

15.8

15.9

16.0

16.1

16.2

P(X = x)

.1

.2

.4

.2

.1

y

15.7

15.8

15.9

16.0

16.1

16.2

16.3

P(Y = y)

.2

.1

.1

.1

.2

.2

.1

Solution

Therefore, the variance of X and Y are

Var ( X ) (.1)(15.8 16) 2 (.2)(15.9 16) 2 (.4)(16.0 16) 2

(.2)(16.1 16) 2 (.1)(16.2 16) 2

0.012

Var (Y ) (.2)(15.7 16) 2 (.1)(15.8 16) 2 (.1)(15.9 16) 2

(.1)(16.0 16) 2 (.2)(16.1 16) 2 (.2)(16.2 16) 2

(.1)(16.3 16) 2

0.042

Applied Example 3, page 443

Applied Example: Packaging

x

15.8

15.9

16.0

16.1

16.2

P(X = x)

.1

.2

.4

.2

.1

y

15.7

15.8

15.9

16.0

16.1

16.2

16.3

P(Y = y)

.2

.1

.1

.1

.2

.2

.1

Solution

Finally, the standard deviations of X and Y are

x Var ( X ) 0.012 0.11

y Var (Y ) 0.042 0.20

Applied Example 3, page 443

Applied Example: Packaging

x

15.8

15.9

16.0

16.1

16.2

P(X = x)

.1

.2

.4

.2

.1

y

15.7

15.8

15.9

16.0

16.1

16.2

16.3

P(Y = y)

.2

.1

.1

.1

.2

.2

.1

Solution

The means of X and Y are both equal to 16.

✦ Therefore, the average weight of a package of potato

chips of either brand is the same.

However, the standard deviation of Y is greater than that

of X.

✦ This tells us that the weights of the packages of brand B

potato chips are more widely dispersed than those of

brand A.

Applied Example 3, page 443

Chebychev’s Inequality

Let X be a random variable with expected

value and standard deviation .

Then the probability that a randomly chosen

outcome of the experiment lies between

– k and + k is at least 1 – (1/k2) .

That is,

1

P( k X k ) 1 2

k

Applied Example: Industrial Accidents

Great Lumber Co. employs 400 workers in its mills.

It has been estimated that X, the random variable

measuring the number of mill workers who have

industrial accidents during a 1-year period, is distributed

with a mean of 40 and a standard deviation of 6.

Use Chebychev’s Inequality to find a bound on the

probability that the number of workers who will have an

industrial accident over a 1-year period is between 30 and

50, inclusive.

Applied Example 5, page 445

Applied Example: Industrial Accidents

Solution

Here, = 40 and = 6.

We wish to estimate P(30 X 50) .

To use Chebychev’s Inequality, we first determine the

value of k from the equation

– k = 30

or

+ k = 50

Since = 40 and = 6, we see that k satisfies

40 – 6k = 30

and

40 + 6k = 50

from which we deduce that k = 5/3.

Applied Example 5, page 445

Applied Example: Industrial Accidents

Solution

Thus, the probability that the number of mill workers who

will have an industrial accident during a 1-year period is

between 30 and 50 is given by

P(30 X 50) 1

that is, at least 64%.

Applied Example 5, page 445

1

5

3

2

16

.64

25

8.4

The Binomial Distribution

P(SFFF) = P(S)P(F)P(F)P(F) = p · q · q · q = pq3

P(FSFF) = P(F)P(S)P(F)P(F) = q · p · q · q = pq3

P(FFSF) = P(F)P(F)P(S)P(F) = q · q · p · q = pq3

P(FFFS) = P(F)P(F)P(F)P(S) = q · q · q · p = pq3

P( E ) pq3 pq3 pq3 pq3 4 pq3

3

1 5

4 .386

6 6

Binomial Experiment

A binomial experiment has the following

properties:

1. The number of trials in the experiment is

fixed.

2. There are two outcomes in each trial:

“success” and “failure.”

3. The probability of success in each trial is

the same.

4. The trials are independent of each other.

Example

A fair die is thrown four times. Compute the probability of

obtaining exactly one 6 in the four throws.

Solution

There are four trials in this experiment.

Each trial consists of throwing the die once and observing

the face that lands uppermost.

We may view each trial as an experiment with two

outcomes:

✦ A success (S) if the face that lands uppermost is a 6.

✦ A failure (F) if it is any of the other five numbers.

Letting p and q denote the probabilities of success and

failure, respectively, of a single trial of the experiment, we

find that

1

1 5

p

and q 1

6

6 6

Example 1, page 453

Example

A fair die is thrown four times. Compute the probability of

obtaining exactly one 6 in the four throws.

Solution

The trials of this experiment are independent, so we have a

binomial experiment.

Using the multiplication principle, we see that the

experiment has 24 = 16 outcomes.

The possible outcomes associated with the experiment are:

0 Successes

FFFF

Example 1, page 453

1 Success

SFFF

FSFF

FFSF

FFFS

2 Successes

SSFF

SFSF

SFFS

FSSF

FSFS

FFSS

3 Successes

SSSF

SSFS

SFSS

FSSS

4 Successes

SSSS

Example

A fair die is thrown four times. Compute the probability of

obtaining exactly one 6 in the four throws.

Solution

From the table we see that the event of obtaining exactly

one success in four trials is given by

E = {SFFF, FSFF, FFSF, FFFS}

The probability of this event is given by

P(E) = P(SFFF) + P(FSFF) + P(FFSF) + P(FFFS)

Success

00Successes

FFFF

Example 1, page 453

1 Success

SFFF

FSFF

FFSF

FFFS

2 Successes

SSFF

SFSF

SFFS

FSSF

FSFS

FFSS

3 Successes

SSSF

SSFS

SFSS

FSSS

4 Successes

SSSS

Example

A fair die is thrown four times. Compute the probability of

obtaining exactly one 6 in the four throws.

Solution

Since the trials (throws) are independent, the probability of

each possible outcome with one success is given by

P(SFFF) = P(S)P(F)P(F)P(F) = p · q · q · q = pq3

P(FSFF) = P(F)P(S)P(F)P(F) = q · p · q · q = pq3

P(FFSF) = P(F)P(F)P(S)P(F) = q · q · p · q = pq3

P(FFFS) = P(F)P(F)P(F)P(S) = q · q · q · p = pq3

Therefore, the probability of obtaining exactly one 6 in four

throws is

P( E ) pq3 pq3 pq3 pq3 4 pq3

3

Example 1, page 453

1 5

4 .386

6 6

Computation of Probabilities in Bernoulli Trials

In general, experiments with two outcomes

are called Bernoulli trials, or binomial trials.

In a binomial experiment in which the

probability of success in any trial is p, the

probability of exactly x successes in n

independent trials is given by

C ( n, x ) p x q n x

Binomial Distribution

If we let X be the random variable that gives the

number of successes in a binomial experiment, then

the probability of exactly x successes in n

independent trials may be written

P( X x) C (n, x) p x qn x

( x 0,1,2,..., n)

The random variable X is called a binomial

random variable, and the probability distribution

of X is called a binomial distribution.

Example

A fair die is thrown five times.

If a 1 or a 6 lands uppermost in a trial, then the throw is

considered a success.

Otherwise, the throw is considered a failure.

Find the probabilities of obtaining exactly 0, 1, 2, 3, 4, and 5

successes, in this experiment.

Using the results obtained, construct the binomial

distribution for this experiment and draw the histogram

associated with it.

Example 2, page 455

Example

Solution

This is a binomial experiment with X taking on each of the

values 0, 1, 2, 3, 4, and 5 corresponding to exactly 0, 1, 2, 3,

4, and 5 successes, respectively, in five trials.

Since the die is fair, the probability of a 1 or a 6 landing

uppermost in any trial is given by

2 1

p

6 3

from which it also follows that

1 2

q 1 p 1

3 3

Finally, n = 5 since there are five trials (throws) in this

experiment.

Example 2, page 455

Example

Solution

Using the formula for the binomial random variable, we

find that the required probabilities are

P( X x ) C (n, x) p x qn x

0

1 2

P( X 0) C (5, 0)

3 3

x

0

P(X = x)

.132

Example 2, page 455

1

2

5 0

5! 32

.132

0!5! 243

3

4

5

Example

Solution

Using the formula for the binomial random variable, we

find that the required probabilities are

P( X x ) C (n, x) p x qn x

1

1 2

P( X 1) C (5,1)

3 3

x

0

1

P(X = x)

.132

.329

Example 2, page 455

51

2

5! 16

.329

1!4! 243

3

4

5

Example

Solution

Using the formula for the binomial random variable, we

find that the required probabilities are

P( X x ) C (n, x) p x qn x

2

1 2

P( X 2) C (5, 2)

3 3

5 2

x

0

1

2

P(X = x)

.132

.329

329

Example 2, page 455

5!

8

.329

2!3! 243

3

4

5

Example

Solution

Using the formula for the binomial random variable, we

find that the required probabilities are

P( X x ) C (n, x) p x qn x

3

1 2

P( X 3) C (5,3)

3 3

53

5!

4

.165

3!2! 243

x

0

1

2

3

P(X = x)

.132

.329

329

.165

Example 2, page 455

4

5

Example

Solution

Using the formula for the binomial random variable, we

find that the required probabilities are

P( X x ) C (n, x) p x qn x

4

1 2

P( X 4) C (5, 4)

3 3

5 4

5! 2

.041

4!1! 243

x

0

1

2

3

4

P(X = x)

.132

.329

329

.165

.041

Example 2, page 455

5

Example

Solution

Using the formula for the binomial random variable, we

find that the required probabilities are

P( X x ) C (n, x) p x qn x

5

1 2

P( X 5) C (5,5)

3 3

55

5!

1

.004

5!0! 243

x

0

1

2

3

4

5

P(X = x)

.132

.329

329

.165

.041

.004

Example 2, page 455

Example

Solution

We can now use the probability distribution table to

construct a histogram for this experiment:

.4

.3

.2

.1

0

1

2

3

4

x

5

x

0

1

2

3

4

5

P(X = x)

.132

.329

329

.165

.041

.004

Example 2, page 455

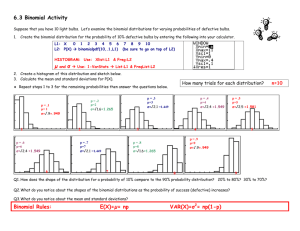

Applied Example: Quality Control

A division of Solaron manufactures photovoltaic cells to

use in the company’s solar energy converters.

It estimates that 5% of the cells manufactured are

defective.

If a random sample of 20 is selected from a large lot of

cells manufactured by the company, what is the

probability that it will contain at most 2 defective cells?

Applied Example 5, page 457

Applied Example: Quality Control

Solution

We may view this as a binomial experiment with n = 20

trials that correspond to 20 photovoltaic cells.

There are two possible outcomes of the experiment:

defective (“success”) and non-defective (“failure”).

The probability of success in each trial is p = .05 and the

probability of failure in each trial is q = .95.

✦ Since the lot from which the sample is selected is large,

the removal of a few cells will not appreciably affect the

percentage of defective cells in the lot in each successive

trial.

The trials are independent of each other.

✦ Again, this is because of the large lot size.

Applied Example 5, page 457

Applied Example: Quality Control

Solution

Letting X denote the number of defective cells, we find that

the probability of finding at most 2 defective cells in the

sample of 20 is given by

P( X 0) P ( X 1) P ( X 2)

C (20,0)(.05)0 (.95) 20 C (20,1)(.05)1 (.95)19

C (20, 2)(.05) 2 (.95)18

.3585 .3774 .1887 .9246

Thus, approximately 92% of the sample will have at most 2

defective cells.

Equivalently, approximately 8% of the sample will contain

more than 2 defective cells.

Applied Example 5, page 457

Mean, Variance, and Standard Deviation

of a Random Variable

If X is a binomial random variable associated with

a binomial experiment consisting of n trials with

probability of success p and probability of failure

q, then the mean (expected value), variance, and

standard deviation of X are

E ( X ) np

Var ( X ) npq

X npq

Applied Example: Quality Control

PAR Bearings manufactures ball bearings packaged in lots of

100 each.

The company’s quality-control department has determined

that 2% of the ball bearings manufactured do not meet

specifications imposed by a buyer.

Find the average number of ball bearings per package that

fail to meet the buyer’s specification.

Solution

Since this is a binomial experiment, the average number of

ball bearings per package that fail to meet the specifications

is given by the expected value of the associated binomial

random variable.

Thus, we expect to find

E ( X ) np (100)(.02) 2

substandard ball bearings in a package of 100.

Applied Example 7, page 459

8.5

The Normal Distribution

y

y f ( x)

Area is

.9545

– 2

+ 2

x

Probability Density Functions

In this section we consider probability distributions

associated with a continuous random variable:

✦ A random variable that may take on any value lying in

an interval of real numbers.

Such probability distributions are called continuous

probability distributions.

A continuous probability distribution is defined by a

function f whose domain coincides with the interval of

values taken on by the random variable associated with

the experiment.

Such a function f is called the probability density function

associated with the probability distribution.

Properties of a Probability Density Function

The properties of a probability density function are:

✦ f(x) is nonnegative for all values of x.

✦ The area of the region between the graph of f and

the x-axis is equal to 1.

For example:

y

y f ( x)

Area = 1

x

Properties of a Probability Density Function

Given a continuous probability distribution defined by a

probability density function f, the probability that the

random variable X assumes a value in an interval

a < x < b is given by the area of the region between the

graph of f and the x-axis, from x = a to x = b:

y

y f ( x)

Area = P(a < x < b)

a

b

x

Properties of a Probability Density Function

The mean and the standard deviation of a continuous

probability distribution have roughly the same meaning as

the mean and standard deviation of a finite probability

distribution.

The mean of a continuous probability distribution is a

measure of the central tendency of the probability

distribution, and the standard deviation measures its

spread about the mean.

Normal Distributions

A special class of continuous probability distributions is

known as normal distributions.

The normal distributions are without doubt the most

important of all the probability distributions.

There are many phenomena with probability

distributions that are approximately normal:

✦ For example, the heights of people, the weights of

newborn infants, the IQs of college students, the actual

weights of 16-ounce packages of cereal, and so on.

The normal distribution also provides us with an accurate

approximation to the distributions of many random

variables associated with random-sampling problems.

Normal Distributions

y

y f ( x)

x

The graph of a normal distribution is bell shaped and is

called a normal curve.

The curve has a peak at x = .

The curve is symmetric with respect to x = .

Normal Distributions

y

y f ( x)

Area = 1

x

The curve always lies above the x-axis but approaches the

x-axis as x extends indefinitely in either direction.

The area under the curve is 1.

Normal Distributions

y

y f ( x)

Area is

.6827

–

+

For any normal curve, 68.27% of the area under the

curve lies within 1 standard deviation.

x

Normal Distributions

y

y f ( x)

Area is

.9545

– 2

+ 2

For any normal curve, 95.45% of the area under the

curve lies within 2 standard deviations.

x

Normal Distributions

y

y f ( x)

Area is

.9973

– 3

+ 3

For any normal curve, 99.73% of the area under the

curve lies within 3 standard deviations.

x

Normal Distributions

y

1

2

3

4

A normal distribution is completely described by the

x

mean and the standard deviation :

✦ The mean of a normal distribution determines where

the center of the curve is located.

Normal Distributions

1

2

3

4

y

A normal distribution is completely described by the

mean and the standard deviation :

✦ The standard deviation of a normal distribution

determines the sharpness (or flatness) of the curve.

x

Normal Distributions

There are infinitely many normal curves corresponding

to different means and standard deviations .

Fortunately, any normal curve may be transformed into

any other normal curve, so in the study of normal curves

it is enough to single out one such particular curve for

special attention.

The normal curve with mean = 0 and standard

deviation = 1 is called the standard normal curve.

The corresponding distribution is called the standard

normal distribution.

The random variable itself is called the standard normal

variable and is commonly denoted by Z.

Examples

Let Z be the standard normal variable. Make a sketch of

the appropriate region under the standard normal curve,

and find the value of P(Z < 1.24).

Solution

The region under the standard normal curve associated

with the probability P(Z < 1.24) is

y

0

Example 1, page 465

1.24

z

Examples

Let Z be the standard normal variable. Make a sketch of

the appropriate region under the standard normal curve,

and find the value of P(Z < 1.24).

Solution

Use Table 2 in Appendix B to find the area of the required

region:

✦ We find the z value of 1.24 in the table by first locating

the number 1.2 in the column and then locating the

number 0.04 in the row, both headed by z.

✦ We then read off the number 0.8925 appearing in the

body of the table, on the found row and column that

correspond to z = 1.24.

Example 1, page 465

Examples

Let Z be the standard normal variable. Make a sketch of

the appropriate region under the standard normal curve,

and find the value of P(Z < 1.24).

Solution

Thus, the area of the required region under the curve

is .8925, and we find that P(Z < 1.24) = 0.8925.

y

Area is

.8925

0

Example 1, page 465

1.24

z

Examples

Let Z be the standard normal variable. Make a sketch of

the appropriate region under the standard normal curve,

and find the value of P(Z > 0.5).

Solution

The region under the standard normal curve associated

with the probability P(Z > 0.5) is

y

0 0.5

Example 1, page 465

z

Examples

Let Z be the standard normal variable. Make a sketch of

the appropriate region under the standard normal curve,

and find the value of P(Z > 0.5).

Solution

Since the standard normal curve is symmetric, the

required area is equal to the area to the left of z = – 0.5, so

P(Z > 0.5) = P(Z < – 0.5)

y

– 0.5 0

Example 1, page 465

z

Examples

Let Z be the standard normal variable. Make a sketch of

the appropriate region under the standard normal curve,

and find the value of P(Z > 0.5).

Solution

Using Table 2 in Appendix B as before to find the area of

the required region we find that

P(Z > 0.5) = P(Z < – 0.5) = .3085

y

Area is

.3085

– 0.5 0

Example 1, page 465

z

Examples

Let Z be the standard normal variable. Make a sketch of

the appropriate region under the standard normal curve,

and find the value of P(0.24 < Z < 1.48).

Solution

The region under the standard normal curve associated

with the probability P(0.24 < Z < 1.48) is

y

P(0.24 < Z < 1.48)

0 0.24 1.48

Example 1, page 465

z

Examples

Let Z be the standard normal variable. Make a sketch of

the appropriate region under the standard normal curve,

and find the value of P(0.24 < Z < 1.48).

Solution

This area is obtained by subtracting the area under the

curve to the left of z = 0.24 from the area under the curve

to the left of z = 1.48:

y

P(Z < 1.48)

0 0.24 1.48

Example 1, page 465

z

Examples

Let Z be the standard normal variable. Make a sketch of

the appropriate region under the standard normal curve,

and find the value of P(0.24 < Z < 1.48).

Solution

This area is obtained by subtracting the area under the

curve to the left of z = 0.24 from the area under the curve

to the left of z = 1.48:

y

P(Z < 0.24)

0 0.24 1.48

Example 1, page 465

z

Examples

Let Z be the standard normal variable. Make a sketch of

the appropriate region under the standard normal curve,

and find the value of P(0.24 < Z < 1.48).

Solution

Thus, the required area is given by

y

P(0.24 Z 1.48) P(Z 1.48) P(Z 0.24)

.9306 .5948 .3358

Area is

.3358

0 0.24 1.48

Example 1, page 465

z

Transforming into a Standard Normal Curve

When dealing with a non-standard normal curve, it is

possible to transform such a curve into a standard

normal curve.

If X is a normal random variable with mean

and standard deviation , then it can be

transformed into the standard normal

random variable Z by substituting in

Z

X

Transforming into a Standard Normal Curve

The area of the region under the normal curve

between x = a and x = b is equal to the area of the

region under the standard normal curve between

z

a

and

z

b

Thus, in terms of probabilities we have

b

a

P ( a X b) P

Z

Transforming into a Standard Normal Curve

Similarly, we have

a

P( X a ) P Z

and

b

P ( X b) P Z

Transforming into a Standard Normal Curve

This transformation can be seen graphically as well.

The area of the region under a nonstandard normal curve

between a and b is equal to the area of the region under a

standard normal curve between z = (a – )/ and z = (b – )/ :

y

Nonstandard

Normal Curve

Same Area

a

b

x

Transforming into a Standard Normal Curve

This transformation can be seen graphically as well.

The area of the region under a nonstandard normal curve

between a and b is equal to the area of the region under a

standard normal curve between z = (a – )/ and z = (b – )/ :

y

Standard

Normal Curve

Same Area

a

0

b

x

Example

If X is a normal random variable with

= 100 and = 20,

find the values of P(X < 120), P(X > 70), and P(75 < X <110).

Solution

For the case of P(X < 120), we use the formula

b

P ( X b) P Z

with = 100, = 20, and b = 120, which gives us

120 100

P( X 120) P Z

20

P( Z 1)

=.8413

Example 3, page 468

Example

If X is a normal random variable with

= 100 and = 20,

find the values of P(X < 120), P(X > 70), and P(75 < X <110).

Solution

For the case of P(X > 70), we use the formula

a

P( X a ) P Z

with = 100, = 20, and a = 70, which gives us

70 100

P( X 70) P Z

20

P( Z 1.5)

P( Z 1.5)

=.9332

Example 3, page 468

Example

If X is a normal random variable with

= 100 and = 20,

find the values of P(X < 120), P(X > 70), and P(75 < X <110).

Solution

Finally, for the case of P(75 < X <110), we use the formula

b

a

P ( a X b) P

Z

with = 100, = 20, a = 75, and b = 110, which gives us

110 100

75 100

P(75 X 110) P

Z

20

20

P( 1.25 Z 0.5)

P( Z 0.5) P( Z 1.25)

.6915 .1056 .5859

Example 3, page 468

8.6

Applications of the Normal Distribution

.20

.15

.10

.05

9.5

0

1

2 3 4 5 6 7 8 9 10

12

14

16

x 10

18

20

x

Applied Example: Birth Weights of Infants

The medical records of infants delivered at the Kaiser

Memorial Hospital show that the infants’ birth weights in

pounds are normally distributed with a mean of 7.4 and a

standard deviation of 1.2.

Find the probability that an infant selected at random

from among those delivered at the hospital weighed more

than 9.2 pounds at birth.

Applied Example 1, page 471

Applied Example: Birth Weights of Infants

Solution

Let X be the normal random variable denoting the birth

weights of infants delivered at the hospital.

Then, we can calculate the probability that an infant selected

at random has a birth weight of more than 9.2 pounds by

setting = 7.4, = 1.2, and a = 9.2 in the formula

a

P( X a ) P Z

to find

9.2 7.4

P ( X 9.2) P Z

P ( Z 1.5)

1.2

P ( Z 1.5) .0668

Thus, the probability that an infant delivered at the hospital

weighs more than 9.2 pounds is .0668.

Applied Example 1, page 471

Approximating Binomial Distributions

One important application of the normal distribution is

that it can be used to accurately approximate other

continuous probability distributions.

As an example, we will see how a binomial distribution

may be approximated by a suitable normal distribution.

This provides a convenient and simple solution to certain

problems involving binomial distributions.

Approximating Binomial Distributions

Recall that a binomial distribution is a probability

distribution of the form

P( X x ) C (n, x) p x qn x

For small values of n, the arithmetic computations may be

done with relative ease. However, if n is large, then the

work involved becomes overwhelming, even when tables of

P(X = x) are available.

Approximating Binomial Distributions

To see how a normal distribution can help in such

situations, consider a coin-tossing experiment.

Suppose a fair coin is tossed 20 times and we wish to

compute the probability of obtaining 10 or more heads.

The solution to this problem may be obtained, of course,

by laboriously computing

P( X 10) P( X 10) P( X 11) P( X 20)

As an alternative solution, let’s begin by interpreting the

solution in terms of finding the areas of rectangles in the

histogram.

Approximating Binomial Distributions

We may calculate the probability of

obtaining exactly x heads in 20 coin

tosses with the formula

P( X x ) C (n, x) p x qn x

The results lead to the binomial

distribution shown in the table to

the right.

x

0

1

2

3

4

5

6

7

8

9

10

11

12

20

P(X = x)

.0000

.0000

.0002

.0011

.0046

.0148

.0370

.0739

.1201

.1602

.1762

.1602

.1201

.0000

Approximating Binomial Distributions

Using the data from the table, we may construct a

histogram for the distribution:

.20

.15

.10

.05

0

1

2 3 4 5 6 7 8 9 10

12

14

16

18

20

x

Approximating Binomial Distributions

The probability of obtaining 10 or more heads in 20 coin

tosses is equal to the sum of the areas of the blue shaded

rectangles of the histogram of the binomial distribution:

.20

.15

.10

.05

0

1

2 3 4 5 6 7 8 9 10

12

14

16

18

20

x

Approximating Binomial Distributions

Note that the shape of the histogram suggests that the

binomial distribution under consideration may be

approximated by a suitable normal distribution.

.20

.15

.10

.05

0

1

2 3 4 5 6 7 8 9 10

12

14

16

18

20

x

Approximating Binomial Distributions

The mean and standard deviation of the binomial

distribution in this problem are given, respectively, by

np

(20)(.5)

10

npq

(20)(.5)(.5)

2.24

Thus, we should choose a normal curve for this purpose

with a mean of 10 and a standard deviation of 2.24.

Approximating Binomial Distributions

Superimposing on the histogram a normal curve with a

mean of 10 and a standard deviation of 2.24 clearly gives

us a good fit:

.20

.15

.10

.05

0

1

2 3 4 5 6 7 8 9 10

12

14

16

18

20

x

Approximating Binomial Distributions

The good fit suggests that the sum of the areas of the

rectangles representing P(X = x), the probability of obtaining

10 or more heads in 20 coin tosses, may be approximated by

the area of an appropriate region under the normal curve.

.20

.15

.10

.05

0

1

2 3 4 5 6 7 8 9 10

12

14

16

18

20

x

Approximating Binomial Distributions

To determine this region, note that the base of the portion of

the histogram representing the required probability extends

from x = 9.5 on, since the base of the leftmost rectangle is

centered on 10:

.20

.15

.10

.05

9.5

0

1

2 3 4 5 6 7 8 9 10

12

14

16

x 10

18

20

x

Approximating Binomial Distributions

Therefore, the required region under the normal curve

should also have x 9.5.

Letting Y denote the continuous normal variable, we obtain

P( X 10) P(Y 9.5)

P(Y 9.5)

9.5 10

PZ

2.24

P( Z 0.22)

= P ( Z 0.22)

.5871

Approximating Binomial Distributions

The exact value can be found by computing

P( X 10) P( X 10) P( X 11) P( X 20)

in the usual (time-consuming) fashion.

Using this method yields a probability of .5881, which is not

very different from the approximation of .5871 obtained

using the normal distribution.

Theorem 1

Suppose we are given a binomial distribution

associated with a binomial experiment

involving n trials, each with a probability of

success p and a probability of failure q.

Then, if n is large and p is not close to 0 or 1,

the binomial distribution may be

approximated by a normal distribution with

np

and

npq

Applied Example: Quality Control

An automobile manufacturer receives the

microprocessors used to regulate fuel consumption in its

automobiles in shipments of 1000 each from a certain

supplier.

It has been estimated that, on the average, 1% of the

microprocessors manufactured by the supplier are

defective.

Determine the probability that more than 20 of the

microprocessors in a single shipment are defective.

Applied Example 4, page 475

Applied Example: Quality Control

Solution

Let X denote the number of defective microprocessors in a

single shipment.

Then X has a binomial distribution with n = 1000, p = .01,

and q = .99, so

np

(1000)(.01) 10

npq

(1000)(.01)(.99) 3.15

Applied Example 4, page 475

Applied Example: Quality Control

Solution

Approximating the binomial distribution by a normal

distribution with a mean of 10 and a standard deviation of

3.15, we find that the probability that more than 20

microprocessors in a shipment are defective is given by

P( X 20) P(Y 20.5)

20.5 10

PZ

3.15

P( Z 3.33)

P( Z 3.33)

.0004

Thus, approximately 0.04% of the shipments with 1000

microprocessors each will have more than 20 defective units.

Applied Example 4, page 475

End of

Chapter