A COMPARATIVE STUDY OF READING TEST ABOUT NEWS ITEM

advertisement

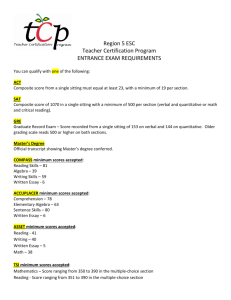

A COMPARATIVE STUDY OF READING TEST ABOUT NEWS ITEM MATERIAL USING MULTIPLE-CHOICE AND ESSAY FORMAT AT THE FIRST GRADE STUDENTS OF SMA NEGERI 1 PADALARANG IN ACADEMIC YEAR 2012/2013

Rani Ranjani Permana
ranz_myoung@yahoo.com
English Education Study Program, Language and Arts Department
STKIP Siliwangi Bandung

Abstract
The objectives of this research, entitled "A Comparative Study of Reading Test about News Item Material Using Multiple-choice and Essay Format at the First Grade Students of SMA Negeri 1 Padalarang in Academic Year 2012/2013", were 1) to determine whether there is a significant difference between students' scores on the multiple-choice and essay tests; 2) to find out the factors that cause the significant difference; 3) to find out the index of difficulty or facility value (FV); and 4) to find out the index of discrimination (D) of the multiple-choice and essay tests. The design of this research was a causal-comparative study, and it used a quantitative research method. The instruments were the tests, an interview guide and a questionnaire. The population was the nine first grade classes, and random sampling was used to select the sample: one class of 38 students. The collected data were analyzed using the t test for non-independent means. The results showed that: 1) the observed t was 8.88 with df = 37 at the 0.001 significance level, so the alternative hypothesis was accepted because the observed t was greater than the critical t value (8.88 > 3.33); 2) the factors that influenced the difference between multiple-choice and essay scores were the test items themselves, the time at which the test was conducted, and the students themselves; 3) 45% of the multiple-choice items were easy and 55% were difficult for the students to answer, whereas 85% of the essay items were easy and 15% were difficult.
4) Based on item discrimination, 5% of the multiple-choice items had a zero discrimination index, 15% a negative discrimination index, 30% a middle discrimination index and 50% a low discrimination index, while 5% of the essay items had a negative discrimination index, 15% a middle discrimination index and 80% a low discrimination index.

Keywords: Comparative Study, Multiple-choice, Essay, Test

A. BACKGROUND
A test is a specific way to measure performance because it produces a number as the result of measuring an individual's performance. These numbers are then processed, because "measurement is a process of assigning numbers to qualities or characteristics of an object or person according to some rule or scale and analyzing that data based on psychometric and statistical theory" (Zimmaro, 2004:4). Zimmaro also explains that, after the data have been assigned and analyzed in the measurement process, they are assessed in the assessment process, that is, the process of gathering, describing or quantifying information about performance. The data are then evaluated in the evaluation process, which examines information about many components of the thing being evaluated and compares or judges its quality, worth or effectiveness in order to make decisions. Finally, after passing through the testing, measurement, assessment and evaluation processes, the learner's performance can be described as student achievement.

Two kinds of test are commonly used in language teaching: multiple-choice and essay tests. This is reflected in statements by experts: "today multiple-choice test is the most common and widely used assessment tool for the measurement of knowledge, ability and complex learning outcomes" (Annie & Alan, 2009). Besides, "since a hundred years ago, all college course exams were essay items and today many teachers still consider essay questions the preferred method of assessment" (http://cfe.unc.edu, 2012).

B. LITERATURE REVIEW
1. Test
Brown (2003) explains that a test is a method because it is an instrument (a set of techniques, procedures or items) that requires performance on the part of the test-taker. A test must measure general ability or very specific competencies or objectives of an individual's ability, knowledge or performance. In language testing, a performance test measures one's ability to perform language, such as speaking, writing, reading or listening to a subset of language. Finally, a test has to measure a given domain, that is, it must measure only the desired criterion and must not inadvertently include other factors.

2. Multiple-choice Items
Cunningham (2005:76) defines multiple-choice items as one type of objective response item (conventional tests), which usually has four options, although three or five options may be provided. Burton et al. (1991) state that a multiple-choice item consists of two basic parts: a problem (the stem) and a list of suggested solutions (the alternatives). They also explain that the stem may be either a question or an incomplete statement, and that the list of alternatives contains one correct or best alternative (the answer) and a number of incorrect or inferior alternatives (the distracters).

3. Essay Items
According to Cunningham (2005:76), essay items are one type of constructed-response item that places more emphasis on style, the mechanics of writing and other considerations, allowing students to demonstrate their knowledge of a topic or their ability to perform cognitive tasks. He also notes that an essay item requires the student to construct a longer response than a short-answer item does; its length can range from a paragraph to an answer of several pages.

Based on these definitions, the writer wanted to know whether there is a significant difference between multiple-choice and essay scores, in order to test the findings of Hickson & Reed (2009), who claim that "multiple-choice questions give higher scores than essay questions" and that "multiple-choice scores have mean scores higher than essay scores".

4. Item Analysis
Item analysis is needed to analyze test questions because, following Heaton (1990), all items should be examined from the point of view of (a) their difficulty, using the index of difficulty or facility value (FV), and (b) their level of discrimination, using the index of discrimination (D).

a. The Index of Difficulty or Facility Value (FV)
Heaton (1990:178) explains that "the index of difficulty (or facility value) of an item simply shows how easy or difficult the particular item proved in the test". The formula for the index of difficulty or facility value (FV) is:

$$FV = \frac{R}{N}$$

Note:
~ FV = facility value or index of difficulty
~ R = the number of correct answers
~ N = the number of students taking the test

b. The Index of Discrimination (D)
Based on the same source, Heaton (1990:179) explains that the index of discrimination (D) tells us whether those students who performed well on the whole test tended to do well or badly on each item in the test. The formula for the index of discrimination (D) is:

$$D = \frac{\text{Correct}_U - \text{Correct}_L}{n}$$

Note:
~ n = the number of candidates in either the upper or the lower group (n = ½N)
~ N = the number in the whole group, as used previously in the facility value (FV)
~ U = the upper group: the half of the students (19 of the 38 in this study) who got the higher scores
~ L = the lower group: the half of the students who got the lower scores

C. RESEARCH METHODOLOGY
1. Research Method
Regarding the main objective of the study, the writer used quantitative research, specifically a causal-comparative (ex post facto) study, because the writer systematically compared two sets of scores from the same group of students (matched-pairs subjects) in order to answer the research problems and to find out the cause of the significant difference between multiple-choice and essay scores on a reading test about news item material. The data were observed and collected directly at the research site: the first grade of SMA Negeri 1 Padalarang in academic year 2012/2013, in one class (X1) of thirty-eight students. Further data came from a questionnaire filled in by all of the students and from interview sessions that the writer held with three English teachers.

2. Instrument of the Research
This research analyzed the students' comprehension by administering an achievement test: a reading test about news item material in multiple-choice and essay formats, given to first grade students of SMA Negeri 1 Padalarang. After the test data had been collected, the students' scores were examined with item analysis based on item difficulty and level of discrimination. An interview session was used to collect data from the English teachers, and a questionnaire was used to collect data from the students to support the test and interview data.

3. Research Population and Samples
The research took place at SMA Negeri 1 Padalarang and covers the first grade students. The samples are the thirty-eight first grade students of class X1 and three English teachers.

4. Data Collection
The data were collected using three techniques: test, interview and questionnaire. The test was the main instrument for gaining detailed information. Each test consisted of twenty questions, divided among four news item texts, in multiple-choice and essay format respectively. The interview guide for the English teachers consisted of three questions about multiple-choice and essay tests, and the questionnaire for the students who took the tests also consisted of three questions about multiple-choice and essay tests.

5. Data Analysis
The data collected from the test, the interview session and the questionnaire were analyzed in several steps:
a. The test data were analyzed using the t test for non-independent means and item analysis (item difficulty, FV, and item discrimination, D).
b. The interview data were analyzed by generating natural units of meaning (delimiting the topic into categories); classifying, categorizing and ordering these units of meaning; structuring narratives to describe the interview; and interpreting the interview data.
c. The questionnaire data were analyzed by data reduction and data editing.

6. Research Procedures
The writer divided the procedures of this research into three days of observation.
1. On the first day, the writer gave the multiple-choice test on news item material, consisting of twenty questions, to the thirty-eight students in class X1, together with the questionnaire about multiple-choice and essay tests.
2. On the second day, the writer gave the essay test on news item material, consisting of twenty questions, to the thirty-eight students in class X1.
3. On the third day, the writer interviewed the three English teachers, asking three questions about multiple-choice and essay tests.

D. RESEARCH FINDINGS AND DISCUSSIONS
A. Research Findings
Based on the data collected in this research, several findings can be reported.

1. Means and Standard Deviations in a Paired Samples t Test for Multiple-choice and Essay Scores
The writer employed the t test for non-independent means (in Minitab 14, the paired samples test). The statistical summary of the data is presented in Table 1.

Table 1 Means and Standard Deviations in a Paired Samples t Test
Variable | Observations | Mean (M) | Minimum | Maximum | Std. Dev (SD) | SE Mean
Multiple-choice Scores | 38 | 44.60 | 25.00 | 70.00 | 10.48 | 1.70
Essay Scores | 38 | 60.42 | 28.75 | 81.25 | 11.30 | 1.83

2. The t Test
The writer employed the t test for non-independent or correlated means (called the paired t test in Minitab 14) to determine whether there is a statistically significant difference between the means of two matched or non-independent samples, that is, two groups consisting of the same people or of matched pairs (Fraenkel et al., 2012:G-9; Crowl, 1996:432). The summary of the paired samples data is presented in Table 2.

Table 2 Statistical Summary of Paired Samples Test
Pair 1: Multiple-choice & Essay Scores (Paired Differences)
Mean | Std. Dev | SE Mean | 95% CI of the Difference: Lower | 95% CI of the Difference: Upper | t | df | Sig. (2-tailed)
-15.82 | 10.98 | 1.78 | -19.43 | -12.21 | -8.88 | 37 | 0.000

3. Interview
This research employed an interview session to collect descriptive data from three English teachers at SMA Negeri 1 Padalarang. Each respondent was asked three questions specifically about multiple-choice and essay tests. The writer sought to find out 1) which test format, multiple-choice or essay, each teacher prefers for use in their school; 2) the respondents' opinions about the significant differences between multiple-choice and essay scores that they have experienced in class; and 3) which types of test, specifically multiple-choice and essay, the respondents use to evaluate their students.

4. Questionnaire
This research employed a questionnaire to collect descriptive data from the thirty-eight students of class X1 at SMA Negeri 1 Padalarang. Each respondent was given three questions specifically about multiple-choice and essay tests.

B. Research Discussion
1. Explanation of the Significant Difference between Multiple-choice and Essay Scores Based on the t Test for Non-independent or Correlated Means (Paired t Test in Minitab)
Based on Table 1, the mean of the essay scores (M = 60.42; SD = 11.30) is significantly different from the mean of the multiple-choice scores (M = 44.60; SD = 10.48) for the same group of students, who were tested on news item material in both formats. Overall, the students' essay scores were higher than their multiple-choice scores, which is why the essay mean is higher than the multiple-choice mean.

Based on Table 2, the mean difference between the multiple-choice scores (M = 44.60; SD = 10.48) and the essay scores (M = 60.42; SD = 11.30) is M = -15.82 (SD = 10.98), and the t value for the paired samples test is -8.88. The negative t value means that the essay scores (row 2) are higher than the multiple-choice scores (row 1). This is not a problem, because "a t value may be either positive or negative", depending on the arrangement of the data in the table (Crowl, 1996:180).
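As a check on Tables 1 and 2, the paired t statistic can be recomputed from the summary of the paired differences alone, because t is the mean difference divided by its standard error, SD / √n. The short Python sketch below does this under stated assumptions: the helper name `paired_t_from_summary` is hypothetical, and the value 2.026 for t(0.975, df = 37) is an assumed reading from a standard t table, not a figure reported in this study. The critical value 3.326 is the one quoted in the discussion.

```python
import math


def paired_t_from_summary(mean_diff: float, sd_diff: float, n: int) -> tuple[float, float, int]:
    """Return (standard error, t statistic, degrees of freedom) for a paired
    t test, using only the mean and SD of the paired differences."""
    se = sd_diff / math.sqrt(n)  # standard error of the mean difference
    t = mean_diff / se           # t = mean difference / standard error
    return se, t, n - 1          # df = number of pairs - 1


# Paired-difference summary from Table 2 (multiple-choice minus essay).
mean_diff, sd_diff, n = -15.82, 10.98, 38
se, t, df = paired_t_from_summary(mean_diff, sd_diff, n)

# Assumption: t(0.975, df = 37) taken as 2.026 from a standard t table.
t_975 = 2.026
lower, upper = mean_diff - t_975 * se, mean_diff + t_975 * se

# Critical value for df = 37 quoted in the discussion.
t_critical = 3.326

print(f"SE = {se:.2f}, t = {t:.2f}, df = {df}")
print(f"95% CI = ({lower:.2f}, {upper:.2f})")
print("significant" if abs(t) > t_critical else "not significant")

# The same SD / sqrt(n) relation reproduces the SE Mean column of Table 1.
for label, sd in (("Multiple-choice", 10.48), ("Essay", 11.30)):
    print(f"{label} SE Mean = {sd / math.sqrt(n):.2f}")
```

Running it prints SE = 1.78, t = -8.88, df = 37, a 95% interval of (-19.43, -12.21) and "significant", which agrees with Table 2, followed by SE Means of 1.70 and 1.83, which agree with Table 1. Working from the summary statistics is a deliberate choice here, because the individual students' scores are not reported.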
Finally, this is evidence of a statistically significant difference between the mean multiple-choice and essay scores of the same sample (thirty-eight students in paired samples): the critical t values for df = 37 are 3.3 (more precisely 3.326) and 3.5 (more precisely 3.474), and the absolute value of the obtained t, 8.88, is higher than both.

2. Explanation of Item Difficulty or Index of Facility Value (FV) of Multiple-choice and Essay Items
Based on Table 3, the writer classified the multiple-choice items into two groups. First, only 9 of the 20 items (45%: items 2, 7, 8, 10, 14, 15, 17, 18 and 19) were easy for the students to answer. Second, the other 11 items (55%) were difficult for the students to answer. Based on Table 4, the writer also classified the essay items into two groups. First, only 3 of the 20 items (15%: items 4, 11 and 13) were difficult for the students to answer. Second, the other 17 items (85%) were easy for the students to answer.

Based on Table 3, the writer classified the multiple-choice items into four groups by discrimination index: 1 item (5%, item 8) has a zero discrimination index; 3 items (15%, items 6, 13 and 20) have a negative discrimination index; 6 items (30%, items 3, 4, 7, 11, 14 and 15) have a middle discrimination index; and the remaining 10 items (50%) have a low discrimination index. Based on Table 4, the writer classified the essay items into three groups: 1 item (5%, item 6) has a negative discrimination index; 3 items (15%, items 3, 12 and 18) have a middle discrimination index; and the remaining 16 items (80%) have a low discrimination index. The details are presented in Tables 3 and 4.

Table 3 Item Difficulty or Index of Facility Value (FV) and Index of Discrimination (D) of Multiple-choice Items
Item | U+L | FV | U-L | D
1 | 3 | 0.08 | 1 | 0.05
2 | 31 | 0.82 | 1 | 0.05
3 | 9 | 0.24 | 7 | 0.37
4 | 10 | 0.26 | 8 | 0.42
5 | 5 | 0.13 | 3 | 0.16
6 | 7 | 0.18 | -1 | -0.05
7 | 20 | 0.53 | 8 | 0.42
8 | 26 | 0.68 | 0 | 0
9 | 3 | 0.08 | 1 | 0.05
10 | 37 | 0.97 | 1 | 0.05
11 | 13 | 0.34 | 7 | 0.37
12 | 12 | 0.32 | 4 | 0.21
13 | 1 | 0.03 | -1 | -0.05
14 | 30 | 0.79 | 6 | 0.32
15 | 28 | 0.74 | 8 | 0.42
16 | 9 | 0.24 | 3 | 0.16
17 | 27 | 0.71 | 3 | 0.16
18 | 33 | 0.87 | 5 | 0.26
19 | 26 | 0.68 | 2 | 0.11
20 | 11 | 0.29 | -1 | -0.05

Table 4 Item Difficulty or Index of Facility Value (FV) and Index of Discrimination (D) of Essay Items
Item | U+L | FV | U-L | D
1 | 23.50 | 0.62 | 2.00 | 0.11
2 | 16.00 | 0.42 | 0.50 | 0.03
3 | 17.75 | 0.47 | 6.25 | 0.33
4 | 12.00 | 0.32 | 2.50 | 0.13
5 | 24.25 | 0.64 | 1.75 | 0.09
6 | 33.25 | 0.88 | -0.25 | -0.01
7 | 28.50 | 0.75 | 1.00 | 0.05
8 | 33.25 | 0.88 | 4.75 | 0.25
9 | 18.25 | 0.48 | 2.75 | 0.14
10 | 26.75 | 0.70 | 4.25 | 0.22
11 | 13.50 | 0.36 | 4.50 | 0.24
12 | 25.25 | 0.66 | 6.25 | 0.33
13 | 14.00 | 0.37 | 1.50 | 0.08
14 | 25.50 | 0.67 | 5.50 | 0.29
15 | 25.50 | 0.67 | 2.50 | 0.13
16 | 20.00 | 0.53 | 0.50 | 0.03
17 | 26.00 | 0.68 | 2.00 | 0.11
18 | 27.00 | 0.71 | 8.00 | 0.42
19 | 29.75 | 0.78 | 2.25 | 0.12
20 | 19.25 | 0.51 | 2.75 | 0.14

3. Interpretation of the Results of the Interview Session with Teachers
This research used an interview session to collect descriptive data from three English teachers at SMA Negeri 1 Padalarang. Each respondent was asked three questions specifically about multiple-choice and essay tests. The writer sought to find out which of the two formats each teacher prefers for use in their school, the respondents' opinions about the significant differences between multiple-choice and essay scores that they have experienced in class, and which types of test, specifically multiple-choice and essay, the respondents use to evaluate their students. After transcribing, analyzing and verifying the interview data from the three respondents, the writer summarized the results in Table 5.

Table 5 Descriptions of Interview Contents
Multiple-choice Test
1. The respondents chose the multiple-choice test because this format offers advantages not only for the students but also for the teacher as tester.
2. For the students, the options can help them when they do not know the answer or are confused by a question (although this is also a disadvantage of multiple-choice tests, since students may guess or choose an answer at random).
3. Multiple-choice tests also train analytical thinking, because to answer correctly the students have to think analytically to choose the right answer among the options provided.
4. The teacher also benefits when checking the students' answers, because correcting a multiple-choice test does not take long.
5. Multiple-choice tests can also produce higher scores, but this depends on the number of questions in the test, the number of points given for each item, and whether the scores are combined with essay scores or other scores from the same test.
6. Multiple-choice tests can give students higher scores if the material in the test was given to them previously, even in a different test format, because the students already understand and know the material.
Essay Test
1. The respondents chose the essay test because this format offers advantages not only for the students but also for the teacher as tester.
2. For the students, an essay test lets them express freely their opinions or their comprehension of the material they have studied (although this does not help students who are unable to express their opinions in writing).
3. An essay test also gives the teacher the opportunity to measure the students' writing ability and makes it harder for students to cheat during the test (although checking and correcting all of the students' answers takes a long time).
4. Both students and teachers mostly believe that essay tests give higher scores, because each item carries more points than a multiple-choice item (although an essay test consists of only a few items).

4. Interpretation of the Results of the Questionnaire from Students
After reducing and editing the questionnaire data, which are presented in Table 4.12, the writer summarized them in three pie charts, each describing the responses to one questionnaire question.

a. Students' Preference Tests
Based on the questionnaire data, 55% of the students in class X1 chose the multiple-choice test as their preferred test, 32% chose the essay test, and 13% chose both multiple-choice and essay tests. The details are presented in Chart 1.
Chart 1 Percentage of Students' Preference Tests

b. Students' Preference Tests that Produce Higher Scores
Based on the questionnaire data, when multiple-choice and essay tests are compared, 63% of the students in class X1 chose the essay test as the test that gives higher scores, 18% chose the multiple-choice test, and 18% chose both multiple-choice and essay tests. The details are presented in Chart 2.
Chart 2 Percentage of Tests that Produce Higher Scores

c. Students' Suggested Tests for the Teacher
Based on the questionnaire data, 50% of the students in class X1 suggested that the teacher give both multiple-choice and essay tests, 37% suggested the essay test, and 13% suggested the multiple-choice test. The details are presented in Chart 3.
Chart 3 Percentage of Students' Suggested Tests for the Teacher

E. CONCLUSIONS AND SUGGESTIONS
A. Conclusions
1. There is a significant difference between the multiple-choice mean (M = 44.60) and the essay mean (M = 60.42), calculated with the t test for non-independent or correlated means (the paired t test in Minitab): the mean difference is -15.82 and the observed t is -8.88, whose absolute value exceeds the critical value for df = 37 at the 0.001 significance level (t table value = 3.326).
2. The factors that influenced the difference between multiple-choice and essay scores were the test items themselves, the time at which the test was conducted, and the students themselves (as the subjects of the learning process).
3. Based on item difficulty (FV), 45% of the multiple-choice items were easy and 55% were difficult for the students to answer, while 85% of the essay items were easy and 15% were difficult.
4. Based on item discrimination (D), 5% of the multiple-choice items had a zero discrimination index, 15% a negative discrimination index, 30% a middle discrimination index and 50% a low discrimination index, while 5% of the essay items had a negative discrimination index, 15% a middle discrimination index and 80% a low discrimination index.

B. Suggestions
Based on the findings of this research, the writer proposes the following suggestions:
1. When evaluating students' comprehension of a topic, teachers should produce tests with a good proportion of items that the students can answer correctly.
2. Teachers should not rely on a single type of test but should combine at least two types of test to obtain valid measurements of their students' ability.
3. Teachers should design test items with item difficulty (FV) in a balanced proportion, such as easy items = 25%, moderate items = 50% and difficult items = 25%.
4. Teachers should design tests that discriminate well, using the index of discrimination (D) to distinguish between above-average and below-average students.
5. Future research should make the analysis of each kind of test its focus.

BIBLIOGRAPHY
Annie, W. Y. N. and Alan, H. S. C. (2009). "Different Methods of Multiple-Choice Test: Implications and Design for Further Research". Journal of the International Multi Conference of Engineers and Computer Scientists (Vol. II), pp. 18-20.
Brown, H. D. (2003). Language Assessment: Principles and Classroom Practices. California: Longman.
Burton, S. J. et al. (1991). "How to Prepare Better Multiple-Choice Test Items: Guidelines for University Faculty". Journal of Brigham Young University Testing Services and The Department of Instructional Science, pp. 1-33.
Cohen, L. et al. (2007). Research Methods in Education (Sixth Edition). New York: Taylor & Francis e-Library.
Crowl, T. K. (1996). Fundamentals of Educational Research (Second Edition). Dubuque: Times Higher Education Group Inc.
Cunningham, G. K. (2005). Assessment in the Classroom: Constructing and Interpreting Tests. London: Falmer Press.
Doddy, A. et al. (2008). Developing English Competencies for Senior High School Grade X. Jakarta: Setia Purna Invest.
Fraenkel, J. R. et al. (2012). How to Design and Evaluate Research in Education (Eighth Edition). New York: McGraw Hill.
Fulcher, G. (2010). Practical Language Testing. London: Hodder Education.
Heaton, J. B. (1990). Writing English Language Tests. New York: Longman Inc.
Hickson, S. and Reed, W. R. (2009). "Do Essay and Multiple-choice Questions Measure the Same Thing?". March (3rd), p. 127.
Hughes, A. (2003). Testing for Language Teachers (Second Edition). Cambridge: Cambridge University Press.
Karimi, L. and Mehrdad, A. G. (2012). "Investigating Administered Essay and Multiple-choice Tests in the English Department of Islamic Azad University, Hamedan Branch". Journal of Canadian Center of Science and Education, Vol. 2 (3), pp. 69-76.
Kuechler, W. L. and Simkin, M. G. "How Well Do Multiple Choice Tests Evaluate Student Understanding in Computer Programming Classes?". Journal of Information Systems Education, Vol. 14 (4), pp. 389-399.
Kusumawardhani, S. (2012). "The Effect of Test using English on Students' Achievement in Mathematics Formative Test". Apple3l of Journal, Vol. 1, pp. 66-79.
Meyers, J. L. et al. (2010). "Performance of Ability Estimation Methods for Writing Assessments under Conditions of Multidimensionality". Journal of National Council on Measurement in Education, May, pp. 1-45.
Ramraje, S. N. (2011). "Comparison of the Effect of Post-instruction Multiple-choice and Short-answer Tests on Delayed Retention Learning". Journal of Australasian Medical, 4 (6), pp. 332-339.
University of North Carolina at Chapel Hill. (2012). "Writing and Grading Essay Questions". March. Retrieved from http://cfe.unc.edu.
Walstad, W. and Becker, W. (1994). "Achievement Differences on Multiple-Choice and Essay Tests in Economics". Journal of CBA Faculty Publications. Retrieved from http://digitalcommons.unl.edu/cbafacpub/34.
Webster, N. (1979). Webster's Deluxe Unabridged Dictionary. USA: Simon & Schuster, a division of Gulf and Webster Corporation.
Zimmaro, D. M. (2004). "Writing Good Multiple-Choice Exams". Journal of the Division of Instructional Innovation and Assessment, August, pp. 1-40.