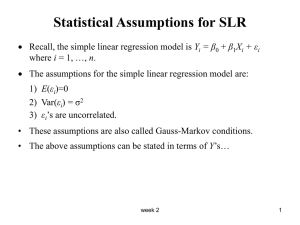

Heteroskedasticity

Outline

1) What is it?
2) What are the consequences for our Least Squares estimator when we have heteroskedasticity?
3) How do we test for heteroskedasticity?
4) How do we correct a model that has heteroskedasticity?

What is Heteroskedasticity?

Review the assumptions of Gauss-Markov:

1. Linear regression model: $y = \beta_1 + \beta_2 x + e$
2. The error term has a mean of zero: $E(e) = 0$, so that $E(y) = \beta_1 + \beta_2 x$
3. The error term has constant variance: $\mathrm{Var}(e) = E(e^2) = \sigma^2$. This is the assumption of a homoskedastic error.
4. The error term is not correlated with itself (no serial correlation): $\mathrm{Cov}(e_i, e_j) = E(e_i e_j) = 0$ for $i \neq j$
5. Data on X are not random and thus are uncorrelated with the error term: $\mathrm{Cov}(X, e) = E(Xe) = 0$

A homoskedastic error is one that has constant variance. A heteroskedastic error is one that has a nonconstant variance.

Heteroskedasticity is more commonly a problem for cross-section data sets, although a time-series model can also have a non-constant variance.

[Figure: conditional densities $f(y|x)$ drawn at $x_1$, $x_2$, $x_3$ along the x axis]

This diagram shows a non-constant variance for the error term that appears to increase as X increases. There are other possibilities. In general, any error that has a non-constant variance is heteroskedastic.

What are the Implications for Least Squares?

We have to ask "where did we use the assumption?" or "why was the assumption needed in the first place?"

We used the assumption in the derivation of the variance formulas for the least squares estimators, $b_1$ and $b_2$. For $b_2$ it was:

$$b_2 = \beta_2 + \sum w_t e_t$$

$$\mathrm{Var}(b_2) = \mathrm{Var}\left(\beta_2 + \sum w_t e_t\right) = \sum w_t^2 \mathrm{Var}(e_t) = \sum w_t^2 \sigma_t^2 = \frac{\sigma^2}{\sum (x_t - \bar{x})^2}$$

This last step uses the assumption that $\sigma_t^2$ is a constant $\sigma^2$.
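The algebra behind this variance formula can be checked numerically. The following is a minimal sketch in Python with NumPy (a swapped-in tool, not the SAS used in these notes). The data, the parameter values `beta1`, `beta2`, `sigma`, and all variable names are hypothetical and chosen only for illustration. It assumes the least squares weights are $w_t = (x_t - \bar{x}) / \sum (x_t - \bar{x})^2$.

```python
import numpy as np

# Hypothetical data: 40 observations with a constant error variance.
rng = np.random.default_rng(0)
x = rng.uniform(100.0, 1200.0, size=40)      # hypothetical regressor values
beta1, beta2, sigma = 40.0, 0.13, 30.0       # hypothetical true parameters
e = rng.normal(0.0, sigma, size=40)          # homoskedastic errors
y = beta1 + beta2 * x + e

# Least squares weights w_t = (x_t - xbar) / sum((x_t - xbar)^2).
dx = x - x.mean()
w = dx / np.sum(dx**2)

# The slope estimate, computed the usual way and as beta2 + sum(w_t * e_t).
b2 = np.sum(dx * (y - y.mean())) / np.sum(dx**2)
b2_from_weights = beta2 + np.sum(w * e)

# With a constant sigma^2, sum(w_t^2 * sigma^2) collapses to
# sigma^2 / sum((x_t - xbar)^2).
var_general = np.sum(w**2 * sigma**2)
var_formula = sigma**2 / np.sum(dx**2)

print(b2, b2_from_weights)
print(var_general, var_formula)
```

Each printed pair should agree up to floating-point rounding. The second pair agrees only because the same `sigma` multiplies every squared weight, which is exactly the role the constant-variance assumption plays in the derivation.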
If we don't make this assumption, then the formula is:

$$\mathrm{Var}(b_2) = \mathrm{Var}\left(\beta_2 + \sum w_t e_t\right) = \sum w_t^2 \mathrm{Var}(e_t) = \sum w_t^2 \sigma_t^2 = \frac{\sum (x_t - \bar{x})^2 \sigma_t^2}{\left[\sum (x_t - \bar{x})^2\right]^2}$$

Remember:

$$w_t = \frac{x_t - \bar{x}}{\sum (x_t - \bar{x})^2}$$

Therefore, if we ignore the problem of a heteroskedastic error and estimate the variance of $b_2$ using the constant-variance formula $\sigma^2 / \sum (x_t - \bar{x})^2$, when in fact we should have used the general formula above, then our estimates of the variance of $b_2$ are wrong. Any hypothesis tests or confidence intervals based on them will be invalid.

However, $E(b_2) = \beta_2$. (Verify that the proof of unbiasedness did not use the assumption of a homoskedastic error.)

How do We Test for a Heteroskedastic Error?

1) Visual inspection of the residuals: Because we never observe actual values for the error term, we never know for sure whether it is heteroskedastic or not. However, we can run a least squares regression and examine the residuals to see if they show a pattern consistent with a nonconstant variance.

[Figure: Food Expenditure Regression. Food expenditure plotted against weekly income, with fitted line y = 0.1283x + 40.768]

This regression resulted in the following residuals plotted against the variable X (weekly income). What do you see?

[Figure: Residual Plot. Residuals plotted against x (weekly income)]

2) Formal tests for heteroskedasticity (Goldfeld Quandt Test)

There are many different tests; we will study the Goldfeld Quandt test.

a) Examine the residuals and notice that the variance in the residuals appears to be larger for larger values of $x_t$:

$$\sigma_t^2 = \sigma^2 x_t$$

We must make some assumption about the form of the heteroskedasticity (how the variance of $e_t$ changes). For the food expenditure problem, the residuals tell us that an increasing function of $x_t$ (weekly income) is a good candidate. Other models may have a variance that is a decreasing function of $x_t$ or is a function of some variable other than $x_t$.

b) The Goldfeld Quandt Test:

• Sort the data in descending order, and then split the data in half.
• Run the regression on each half of the data.
• Use the SSE from each regression to conduct a formal hypothesis test for heteroskedasticity.
• If the error is heteroskedastic with a larger variance for the larger values of $x_t$, then we should find $\hat{\sigma}_1^2 > \hat{\sigma}_2^2$, where:

$$\hat{\sigma}_1^2 = \frac{SSE_1}{t_1 - k} = \frac{\sum \hat{e}_t^2}{t_1 - k} \qquad \hat{\sigma}_2^2 = \frac{SSE_2}{t_2 - k} = \frac{\sum \hat{e}_t^2}{t_2 - k}$$

Here $SSE_1$ comes from the regression using the subset of "large" values of $x_t$, which has $t_1$ observations, and $SSE_2$ comes from the regression using the subset of "small" values of $x_t$, which has $t_2$ observations.

c) Conducting the test:

$H_0: \sigma_1^2 = \sigma_2^2$. The error is homoskedastic, so that $\sigma_t^2 = \sigma^2$.

$H_1: \sigma_1^2 > \sigma_2^2$. The error is heteroskedastic, with $\sigma_t^2 = \sigma^2 x_t$.

$$GQ = \frac{\hat{\sigma}_1^2}{\hat{\sigma}_2^2} = \frac{SSE_1 / (t_1 - k)}{SSE_2 / (t_2 - k)}$$

It can be shown that the GQ statistic has an F-distribution with $(t_1 - k)$ d.o.f. in the numerator and $(t_2 - k)$ d.o.f. in the denominator. If $GQ > F_c$ we reject $H_0$ and conclude that the error is heteroskedastic.

Food Expenditure Example:

    /* Sort the data by descending x, because we believe that the
       error variance is increasing in x. */
    proc sort data=food;
       by descending x;
    run;

    /* First 20 observations: the observations with large values of x. */
    data food1;
       set food;
       if _n_ <= 20;
    run;

    proc reg data=food1;
       bigvalues: model y = x;
    run;

    /* Second 20 observations: the observations with small values of x. */
    data food2;
       set food;
       if _n_ >= 21;
    run;

    proc reg data=food2;
       littlevalues: model y = x;
    run;

The REG Procedure, Model: bigvalues, Dependent Variable: y

Analysis of Variance

| Source | DF | Sum of Squares | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| Model | 1 | 4756.81422 | 4756.81422 | 2.08 | 0.1663 |
| Error | 18 | 41147 | 2285.93938 | | |
| Corrected Total | 19 | 45904 | | | |

| Statistic | Value | Statistic | Value |
|---|---|---|---|
| Root MSE | 47.81150 | R-Square | 0.1036 |
| Dependent Mean | 148.32250 | Adj R-Sq | 0.0538 |
| Coeff Var | 32.23483 | | |

Parameter Estimates

| Variable | DF | Parameter Estimate | Standard Error | t Value | Pr > \|t\| |
|---|---|---|---|---|---|
| Intercept | 1 | 48.17674 | 70.24191 | 0.69 | 0.5015 |
| x | 1 | 0.11767 | 0.08157 | 1.44 | 0.1663 |

The REG Procedure, Model: littlevalues, Dependent Variable: y

Analysis of Variance

| Source | DF | Sum of Squares | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| Model | 1 | 8370.95124 | 8370.95124 | 12.27 | 0.0025 |
| Error | 18 | 12284 | 682.45537 | | |
| Corrected Total | 19 | 20655 | | | |

| Statistic | Value | Statistic | Value |
|---|---|---|---|
| Root MSE | 26.12385 | R-Square | 0.4053 |
| Dependent Mean | 112.30350 | Adj R-Sq | 0.3722 |
| Coeff Var | 23.26183 | | |

Parameter Estimates

| Variable | DF | Parameter Estimate | Standard Error | t Value | Pr > \|t\| |
|---|---|---|---|---|---|
| Intercept | 1 | 12.93884 | 28.96658 | 0.45 | 0.6604 |
| x | 1 | 0.18234 | 0.05206 | 3.50 | 0.0025 |

$$GQ = \frac{41147 / (20 - 2)}{12284 / (20 - 2)} = \frac{2285.9}{682.46} = 3.35$$

$F_c = 2.22$ (see SAS). Since $GQ > F_c$, we reject $H_0$.

How Do We Correct for a Heteroskedastic Error?

1) White Standard Errors: the correct formula for the variance of $b_2$ is:

$$\mathrm{Var}(b_2) = \frac{\sum (x_t - \bar{x})^2 \sigma_t^2}{\left[\sum (x_t - \bar{x})^2\right]^2}$$

Estimate $\sigma_t^2$ in the above formula using the squared residual for each observation as the estimate of its variance:

$$\hat{\sigma}_t^2 = \hat{e}_t^2$$

Substituting these estimates into the formula gives what is called White's estimator of the variance of $b_2$. In SAS:

    PROC REG;
       MODEL Y = X / ACOV;
    RUN;

Food Expenditure example:

White standard error: se($b_2$) = 0.0382
Typical Least Squares: se($b_2$) = 0.0305

2) Generalized Least Squares

Idea: Transform the model with a heteroskedastic error into a model with a homoskedastic error. Then do least squares.

$$y_t = \beta_1 + \beta_2 x_t + e_t \quad \text{where } \mathrm{Var}(e_t) = \sigma_t^2$$

Suppose we knew $\sigma_t$.
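Transform the model by dividing every piece of it by the standard deviation of the error:

$$\frac{y_t}{\sigma_t} = \beta_1 \frac{1}{\sigma_t} + \beta_2 \frac{x_t}{\sigma_t} + \frac{e_t}{\sigma_t}$$

This model has an error with a constant variance:

$$\mathrm{Var}\left(\frac{e_t}{\sigma_t}\right) = \frac{1}{\sigma_t^2} \mathrm{Var}(e_t) = \frac{1}{\sigma_t^2} \sigma_t^2 = 1$$

Problem: we don't know $\sigma_t$. This requires us to assume a specification for the error variance. Let's assume that the variance increases linearly with $x_t$:

$$y_t = \beta_1 + \beta_2 x_t + e_t \quad \text{where } \sigma_t^2 = \sigma^2 x_t$$

The standard deviation of the error is then $\sigma_t = \sqrt{\sigma_t^2} = \sigma \sqrt{x_t}$. Because $\sigma$ is an unknown constant, transform the model by dividing every piece of it by $\sqrt{x_t}$, the part of the standard deviation that varies across observations:

$$\frac{y_t}{\sqrt{x_t}} = \beta_1 \frac{1}{\sqrt{x_t}} + \beta_2 \frac{x_t}{\sqrt{x_t}} + \frac{e_t}{\sqrt{x_t}}$$

This new model has an error term that is the original error term divided by the square root of $x_t$. Its variance is constant:

$$\mathrm{Var}\left(\frac{e_t}{\sqrt{x_t}}\right) = \frac{1}{x_t} \mathrm{Var}(e_t) = \frac{1}{x_t} \sigma^2 x_t = \sigma^2$$

This method is called "Weighted Least Squares". It is more efficient than Least Squares:

• Least Squares gives equal weight to all observations.
• Weighted Least Squares gives each observation a weight that is inversely related to the square root of its $x_t$. Observations with large values of $x_t$, which we have assumed have a large variance, get less weight than observations with smaller values of $x_t$ when estimating the intercept and slope of the regression line.

We need to estimate this model:

$$\frac{y_t}{\sqrt{x_t}} = \beta_1 \frac{1}{\sqrt{x_t}} + \beta_2 \frac{x_t}{\sqrt{x_t}} + \frac{e_t}{\sqrt{x_t}}$$

This requires us to construct 3 new variables:

$$y_t^* = \frac{y_t}{\sqrt{x_t}} \qquad x_{1t}^* = \frac{1}{\sqrt{x_t}} \qquad x_{2t}^* = \frac{x_t}{\sqrt{x_t}}$$

We estimate this model:

$$y_t^* = \beta_1 x_{1t}^* + \beta_2 x_{2t}^* + e_t^*$$

Notice that it doesn't have an intercept.

SAS code to do Weighted Least Squares:

    /* The assignments must run inside a data step; the surrounding
       "data food; set food;" wrapper is assumed here. */
    data food;
       set food;
       ystar  = y/sqrt(x);
       x1star = 1/sqrt(x);
       x2star = x/sqrt(x);
    run;

    proc reg;
       foodgls: model ystar = x1star x2star / noint;
    run;

Dependent Variable: ystar

NOTE: No intercept in model. R-Square is redefined.

Analysis of Variance

| Source | DF | Sum of Squares | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| Model | 2 | 978.84644 | 489.42322 | 270.71 | <.000 |
| Error | 38 | 68.70197 | 1.80795 | | |
| Uncorrected Total | 40 | 1047.54841 | | | |

| Statistic | Value | Statistic | Value |
|---|---|---|---|
| Root MSE | 1.34460 | R-Square | 0.9344 |
| Dependent Mean | 4.93981 | Adj R-Sq | 0.9310 |
| Coeff Var | 27.21965 | | |

Parameter Estimates

| Variable | DF | Parameter Estimate | Standard Error | t Value | Pr > \|t\| |
|---|---|---|---|---|---|
| x1star | 1 | 31.92438 | 17.98608 | 1.77 | 0.0839 |
| x2star | 1 | 0.14096 | 0.02700 | 5.22 | <.0001 |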
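The Weighted Least Squares transformation can also be sketched outside SAS. The following Python/NumPy example is illustrative only: it is not the food expenditure data set, and the simulated data, the parameter values, and every variable name other than `ystar`, `x1star`, and `x2star` are assumptions. It builds the three transformed variables, runs least squares without an intercept, and compares the result with solving the weighted normal equations directly using weights $1/x_t$.

```python
import numpy as np

# Hypothetical data with Var(e_t) = sigma^2 * x_t, the form assumed in the notes.
rng = np.random.default_rng(1)
n = 40
x = rng.uniform(100.0, 1200.0, size=n)       # hypothetical weekly incomes
beta1, beta2, sigma = 40.0, 0.13, 1.5        # hypothetical true parameters
e = rng.normal(0.0, sigma * np.sqrt(x))      # standard deviation sigma * sqrt(x_t)
y = beta1 + beta2 * x + e

# Transformed variables, mirroring ystar, x1star, x2star in the SAS code.
ystar = y / np.sqrt(x)
x1star = 1.0 / np.sqrt(x)
x2star = x / np.sqrt(x)

# Least squares on the transformed model, with no intercept column.
Xstar = np.column_stack([x1star, x2star])
b_transformed, *_ = np.linalg.lstsq(Xstar, ystar, rcond=None)

# Equivalent route: weighted normal equations on the original variables,
# with weight 1/x_t for each observation.
X = np.column_stack([np.ones(n), x])
weights = 1.0 / x
b_weighted = np.linalg.solve(X.T @ (weights[:, None] * X), X.T @ (weights * y))

print("transformed regression:", b_transformed)
print("weighted normal equations:", b_weighted)
```

Both routes should return the same estimates of $\beta_1$ and $\beta_2$ up to rounding. That equivalence is why the method is described as weighting: dividing each observation by $\sqrt{x_t}$ before least squares is the same as minimizing the sum of squared errors with each squared error weighted by $1/x_t$.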