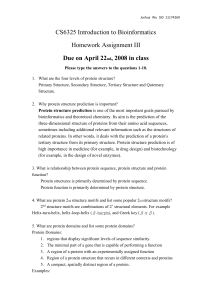

Transfer Learning and CF - Hong Kong University of Science and

advertisement

Transfer Learning

for Link Prediction

Qiang Yang, 楊強,香港科大

Hong Kong University of Science and Technology

Hong Kong, China

http://www.cse.ust.hk/~qyang

1

We often find it easier …

2

We often find it easier…

3

We often find it easier…

4

We often find it easier…

5

Transfer Learning? 迁移学习…

People often transfer knowledge to

novel situations

Chess Checkers

C++ Java

Physics Computer Science

Transfer Learning:

The ability of a system to recognize and apply knowledge

and skills learned in previous tasks to novel tasks (or new

domains)

6

But, direct application will not

work

Machine Learning:

Training and future (test) data

follow the same distribution, and

are in same feature space

7

When distributions are different

Classification Accuracy (+, -)

Training: 90%

Testing: 60%

8

When features and distributions

are different

Classification Accuracy (+, -)

Training: 90%

Testing: 10%

9

Cross-Domain Sentiment Classification

Training

Test

Classifier

DVD

82.55%

DVD

Training

Test

71.85%

Classifier

Electronics

DVD

When distributions are different

Wireless sensor networks

Different time periods,

devices or space

Night time

Day time

Device 1

Device 2

Device,

Space, or

Time

11

When Features are different

Heterogeneous: different feature spaces

Training: Text

Apples

Bananas

Future: Images

The apple is the pomaceous fruit of

the apple tree, species Malus

domestica in the rose family

Rosaceae ...

Banana is the common name for a

type of fruit and also the

herbaceous plants of the genus

Musa which produce this commonly

eaten fruit ...

12

Transfer Learning: Source

Domains

Input

Learning

Output

Source

Domains

Training Data

Test Data

Source Domain

Target Domain

Labeled/Unlabeled

Labeled/Unlabeled

Unlabeled

13

An overview of

various settings of

transfer learning

源數據

Self-taught

Learning

Case 1

No labeled data in a source domain

Inductive Transfer

Learning

目標數據

Labeled data are available in a source domain

Labeled data are available

in a target domain

Case 2

Source and

target tasks are

learnt

simultaneously

Multi-task

Learning

Transfer

Learning

Labeled data are

available only in a

source domain

No labeled data in

both source and

target domain

Transductive

Transfer Learning

Assumption:

different

domains but

single task

Domain

Adaptation

Assumption: single domain

and single task

Unsupervised

Transfer Learning

Sample Selection Bias

/Covariance Shift

Talk Outline

Transfer Learning: A quick introduction

Link prediction and collaborative filtering

problems

Transfer Learning for Sparsity Problem

Codebook Method

CST Method

Collective Link Prediction Method

Conclusions and Future Works

15

Network Data

Social Networks

Information Networks

The Web (1.0 with hyperlinks and 2.0 with

tags)

Citation network, Wikipedia, etc.

Biological Networks

Facebook, Twitter, Live messenger, etc.

Gene network, etc.

Other Networks

16

A Real World Study [Leskovec-Horvitz

WWW ‘08]

Who talks to whom on MSN messenger

Network: 240M nodes, 1.3 billion edges

Conclusions:

Average path length is 6.6

90% of nodes is reachable <8 steps

17

Network Structures: Links

Global network structures

Local network structures

Small world

Long tail distributions

Relational learning (relation==link)

Link prediction (e.g. recommendation)

Group (Cluster) structures

18

Global Network Structures

Small world

Six degrees of separation [Milgram 60s]

Clustering and communities

Evolutionary patterns

Long tail distributions

Personalized recommendation: Amazon

Also brings a challenge in recommendation

and relational learning: sparseness

19

Local Network Structures

Link Prediction

A form of Statistical Relational Learning

Object classification: predict category of an object

based on its attributes and links

Link classification: predict type of a link

Are they co-authors?

Link existence: predict whether a link exists or not

Is this person a student?

Does he like this book?

Link cardinality estimation: predict the number of

links of a node

An Introduction to statistical relational learning by Taskar and Getoor

20

Link Prediction

Task: predict missing links in a network

Main challenge: Network Data Sparsity

21

Product Recommendation

(Amazon.com)

22

22

Examples: Collaborative Filtering

1

3

1

5

3

2

4

?

3

1

?

2

3

?

4

4

Ideal

Setting

Training Data:

Dense Rating Matrix

?

?

?

Test Data:

CF model

Density >=2.0%,

RMSE:0.8721

Rating Matrix

Examples: Collaborative Filtering

1

?

5

?

3

?

4

4

?

Realistic

Setting

?

Training Data:

Sparse Rating Matrix

?

Test Data:

CF model

Rating Matrix

Density <=0.6%,

RMSE:0.9748

10%

Transfer Learning for

Collaborative Filtering?

IMDB Database

Amazon.com

26

26

Codebook Transfer

Bin Li, Qiang Yang, Xiangyang Xue.

Can Movies and Books Collaborate? Cross-Domain

Collaborative Filtering for Sparsity Reduction.

In Proceedings of the Twenty-First International Joint

Conference on Artificial Intelligence (IJCAI '09),

Pasadena, CA, USA, July 11-17, 2009.

Limitations of Codebook

Transfer

Same rating range

Homogenous dimensions

Source and target data must have the

same range of ratings [1, 5]

User and item dimensions must be similar

In reality

Range of ratings can be 0/1 or [1,5]

User and item dimensions may be very

different

37

Coordinate System Transfer

Weike Pan, Evan Xiang, Nathan Liu and Qiang Yang.

Transfer Learning in Collaborative Filtering for

Sparsity Reduction.

In Proceedings of the 24th AAAI Conference on

Artificial Intelligence (AAAI-10). Atlanta, Georgia,

USA. July 11-15, 2010.

Types of User Feedback

Explicit feedback: express preferences explicitly

1-5: Rating

?: Missing

Implicit feedback: express preferences implicitly

1: Observed browsing/purchasing

0: Unobserved browsing/purchasing

Target domain

R: explicit ratings

One auxiliary domain (two or more data sources)

: user u has implicitly browsed/purchased item j

Problem Formulation

One target domain

, n users and m items.

One auxiliary domain (two data sources)

user side:

users with R.

item side:

items with R.

, shares n common

, shares m common

Goal: predict the missing values in R.

Our Solution: Coordinate System Transfer

Step 1: Coordinate System Construction (

Step 2: Coordinate System Adaptation

)

Coordinate System Transfer

Step 1: Coordinate System Construction

Sparse SVD on auxiliary data

where the matrices

give the top d principle coordinates.

Definition (Coordinate System):

A coordinate system is a matrix with columns of orthonormal bases

(principle coordinates), where the columns are located in descending order

according to their corresponding eigenvalues.

Coordinate System Transfer

Step 1: Coordinate System Adaptation

Adapt the discovered coordinate systems from the auxiliary

domain to the target domain,

The effect from the auxiliary domain

Initialization: take

Regularization:

as seed model in target domain,

Coordinate System Transfer

Algorithm

Experimental Results

Data Sets and Evaluation Metrics

Data sets (extracted from MovieLens and Netflix)

Mean Absolute Error (MAE) and Root Mean Square Error

(RMSE),

Where

and

and

are the true and predicted ratings, respectively,

is the number of test ratings.

Experimental Results

Baselines and Parameter Settings

Baselines

Average Filling

Latent Matrix Factorization (Bell and Koren, ICDM07)

Collective Matrix Factorization (Singh and Gordon, KDD08)

OptSpace (Keshavan, Montanari and Oh, NIPS10)

Parameter settings

Tradeoff parameters

LFM: { 0.001, 0.01, 0.1, 1, 10, 100 }

CMF: { 0.1, 0.5, 1, 5, 10 }

CST:

Latent dimensions: { 5, 10, 15 }

Average results over 10 random trials are reported

Experimental Results

Results(1/2)

Observations:

CST performs 99% significantly better (t-test) than all baselines in all

sparsity levels,

Transfer learning methods (CST, CMF) beats two non-transfer learning

methods (AF, LFM).

Experimental Results

Results(2/2)

Observations:

CST consistently improves as d increases, demonstrating the usefulness

(avoid overfitting) using the auxiliary knowledge,

The improvement (CST vs. OptSpace) is more significant with fewer

observed ratings, demonstrating the effectiveness of CST in alleviating

data sparsity.

Related Work

Related Work (Transfer Learning)

Cross-domain collaborative filtering

Domain adaptation methods

One-sided:

Two-sided:

Limitation of CST and CBT

Users behave differently in different

domains

Rating bias

Users tend to rate items that they like

Our Solution: Collective Link

Prediction (CLP)

Jointly learn multiple domains together

Learning the similarity of different

domains

consistency between domains indicates

similarity.

Bin Cao, Nathan Liu and Qiang Yang.

Transfer Learning for Collective Link

Prediction in Multiple Heterogeneous

Domains.

In Proceedings of 27th International

Conference on Machine Learning (ICML

2010), Haifa, Israel. June 2010.

Recommendation with

Heterogeneous Domains

Items span multiple, heterogeneous

domains for many recommendation

systems.

ebay, GoogleReader, douban, etc.

Example: Collective Link Prediction

1

2

3

5

1

2

3

1

Sparse Training

4 Rating

Movie

Matrix

4

1

0

0

1

0

0

1

0

0

1

1

0

1

1

0

1

1

0

0Dense

1 Auxiliary

1

0

1

Movie Tagging

0 0Matrix

1

2

3

4

5

4

3

Dense Auxiliary

5 Rating

Book

Matrix

2

Transfer

Learning

?

RMSE:0.8855

?

?

? Test Data:

?

?

Rating

Matrix

A Nonparametric Bayesian

Approach

Based on Gaussian process models

Key part is the kernel modeling user

relation as well as task relation

Making Prediction

Similar to memory-based approach

Mean of prediction

Similarity between items

Similarity between tasks

Experimental Results

Conclusions and Future Work

Transfer Learning (舉一反三 )

Transfer Learning has many applications

Sensor Based Location Estimation

Sensor Based Activity Recognition

Future:

When to transfer, and when not to transfer

Finding source domains on World Wide Web

Heterogeneous transfer learning

59