Nov. 25: Chapter 9: Virtual Memory

advertisement

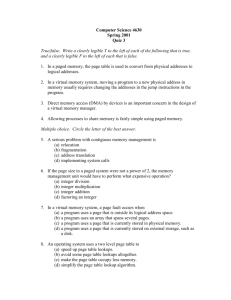

Chapter 9 – Virtual Memory (Pgs 357 - 409) CSCI 3431: OPERATING SYSTEMS Overview Instructions need to be in memory to be executed Address space (bus size) is usually bigger than physical space (memory size) Not all a program is needed at any point in time Loading only what is needed speeds up execution and permits more processes to be in memory Virtual Memory Separates logical memory (i.e., address space seen by compiler/user) from the physical memory (i.e., actual RAM on the motherboard) Each process can be given its own independent address space Sharing, forking, code reuse can all be improved and supported more effectively Demand Paging Load pages only as they are needed Pager – loads and stores pages from secondary storage Swapper – loads and stores processes from secondary storage Pages in memory are called "memory resident" and are usually identified using the "valid bit" in the page table Page Faults 1. Determine if the reference is valid (i.e., 2. 3. 4. 5. 6. check PCB to see if reference is part of process) If invalid, abort, else begin paging Find a free frame in physical memory Schedule load from disk to frame On completion, update page table Restart instruction Handling a Page Fault (9.6) Concepts in Paging Pure Demand Paging – Pages are only brought in when a page fault occurs Locality of Reference: a phenomenon whereby programs tend to use small sets of pages that tend to change slowly Instruction restarting – usually just repeating current instruction in PC Sometimes difficult to predict page faults for CISC hardware Performance 1. 2. 3. 4. 5. 6. 7. 8. 9. 10. 11. 12. 13. Trap to operating system Jmp to page fault handler Save registers and state Determine validity of address Identify an available frame Issue a disk read, block process in IO queue Run scheduler, execute another process ... After page load, Disk trap to operating system Jmp to disk interrupt handler Figure out the disk was handling a page fault Update page table for process, unblock process, reset process so it can resume at failed instruction Run scheduler, execute some process ... Resume where we left off when we are scheduled to run ... All this takes HOW LONG for ONE page fault? What this means ... Interrupt handlers are often hand-coded assembly for optimal performance Page fault times are horrible if there are a lot of disk requests Disk activity is the major wait factor We can compute Effective Access Time = ((1-p) * timemem) + (p * timefault) where p is the % probability of a fault And if there is no empty frame ... Copy on Write Pages only ever change if written to Pages that are not changed can be shared Thus, assume everything is shared, and only copy if a write occurs to a page Makes for very fast forks Sometimes OS reserves frames so they are available when needed for the copy Page Replacement Eventually the number of active pages exceeds the number of available frames 1. Swap the entire process and all its pages out 2. Swap out pages as needed to make space for new pages Most systems try to page out read-only pages because no write is needed (i.e., clean vs. dirty pages) Requires updating of the processes page table (which is why it is held by the kernel) Algorithms While we can use random data, it is best to use a "reference string" of page faults developed from actual runtime data when evaluating algorithms Goal is to minimise number of page replacements Key factor is ratio of pages:frames Higher = more faults, lower = fewer faults FIFO Replace the first one loaded (which is also the oldest) Mediocre performance Belady's Anomaly: Increasing number of frames INCREASES the fault rate! FIFO suffers from this anomaly for some reference strings (example, page 374) Optimal We know that a replacement strategy is optimal if it does the least work Page that is best to replace is the one that is going to be needed for the longest period of time Problem: Requires knowledge of when a page will be needed Not really possible and mostly used as a theoretical bound when measuring other strategies LRU Past history is the best indicator of future use Least likely to be used is the page that was Least Recently Used (LRU) Some page tables have a reference bit that is set whenever a page is referenced Good: enables us to delete pages not used very much Bad: setting the reference bit on every memory access is time consuming Reference bit allows us to approximate LRU Reference Shifts Use 8 bits (or more) for the reference bits Use some regular clock interval Zero the reference bits when a page is loaded Set the high-order (leftmost) bit to 1 when the page is referenced Every clock interval, shift the bits right by 1 Treat as an integer, higher means more recently used and more frequently used Second Chance Algorithm is a variant of FIFO When a page is needed, take the first If reference bit set, clear it and move page to back of the queue If not set, replace it Sometimes called the "clock" algorithm Can be easily implemented using a circular queue Can be enhanced by avoiding (if possible) pages that are dirty Other Options Reference counting – count references and replace the least frequently used Issue – LFU can replace a new page that hasn't had a chance to be used much yet P00ls of empty pages can be kept so a free page is always available – permits CPU to schedule the creation of more free pages for times when its less busy or disk isn't in use *** Next Class: 9.5+, Frame Allocation *** Raw Disk It is possible to write directly to, or read directly from, a disk block This technique is fast and avoids creating directory entries, file system data, etc. Used by some databases and other applications Bypasses paging mechanism – disk savings usually lost due to memory management problems Frame Allocation Page faults require a frame during servicing Are all frames equal? Do we just apply some variant of LRU, or do we need to ensure each user has their "fair share"? Performance requires that a process be given some minimum number of frames Hardware is a factor – What is the maximum number of frames that an instruction could possibly need to access? (This value makes a good minimum) Indirect addressing also adds to the page minimum (mostly an obsolete idea now) Also – stack & heap of process, OS tables, scheduler code, interrupt handlers etc. must be considered Strategies Equal Allocation: f frames are divided equally among u users (f/u per user) Proportional Allocation: Allocate memory in proportion to the size of the process I.e., if p2 is twice the size of p1, p2 gets 2/3 of memory and p1 gets 1/3 of memory p/S * f where S = total pages for all pi Allocation con't OS needs some pages to operate efficiently Process should always have its minimum Pool of free frames is useful Have not considered priority in any way Useful to consider scheduling – if a process isn't going to run, does it need much memory? IO bound processes do a lot of waiting, good candidates for less memory Global vs. Local Allocation Global Allocation: All frames are viable candidates and must be considered for replacement when a new page is loaded Local Allocation: Only the frames allocated to the process are considered for replacement NUMA just messes everything up ... > Good: being able to add additional memory on new memory cards > Bad: cards are slower than the memory on the motherboard and should not be paged as often Thrashing When a process spends more time being paged than it does being executed Occurs when page fault rate gets too high Local replacement can prevent processes from causing each other to thrash Locality: A set of pages that are used together A process can be viewed as a set of sequential localities that tend to overlap Locality Example (Fig 9.19) Working Set The set of pages accessed in some time period Can adjust the time period to produce smaller or (somewhat) larger working sets Captures a process' current locality Can be used to prevent thrashing Do not start (or swap out) a process when some process(s) do not have sufficient frames for their working set Working Set Size = number of total frames needed if the interval is greater than the life of the program = 1 if the interval contains only one instruction with not memory reference Generally try to use an interval that captures the locality of most processes Fault Rate Alternatively, we can ignore the working set Simply use the Page Fault Rate to avoid thrashing Goal is to keep the fault rate below some limit Very simple to calculate and keep track of Generally a good approach, but like all approaches, fails in some rare situations Memory Mapping Loading of a file into memory to minimise disk accesses Uses the standard paging system to do the loading Can cause synchronisation issues between inmemory version and on-disk version until the file is closed uses the mmap (or mmap2) system call often used as a technique for memory sharing supports concurrent file access by multiple processes IO Some IO devices have their buffers also mapped into main memory Writes to this location are then duplicated to the actual IO device Used for Disks, Monitors/Video Also used for hardware ports such as mouse, game controllers, USB If device uses a status/controller register for communication, it is programmed IO (PIO) If devices uses interrupts for communication it is interrupt driven Kernel Memory The OS often works with non-paged data Some data is very small (e.g., a semaphore) and doesn't need a page Some data is very large (i.e., a disk block) and must be on contiguous frames so its address space is continuous "Buddy System" Round all data sizes up to a power of 2 Easier to deal with memory blocks if they are 32 bytes, 64 bytes, 128 bytes etc. Large blocks easily split to form two smaller ones Smaller adjacent blocks combined to form one larger one Works well and is a very commonly used technique in all kinds of system programming Slab Allocation Keep a "slab" of memory for each specific kernel data structure (e.g., semaphore, PCB, Page Table) Manage the slap almost as if it was paged, with pages the same size as the data structure Has some issues if more pages are needed, as they won't be contiguous (which is necessary) Has been generalised and can be used for some user-mode requests This is how most modern OSs now function Prepaging Tries to avoid page faults by anticipating future faults Wastes time if unneeded page brought into memory Is often faster to read two sequential disk blocks than to issue two independent disk reads (so we can save time) Page Size Strongly dictated by CPU architecture Also influenced by: Bus Width/Size Disk block size System use characteristics (e.g., process and file sizes) Amount of physical memory available Time to service a page fault TLB Reach The amount of memory accessible from the TLB Should be greater than the working set size Can be influenced by page size TLB size may be configurable, depending upon the hardware Page Locking Sometimes a page should be locked into memory so that its not paged out, e.g., Disk buffer after an IO request issued The OS itself (i.e., scheduler, mmu - interrupt handlers, frame table) Solution is to put a "lock bit" into the frame table for each frame Programming Concerns Let the compiler optimise! Most situations that result in poor performance can be detected and avoided by the compiler (e.g., memory access in loops) Also note: Pointers and malloc will can be used very badly and can really harm performance 2. Objects almost always harm performance (as does method over-riding) – there is a reason no web browser, OS, compiler, VM, server ... is OO 1. To Do: Do Lab 5 Read Chapter 9 (pgs 357-409; this and next lecture) Continue working on Assignment 2