lecture14

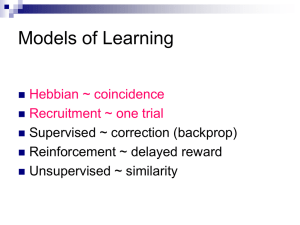

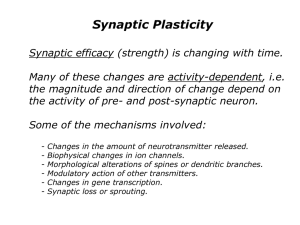


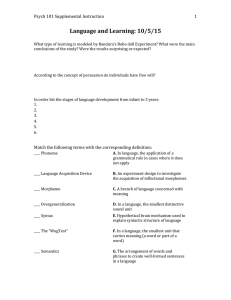

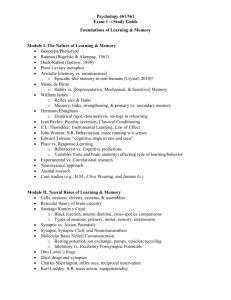

Issue #2: Shift Invariance

• Backprop cannot handle shift invariance (it cannot generalize from 0011, 0110 to 1100).
• But the cup is on the table whether you see it right in the center or from the corner of your eye (i.e. in different areas of the retinal map).
• What structure can we utilize to make the input shift-invariant?

Topological Relations

• Separation
• Contact
• Coincidence:
  – Overlap
  – Inclusion
  – Encircle/surround

Limitations

• Scale
• Uniqueness/Plausibility
• Grammar
• Abstract Concepts
• Inference
• Representation

How does activity lead to structural change?

• The brain (pre-natal, post-natal, and adult) exhibits a surprising degree of activity-dependent tuning and plasticity.
• To understand the nature and limits of the tuning and plasticity mechanisms, we study how activity is converted to structural changes (say, the ocular dominance column formation).
• It is centrally important for us to understand these mechanisms to arrive at biological accounts of perceptual, motor, cognitive and language learning.
• Biological Learning is concerned with this topic.

Learning and Memory: Introduction

Memory
• Declarative
  – Episodic: memory of a situation
  – Semantic: general facts
• Non-Declarative
  – Procedural: skills

Learning and Memory: Introduction

There are two different types of learning:
– Skill Learning
– Fact and Situation Learning
  • General Fact Learning
  • Episodic Learning

• There is good evidence that the processes underlying skill (procedural) learning are partially different from those underlying fact/situation (declarative) learning.

Skill and Fact Learning involve different mechanisms

• Certain brain injuries involving the hippocampal region of the brain render their victims incapable of learning any new facts or new situations or faces.
  – But these people can still learn new skills, including relatively abstract skills like solving puzzles.
• Fact learning can be single-instance based. Skill learning requires repeated exposure to stimuli.
• Implications for Language Learning?
Short term memory

• How do we remember someone’s telephone number just after they tell us, or the words in this sentence?
• Short term memory is known to have a different biological basis than long term memory of either facts or skills.
  – We now know that this kind of short term memory depends upon ongoing electrical activity in the brain.
  – You can keep something in mind by rehearsing it, but this will interfere with your thinking about anything else. (Phonological Loop)

Long term memory

• But we do recall memories from decades past.
  – These long term memories are known to be based on structural changes in the synaptic connections between neurons.
  – Such permanent changes require the construction of new protein molecules and their establishment in the membranes of the synapses connecting neurons, and this can take several hours.
• Thus there is a huge time gap between short term memory, which lasts only for a few seconds, and the building of long-term memory, which takes hours to accomplish.
• In addition to bridging the time gap, the brain needs mechanisms for converting the content of a memory from electrical to structural form.

Situational Memory

• Think about an old situation that you still remember well. Your memory will include multiple modalities: vision, emotion, sound, smell, etc.
• The standard theory is that memories in each particular modality activate much of the brain circuitry from the original experience.
• There is general agreement that the hippocampal area contains circuitry that can bind together the various aspects of an important experience into a coherent memory.
• This process is believed to involve calcium-based long term potentiation (LTP).

Models of Learning

• Hebbian ~ coincidence
• Recruitment ~ one trial
• Supervised ~ correction (backprop)
• Reinforcement ~ delayed reward
• Unsupervised ~ similarity

Hebb’s Rule

The key idea underlying theories of neural learning goes back to the Canadian psychologist Donald Hebb and is called Hebb’s rule.
From an information processing perspective, the goal of the system is to increase the strength of the neural connections that are effective.

LTP and Hebb’s Rule

• Hebb’s Rule: neurons that fire together wire together
• [Figure labels: strengthen, weaken]
• Long Term Potentiation (LTP) is the biological basis of Hebb’s Rule
• Calcium channels are the key mechanism

Chemical realization of Hebb’s rule

• It turns out that there are elegant chemical processes that realize Hebbian learning at two distinct time scales:
  – Early Long Term Potentiation (LTP)
  – Late LTP
• These provide the temporal and structural bridge from short term electrical activity, through intermediate memory, to long term structural changes.

Long Term Potentiation (LTP)

• These changes make each of the winning synapses more potent for an intermediate period, lasting from hours to days (LTP).
• In addition, repetition of a pattern of successful firing triggers additional chemical changes that lead, in time, to an increase in the number of receptor channels associated with successful synapses: the requisite structural change for long term memory.
• There are also related processes for weakening synapses and for strengthening pairs of synapses that are active at about the same time.

The Hebb rule is found with long term potentiation (LTP) in the hippocampus

[Figure labels: Schaffer collateral pathway; pyramidal cells; 1 sec. stimuli at 100 Hz]

Computational Models based on Hebb’s rule

• The activity-dependent tuning of the developing nervous system, as well as post-natal learning and development, do well by following Hebb’s rule.
• Explicit Memory in mammals appears to involve LTP in the Hippocampus.
• Many computational systems for modeling incorporate versions of Hebb’s rule.

Winner-Take-All: Recruitment Learning

• Units compete to learn, or update their weights.
• The processing element with the largest output is declared the winner.
• Lateral inhibition of its competitors.
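The two ideas above, Hebbian strengthening and winner-take-all competition, can be sketched in a few lines. This is only an illustrative sketch, not the lecture's model: the function names, the learning rates, and the use of `argmax` as a stand-in for lateral inhibition are all assumptions made for the example.

```python
import numpy as np

def hebbian_update(w, pre, post, lr=0.1):
    """Hebb's rule (assumed simplest form): each weight grows in
    proportion to the product of pre- and postsynaptic activity."""
    return w + lr * np.outer(post, pre)

def winner_take_all_step(w, x, lr=0.5):
    """One competitive-learning step. The unit with the largest output
    wins; taking argmax stands in for lateral inhibition of the losers."""
    outputs = w @ x
    winner = int(np.argmax(outputs))
    w = w.copy()
    # Only the winner updates: its weights move toward the input pattern.
    w[winner] += lr * (x - w[winner])
    return w, winner

# Hypothetical usage: two postsynaptic units, three presynaptic inputs.
weights = np.zeros((2, 3))
weights = hebbian_update(weights, pre=np.array([1.0, 0.0, 1.0]),
                         post=np.array([1.0, 0.0]))
```

Only the synapses whose pre- and postsynaptic units were both active change in `hebbian_update`; in `winner_take_all_step`, the losing units keep their weights untouched.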
Learning Triangle Nodes
LTP in Episodic Memory Formation

Hebb’s rule is not sufficient

• What happens if the neural circuit fires perfectly, but the result is very bad for the animal, like eating something sickening?
• A pure invocation of Hebb’s rule would strengthen all participating connections, which can’t be good.
• On the other hand, it isn’t right to weaken all the active connections involved; much of the activity was just recognizing the situation. We would like to change only those connections that led to the wrong decision.
• No one knows how to specify a learning rule that will change exactly the offending connections when an error occurs.
• Computer systems, and presumably nature as well, rely upon statistical learning rules that tend to make the right changes over time.
• More in later lectures.

Models of Learning

• Hebbian ~ coincidence
• Recruitment ~ one trial
• Supervised ~ correction (backprop)
• Reinforcement ~ delayed reward
• Unsupervised ~ similarity

LTP and one-shot memory

• Twin requirements of LTP induction: presynaptic activity + postsynaptic depolarization
• LTP requires synchronous activity at multiple synapses of a postsynaptic cell (cooperativity)
• This is ideal for transforming a transient, synchronous-activity based expression of a relation between multiple items into a persistent, synaptic-efficacy based encoding of the relation (Shastri, 2001)

Recruiting connections

• Given that LTP involves synaptic strength changes, and Hebb’s rule involves coincident-activation based strengthening of connections:
• How can connections between two nodes be recruited using Hebb’s rule?

The Idea of Recruitment Learning

[Figure labels: X, Y, K, N, B]

$F = B/N$

$P_{\text{no link}} = (1 - F)^{B^K}$

• Suppose we want to link up node X to node Y.
• The idea is to pick the two nodes in the middle to link them up.
• Can we be sure that we can find a path to get from X to Y?
The point is, with a fan-out of 1000, if we allow 2 intermediate layers, we can almost always find a path.

Finding a Connection

$P = (1 - F)^{B^K}$

• P = Probability of NO link between X and Y
• N = Number of units in a “layer”
• B = Number of randomly outgoing units per unit
• F = B/N, the branching factor
• K = Number of intermediate layers, 2 in the example

| K | N = $10^6$ | N = $10^7$ | N = $10^8$ |
|---|---|---|---|
| 0 | .999 | .9999 | .99999 |
| 1 | .367 | .905 | .989 |
| 2 | $10^{-440}$ | $10^{-44}$ | $10^{-5}$ |

\# Paths = $(1 - P_{K-1}) \cdot (N \cdot F) = (1 - P_{K-1}) \cdot B$

[Figure: two diagrams of nodes X and Y]

Finding a Connection in Random Networks

For networks with N nodes and $\sqrt{N}$ branching factor, there is a high probability of finding good links. (Valiant 1995)

Recruiting a Connection in Random Networks

Informal Algorithm
1. Activate the two nodes to be linked
2. Have nodes with double activation strengthen their active synapses (Hebb)
3. There is evidence for a “now print” signal based on LTP (episodic memory)

Triangle nodes and feature structures

[Figure: two triangle nodes, each linking nodes A, B and C]

Representing concepts using triangle nodes

Recruiting triangle nodes

• Let’s say we are trying to remember a green circle.
• Currently there are weak connections between concepts (dotted lines).

[Figure: has-color linked to blue and green; has-shape linked to round and oval]

• Strengthen these connections and you end up with this picture:

[Figure: a “Green circle” node linked to has-color and has-shape, with blue, green, round and oval as values; Has-color: GREEN, Has-shape: ROUND]

Models of Learning

• Hebbian ~ coincidence
• Recruitment ~ one trial
• Supervised ~ correction (backprop)
• Reinforcement ~ delayed reward, soon
• Unsupervised ~ similarity

5 levels of Neural Theory of Language

• Levels (abstraction axis): Cognition and Language; Computation; Structured Connectionism; Computational Neurobiology; Biology
• Topics shown: Psycholinguistic experiments, Spatial Relation, Motor Control, Metaphor, Grammar, Triangle Nodes, Neural Net and learning, SHRUTI, Neural Development
• Course markers: Quiz, Midterm, Finals

Tinbergen’s Four Questions

• How does it work?
• How does it improve fitness?
• How does it develop and adapt?
• How did it evolve?
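The table on the Finding a Connection slide can be checked numerically from P = (1 - F)^(B^K). The sketch below assumes the fan-out B = 1000 mentioned on that slide and works in log10, since the K = 2 values are far too small for ordinary floating point; the function name is made up for this example.

```python
import math

def log10_p_no_link(n_units: int, fan_out: int, k_layers: int) -> float:
    """Return log10 of P = (1 - F) ** (B ** K), where F = B / N.

    Working in logs avoids underflow when B ** K is large."""
    f = fan_out / n_units
    return (fan_out ** k_layers) * math.log1p(-f) / math.log(10)

B = 1000  # fan-out per unit, as assumed on the slide
for k in (0, 1, 2):
    row = [log10_p_no_link(n, B, k) for n in (10**6, 10**7, 10**8)]
    print(k, ["10^%.2f" % v for v in row])
```

For K = 1 and N = 10^7 this gives a probability near 0.905, and for K = 2 and N = 10^6 it gives roughly 10^-434, the same order of magnitude as the rounded table entry.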
[Figure label: postsynaptic neuron. Source: science-education.nih.gov]

Brains ~ Computers

| Brains | Computers |
|---|---|
| 1000 operations/sec | 1,000,000,000 ops/sec |
| 100,000,000,000 units | 1-100 processors |
| 10,000 connections/ | ~ 4 connections |
| graded, stochastic | binary, deterministic |
| embodied | abstract |
| fault tolerant | crashes |
| evolves, learns | designed, programmed |

[Figure: Artist’s rendition of a typical cell membrane]

Flexor-Crossed Extensor Reflex (Sherrington 1900)

Reflex Circuits With Inter-neurons

[Figure label: Painful Stimulus]

Gaits of the cat: an informal computational model

Neural Tissue

• The skin and neural tissue arise from a single layer, known as the ectoderm, in response to signals provided by an adjacent layer, known as the mesoderm.
• A number of molecules interact to determine whether the ectoderm becomes neural tissue or develops in another way to become skin.

Critical Periods in Development

• There are critical periods in development (pre- and post-natal) where stimulation is essential for fine tuning of brain connections.
• Other examples of columns:
  – Orientation columns

Pre-Natal Tuning: Internally generated tuning signals

• But in the womb, what provides the feedback to establish which neural circuits are the right ones to strengthen?
  – Not a problem for motor circuits: the feedback and control networks for basic physical actions can be refined as the infant moves its limbs, and indeed this is what happens.
• But there is no vision in the womb. Recent research shows that systematic moving patterns of activity are spontaneously generated prenatally in the retina. A predictable pattern, changing over time, provides excellent training data for tuning the connections between visual maps.
• The pre-natal development of the auditory system is also interesting and is directly relevant to our story.
  – Research indicates that infants, immediately after birth, preferentially recognize the sounds of their native language over others. The assumption is that similar activity-dependent tuning mechanisms work with speech signals perceived in the womb.
Post-natal environmental tuning

• The pre-natal tuning of neural connections using simulated activity can work quite well: a newborn colt or calf is essentially functional at birth. This is necessary because the herd is always on the move.
• Many animals, including people, do much of their development after birth, and activity-dependent mechanisms can exploit experience in the real world.
• In fact, such experience is absolutely necessary for normal development.
• As we saw, early experiments with kittens showed that there are fairly short critical periods during which animals deprived of visual input could lose forever their ability to see motion, vertical lines, etc.
• For a similar reason, if a human child has one weak eye, the doctor will sometimes place a patch over the stronger one, forcing the weaker eye to gain experience.

Learning Rule – Gradient Descent on a Root Mean Square (RMS) Error

Learn $w_i$’s that minimize the squared error

$$E[\vec{w}] = \frac{1}{2} \sum_{k \in O} (t_k - o_k)^2$$

where O = output layer and $o_k$ is the output of unit k (written $y_k$ below).

Backpropagation Algorithm

• Initialize all weights to small random numbers
• For each training example do
  – For each hidden unit h: $y_h = \sigma\left(\sum_i w_{hi} x_i\right)$
  – For each output unit k: $y_k = \sigma\left(\sum_h w_{kh} x_h\right)$, where $x_h = y_h$ is the input arriving from hidden unit h
  – For each output unit k: $\delta_k = y_k (1 - y_k)(t_k - y_k)$
  – For each hidden unit h: $\delta_h = y_h (1 - y_h) \sum_k w_{kh} \delta_k$
  – Update each network weight $w_{ji}$: $w_{ji} \leftarrow w_{ji} + \Delta w_{ji}$ with $\Delta w_{ji} = \eta \, \delta_j \, x_{ji}$, where $x_{ji}$ is the input from unit i to unit j

Distributed vs Localist Rep’n

| | Distributed | Localist |
|---|---|---|
| John | 1 1 0 0 | 1 0 0 0 |
| Paul | 0 1 1 0 | 0 1 0 0 |
| George | 0 0 1 1 | 0 0 1 0 |
| Ringo | 1 0 0 1 | 0 0 0 1 |

What are the drawbacks of each representation?

• Distributed: What happens if you want to represent a group? How many persons can you represent with n bits? $2^n$
• Localist: What happens if one neuron dies? How many persons can you represent with n bits? n

Word Superiority Effect

Modeling lexical access errors

• Semantic error
• Formal error (i.e. errors related by form)
• Mixed error (semantic + formal)
• Phonological access error

Phonological access error: Selection of incorrect phonemes

[Figure: word nodes FOG, DOG, CAT, RAT, MAT linked to phoneme nodes grouped as Onsets (f, r, d, k, m), Vowels (ae, o) and Codas (t, g), under a syllable frame (Syl: On, Vo, Co). Adapted from Gary Dell, “Producing words from pictures or from other words”]

MRI and fMRI

• MRI: Images of brain structure.
• fMRI: Images of brain function.
• Tissues differ in magnetic susceptibility (grey matter, white matter, cerebrospinal fluid)

Mirror Neurons: Area F5

Cortical Mechanism for Action Recognition

• Observed Action
• STS: provides an early description of the action
• Parietal mirror neurons (PF) (inferior parietal lobule): adds additional somatosensory information to the movement to be imitated
• Frontal mirror neurons (F5) (BA 44): codes the goal of the action to be imitated
• Copies of the motor plans necessary to imitate actions, for monitoring purposes

Somatotopy of Action Observation

• Foot Action
• Hand Action
• Mouth Action

Buccino et al. Eur J Neurosci 2001

The WCS Color Chips

Basic color terms:
• Single word (not blue-green)
• Frequently used (not mauve)
• Refers primarily to colors (not lime)
• Applies to any object (not blonde)

FYI: English has 11 basic color terms

Concepts are not categorical
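As a companion to the Backpropagation Algorithm slide earlier in this lecture, here is a minimal sketch of one training step for a network with a single hidden layer of sigmoid units. It is an illustration under stated assumptions rather than the course's implementation: there are no bias weights (the slide shows none), and the layer sizes, learning rate `eta`, and all function and variable names are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def backprop_step(w_hidden, w_out, x, t, eta=0.5):
    """One gradient-descent step on E = 1/2 * sum_k (t_k - y_k)^2.

    w_hidden has shape (hidden, inputs); w_out has shape (outputs, hidden).
    Returns the updated weights and the error measured before the update."""
    # Forward pass: hidden activations, then output activations.
    y_h = sigmoid(w_hidden @ x)
    y_k = sigmoid(w_out @ y_h)
    # Error terms for output units, then hidden units.
    delta_k = y_k * (1.0 - y_k) * (t - y_k)
    delta_h = y_h * (1.0 - y_h) * (w_out.T @ delta_k)
    # Weight change = eta * delta of receiving unit * input on that link.
    new_w_out = w_out + eta * np.outer(delta_k, y_h)
    new_w_hidden = w_hidden + eta * np.outer(delta_h, x)
    error = 0.5 * float(np.sum((t - y_k) ** 2))
    return new_w_hidden, new_w_out, error

# Hypothetical usage: 2 inputs, 3 hidden units, 1 output unit.
rng = np.random.default_rng(0)
w_h = rng.uniform(-0.1, 0.1, size=(3, 2))  # small random initial weights
w_o = rng.uniform(-0.1, 0.1, size=(1, 3))
for _ in range(100):
    w_h, w_o, err = backprop_step(w_h, w_o, np.array([1.0, 0.0]), np.array([1.0]))
```

The hidden-unit error terms are computed from the output weights as they were before the update, which matches the order of steps on the slide.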