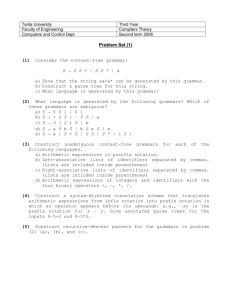

winterschool

advertisement

Issues in Computational Linguistics:

Grammar Engineering

Dick Crouch and Tracy King

Outline

What is a deep grammar?

How to engineer them:

–

–

–

–

–

robustness

integrating shallow resources

ambiguity

writing efficient grammars

real world data

What is a shallow grammar

often trained automatically from marked up

corpora

part of speech tagging

chunking

trees

POS tagging and Chunking

Part of speech tagging:

I/PRP saw/VBD her/PRP duck/VB ./PUNCT

I/PRP saw/VBD her/PRP$ duck/NN ./PUNCT

Chunking:

– general chunking

[I begin] [with an intuition]: [when I read] [a sentence], [I

read it] [a chunk] [at a time].

(Abney)

– NP chunking

[NP President Clinton] visitited [NP the Hermitage] in [NP

Leningrad]

Treebank grammars

Phrase structure tree (c-structure)

Annotations for heads, grammatical functions

Collins parser output

Deep grammars

Provide detailed syntactic/semantic analyses

– LFG (ParGram), HPSG (LinGO, Matrix)

– Grammatical functions, tense, number, etc.

Mary wants to leave.

subj(want~1,Mary~3)

comp(want~1,leave~2)

subj(leave~2,Mary~3)

tense(leave~2,present)

Usually manually constructed

– linguistically motivated rules

Why would you want one

Meaning sensitive applications

– overkill for many NLP applications

Applications which use shallow methods for

English may not be able to for "free" word

order languages

– can read many functions off of trees in English

SUBJ: NP sister to VP [S [NP Mary] [VP left]]

OBJ: first NP sister to V [S [NP Mary] [VP saw [NP John]]]

– need other information in German, Japanese, etc.

Deep analysis matters…

if you care about the answer

Example:

A delegation led by Vice President Philips, head of the chemical

division, flew to Chicago a week after the incident.

Question: Who flew to Chicago?

Candidate answers:

division

head

V.P. Philips

closest noun

next closest

next

delegation

furthest away but

Subject of flew

shallow but wrong

deep and right

Applications of Language Engineering

Post-Search

Sifting

Broad

Autonomous

Knowledge Filtering

Alta

Vista

AskJeeves

Document Base

Management

Narrow

Domain Coverage

Google

Restricted

Dialogue

Manually-tagged

Keyword Search

Knowledge

Fusion

Good

Translation

Useful

Summary

Natural

Dialogue

Microsoft

Paperclip

Low

Functionality

High

Traditional Problems

Time consuming and expensive to write

Not robust

– want output for any input

Ambiguous

Slow

Other gating items for applications that need

deep grammars

Why deep analysis is difficult

Languages are hard to describe

– Meaning depends on complex properties of words and sequences

– Different languages rely on different properties

– Errors and disfluencies

Languages are hard to compute

– Expensive to recognize complex patterns

– Sentences are ambiguous

– Ambiguities multiply: explosion in time and space

How to overcome this

Engineer the deep grammars

– theoretical vs. practical

– what is good enough

Integrate shallow techniques into deep

grammars

Experience based on broad-coverage LFG

grammars (ParGram project)

Robustness: Sources of Brittleness

missing vocabulary

– you can't list all the proper names in the world

missing constructions

– there are many constructions theoretical linguistics

rarely considers (e.g. dates, company names)

– easy to miss even core constructions

ungrammatical input

– real world text is not always perfect

– sometimes it is really horrendous

Real world Input

Other weak blue-chip issues included Chevron, which

went down 2 to 64 7/8 in Big Board composite trading

of 1.3 million shares; Goodyear Tire & Rubber, off 1

1/2 to 46 3/4, and American Express, down 3/4 to 37

1/4. (WSJ, section 13)

``The croaker's done gone from the hook –

(WSJ, section 13)

(SOLUTION 27000 20) Without tag P-248 the W7F3

fuse is located in the rear of the machine by the

charge power supply (PL3 C14 item 15.

(Eureka copier repair tip)

Missing vocabulary

Build vocabulary based on the input of

shallow methods

– fast

– extensive

– accurate

Finite-state morphologies

Part of Speech Taggers

Finite State Morphologies

Finite-state morphologies

falls -> fall +Noun +Pl

fall +Verb +Pres +3sg

Mary -> Mary +Prop +Giv +Fem +Sg

vienne -> venir +SubjP +SG {+P1|+P3} +Verb

Build lexical entry on-the-fly from the

morphological information

– have canonicalized stem form

– have significant grammatical information

– do not have subcategorization

Building lexical entries

Lexical entries

-unknown N

@(COMMON-NOUN %stem).

+Noun

N-SFX @(PERS 3).

+Pl

N-NUM @(NUM pl).

Rule

NOUN -> N

N-SFX

N-NUM.

Templates

– COMMON-NOUN :: (^ PRED)='%stem'

(^ NTYPE)=common

– PERS(3) :: (^ PERS)=3

– NUM(pl) :: (^ NUM)=pl

Building lexical entries

F-structure for falls

[ PRED 'fall'

NTYPE common

PERS 3

NUM pl ]

C-Structure for falls

Noun

N

fall

N-SFX

+Noun

N-NUM

+Pl

Guessing words

Use FST guesser if the morphology doesn't

know the word

– Capitalized words can be proper nouns

» Saakashvili -> Saakashvili +Noun +Proper +Guessed

– ed words can be past tense verbs or adjectives

» fumped -> fump +Verb +Past +Guessed

fumped +Adj +Deverbal +Guessed

Languages with more morphology allow for

better guessers

Using the lexicons

Rank the lexical lookup

1. overt entry in lexicon

2. entry built from information from morphology

3. entry built from information from guesser

Use the most reliable information

Fall back only as necessary

Missing constructions

Even large hand-written grammars are not

complete

– new constructions, especially with new corpora

– unusual constructions

Generally longer sentences fail

– one error can destroy the parse

Build up as much as you can; stitch together

the pieces

Grammar engineering approach

First try to get a complete parse

If fail, build up chunks that get complete

parses

Have a fall back for things without even chunk

parses

Link these chunks and fall backs together in a

single structure

Fragment Chunks: Sample output

the the dog appears.

Split into:

– "token" the

– sentence "the dog appears"

– ignore the period

C-structure

F-structure

Ungrammatical input

Real world text contains ungrammatical input

– typos

– run ons

– cut and paste errors

Deep grammars tend to only cover

grammatical output

Two strategies

– robustness techniques: guesser/fragments

– disprefered rules for ungrammatical structures

Rules for ungrammatical structures

Common errors can be coded in the rules

– want to know that error occurred

(e.g., feature in f-structure)

Disprefer parses of ungrammatical structure

– tools for grammar writer to rank rules

– two+ pass system

1. standard rules

2. rules for known ungrammatical constructions

3. default fall back rules

Sample ungrammatical structures

Mismatched subject-verb agreement

Verb3Sg = { SUBJ PERS = 3

SUBJ NUM = sg

|BadVAgr }

Missing copula

VPcop ==> { Vcop: ^=!

|e: (^ PRED)='NullBe<(^ SUBJ)(^XCOMP)>'

MissingCopularVerb}

{ NP: (^ XCOMP)=!

|AP: (^ XCOMP)=!

| …}

Robustness summary

Integrate shallow methods

– for lexical items

– morphologies

– guessers

Fall back techniques

– for missing constructions

– fragment grammar

– disprefered rules

Ambiguity

Deep grammars are massively ambiguous

Example: 700 from section 23 of WSJ

– average # of words: 19.6

– average # of optimal parses: 684

» for 1-10 word sentences: 3.8

» for 11-20 word sentences: 25.2

» for 50-60 word sentences: 12,888

Managing Ambiguity

Use packing to parse and manipulate the

ambiguities efficiently

(more tomorrow)

Trim early with shallow markup

– fewer parses to choose from

– faster parse time

Choose most probable parse for applications

that need a single input

Shallow markup

Part of speech marking as filter

I saw her duck/VB.

– accuracy of tagger (v. good for English)

– can use partial tagging (verbs and nouns)

Named entities

– <company>Goldman, Sachs & Co.</company> bought IBM.

– good for proper names and times

– hard to parse internal structure

Fall back technique if fail

– slows parsing

– accuracy vs. speed

Example shallow markup: Named entities

Allow tokenizer to accept marked up input:

parse {<person>Mr. Thejskt Thejs</person> arrived.}

tokenized string:

Mr. Thejskt Thejs TB +NEperson

Mr(TB). TB Thejskt TB Thejs

Add

TB arrived TB . TB

lexical entries and rules for NE tags

Resulting C-structure

Resulting F-structure

Results for shallow markup

Full/All

% Full

parses

Optimal

sol’ns

Best

F-sc

Time

%

Unmarked

76

482/1753

82/79

65/100

Named ent

78

263/1477

86/84

60/91

POS tag

62

248/1916

76/72

40/48

Lab brk

65

158/ 774

85/79

19/31

Kaplan and King 2003

Chosing the most probable parse

Applications may want one input

– or at least just a handful

Use stochastic methods to choose

– efficient (XLE English grammar: 5% of parse time)

Need training data

– partially labelled data ok

[NP-SBJ They] see [NP-OBJ the girl with the telescope]

Run-time performance

Many deep grammars are slow

Techniques depend on the system

– LFG: exploit the context-free backbone

ambiguity packing techniques

Speed vs. accuracy trade off

– remove/disprefer peripheral rules

– remove fall backs for shallow markup

Development expense

Grammar porting

Starter grammar

Induced grammar bootstrapping

How cheap are shallow grammars?

– training data can be expensive to produce

Grammar porting

Use an existing grammar as the base for a

new language

Languages must be typologically similar

– Japanese-Korean

– Balkan

Lexical porting via bi-lingual dictionaries

Main work in testing and evaluation

Starter grammar

Provide basic rules and templates

– including for robustness techniques

Grammar writer:

– chooses among them

– refines them

Grammar Matrix for HPSG

Grammar induction

Induce a core grammar from a treebank

– compile rule generalizations

– threshold rare rules

– hand augment with features and fallback

techniques

Requires

– induction program

– existing resources (treebank)

Conclusions

Grammar engineering makes deep grammars

feasible

– robustness techniques

– integration of shallow methods

Many current applications can use shallow

grammars

Fast, accurate, broad-coverage deep

grammars enable new applications