
Operational CBT Implementation Issues: Making It Happen

Richard M. Luecht, PhD

Educational Research Methodology

University of North Carolina at Greensboro

Tenth Annual Maryland Assessment Conference: COMPUTERS AND THEIR IMPACT ON STATE ASSESSMENT: RECENT HISTORY AND PREDICTIONS FOR THE FUTURE. 18-19 October, College Park MD

2010 R.M. Luecht


What do you get if you combine a psychometrician, a test development specialist, a computer hardware engineer, an LSI software engineer, a human factors engineer, a QC expert, and a cognitive psychologist?

A pretty useful individual to have around if you’re implementing CBT!!!!

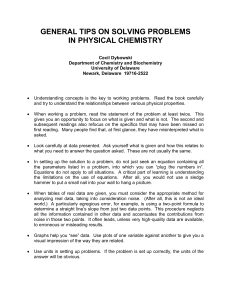

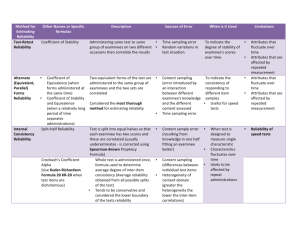

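A Naïve View of Operational CBT*

[Figure: system diagram showing an item bank, data servers, an Ethernet test delivery network presenting items to the examinee, and a response vector (e.g., Ui=010120113) feeding the two algorithms below.]

Item Selection/Test Assembly Algorithm

$$i_k \equiv \max_j \left\{ I_{U_j}\!\left(\hat{\theta}_{u_{i_1},\ldots,u_{i_{k-1}}}\right) : j \in R_k \right\}$$

Ability Estimation/Scoring Algorithm

$$\hat{\theta}^{\mathrm{MAP}}_{u_{i_1},\ldots,u_{i_{k-1}}} \equiv \max_{\theta} \left\{ g\!\left(\theta \mid u_{i_1},\ldots,u_{i_{k-1}}\right) : \theta \in (-\infty,\infty) \right\}$$

* Includes linear CBT, CAT, CMT, CAST and other variants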
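The two algorithms on this slide can be sketched in a few lines. This is a minimal illustration, not the presenter's implementation: it assumes a 2PL item response model, a standard normal prior, and a grid search over a bounded ability range of [-4, 4] in place of the unbounded maximization. The names `p_correct`, `information`, `map_estimate`, `select_next_item` and the toy bank are all hypothetical.

```python
import math

def p_correct(theta, a, b):
    """2PL probability of a correct response (assumed model)."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))

def information(theta, a, b):
    """Fisher information of a 2PL item at ability theta."""
    p = p_correct(theta, a, b)
    return a * a * p * (1.0 - p)

def map_estimate(responses, bank, grid_step=0.01):
    """Grid-search MAP ability estimate with an assumed N(0, 1) prior.

    responses: dict item_id -> 0/1 score; bank: dict item_id -> (a, b).
    """
    best_theta, best_logpost = 0.0, -math.inf
    steps = int(8 / grid_step)
    for s in range(steps + 1):
        theta = -4.0 + s * grid_step
        logpost = -0.5 * theta * theta  # log prior, up to a constant
        for item_id, u in responses.items():
            a, b = bank[item_id]
            p = p_correct(theta, a, b)
            logpost += math.log(p if u == 1 else 1.0 - p)
        if logpost > best_logpost:
            best_theta, best_logpost = theta, logpost
    return best_theta

def select_next_item(theta_hat, bank, administered):
    """Pick the unused item with maximum information at theta_hat."""
    remaining = [i for i in bank if i not in administered]
    return max(remaining, key=lambda i: information(theta_hat, *bank[i]))

# Hypothetical three-item bank: item_id -> (discrimination a, difficulty b)
bank = {"A": (1.0, -1.0), "B": (1.0, 0.0), "C": (1.0, 1.0)}
first_item = select_next_item(0.0, bank, administered=set())
theta_after = map_estimate({first_item: 1}, bank)
```

An operational CAT would add content constraints and exposure controls to the selection step; the sketch only shows the select-score-select loop that the naïve view implies.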

The Challenge of CBT

- Moving more complex data more quickly, more securely and more accurately from item generation through final scoring
- Immediate responsiveness where possible
- Re-engineering data management and processing systems: end-to-end
- 99.999999% accuracy and eliminating costly and error-prone human factors through automation and better QC/QA

Systems Impacted by Redesign and QC/QA

- Item development and banking
- Test assembly and composition
- Examinee eligibility, registration, scheduling, and fees
- Test delivery
- Psychometrics and post-examination processing
  - Item analysis, key validation and quality assurance
  - Test analysis
- Final scoring, reporting and communication

Item and Item Set Repositories

Test Data Repositories

Examinee Data Repositories

CBT: A System of Systems

Item-Level Data

- Item or exercise rendering data
  - Stimulus information (e.g., MCQ stem, a reading passage)
  - Response display labels (e.g., distractors as labels for a check box control)
  - Scripts for interactivity
  - Template references
- Content and other item attributes
  - Content category codes
  - Cognitive and other secondary classifications
  - Linguistic features
- Statistical item data
  - Classical item statistics (p-values, biserial correlations, etc.)
  - IRT statistics (1PL, 2PL, 3PL, GPCM parameter estimates)
  - DIF statistics and other special indices
- Operational data
  - Reuse history
  - Exposure rates and controls (for CAT)
  - Equating status

Test Unit Data

- Object list to include (e.g., item identifiers for all items in the test unit)
- Navigation functions, including presentation, review and sequencing rules
- Embedded adaptive mechanisms (score + selection)
- Timing controls and other information (e.g., how the clock functions, time limit, etc.)
- Title and instruction screens
- Presentation template references
  - Helm look-and-feel (navigation style, etc.)
  - Functions (e.g., direction of cursor movement after ◄┘ or tab is pressed)
- Reference and ancillary look-up materials
  - Calculators
  - Hyperlinks to other BLOBs
  - Custom exhibits available to test takers

Standard Hierarchical View of a “Test Form”

[Figure: hierarchy diagram with nodes Test Form A; Sections I, II and III; Groups 1 and 2; Set 1; and Items 1 through 12 at the lowest level.]

Examinee Data

- Identification information
  - Name and identification numbers
  - Photo, digital signature, retinal scan information
- Address and other contact information
- Demographic information
- Eligibility-to-test information
  - Jurisdiction
  - Eligibility period
  - Retest restrictions
- Scheduled test date(s)
- Special accommodations required
- Scores and score reporting information
- Testing history and exam blocking
- Security history (e.g., previous irregular behaviors, flagged for cheating, indeterminate scores, or large score gains)
- General correspondence

Interactions of Examinee and Items or Test Units

- Primary information
  - Final responses
  - Captured actions/inactions (state and sequencing of actions)
- Secondary information
  - Cumulative elapsed time on “unit”
  - Notes, marks or other information captured during testing

Response Processing in CBT

- Response capturing agents convert examinee responses or actions to storable data representations
- Examples
  - item.checkbox.state (T/F) → item.response.choice=“A” {A,B,C,D}
  - item.group.unit(j).state (T/F), j=1,…,6 → item.response.choice=“1,4” {1,2,3,4,5,6}
  - item.component.text(selected=position,length) → item.response.text=“text”
  - item.component.container(freeresponse.entry) → item.response.text=“text”
  - case.grid.cellRowCol(numeric.entry) → case.grid.cellref.response.text=“value”
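The conversion from control states to a stored response can be illustrated with a small sketch. The function name `capture_choice` and its arguments are hypothetical; the two calls mirror the single-choice ("A") and multiple-selection ("1,4") examples on the slide, under the assumption that each check box state is a Boolean.

```python
def capture_choice(control_states, labels):
    """Collapse a list of on/off control states into a stored response string.

    control_states: list of bools, one per response control (True = selected).
    labels: list of option labels in the same order as the controls.
    """
    if len(control_states) != len(labels):
        raise ValueError("each control needs exactly one label")
    selected = [label for label, on in zip(labels, control_states) if on]
    return ",".join(selected)

# Single-selection item with options {A,B,C,D}
single = capture_choice([True, False, False, False], ["A", "B", "C", "D"])

# Multiple-selection item with options {1,...,6}; units 1 and 4 are checked
multi = capture_choice(
    [True, False, False, True, False, False],
    ["1", "2", "3", "4", "5", "6"],
)
```

Storing the compact response string rather than every control state keeps the response record small, but the raw states may still be worth logging for audit purposes.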

Raw Response Representations for Discrete Items

[Figure: Uniform CPA Examination screen listing Item 1 and Item 2, with Item 002 displayed.]

Convert

    item002.stemtext.text="HTML formatted text"
    item002.checkbox1.state="false"
    item002.checkbox2.state="false"
    item002.checkbox3.state="true"
    item002.checkbox4.state="false"
    item002.checkbox5.state="false"

to

    item002.response.choice=“3”

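Raw Response Representations for a Performance Exercise

[Figure: CPA Examination, Simulation A. The screen lists Item 1, Item 2, Item 3, Case A, Item 4 and Item 5; has tabs for Instructions, Context, Reports and Help; shows the date 12-18-01; and contains the worksheet below.]

|    | C1 | C2 | C3 | C4 |
| --- | --- | --- | --- | --- |
| R1 | Date | Account | Debit | Credit |
| R2 | 12-19-01 | 280-01 | | |
| R3 | 12-19-01 | 280-04 | | 12501.99 |
| R4 | 12-19-01 | 345-02 | | |
| R5 | 12-19-01 | 280-01 | | |
| R6 | 12-19-01 | 345-02 | | |
| R7 | 12-19-01 | 280-04 | | |

Convert to…

    caseA.tab5.sheet001.r3c4.text=“12501.99”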

Raw Response Representations for an Essay

Store as…

RichTextBox.Item001.text=“There were two important changes that characterized the industrial revolution. First, individuals migrated from rural to urban settings in order to work at new factories and in other industrial settings (geographic change). Second, companies began adopting mechanisms to facilitate mass production (changes in manufacturing procedures, away).”

Entering the Psychometric Zone: Data Components of Scoring Evaluators

- Responses
  - Selections, actions or inactions: item.response.state=control.state (ON or OFF)
  - Entries: item.response.value=control.value
- Answer expressions (rethinking IA is needed)
  - Answer keys
  - Rubrics of idealized responses or patterns of responses
  - Functions of other responses
- Score evaluators process the responses
  - Scoring evaluators convert the stored responses to numerical values, e.g., $f(\text{response}_{ij}, \text{answer key}_i) \rightarrow x_{ij} \in [0,1]$
  - Raw scoring or IRT scoring → aggregation and scaling of item-level numerical scores
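A scoring evaluator in the sense of this slide is a function of a stored response and an answer expression. The sketch below shows the simplest case, a dichotomous key match followed by number-correct aggregation. The function names, item IDs and keys are hypothetical, and real evaluators for rubrics or multi-part answer expressions would be considerably richer.

```python
def score_item(response, answer_key):
    """Dichotomous evaluator: 1 if the stored response equals the key, else 0."""
    return 1 if response == answer_key else 0

def raw_score(responses, keys):
    """Aggregate item-level scores into a number-correct raw score.

    responses: dict item_id -> stored response (missing items score 0).
    keys: dict item_id -> answer key.
    """
    return sum(score_item(responses.get(item_id), key)
               for item_id, key in keys.items())

# Hypothetical keys and one examinee's stored responses
keys = {"item001": "A", "item002": "3", "item003": "1,4"}
responses = {"item001": "A", "item002": "2", "item003": "1,4"}
item_scores = {i: score_item(responses.get(i), k) for i, k in keys.items()}
total = raw_score(responses, keys)
```

Keeping the evaluator separate from the aggregation step means the same item-level scores can feed either raw scoring or an IRT scoring routine.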

Planning for Painless Data Exchanges and Conversions

- Systems and subsystems need to exchange data on a regular basis, providing different views and field conversions
- The hand-off must have several fool-proof QC steps
  - Verification of all inputs
  - Conversion success 100% verified
  - Reconciliation of all results, including counts, discrepancies, missing values, etc.

Example of a (Partial) Examinee’s Test Results Record

testp>wang>marcus>>605533641>0A1CD9>93bw100175>1>>501>001>ENU>CB1_CAST105>>90>0>0>0>

DTW>06/26/96>08:41:38>05:58:32>w10>2>apt 75>1000 soldiers field rd>north

fayette>IN>47900>USA>1>1235552021>>NOCOMPANYNAME>0>>>>>1>1235551378>>0>0>35>>142/21

8/0/u>1>1>7>CBSectionI.12>CB1>s>p>0>36>>72/108/0/u>Survey015>survey15>s>p>0>0>>0/0/0/u>CBSectionI>C

AST2S1>s>p>0>28>>28/62/0/u>CBSectionII>CAST2S4>s>p>0>42>>42/48/0/u>CBSectionII>CAST2S3>s>p>

0>0>>0/0/0/u>CBSectionII>CAST2S2>s>p>0>0>>0/0/0/u>Survey016>survey2>s>p>0>0>>0/0/0/u>0>372>

SAFM0377>2>0>E>5>s>E>1>76>>SAEB0549>2>0>D>5>s>A>0>68>>SAFM0378>2>0>A>5>s>A>1>72>>SA

AB1653>2>0>C>5>s>D>0>102>>SABA8868>2>0>B>5>s>C>0>85>>SCAA1388>2>0>E>8>s>E>1>53>>SAAA

8447>2>0>D>5>s>E>0>55>>SAAB1934>2>0>A>5>s>A>1>60>>SAAB2075>2>0>E>5>s>E>1>136>>SADA77

10>2>0>D>5>s>D>1>40>>SABB1040>2>0>B>5>s>E>0>46>>SCAA1396>2>0>H>10>s>A>0>93>>SACA8906

>2>0>D>5>s>E>0>75>>SADA8116>2>0>C>5>s>D>0>53>>SADA8673>2>0>B>5>s>B>1>41>>SACA8626>2>

0>B>5>s>D>0>48>>SAFM0374>2>0>C>5>s>D>0>80>>SABA6397>2>0>A>5>s>A>1>110>>SAAB1088>2>0>

C>5>s>C>1>55>>SACA8455>2>0>D>4>s>D>1>73>>SAAB1667>2>0>C>5>s>C>1>44>>SAAJ7633>2>0>C>5>

s>C>1>89>>SABA5745>2>0>D>5>s>A>0>43>>SCAA1389>2>0>B>8>s>H>0>61>>SADA8650>2>0>A>5>s>C

>0>39>>SAFB0112>2>0>C>5>s>C>1>132>>SAAB2513>2>0>B>5>s>B>1>120>>SAFA9248>2>0>E>5>s>A>0

>77>>SABJ1042>2>0>D>5>s>C>0>112>>SACJ5894>2>0>C>5>s>D>0>82>>SAAA0410>2>0>D>5>s>E>0>89

>>SAAB1681>2>0>C>5>s>C>1>88>>SAFM0365>2>0>A>5>s>A>1>65>>SAEA8980>2>0>A>5>s>B>0>52>>


Assessment XML

    <?xml version="1.0" encoding="UTF-8"?>
    <AssessmentResult xmlns="http://ns.hr-xml.org/2004-08-02"
        xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
        xsi:schemaLocation="http://ns.hr-xml.org/2004-08-02 AssessmentResult.xsd">
      <ClientId idOwner="Provider Inc">
        <IdValue name="ClientCode">OurClient-1342</IdValue>
      </ClientId>
      <ProviderId idOwner="Customer Inc">
        <IdValue>ePredix</IdValue>
      </ProviderId>
      <ClientOrderId>
        <IdValue name="PO Number">53RR20031618</IdValue>
        <IdValue name="Department Name">Administration</IdValue>
      </ClientOrderId>
      <Results>
        <Profile>Customer Service</Profile>
        <OverallResult>
          <Description>Executive Manager</Description>
          <Score type="raw score">51</Score>
          <Score type="percentile">65</Score>
          <Scale>40-60</Scale>
        </OverallResult>
      </Results>
      <AssessmentStatus>
        <Status>In Progress</Status>
        <Details>Remains: "GAAP Basic Knowledge"</Details>
        <StatusDate>2003-04-05</StatusDate>
      </AssessmentStatus>
      <UserArea/>
    </AssessmentResult>
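As an illustration of how a record like this might be read back into a data structure, the sketch below parses a trimmed copy of the assessment XML with Python's standard `xml.etree.ElementTree`. The trimmed sample and the `extract_result` helper are assumptions made for the example; element names and the namespace URI are taken from the slide, and the placement of `AssessmentStatus` outside `Results` is assumed.

```python
import xml.etree.ElementTree as ET

# Namespace prefix mapping for the default namespace used on the slide
NS = {"hr": "http://ns.hr-xml.org/2004-08-02"}

# Trimmed, well-formed sample based on the slide (assumed structure)
SAMPLE = """<?xml version="1.0" encoding="UTF-8"?>
<AssessmentResult xmlns="http://ns.hr-xml.org/2004-08-02">
  <Results>
    <Profile>Customer Service</Profile>
    <OverallResult>
      <Score type="raw score">51</Score>
      <Score type="percentile">65</Score>
    </OverallResult>
  </Results>
  <AssessmentStatus>
    <Status>In Progress</Status>
    <StatusDate>2003-04-05</StatusDate>
  </AssessmentStatus>
</AssessmentResult>"""

def extract_result(xml_text):
    """Return the profile, scores by type, and status from the XML text."""
    root = ET.fromstring(xml_text.encode("utf-8"))
    scores = {
        score.get("type"): int(score.text)
        for score in root.findall("hr:Results/hr:OverallResult/hr:Score", NS)
    }
    return {
        "profile": root.findtext("hr:Results/hr:Profile", namespaces=NS),
        "scores": scores,
        "status": root.findtext("hr:AssessmentStatus/hr:Status", namespaces=NS),
    }

result = extract_result(SAMPLE)
```

Because the tags name every field, a reader of the XML does not need a separate record layout, which is the main contrast with the delimited results record shown earlier.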

Translating XML Entities to a Data Structure

[Figure: an XML parser reads an XML document and delivers structured data to the application. Components shown: Content Handler, Error Handler, DTD and Schema Handler, Entity Resolver, external data entities, and external DTD, schema or XSL sheets.]

Extracting Data Views

- A data view is a set of restructuring functions that produce a data set from raw data
  - Views begin with a query
  - A view usually results in a formatted file structure
  - Graphing functions produce graphic data sets
  - Database functions produce database record sets
- Multiple views are possible for different uses (e.g., test assembly, item analysis, calibrations, scoring)
- Well-designed views are reusable
  - Standardized queries of the database(s)
  - Each view acts as a template with “object” status
  - Views can be manipulated by changing their properties (e.g., data types, presentation formats)
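One way to make a view reusable is to store the query itself in the database. The sketch below uses Python's built-in `sqlite3` module and an SQL `CREATE VIEW` statement; the table names, column names and rows are hypothetical and are not taken from the presenter's databases.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE responses (person_id TEXT, item_id TEXT, response TEXT);
CREATE TABLE item_keys (item_id TEXT PRIMARY KEY, answer_key TEXT);

INSERT INTO item_keys VALUES ('ITM001', 'B'), ('ITM002', 'D');
INSERT INTO responses VALUES
    ('P1', 'ITM001', 'B'),
    ('P1', 'ITM002', 'A'),
    ('P2', 'ITM001', 'C');

-- A stored, standardized query: the scoring view of the raw responses
CREATE VIEW scored_view AS
SELECT r.person_id,
       r.item_id,
       CASE WHEN r.response = k.answer_key THEN 1 ELSE 0 END AS score
FROM responses AS r
JOIN item_keys AS k ON k.item_id = r.item_id;
""")

def scoring_view(connection):
    """Return the scored view as a list of (person_id, item_id, score) rows."""
    cursor = connection.execute(
        "SELECT person_id, item_id, score FROM scored_view "
        "ORDER BY person_id, item_id"
    )
    return cursor.fetchall()

rows = scoring_view(conn)
```

Other views (for item analysis or test assembly, say) could be defined the same way over the same raw tables, so each consumer gets its own layout without duplicating the data.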

Types of Data Files (Views)

- Implicit Files: File format implies a structure for the data
  - Flat files with fixed columns (headers optional)
  - Comma, tab or other delimited files
- Explicit Files: Variables, data types, formats and the actual data are explicitly structured
  - Database files: dBASE, Oracle, Access, etc.
  - Row-column worksheets with “variable sets” (e.g., Excel in data mode, SPSS)
  - XML and SGML

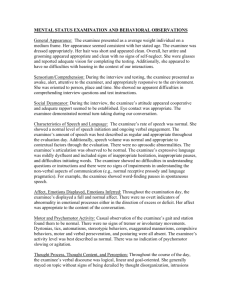

Explicit Structured “List View”

Structure of the Flat File (A Type of “Metadata”)

[Figure callouts: Data Type Definitions; Presentation View Data]

Relational DBM in Access

Relational Schema Across Databases for ATA

[Figure: entity-relationship diagram linking the tables below through primary keys (PK) and foreign keys (FK).]

- Classifications: Classification Scheme (PK), Classification Description
- Classification Attribute Constraints: Classification Scheme (PK, FK1), Constraint Expression
- Attribute Table: Attribute Code (PK), Classification Scheme (FK1), Attribute Description
- Item Attribute Table: Item ID Code (PK), Item Name, Classification Scheme, Attribute Code
- Eligible Item Table: Item ID Code (PK, FK1), Item ID, Answer Key Type, Answer Key Expression Count, Score Categories, Item Mean, Item SD, Pt. Biserial, D Constant, A Parameter, B Parameter, C Parameter, Word Count, Readability

Data File Extractions for Psychometric Processing

Item Analysis and Key Validation, Calibration and Equating

- Table.Active_Test_Forms
- Table.Active_Test_Form_Content
- Table.Active_Examinee_Test_Forms
- Table.Link_Item_Sets
- Table.Active_Examinee_Responses
- Table.Active_Item_Bank_Keyed

Query Test Form and Item Databases

SELECT Item.Records IF(Query_Conditions=TRUE)

| Test.TestID | Item.ID |
| --- | --- |
| TST0181 | ITM020920 |
| TST0181 | ITM048392 |
| TST0181 | ITM020342 |
| TST0181 | ITM038632 |
| TST0181 | ITM023833 |
| TST0181 | ITM031935 |
| TST0181 | ITM035329 |
| TST0181 | ITM022222 |
| TST0183 | ITM020921 |
| TST0183 | ITM030102 |
| TST0183 | ITM028902 |
| TST0183 | ITM038886 |
| TST0183 | ITM024519 |
| TST0183 | ITM039981 |
| TST0183 | ITM040027 |
| TST0183 | ITM030253 |

Sort Item.ID

| Test.TestID | Item.ID |
| --- | --- |
| TST0181 | ITM020342 |
| TST0181 | ITM020920 |
| TST0183 | ITM020921 |
| TST0181 | ITM022222 |
| TST0181 | ITM023833 |
| TST0183 | ITM024519 |
| TST0183 | ITM028902 |
| TST0183 | ITM030102 |
| TST0183 | ITM030253 |
| TST0181 | ITM031935 |
| TST0181 | ITM035329 |
| TST0181 | ITM038632 |
| TST0183 | ITM038886 |
| TST0183 | ITM039981 |
| TST0183 | ITM040027 |
| TST0181 | ITM048392 |

The P × I Query

SELECT Examinee.Records IF(Query_Conditions=TRUE)

| Exam.PersonID | Exam.TestID |
| --- | --- |
| 107555 | TST0183 |
| 517101 | TST0181 |
| 670048 | TST0181 |
| 758735 | TST0183 |
| 754364 | TST0183 |
| 827960 | TST0183 |
| 619834 | TST0183 |
| 615233 | TST0182 |
| 429336 | TST0182 |

| Exam.PersonID | Exam.TestID | Exam.ItemID | Exam.Status | Exam.iSequence | Exam.Date | Exam.iTime | Exam.Response |
| --- | --- | --- | --- | --- | --- | --- | --- |
| 107555 | TST0183 | ITM020921 | F | 1 | 11-Sep-04 | 39 | B |
| 107555 | TST0183 | ITM030102 | F | 2 | 11-Sep-04 | 131 | D |
| 107555 | TST0183 | ITM028902 | F | 3 | 13-Sep-04 | 39 | C |
| 107555 | TST0183 | ITM038886 | F | 4 | 13-Sep-04 | 61 | B |
| 107555 | TST0183 | ITM024519 | F | 5 | 13-Sep-04 | 58 | D |
| 107555 | TST0183 | ITM039981 | R | 6 | 13-Sep-04 | 67 | A |
| 107555 | TST0183 | ITM040027 | F | 7 | 13-Sep-04 | 61 | A |
| 107555 | TST0183 | ITM030253 | R | 8 | 13-Sep-04 | 34 | B |
| 517101 | TST0181 | ITM020920 | F | 1 | 14-Sep-04 | 65 | C |

The P × I Query

GENERATE.FLATFILE(Examinee.Records,Item.Records)

[Figure: person-by-item matrix of raw letter responses. Rows are Examinee.PersonID values 107555 and 517101; columns are the sixteen item IDs from TST0181 and TST0183, ITM020342 through ITM048392. Cells are filled only for the items each examinee was administered.]

Generate “Masked” Response File

| .PersonID | Items from TST0181 & TST0183 |
| --- | --- |
| 107555 | 9919911019991009 |
| 517101 | 1190199990019991 |
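The step from long-form transaction records to a masked person-by-item file can be sketched as a pivot. In the slide's example the digit 9 appears wherever an examinee's form did not contain the item, so the sketch assumes 9 is the "not administered" code; the function name, item IDs and answer keys below are hypothetical.

```python
MASK = "9"  # assumed code for an item that was not administered

def masked_response_file(transactions, keys, item_ids):
    """Pivot (person_id, item_id, response) rows into masked scored vectors.

    Columns follow the sorted item IDs; each cell is "1" (correct),
    "0" (incorrect) or MASK (item not on the examinee's form).
    """
    scored = {}
    for person_id, item_id, response in transactions:
        row = scored.setdefault(person_id, {})
        row[item_id] = "1" if response == keys[item_id] else "0"
    ordered = sorted(item_ids)
    return {
        person_id: "".join(row.get(item_id, MASK) for item_id in ordered)
        for person_id, row in scored.items()
    }

# Hypothetical three-item pool split across two forms
keys = {"ITM001": "C", "ITM002": "B", "ITM003": "D"}
transactions = [
    ("P1", "ITM002", "B"),
    ("P1", "ITM003", "A"),
    ("P2", "ITM001", "C"),
]
vectors = masked_response_file(transactions, keys, keys.keys())
```

Sorting the columns by item ID gives every examinee the same column layout regardless of form, which is what lets items from TST0181 and TST0183 share one rectangular file.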

Implied Flat File View of Test Data (Person by Item Flat Files)

Example 1: “raw response vectors” (input to commercial item analysis software)

    00001 BDCAABCAEDACBD
    00002 BDBAABDAEDBCBD
    00003 BCCBABCAEDABBD

Example 2: “scored response vectors” (input to commercial item calibration software)

    00001 11111111111011
    00002 11011101110011
    00003 10101111111100

Reconciliation 101

- Definitions: bringing into harmony, aligning, balancing
- Reconciliation is essential for CBT data management and quality assurance
  - Test forms, items, sets, examinees, and transaction output counts match input counts
  - Results match expectations or predictions
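A reconciliation check of this kind can be sketched as a comparison of expected and observed counts plus a list of unmatched identifiers. The function and argument names are hypothetical. As a point of reference, the report on the following slide lists 1687 examinees and 506100 transactions, which would be consistent with 300 items per examinee.

```python
def reconcile(assignments, items_per_form, bank_item_ids, transactions):
    """Compare expected and observed counts and list unmatched identifiers.

    assignments: dict person_id -> form_id
    items_per_form: dict form_id -> number of items on that form
    bank_item_ids: iterable of item IDs in the master item file
    transactions: list of (person_id, item_id) response records
    """
    expected = sum(items_per_form[form] for form in assignments.values())
    people_seen = {person for person, _ in transactions}
    items_seen = {item for _, item in transactions}
    return {
        "expected_transactions": expected,
        "observed_transactions": len(transactions),
        "counts_match": expected == len(transactions),
        "unmatched_items": sorted(items_seen - set(bank_item_ids)),
        "examinees_without_responses": sorted(set(assignments) - people_seen),
        "responses_without_examinee": sorted(people_seen - set(assignments)),
    }

# Hypothetical data: two examinees on a two-item form, one bad item ID
report = reconcile(
    assignments={"P1": "F1", "P2": "F1"},
    items_per_form={"F1": 2},
    bank_item_ids={"I1", "I2"},
    transactions=[("P1", "I1"), ("P1", "I2"), ("P2", "I1"), ("P2", "I9")],
)
```

Note that the counts match in this example even though one record carries an unknown item ID, which is why count checks and identifier checks are both needed.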

Reconciliation Example (Examinee Data for IA/Calibrations)

    File Reconcilation and Rectangular File Creator (R. Luecht, [c] 2009, 2010)
    Date/time: mm-dd-yy hh:mm:ss
    Control File: ControlFile.CON
    Examinee_Test_Form File: ActiveExamineeTestForm.txt NP = 1687
    Treatment of (Score_Status=1) items: INCLUDE Items and Responses
    Item File: MasterItemFile.DAT NI = 3857, Total Read = 3857 Excluded= 0
    ===========================================================
    Active_Examinee_Responses File=ActiveExamineeResponse.txt
    No. IDs (from Active_Examinee_Test_Form)= 1687
    File size (examinee transactions)= 506100
    No. nonblank records input = 506100
    No. records with unmatched items = 0
    Forms = 8
    1687 scored response records saved to Data-ResponseFile-Scored.RSP
    1687 raw response records saved to Data-ResponseFile-Raw.RAW

    ITEM LISTING and FORM ASSIGNMENTS DETECTED

    Item Counts by Form

[Table: 8 × 8 cross-tabulation of item counts, with rows 01:SampleForm001 through 08:SampleForm008 and columns 01 through 08; cell values are 0, 37, 200 or 300.]

    ID Identifier  NOpt  Opts   N-Count  NFrm  Forms
    Item_21801     5     ABCDE  41       1     04
    Item_24601     5     ABCDE  97       3     01 03 07
    Item_29801     5     ABCDE  97       2     02 07
    :
    <only partial records included to conserve space>

    MISMATCH SUMMARY
    ---------------
    NO unmatched item IDs to FORMS
    NO unmatched item IDs to RESPONSE RECORDS

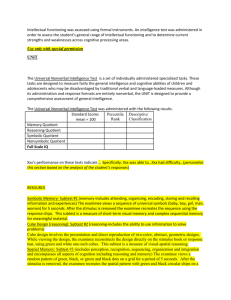

Follow the Single-Source Principle

- A unique master record should exist for every entity
  - Examinees registered/eligible to test
  - Items
  - Item sets
  - Modules, testlets or groups
  - Test forms
- Changes should be made to the master and forward-propagated for all processing

Example of Single Source

YES!!

NO!


Ignorable Missing Data?

- Very little data is missing completely at random, limiting the legitimate use of imputation
- Some preventable causes of missing data
- Lost records due to crashes/transmission errors
- Corrupted response capturing/records
- Purposeful omits
- Running out of time/motivation to finish

Challenges of Real-Time Test Assembly (CAT or LOFT)

- Real-time item selection requires high bandwidth and fast servers, and pre-fetching items reduces precision
- A “test form” does not exist until the examination is complete
  - QC of test forms is very difficult, except by audit sampling and careful refinement of test specifications (objective functions/constraints)
  - QC of the data against “known” test-form entities is NOT possible