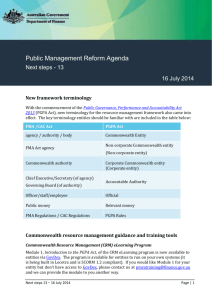

- Department of Finance
