OORT-1 - Department of Computer and Information Science

Some experiences at NTNU and Ericsson with Object-Oriented Reading Techniques (OORTs) for Design Documents

INTER-PROFIT/CeBASE seminar on

Empirical Software Engineering,

Simula Research Lab, Oslo, 22-23 Aug. 2002

-

Reidar Conradi, NTNU

Forrest Shull, Victor R. Basili, FC-Maryland (FC-MD)

Guilherme H. Travassos, Jeff Carver, Univ.Maryland (UMD)

{travassos, basili, carver}@cs.umd.edu; fshull@fc-md.umd.edu

http://www.cs.umd.edu/projects/SoftEng/ESEG/

conradi@idi.ntnu.no, http://www.idi.ntnu.no/grupper/su/


Department of Computer Science

Experimental Software Engineering Group

Fraunhofer Center - Maryland

Slide 1

Table of contents

• Motivation and context p. 3

• Reading and Inspections p. 4

• OO Reading and UML Documents, OORTs p. 8

• OO Reading and Defect Types p. 13

• OO Reading-Related Concepts p. 18

• Ex. Gas Station Control System p. 20

• OORT experimentation p. 27

• E0: Post-mortem of SDL-inspections at Ericsson-Oslo, 1997-99 p. 30

• E1: Student OORT experiment at NTNU, Spring 2000 p. 32

• E2: Feasibility OORT study at NTNU, Autumn 2001 p. 40

• E3: Student OORT experiment at NTNU, Spring 2002 p. 42

• E4: Industrial OORT experiment at Ericsson-Grimstad, May 2002 p. 47

• Conclusion p. 50

• Appendix: OORT-1: Sequence Diagram x Class Diagram p. 51

Slide 2

Motivation and context

• Need to have better techniques for OO reading – UML/Java.

But OO software is not a set of simple, ”linear” documents.

• Norwegian OORT work started at Conradi’s sabbatical at Univ. Maryland in

1999/2000: Adapting OORT experimental material from CS735 course at

UMD, Fall 1999. All artifacts and instructions in English.

• E0. Data mining of SDL inspections at Ericsson-Oslo , 1997-99.

Internal database, but marginal data analysis. As 3 MSc theses.

• E1. 1st OORT exper. at NTNU , 4th year QA course, March 2000, 19 stud.

Leaner reading instructions as Qx.ij, no other changes. Pass/no-pass for stud.

• E2. OORT feasibility study at NTNU: Two MSc NTNU-students, Lars

Christian Hegde and Tayyaba Arif, repeating E1 in Autumn 2001.

• E3. 2nd OORT exper. at NTNU, 4th year sw.arch. course, March 2002, 42 stud. Adjusted reading instructions (for E3), removed trivial defects in UML artifacts.

• E4. Industrial OORT exper. at Ericsson-Grimstad , 10 developers, 50/50 on old/new reading techniques, adjusted E3 techniques. Meagre internal baseline data. Part of PROFIT project - developers were paid 500 NOK/h, 4 MSc students (NTNU, HiA) and one PhD student at NTNU/Ericsson (Mohagheghi).

Slide 3

Reading and Inspections

Why read software?

• Reading (reviewing): Systematic reading of most software documents / artifacts (requirements, design, code, test data etc.) can increase:

– Reliability: since other persons are much better at finding defects (“errors”) created by you. Often a psychological block on this.

– Productivity: since defect fixing is much cheaper in earlier life-cycle phases, e.g. $30 to correct a line in rqmts, $4000 to fix a code line. And 2/3 of all defects can be found before testing, at 1/3 of the price.

– Understanding: e.g. for maintenance or error fixing.

– Knowledge transfer : novices should read the code of experts, and inversely.

– Maintainability: by suggesting a “better” solution/architecture, e.g. to increase reuse.

– General design quality/architecture : combining some of the above.

• We should not only write software (write-once & never-read?), but also read it (our own and others’).

But need guidelines (e.g. OORTs) to learn to read efficiently, not ad-hoc.

Slide 4

Reading and Inspections (2)

Why NOT read software?

• Logistical problems: takes effort, how to schedule and attend meetings?

• Proper skills are unavailable: needs domain and/or technological insight.

• Sensitive criticism: may offend the author, so counter-productive?

• Rewards or incentives: may be needed for both readers and writers.

• Boring: so need incentives, as above?

• General lack of motivation or insights.

Slide 5

Reading and Inspections (3)

Classic inspections

• “Fagan” inspections for defect discovery of any software artifact:

– I1. Preparation: what is to be done, plan the work etc. (*).

– I2. Individual reading: w/ complementary perspectives to maximize effect (*).

– I3. Common inspection meeting: assessing the reported defects.

– I4. Follow-up: correct and re-check quality, revise guidelines?

• Will look at steps I1-I2 here (*), with OORT1-7 guidelines for perspectives.

• In industry: typically 10% of effort on inspections, with net saving of 10-25%.

So quality is “free”!

• Recently: much emphasis on OO software development , e.g. using Rational Rose tool to create UML design diagrams.

But few, tailored reading techniques for such documents.

Over 200,000 UML licenses, so big potential.

• Example: Ericsson in Norway: previously using SDL, now UML and Java.

Had an old inspection process, but need new reading techniques.

Slide 6

Reading and Inspections (4)

Different needs and techniques of reading, e.g.:

[Figure: taxonomy of reading. PROBLEM SPACE (needs): Reading for Construction or Analysis; Analysis for Defect Detection or Usability; artifacts Requirements, Design, Code, User Interface; notations SCR, English, UML Diagrams, Screen Shot. SOLUTION SPACE (techniques, perspectives): Traceability (Horizontal, Vertical); Defect-based (Omission, Ambiguity, Incorrect, Inconsistent); Perspective-based (Developer, Tester, User); Usability-based (Expert).]

Slide 7

OO Reading and UML Documents

• Unified Modeling Language, UML : just a notational approach, does not propose/define how to organize the design tasks (process) .

• Can be tailored to fit different development situations and software lifecycles (processes)

UML Artifacts/Diagrams, five used later (marked with *):

• Dynamic View

– Use cases (analysis) *

– Activities

– Interaction: sequences *, collaborations

– State machines *

• Static View

– Classes *

Relationships: Generalization - IsA, Composition - PartsOf, Association - HasA, Dependency - DependsOn, Realization

Extensibility: Constraints, Stereotypes

– Descriptions (generated from UML) *

– Packages

– Deployment

Slide 8

OO Reading and UML Documents (2)

Our six relevant software artifacts:

• Requirements descriptions, RD : here structured text with numbered items, e.g. for a Gas Station. In other contexts: possibly with extra ER- and flow-diagrams.

• (Requirement) Analysis documents, UC : here use case diagrams in UML, with associated pseudocode or comments (“textual” use case). A use case describes important concepts of the system and the functionalities it provides.

• Design documents, also in UML:

– Class diagrams, CD : describe the classes and their attributes, behaviors

(functions = message definitions) and relationships.

– Sequence diagrams ( special interaction diagrams), SqD: describe how the system objects are exchanging messages.

– State diagrams, StD : describe the states of the main system objects, and how state transitions can take place.

• Class descriptions, CDe : separate textual documentation of the classes, partly as UML-generated interfaces in some programming language.

Slide 9

OO Reading and UML Documents (3)

The six software artifacts: requirement description, use cases and four design documents in UML:

[Figure: thumbnails of the six artifacts for the Loan Arranger example. Requirements description: Loan-Arranger Requirements Specification, Jan. 8, 1999. Use case diagram: actors Specified Lender, Investor, Loan Analyst and Fanny May, with use cases Receive Reports, Generate Reports, Monthly Report and Investment Request. Class diagram: Lender, Loan, Fixed_Rate Loan, Variable_Rate Loan, Bundle, Borrower, Loan Arranger. Sequence diagram: Receive Monthly Report. Class description: Fixed_Rate Loan, with operations risk and principal_remaining. State diagram: Loan, with states Good, Late and Default.]

Slide 10


OO Reading and UML Documents (4)

Software Artifacts, with OO Reading Techniques (OORTs) indicated:

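[Figure: the six artifacts arranged by phase. Requirements Specification/Analysis: Requirements Descriptions, Use-Cases. High Level Design: Class Diagrams, Class Descriptions, State Diagrams, Sequence Diagrams. Arrows between artifacts are labelled OORT-1 to OORT-7: horizontal reading within High Level Design, vertical reading between High Level Design and Requirements Specification/Analysis.]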

Slide 11

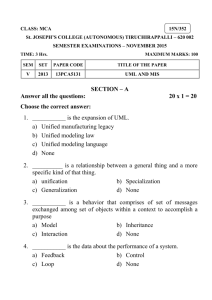

OO Reading and UML Documents (5)

The seven OO Reading Techniques (OORTs)

• OORT-1: Sequence Diagram x Class Diagram (horizontal, static)

• OORT-2: State Diagram x Class Description (horizontal, dynamic)

• OORT-3: State Diagram x Sequence Diagram (horizontal, dynamic)

• OORT-4: Class Diagram x Class Description (horizontal, static)

• OORT-5: Class Description x Requirement Description (vertical, static)

• OORT-6: Sequence Diagram x Use Case Diagram (vertical, dyn./stat.)

• OORT-7: State Diagram x Rqmt Descr. / Use Case Diagr. (vertical, dynamic)

• Abbreviations:

Requirement Description (RD), Use Case Diagram (UC),

Class Diagram (CD), Class Description (CDe) to supplement CD,

State Diagram (StD), Sequence Diagram (SqD).
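The pairing of artifacts in the list above can be sketched as a small lookup table. This is only an illustration: the class name `ReadingTechnique`, the level labels "requirements" and "design", and the rule that a technique is horizontal exactly when all its artifacts are design artifacts are assumptions made for this sketch, chosen so that they reproduce the horizontal/vertical labels on the slide.

```python
from dataclasses import dataclass

# Life-cycle level of each artifact abbreviation from the slide.
# The level names are labels chosen for this sketch.
ARTIFACT_LEVEL = {
    "RD": "requirements",
    "UC": "requirements",
    "CD": "design",
    "CDe": "design",
    "StD": "design",
    "SqD": "design",
}


@dataclass(frozen=True)
class ReadingTechnique:
    name: str
    left: str      # artifact being read
    right: tuple   # artifact(s) it is compared against

    def direction(self) -> str:
        """Horizontal if every artifact involved is a design artifact."""
        levels = {ARTIFACT_LEVEL[self.left]}
        levels |= {ARTIFACT_LEVEL[a] for a in self.right}
        return "horizontal" if levels == {"design"} else "vertical"


OORTS = [
    ReadingTechnique("OORT-1", "SqD", ("CD",)),
    ReadingTechnique("OORT-2", "StD", ("CDe",)),
    ReadingTechnique("OORT-3", "StD", ("SqD",)),
    ReadingTechnique("OORT-4", "CD", ("CDe",)),
    ReadingTechnique("OORT-5", "CDe", ("RD",)),
    ReadingTechnique("OORT-6", "SqD", ("UC",)),
    ReadingTechnique("OORT-7", "StD", ("RD", "UC")),
]

for technique in OORTS:
    others = " / ".join(technique.right)
    print(f"{technique.name}: {technique.left} x {others} ({technique.direction()})")
```

OORT-1 to OORT-4 come out as horizontal and OORT-5 to OORT-7 as vertical, matching the list.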

Slide 12

OO Reading and Defect Types

Reading Techniques and defect types:

[Figure: sources of information and defect types. Boxes: Domain Knowledge, General Requirements, Other Domain, Software (Design) Artifacts. Defect labels: incorrect fact, extraneous, omission, inconsistency, ambiguity.]

• Software reading techniques try to increase the effectiveness of inspections by providing procedural guidelines that can be used by individual reviewers to examine (or “read”) a given software artifact (design doc.) and identify defects.

• As mentioned, there is empirical evidence that tailored software reading increases the effectiveness of inspections for many software artifacts, not just source code.

Slide 13

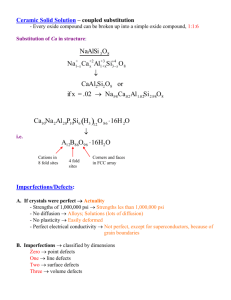

OO Reading and Defect Types (2)

Type of Defect: Description

Omission: One or more design diagrams that should contain some concept from the general requirements or from the requirements document do not contain a representation for that concept.

Incorrect Fact: A design diagram contains a misrepresentation of a concept described in the general requirements or requirements document.

Inconsistency: A representation of a concept in one design diagram disagrees with a representation of the same concept in either the same or another design diagram.

Ambiguity: A representation of a concept in the design is unclear, and could cause a user of the document (developer, low-level designer, etc.) to misinterpret or misunderstand the meaning of the concept.

Extraneous Information: The design includes information that, while perhaps true, does not apply to this domain and should not be included in the design.

Table 1 – Types of software defects, and their specific definitions for OO designs

Slide 14

OO Reading and Defect Types (3)

Examples of defect types:

• Omission ( conceptually using vertical information ) – “too little”:

Ex. Forgot to consider no-coverage on credit cards, forgot a state transition.

• Extraneous or irrelevant information ( most often vertical) – “too much”:

Ex. Has included both gasoline and diesel sales.

• Incorrect Fact ( most often vertical) – “wrong”:

Ex. The maximum purchase limit is $1000, not $100.

• Inconsistency ( most often horizontal) – “wrong”:

Ex. Class name spelled differently in two diagrams, forgot to declare a class function/attribute etc.

• Ambiguity ( most often horizontal) – “unclear”:

Ex. Unclear state transition, e.g. how a gas pump returns to “vacant”.

• Miscellaneous: other kind of defects or comments.

May also have defect severity: minor (comments), major, supermajor.

Also IEEE STD on code defects: interface, initialization, sequencing, …
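The defect types above, and the reading direction that most often reveals each, can be written down as a small data model. This is a sketch only: `DefectReport`, `tally_by_reading` and the default severity are hypothetical names and choices for illustration, not part of the OORT material, and the sample reports reuse the examples from the slide.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class DefectType(Enum):
    OMISSION = "omission"
    EXTRANEOUS = "extraneous information"
    INCORRECT_FACT = "incorrect fact"
    INCONSISTENCY = "inconsistency"
    AMBIGUITY = "ambiguity"
    MISCELLANEOUS = "miscellaneous"


# Reading direction that, per the slide, most often reveals each defect type.
# Miscellaneous has no typical direction, so it is left out.
TYPICAL_READING = {
    DefectType.OMISSION: "vertical",
    DefectType.EXTRANEOUS: "vertical",
    DefectType.INCORRECT_FACT: "vertical",
    DefectType.INCONSISTENCY: "horizontal",
    DefectType.AMBIGUITY: "horizontal",
}


@dataclass(frozen=True)
class DefectReport:
    description: str
    defect_type: DefectType
    severity: str = "major"  # hypothetical default; the slide lists minor, major, supermajor


def tally_by_reading(reports):
    """Count reports per typical reading direction ('unclassified' if none)."""
    return Counter(
        TYPICAL_READING.get(report.defect_type, "unclassified") for report in reports
    )


reports = [
    DefectReport("Forgot a state transition", DefectType.OMISSION),
    DefectReport("Maximum purchase limit is $1000, not $100", DefectType.INCORRECT_FACT),
    DefectReport("Class name spelled differently in two diagrams", DefectType.INCONSISTENCY),
    DefectReport("General remark on layout", DefectType.MISCELLANEOUS, severity="minor"),
]

print(tally_by_reading(reports))
```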

Slide 15

OO Reading and Defect Types (4)

Horizontal vs. Vertical Reading:

• Horizontal Reading, for internal consistency of a design:

• Ensure that all design artifacts represent the same system.

• Design contains complementary views of the information:

– Static (class diagrams)

– Dynamic (interaction diagrams)

• Not obvious how to compare these different perspectives.

• Vertical Reading, for traceability between reqmts/analysis and design:

• Ensure that the design artifacts represent the same system as described by the requirements and use-cases.

• Comparing documents from different lifecycle phases:

– Level of abstraction and detail are different

Slide 16

OO Reading and Defect Types (5)

The design inspection process with OO reading techniques:

[Figure: Readers 1, 2 and 3 first read individually, using horizontal reading (looking for consistency) or vertical reading (looking for traceability). Each reader is an “expert” in a different perspective.]

Meet as a team to discuss a comprehensive defect list.

Final list of all defects sent to designer for repairing

Slide 17

OO Reading-Related Concepts

• Levels of functionality in a design (used later in the OORTs):

– Functionality: high-level behavior of the system, usually from the user’s point of view. Often a use case.

Ex. In a text editor: text formatting.

Ex. At a gas station: fill-up-gasoline and pay.

– Service: medium-level action performed internally by the system; an “atomic unit” out of which system functionalities are composed.

Often a part of a use-case, e.g. a step in the pseudo-code.

Ex. In a text editor: select text, use pull-down menus, change font selection.

Ex. At a gas station: Transfer $$ from account N1 to N2, if there is coverage.

– Message (function): lowest-level behavior unit, out of which services and then functionalities are composed. Represents basic communication between cooperating objects to implement system behavior.

Messages may be shown on sequence diagrams and must be defined in their respective classes.

Ex. In a text editor: Write out a character.

Ex. At a gas station: Add $$ to customer bill: add_to_bill(customer, $$, date).
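The rule that messages shown on sequence diagrams must be defined in their respective classes lends itself to a mechanical check. The following is a minimal sketch of such a check, not the OORT-1 procedure itself; the `Message` type, the function name and the sample data (including which class receives which message) are assumptions for illustration.

```python
from typing import NamedTuple


class Message(NamedTuple):
    receiver_class: str  # class of the object that receives the message
    name: str            # message (function) name, without parameters


def undefined_messages(sequence_messages, class_operations):
    """Return the messages whose receiver class declares no operation of that name."""
    return [
        message
        for message in sequence_messages
        if message.name not in class_operations.get(message.receiver_class, set())
    ]


# Hypothetical extract of a class diagram: class name -> declared operations.
class_operations = {
    "Bill": {"add_to_bill"},
    "Parking_Spot": {"next_available"},
    "Purchase": set(),  # deliberately left empty to show a finding
}

# Hypothetical extract of a sequence diagram.
sequence_messages = [
    Message("Parking_Spot", "next_available"),
    Message("Bill", "add_to_bill"),
    Message("Purchase", "new_purchase"),
]

for message in undefined_messages(sequence_messages, class_operations):
    print(f"Possible inconsistency: {message.receiver_class}.{message.name} is not declared")
```

A finding from such a check is only a candidate defect; a reader still has to decide whether the sequence diagram or the class diagram is wrong.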

Slide 18

OO Reading-Related Concepts (2)

• Constraints/Conditions in requirements:

– Condition (i.e. local pre-condition) : what must be true, before a functionality/service etc. can be executed.

Example from GSCS’s 7. Payment : (see p.20-22)

… If (payment time) is now , payment type must be by credit card or cash ...

… If (payment time) is monthly , payment type must be by billing account ...

– Constraint (more global) : must always be true for some system functionality etc.

Example from GSCS’s 9.2 Credit card problem :

… The customer can only wait for 30 seconds for authorization from the Credit

Card System. …

– Constraints can, of course, be used in conditions to express exceptions .

– Both constraints and conditions can be expressed as notes in UML class / state

/ sequence diagrams.
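The difference between a condition and a constraint can be illustrated with the two GSCS examples above. This is a sketch under assumptions: the function and constant names are invented here, and treating the 30-second limit as a strict "less than" is one reading of the requirement text.

```python
# Constraint (global): the Credit Card System must answer within this time.
AUTHORIZATION_TIMEOUT_SECONDS = 30

# Condition (local pre-condition): allowed payment types per payment time.
ALLOWED_PAYMENT_TYPES = {
    "now": {"credit card", "cash"},
    "monthly": {"billing account"},
}


def payment_condition_holds(payment_time: str, payment_type: str) -> bool:
    """Pre-condition checked before a payment service may be executed."""
    return payment_type in ALLOWED_PAYMENT_TYPES.get(payment_time, set())


def authorization_constraint_holds(response_seconds: float) -> bool:
    """Constraint that must hold for every credit card authorization."""
    return response_seconds < AUTHORIZATION_TIMEOUT_SECONDS


print(payment_condition_holds("now", "cash"))
print(payment_condition_holds("monthly", "cash"))
print(authorization_constraint_holds(12.5))
```

Keeping the two kinds of rule in separate functions mirrors the slide: the condition guards one service, while the constraint applies wherever authorization is used.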

Slide 19

Ex. Gas Station Control System

Ex. Simplified requirement specification for a Gas Station Control System (GSCS), mainly for payment:

• 1. Gas station: Sells gasoline from gas pumps, rents parking spots, has a cashier and a GSCS.

• 2. Gas pump: Gasoline is sold in self-service gas pumps. The pump has a computer display and keyboard connected to the GSCS, and similarly for a credit card reader. If the pump is vacant, the customer may dispense gasoline. He is assisted in this by the GSCS, which supervises payment (points 7-9), and finally resets the pump to vacant. Gasoline for up to $1000 can be dispensed at a time.

• 3. Parking spot: Regular customers may rent parking spots at the gas station. The cashier queries the GSCS for the next available parking spot, and passes this information back to the customer. See points 7-9 for payment.

• 4. Cashier: An employee of the gas station, representing the gas station owner. One cashier is on duty at all times. The cashier has a PC and a credit card reader, both communicating with the GSCS. He can rent out parking spots, and receive payment from points 2 & 3 above, while returning a receipt.

Slide 20

Ex. Gas Station Control System (2)

• 5. Customer

May fill up gasoline at a vacant gas pump, rent a parking spot at a cashier, and pay at the gas pump (for gasoline) or at the cashier. Regular customers are employed in a local business, which is cleared for monthly billing.

• 6. GSCS

– Keeps inventory of parking spots and gasoline, a register of regular customers and their businesses and accounts, plus a log of purchases.

– Has a user interface at all gas pumps and at the cashier’s PC, and is connected to an external Credit Card System and to local businesses (via Internet).

– Computes the price for gasoline fill-ups, informs the cashier about this, and can reset the gas pump to vacant.

– Will assist in making payments (points 7-9).

• 7. Payment in general

Payment time and type are selected by the customer.

Payment time is either now or monthly :

– If it is now , payment type must be by credit card or cash (incl. personal check).

– If it is monthly , payment type must be by billing account to local business.

There are two kinds of purchase items: gasoline fill-up and parking spot rental.

A payment transaction involves only one such item.

Slide 21

Ex. Gas Station Control System (3)

• 8. Payment type

• 8.1 By cash (or personal check): can only be done at the cashier.

• 8.2 By credit card: can be done either at the gas pump or at the cashier.

The customer must swipe his credit card appropriately, but with no PIN code.

• 8.3 By billing account: the customer must give his billing account to the cashier, who adds the amount to the monthly bill of a given business account.

• 9. Payment exception

• 9.1 Cash (check) problem: The cashier is authorized to improvise.

• 9.2 Credit card problem : The customer can only wait for 30 seconds for authorization from the Credit Card System. If no response or incorrect credit card number / coverage, the customer is asked for another payment type / credit card.

At the gas pump, only one payment attempt is allowed; otherwise the pump is reset to vacant (to not block the lane), and the customer is asked to see the cashier.

• 9.3 Business account problem: If the account is invalid, the customer is asked for another payment type / account number.
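The credit card exception in point 9.2 can be sketched as a small decision function. The enum members and the function name are hypothetical, and the sketch assumes that "no response" means no answer in under 30 seconds.

```python
from enum import Enum


class Location(Enum):
    PUMP = "gas pump"
    CASHIER = "cashier"


class Action(Enum):
    COMPLETE_PURCHASE = "complete purchase"
    ASK_FOR_OTHER_PAYMENT = "ask for another payment type / credit card"
    RESET_PUMP_AND_SEE_CASHIER = "reset pump to vacant, ask customer to see cashier"


def after_credit_card_attempt(
    location: Location,
    authorized: bool,
    response_seconds: float,
    timeout_seconds: float = 30.0,
) -> Action:
    """Decide what happens after one credit card authorization attempt."""
    if authorized and response_seconds < timeout_seconds:
        return Action.COMPLETE_PURCHASE
    if location is Location.PUMP:
        # Only one payment attempt is allowed at the pump (point 9.2).
        return Action.RESET_PUMP_AND_SEE_CASHIER
    return Action.ASK_FOR_OTHER_PAYMENT


print(after_credit_card_attempt(Location.PUMP, authorized=False, response_seconds=5.0).value)
print(after_credit_card_attempt(Location.CASHIER, authorized=True, response_seconds=45.0).value)
```

The sketch does not limit the number of attempts at the cashier, because the requirements do not state such a limit.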

Slide 22

Ex. Gas Station Control System (4)

Example, part 1: Possible weaknesses in GSCS requirements:

• What about no more gasoline, or no more parking spots?

• How should the user interface dialogs be structured?

• Is any credit card allowed, including banking cards (VISA etc.)?

• What kind of information should be transferred between gas pumps and the GSCS, between the cashier and the GSCS etc.?

• How to collect monthly payment from local businesses?

• How many payment attempts should be given to the customer at the cashier?

• What if the customer ultimately cannot pay?

Can be found by special reading techniques for requirements, but this is outside our scope here.

Slide 23

Ex. Gas Station Control System (5)

• Example, part 2: Parking Spot related messages in a sequence diagram for Gas Station.

[Sequence diagram. Objects: Customer : Customer, Gas Station Owner : Gas Station Owner, Parking Spot : Parking_Spot, Credit Card System : Credit_Card System, Customer Bill : Bill, Purchase : Purchase. Messages, with guards in brackets:

parking_spot_request(account_number)

next_available()

where_to_park(available parking_spot)

lease_parking_spot(parking_spot, payment time, payment type)

[Payment type = Credit Card and payment time = now] authorize_payment(customer, amount, date) [response time < 30 secs]

[response time => 30 secs or credit card not authorized and payment time = now] new_payment_type_request()

[payment time = monthly] add_to_bill(customer, amount, date)

new_purchase(customer, parking_spot, date)]

Ex. Gas Station Control System (6)

• Example, part 3: Abstracting messages to two services for Gas Station –

GetParkingSpot (“dotted” lines) and PayParkingSpot (“whole” lines).

[Sequence diagram: the same objects and messages as in part 2, with each message drawn as a “dotted” or a “whole” line according to the service it belongs to.]

Requirements for Gas Station Control System (7)

• Example, part 4: Checking whether a constraint is fulfilled in Gas Station class diagram:

Credit_Card System (from External Systems)

+ authorize_payment(customer, amount, date)

[ response time should be less than 30 seconds for all Credit Card Systems ]

Slide 26

OORT experimentation

Empirical Evaluations of OORTs

• Receiving feedback from users of the techniques:

Controlled Experiments

Observational Studies

• Revising the techniques based on feedback:

Qualitative (mostly)

Quantitative

• Continually evaluating the techniques to ensure they remain feasible and useful

• Negotiating with companies to implement OORTs on real development projects.

• Goal: To assess effectiveness on industrial projects …

Are time/effort requirements realistic?

Do the techniques address real development needs?

• … using experienced developers.

Is there “value added” also for more experienced software engineers?

Slide 27

OORT experimentation (2)

What we know:

• Techniques are feasible

• Techniques help find defects

• Vertical reading finds more defects of omission and incorrect fact

• Horizontal reading finds more defects of inconsistency and ambiguity

What we don’t know:

• What influence does domain knowledge have on the reading process?

– Horizontal x Vertical

• Can we automate a portion of the techniques, e.g. by an XMI-based UML tool?

– Some steps are repetitive and mechanical

– Need to identify clerical activities

• See also conclusion.

– http://www.cs.umd.edu/Dienst/UI/2.0/Describe/ncstrl.umcp/CS-TR-4070

– http://fc-md.umd.edu/reading.html

Slide 28

OORT experimentation (3)

Experiments so far:

• Controlled Experiment I, Autumn 1998:

– Undergraduate Software Engineering class, UMD

– Goal: Feasibility and Global Improvement

• Observational Studies, FC-UMD, Summer 1999:

– Goal: Feasibility and Local Improvements

• Observational Studies II, UMD, Autumn 1999:

– Two Graduate Software Engineering Classes, UMD,

– Goal: Observation and Local Improvement

• Controlled Experiment III, Spring 2000:

– Undergraduate Software Engineering Class, UMD,

– Goal: General life-cycle study (part of larger experiment)

• And more at UMD…

• One Postmortem, Three Controlled Exper., one Feasibility Study, 2000-02:

- The E0-E4 at NTNU/Ericsson

Slide 29

E0: Post-mortem of SDL-inspections at Ericsson-Oslo, 1997-99

• Overall goals: Study existing inspection process, with SDL diagrams (emphasis) and PLEX programs. Possibly suggest process changes.

• Context:

– Inspection process for SDL in Fagan-style, adapted by Tom Gilb / Gunnar Ribe at Ericsson in early 90s.

But now UML, not as “linear” as SDL.

– Good company culture for inspections.

– Internal, file-based inspection database also with data for some test phases.

– One project release A with 20,000 person-hours.

– Further releases B-F with 100,000 person-hours.

• Post-mortem study:

– Three MSc students dug up and analyzed data.

– To learn and to test hypotheses, e.g. about role of complexity and persistent longitudinal relationships.

– Good support by local product line manager, Torbjørn Frotveit.

Slide 30

E0: Post-mortem of SDL-inspections at Ericsson-Oslo, 1997-99 (2)

• Main findings: (PROFES’99 and NASA-SEW’99 papers, chapter in Gilb-book’02)

– Three defect types: Supermajor, Major, (+ Comments) – and impossible to extend by us later.

– Effectiveness/efficiency: 70% of all defects caught in individual reading (1ph/defect), 6% in later meetings (8ph/defect).

Average defect efficiency in later unit&function testing: 8-10ph/defect.

– Actual inspection rate: 5ph/SDL-page, recommended rate: 8ph/SDL-page; implies that we could have found 50% more defects at 1/6 of later test costs.

– Release A: 1474 ph on inspections, saves net 6700 ph (34%) of 20,000 ph.

– Release B-F: 20,515 ph on insp., saves net 21,000 ph (21%) of 100,000 ph.

– Release A: no correlation between module complexity (#states) and number of defects found in inspection – i.e. puts the best people on the hardest tasks!

– Release B-F: many non-significant correlations on defect-proneness across phases and releases – but too little data on the individual module level.

• But: lots of interesting data, yet little local after-analysis, e.g. to understand and tune the process. However, new process with Java/UML from 1999/2000.
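The percentages quoted for the two release groups follow directly from the person-hour figures on the slide. A short sketch of the arithmetic, where the dictionary layout and the function name are chosen for this illustration only:

```python
# Person-hour (ph) figures as quoted on the slide.
RELEASES = {
    "A": {"inspection_ph": 1474, "net_saving_ph": 6700, "total_ph": 20_000},
    "B-F": {"inspection_ph": 20_515, "net_saving_ph": 21_000, "total_ph": 100_000},
}


def share_of_total(release: str, key: str) -> float:
    """Return the given effort figure as a fraction of the release's total effort."""
    figures = RELEASES[release]
    return figures[key] / figures["total_ph"]


for name in RELEASES:
    inspection = share_of_total(name, "inspection_ph")
    saving = share_of_total(name, "net_saving_ph")
    print(f"Release {name}: inspections {inspection:.1%} of effort, net saving {saving:.1%}")
```

For release A the net saving is 6700 / 20,000 = 33.5%, which the slide rounds to 34%; for releases B-F it is 21,000 / 100,000 = 21%.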

Slide 31

E1: First OORT experiment, NTNU, Spring 2000

• Overall goals: Learn defect detection techniques and specially OORTs, check if the OORTs are feasible and receive proposals to improve them, compare discovered defects with similar experiments at Univ. Maryland (course CS735).

• Context: QA/SPI course, 19 students, 9 groups, pass/nopass, T.Dingsøyr as TA.

• Process:

– Make groups: two students in each group (a pair), based on questionnaires.

Half of the groups are doing OORTs 2,3,6,7 (mainly state diagrams), the other half OORTs 1,4,5,6 (mainly class/sequence diagrams).

– General preparation: Two double lectures on principles and techniques (8 h).

– Special preparation (I1): Look at requirements and guidelines (2h, self study).

– OO Design Reading (I2): Read and fill out defect/observ. reports (8h, paired); one group member executes the reading, the other is observing the first.

• Given documents: lecture notes w/ guidelines for OORT1-7 and observation studies, defect and observation report forms, questionnaires to form groups and resulting group allocation, set of “defect-seeded” software documents (RD, UC,

CD, CDe, SqD, and StD) – either for Loan Arranger (LA) or Parking Garage

(PG) example. Three markup pens.

Slide 32

E1: First OORT experiment, NTNU, Spring 2000 (2)

• Differences with UMD-experiment Autumn 1999:

– OORT instructions operationalized (as Qx.ij) for clarity and tracing by Conradi.

See Appendix for OORT-1.

– LA is considered harder and more static, PG easier and more dynamic?

– UMD and NTNU are different university contexts.

• Qualitative findings:

– Big variation in effort, dedication and results:

E.g. some teams did not report effort data, even did the wrong OORTs.

– Big variation in UML expertise.

– Students felt frustrated by the extent of the assignment, and found the indicated effort estimates too low -- felt cheated.

– Lengthy and tedious pre-annotation of artifacts, before real defect detection could start (”slave labor”). Discovered many defects already during annotation, even defects that remained unreported.

– OORTs too ”heavy” for the given (small) artifacts?

– Some confusion about the assignments: what, how, on which artifacts, ...?

– But also many positive and concrete comments.

Slide 33

E1: First OORT experiment, NTNU, Spring 2000 (3)

• Quantitative findings:

– Recorded defects and comments:

Parking Garage: 17 (of 32) seeded defects & 3 more + 43 comments.

Loan Arranger: 17 (of 30) seeded defects & 4 more + 44 comments.

– Defect and comm. occurr., 5 PG groups and 4 LA; sum, average, range:

PG: sum:33, 6 (4..10) & 1 (0..2) + sum:68, 14 (3..22) comments.

LA: sum:52, 11 (7..14) & 2 (0..4) + sum:72, 18 (9..37) comments.

– Duplicately reported defects:

PG: 11 of 13 duplicate defect occurrences found by different OORTs.

LA: 12 of 31 duplicate defect occurrences found by different OORTs.

– Effort spent: (for 4 OORTs, counting one person per team)

(Discrepancy = defect or comment.)

PG: 6-7 person-hours, ca. 3 discrepancies/ph.

LA: 10-13 person-hours, ca. 2.5 discrepancies/ph.

– Note: 2X more ”comments” than pure defects … and long arguments on what is what! A comment can go on details, as well as architecture.
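The discrepancy rates can be roughly cross-checked against the occurrence sums above. The slide does not state how the rates were computed; the sketch below assumes that a rate is the number of defect and comment occurrences per group, divided by the person-hours spent per group, and the function name is hypothetical.

```python
def discrepancies_per_person_hour(defects, comments, groups, person_hours):
    """Occurrences (defects + comments) per group, divided by effort per group."""
    return (defects + comments) / groups / person_hours


# Parking Garage: 33 defect and 68 comment occurrences, 5 groups, 6-7 ph each.
pg_high = discrepancies_per_person_hour(33, 68, groups=5, person_hours=6)
pg_low = discrepancies_per_person_hour(33, 68, groups=5, person_hours=7)

# Loan Arranger: 52 defect and 72 comment occurrences, 4 groups, 10-13 ph each.
la_high = discrepancies_per_person_hour(52, 72, groups=4, person_hours=10)
la_low = discrepancies_per_person_hour(52, 72, groups=4, person_hours=13)

print(f"PG: {pg_low:.1f} to {pg_high:.1f} discrepancies/ph")
print(f"LA: {la_low:.1f} to {la_high:.1f} discrepancies/ph")
```

Under this assumption both ranges bracket the rates quoted on the slide (ca. 3 for PG, ca. 2.5 for LA).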

Slide 34

E1: First OORT experiment, NTNU, Spring 2000 (4)

• Quantitative findings (cont’d 2):

Defect/OORT types in PG inspection – for 33 defect occurrences

[Table: defect type (Omission, Extraneous, Incorrect Fact, Ambiguity, Inconsistency, Miscellaneous, Total) by technique (OORT-1 to OORT-7, Sum all OORTs), with a ”Mixed” profile row. Sum all OORTs: Omission 16, Extraneous 2, Incorrect Fact 11, Ambiguity 1, Inconsistency 3, Miscellaneous -, Total 33.]

Slide 35

E1: First OORT experiment, NTNU, Spring 2000 (5)

• Quantitative findings (cont’d 3):

Defect/OORT types in LA inspection – for 52 defect occurrences

OORT \

Defect type

Omission

Extraneous

Incorrect Fact

Ambiguity

Inconsistency

Miscellaneous

OORT-1  OORT-2  OORT-3  OORT-4  OORT-5  OORT-6  OORT-7  Sum all OORTs

7

3 5 1 3 2

7

-

14

20 2

Total 23 7

”Static” profile Very H.Middle

1

7

10

2

9

High High

2

-

31

-

52

Slide 36

E1: First OORT experiment, NTNU, Spring 2000 (6)

• Quantitative findings (cont’d 4):

Comment types/causes in PG inspection -- for 43 comments (not 68 occurrences)

Comment cause \ type    Missing Behav.  Miss. Attrib.  Typo / Spell.  System Border  Clarification  Other  Sum all causes

7 5 10 1 2 Omission

Extraneous

Incorrect Fact

Ambiguity

Inconsistency

Miscellaneous

Total

1

8 5

4

4

2

12

1

3

3

8

4

6

3

43

5

4

25

-

6

Slide 37

E1: First OORT experiment, NTNU, Spring 2000 (7)

• Quantitative findings (cont’d 5):

Comment types/causes in LA inspection -- for 44 comments (not 72 occurrences)

Comment cause \ type    Missing Behav.  Miss. Attrib.  Typo / Spell.  System Border  Clarification  Other  Sum all causes

Omission 16 1 3 20

Extraneous

Incorrect Fact

Ambiguity

Inconsistency

Miscellaneous

Total

1

17

1

2

3

3

6

2

5

1

2

3

1

7

5

2

7

-

7

7

6

4

44

Slide 38

E1: First OORT experiment, NTNU, Spring 2000 (8)

• Lessons:

– Some unclear instructions: Executor/Observer role, Norwegian file names, file access, some typos. First read RD?

– Some unclear concepts: service, constraint, condition, …

– UML: unfamiliar to some groups.

– Technical comments on artifacts and OORTs:

Add comments/rationale to diagrams: UC and CD are too brief.

CDe hard to navigate in -- add separators.

SqD had method parameters, but CD not -- how to check?

Need several artifacts (also RD) to understand some OORT questions.

Many trivial typos and naming defects in the artifacts, by UML tool?:

Parking Garage artifacts need more work

Fanny May = Loan Arranger? Lot = Parking Garage?

LA vs. Loan Arranger vs. LoanArranger, gate vs. Gate,

CardReaders vs. Card_Readers.

All relations in CDia had cardinalities reversed!

…really frustrating to deal with poor-quality artifacts

Slide 39

E2: OORT feasibility study at NTNU, Autumn 2001

• Overall goals: Go through all OORT-related material to learn and propose changes, first for NTNU experiment and later for Ericsson experiment.

• Context: Two senior MSc students at NTNU, Hegde and Arif in Depth Project course (half semester), each repeating E1 as a combined executor/observer.

• Process:

– Repeat E1 process, but so that each executor is doing all OORTs on either LA or PG.

– Analysing data and suggesting future improvements based on both E1 and E2.

• Findings (next slide):

– Used about 15 hours each, 2X that of E1 groups – but did all 7 OORTs, not 4.

– 28 and 27 defects found in PG and LA, respectively: 3X as many per OORT as in E1.

– Found 9 more PG defects: 35+9 = 44, 4 more LA defects: 34+4 = 38.

– Found 34 more PG comm.: 43+34 = 77, 10 more LA comm.: 34+10 = 44.

– About 4 discrepancies/ph, 50% more than in E1.

– Many good suggestions for improvements on OORTs, artifacts, and process.

– So motivation means a lot!

Slide 40

E2: OORT feasibility study at NTNU, Autumn 2001 (2)

• Quantitative findings (cont’d 2):

Results from PG/LA inspections – NB: #defects = #occurrences

Executor \ Background and results   PG (by Hegde, doing all OORTs)   LA (by Arif, doing all OORTs)
Industrial background               Low-Med                          Low
UML background                      Low-Med                          Med-High
#defects recorded                   28 (of 44, +9 new)               27 (of 38, +4 new)
#comments recorded                  40 (w/ 34 new)                   25 (w/ 10 new)
Total effort (in min.)              900                              910
Effort per Discrepancy              13 min (4.5/ph)                  17.5 min (3.8/ph)
(Defect+Comment)

Slide 41

E3: Second OORT experiment, NTNU, Spring 2002

• Overall goals: As in E1, but also to try out certain OORT changes for later industrial experiments in E4.

• Context: Sw Arch. course, 42 students, 21 groups, pass/no-pass, Hegde and Arif as TAs.

• Process:

– Mainly as in E1, but a web application was used to manage artifacts and filled-in forms.

– OORTs enhanced for readability (not as terse as in E1), and generally polished and corrected.

• Given documents: Mostly as in E1, but trivial defects (typos, spellings etc.) in artifacts corrected to reduce the amount of “comments”.

Slide 42

E3: Second OORT experiment at NTNU, Spring 2002 (2)

• Changes in OORTs for E3 experiment, based on E1/E2 insights:

Change type                          Count
Error in original OORTs from UMD         3
Error in E1-conversion to Qx.ij         10
Question rephrased                      20
Comments added (more words)             22
Total                                   55

Slide 43

E3: Second OORT experiment at NTNU, Spring 2002 (3)

• Quantitative findings for PG:

The 11  OORTs     Defects  Old defect       Old comment  New comment  Total          Effort  Efficiency
groups            seeded   occurr.          occur.       occur.       discrepancies  (min)   (disc./ph)
10      2,3,6,7   44       4                1            3            8              337     1.42
11      2,3,6,7   44       4                0            4            8              370     1.30
12      2,3,6,7   44       3                0            5            8              210     2.29
13      2,3,6,7   44       4                9            23           36             870     2.48
14      1,4,5,6   44       8                5            7            20             232     5.17
15      1,4,5,6   44       5                11           8            24             445     3.24
16      1,4,5,6   44       5                2            3            10             215     2.79
17      1,4,5,6   44       7                7            7            21             230     5.48
18      1,4,5,6   44       3                4            9            16             266     3.61
19      1,4,5,6   44       6                10           11           27             320     5.06
30      1,4,5,6   44       4                10           11           25             390     3.85
Sum               (44)     53 (21 defects)  59 (77 old)  91 (78 new)  203            3885
Mean                       4.8              5.4          8.3          18.5           353.2   3.30
Median                     4                5            7            20             320     3.24

Slide 44
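The efficiency column in the E3 tables appears to be total discrepancies divided by effort in person-hours. A minimal Python sketch of that arithmetic for the PG groups follows. It assumes the pairing of total discrepancies and effort per group as read from the table above; the function and variable names are illustrative and not part of the study material.

```python
from statistics import mean, median

# (total discrepancies, effort in minutes) per PG group, as read from the E3 table.
# The pairing of totals with effort figures is an assumption of this sketch.
pg_groups = {
    10: (8, 337), 11: (8, 370), 12: (8, 210), 13: (36, 870),
    14: (20, 232), 15: (24, 445), 16: (10, 215), 17: (21, 230),
    18: (16, 266), 19: (27, 320), 30: (25, 390),
}


def discrepancies_per_person_hour(discrepancies: int, effort_min: float) -> float:
    """Efficiency in discrepancies per person-hour (disc./ph)."""
    return discrepancies / (effort_min / 60.0)


efficiency = {
    group: discrepancies_per_person_hour(total, effort)
    for group, (total, effort) in pg_groups.items()
}

for group, value in efficiency.items():
    print(f"group {group}: {value:.2f} disc./ph")
print(f"mean {mean(efficiency.values()):.2f}, median {median(efficiency.values()):.2f}")
```

Under these assumptions the per-group median rounds to the 3.24 disc./ph on the slide, and the per-group mean lands close to the reported 3.30.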

E3: Second OORT experiment, NTNU, Spring 2002 (4)

• Quantitative findings for LA:

The 10  OORTs     Defects  Old defect       Old comment  New comment  Total          Effort  Efficiency
groups            seeded   occurr.          occur.       occur.       discrepancies  (min)   (disc./ph)
20      2,3,6,7   38       2                0            3            5              286     1.05
21      2,3,6,7   38       3                4            9            16             245     3.92
22      2,3,6,7   38       5                2            5            12             162     4.44
23      2,3,6,7   38       3                0            17           20             270     4.44
24      2,3,6,7   38       0                0            3            3              230     0.78
25      1,4,5,6   38       11               9            11           31             385     4.83
26      1,4,5,6   38       4                2            7            13             400     1.95
27      1,4,5,6   38       14               10           17           41             280     8.97
28      1,4,5,6   38       4                1            4            9              265     2.04
29      1,4,5,6   38       11               13           11           35             270     7.78
31      1,4,5,6   38       12               19           9            40             315     7.62
Sum               (38)     69 (21 defects)  60 (44 old)  96 (72 new)  225            3108
Mean                       6.3              5.5          8.7          20.5           282.5   4.30
Median                     4                2            9            16             270     4.44

Slide 45

E3: Second OORT experiment, NTNU, Spring 2002 (5)

• Findings, general :

– PG: 4-5 defects found per group, totally found 21 (of 44) defects in 53 occurr.,

150 comment occurrences with 78 new comments (*). Mean: 3.3 discr./ph.

– LA: 4-6 defects found per group, totally found 21 (of 38) defects in 69 occurr.,

156 comment occurrences with 72 new comments (*). Mean: 4.3 discr./ph.

– OORT-2,3,6,7: found fewer defects than OORT-1,4,5,6.

– Efficiency: 3-4 discrepancies/ph, or ca. 1 in industrial context (3-4 inspectors).

– Group variance: 1:4 (for PG) and 1:11 (for LA) in #defects found and in

#defects/ph. Motivation was rather poor and variable?

– Cleaning up artifacts for trivial defects (typos etc.): did not reduce number of comments, still 3X as many comments as defects!

*) New comments must be analyzed further, possibly new defects here also.

Slide 46

E4: Industrial OORT experiment at Ericsson-Grimstad, Spring 2002

• Overall goals: Investigate feasibility of new OORTs vs. old checklist/view-based reading techniques in an industrial environment at Ericsson-Grimstad.

• Context:

– Ericsson site in Grimstad, ca. 400 developers, 250 doing GPRS work, 10 developers paid to perform experiment, part of INCO and PROFIT projects.

Much internal turbulence in down-sizing in Spring 2002.

– Overall inspection process in place, need upgrade in individual techniques for

UML-reading and generally better metrics.

– Hegde and Arif as TAs, supplemented with two MSc students from the local HiA, and NTNU PhD student Mohagheghi from Ericsson as internal coordinator.

• Process:

– First lecture with revised OORTs, then individual reading (preparation), then common inspection meeting. Later data analysis by HiA/NTNU.

– The OORTs were adapted for lack of CDe (OORT-4: CDxCDe) and RD (using

UC instead), e.g. no OORT-5: CDxRD.

– Artifacts: as paper and on internal file catalogs, forms: on the web.

• Given documents: no RD (too big and with appendices), UC, CD (extended and also serving as CDe), one StD (made hastily from a DFD), and SqD. Only increments were to be inspected, according to a separate list.

Slide 47

E4: Industrial OORT experiment at Ericsson-Grimstad, Spring 2002 (2)

• Quantitative results from Ericsson-Grimstad:

Defects and effort          Partial baseline,         Current view-based techn.,  Revised OORTs,
                            June 2001 - March 2002    5 persons, May 02           4 persons, May 02
#defect occur. by reading   84                        17 (all different)          47 (39 different)
#defect occur. in meeting   82                        8                           1
Reading effort              99.92 ph (0.84 def/ph)    10 ph (1.70 def/ph)         25.5 ph (2.29 def/ph)
Meeting effort              214.92 ph (0.38 def/ph)   8.25 ph (0.97 def/ph)       9 ph (0.11 def/ph)
Total effort                314.84 ph (0.53 def/ph)   18.25 ph (1.37 def/ph)      34.5 ph (1.57 def/ph)

Slide 48

E4: Industrial OORT experiment at Ericsson-Grimstad, Spring 2002

• Lessons learned in E4 at Ericsson:

– General:

Reading (preparation) generally done too fast – already known.

Weak existing baseline and metrics, but under improvement.

Inspections to find defects; design reviews for “deeper” design comments

– OORTs vs. view-based reading/inspection:

OORT data are for 4 inspectors, the 5th did not deliver his forms.

OORTs perform a bit better than view-based in efficiency.

But OORTs find 2X as many defects.

View-based technique finds 1/3 of total defects in inspection meeting!

Need to cross-check duplicate defect occurrences,

but no overlap in defects found by view-based and OORTs!

Both outperform the inspection “baseline” by almost 3X in efficiency.

So many aspects to follow up!

Slide 49
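The ratios quoted in these lessons can be cross-checked against the E4 results table. The Python sketch below uses only figures quoted on the slides and counts defect occurrences, not distinct defects; the variable names are illustrative.

```python
# Defect occurrences (reading, meeting) and total def/ph as reported for E4.
view_based = {"reading": 17, "meeting": 8, "def_per_ph": 1.37}
oorts = {"reading": 47, "meeting": 1, "def_per_ph": 1.57}
baseline_def_per_ph = 0.53


def total_defects(row: dict) -> int:
    """Defect occurrences found in reading plus those found in the meeting."""
    return row["reading"] + row["meeting"]


# Share of view-based defect occurrences first found in the meeting ("1/3 of total").
meeting_share = view_based["meeting"] / total_defects(view_based)

# OORT defect occurrences relative to view-based reading ("2X").
oort_vs_view = total_defects(oorts) / total_defects(view_based)

# Efficiency relative to the partial baseline ("almost 3X").
view_speedup = view_based["def_per_ph"] / baseline_def_per_ph
oort_speedup = oorts["def_per_ph"] / baseline_def_per_ph

print(f"meeting share (view-based): {meeting_share:.2f}")
print(f"OORT vs. view-based defect occurrences: {oort_vs_view:.2f}x")
print(f"efficiency vs. baseline: view-based {view_speedup:.1f}x, OORTs {oort_speedup:.1f}x")
```

Duplicate occurrences have not been cross-checked here, so the 2X figure is an upper bound on the difference in distinct defects.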

Conclusion

• Lessons learned in general for E0-E4:

– Industrial artifacts are enormous:

Requirements of 100s of pages, with ER/DF/Sq diagrams (not only text)

Software artifacts cover entire walls – use increments and their CRs?

– Industrial baselines are thin, so hard to demonstrate a valid effect.

– OORTs seem to do the job, but:

Still many detailed Qx.ij that are not answerable (make transition matrices)

Some redundancies in annotations/questions + industry tailoring.

What to do with “comments” (2-3X the defects)?

Domain knowledge and UML expertise: does not matter?

– On using students:

Ethics: slave labor and intellectual rights – many angry comments back.

Norwegian students not as motivated as American – integrate OORTs better in courses, use paid volunteers? A 20h-exercise is too large?

– Conclusions:

Must do more experimentation in industry.

How to use more realistic artifacts for student experiments.

Slide 50

OORT-1: Sequence Diagram x Class Diagram

Appendix: OORT-1: Sequence Diagram x Class Diagram, Spring 2000 version (E1 and E2)

• Inputs :

– 1. A class diagram, possibly in several packages.

– 2. Sequence diagrams.

• Outputs :

– 1. Annotated versions of above diagrams.

– 2. Discrepancy reports.

• Goal: To verify that the class diagram for the system describes classes and their relationships in such a way that the behaviors specified in the sequence diagrams are correctly captured.

• Instructions:

– Do steps R1.1 and R1.2.

Slide 51

OORT-1: Sequence Diagram x Class Diagram (2)

Step R1.1: From a sequence diagram – identify system objects, system services, and conditions.

• Inputs :

– 1. Sequence diagram (SqD).

• Outputs:

– 1. System objects, classes and actors (underlined with blue on SqD);

– 2. System services (underlined with green on SqD);

– 3. Constraints/conditions on the messages/services (circled in yellow on SqD).

I.e., a marked-up SqD is produced, and will be used in R1.2.

• Instructions: – matches outputs above.

– Q11.a: Underline system objects, classes and actors in blue on SqD.

– Q11.b: Underline system services in green on SqD.

– Q11.c: Circle constraints/conditions on messages/services in yellow on SqD.

Slide 52

OORT-1: Sequence Diagram x Class Diagram (3)

Step R1.2: Check related class diagrams, to see if all system objects are covered.

• Inputs:

– 1. Marked up sequence diagrams (SqDs) – from R1.1.

– 2. Class diagrams (CDs).

• Outputs:

– 1. Discrepancy reports.

• Instructions (as questions – here and after):

– Q12.a: Can every object/class/actor in the SqD be found in the CD? Possible [inconsistency?]

– Q12.b: Can every service/message in the SqD be found in the CD, and with proper parameters? [inconsistency?]

– Q12.c: Are all system services covered by (low-level) messages in the SqD? Possible [omission?]

– Q12.d: Is there an association or other relationship between two classes in case of message exchanges? [omission?]

– Q12.e: Is there a mismatch in behavior arguments or in how constraints / conditions are formulated between the two documents? [inconsistency?]

Slide 53

OORT-1: Sequence Diagram x Class Diagram (4)

• Step R1.2 instructions (cont’d ) :

– Q12.f: Can the constraints from the SqD in R1.1 be fulfilled?

E.g. Number of objects that can receive a message (check cardinality in CD)?

E.g. Range of data values?

E.g. Dependencies between data or objects?

E.g. Timing constraints?

Report any problems. [inconsistency?]

– Q12.g: Overall design comments , based on own experience, domain knowledge, and understanding:

E.g. Do the messages and their parameters make sense for this object?

E.g. Are the stated conditions appropriate?

E.g. Are all necessary attributes defined?

E.g. Do the defined attributes/functions on a class make sense?

E.g. Do the classes/attributes/functions have meaningful names?

E.g. Are class relationships reasonable and of correct type?

(ex. association vs. composition relationships).

Report any problems. [incorrect fact?]

Slide 54
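Questions Q12.a, Q12.b and Q12.d of step R1.2 are mechanical enough to sketch in code. The Python sketch below is an illustration only: the data model (`Message`, `ClassDiagram`) and the function `check_sqd_against_cd` are hypothetical and not part of the OORT material. Parameter checking (the second half of Q12.b) is left out, and the example names borrow the CardReaders vs. Card_Readers naming defect mentioned in the E1 lessons.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Message:
    sender: str    # object/class/actor sending the message in the SqD
    receiver: str  # object/class receiving the message
    service: str   # name of the message/service


@dataclass
class ClassDiagram:
    services: dict[str, set[str]]  # class name -> services defined on it
    associations: set[frozenset[str]] = field(default_factory=set)


def check_sqd_against_cd(messages: list[Message], cd: ClassDiagram) -> list[tuple[str, str, str]]:
    """Return discrepancy reports as (question, suspected defect type, text)."""
    reports: list[tuple[str, str, str]] = []

    def report(question: str, defect_type: str, text: str) -> None:
        entry = (question, defect_type, text)
        if entry not in reports:  # report each discrepancy once
            reports.append(entry)

    for m in messages:
        # Q12.a: every object/class/actor in the SqD should appear in the CD.
        for name in (m.sender, m.receiver):
            if name not in cd.services:
                report("Q12.a", "inconsistency?", f"{name} not found in CD")
        # Q12.b: every service in the SqD should be defined on the receiving class.
        if m.receiver in cd.services and m.service not in cd.services[m.receiver]:
            report("Q12.b", "inconsistency?", f"{m.receiver}.{m.service} not found in CD")
        # Q12.d: classes exchanging messages should be related in the CD.
        both_known = m.sender in cd.services and m.receiver in cd.services
        if both_known and m.sender != m.receiver:
            if frozenset((m.sender, m.receiver)) not in cd.associations:
                report("Q12.d", "omission?", f"no relationship between {m.sender} and {m.receiver}")
    return reports


# Hypothetical Parking Garage fragment; the service names are invented for illustration.
cd = ClassDiagram(
    services={"Gate": {"open"}, "Card_Readers": {"readCard"}, "Garage": {"checkCard"}},
    associations={frozenset(("Gate", "Card_Readers"))},
)
sqd = [
    Message("Gate", "CardReaders", "readCard"),
    Message("CardReaders", "Garage", "checkCard"),
    Message("Garage", "Gate", "close"),
]
reports = check_sqd_against_cd(sqd, cd)
for question, defect_type, text in reports:
    print(f"{question} [{defect_type}] {text}")
```

Questions such as Q12.f and Q12.g rely on the reader's judgment and domain knowledge, so a check like this could at most pre-filter the mechanical discrepancies before reading.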