Hybrid computing

advertisement

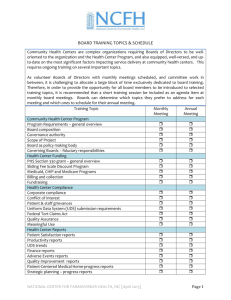

New Technology in High Performance Computing
Bae WonSoung, Ph.D., TAO Computing Inc. (genesis@taocomputing.com)

Contents
1. Introduction: hardware acceleration technology (hybrid computing)
2. CSX600 acceleration technology: parallelism based on a SIMD architecture
3. CSX600 performance
4. Approach to blade systems, etc.
5. Application performance, research & development
6. Case study & prospects
7. Discussion

Introduction: Hybrid computing
Hybrid computing = CPU operation + accelerated operation

The rise of hybrid computing
• Limits of current CPU technology (limits of chip integration)
• Multi-core technology: the relative share of arithmetic units is decreasing
• Development is oriented toward general-purpose (personal) computing
• Specialization: acceleration & hybrid computing

Acceleration & hybrid computing technologies
1. FPGA (Field Programmable Gate Array): Xilinx, Altera (SGI)
2. GPU (Graphics Processing Unit): ATI (AMD) CTM (Close to Metal) project, NVIDIA (CUDA/Tesla)
3. Game processors: Cell, Mercury
4. SIMD processors (accelerated coprocessors): CSX600

FPGAs work best for bit and integer data types
• Excellent for bit-twiddling, such as cryptography
• Fast for integer manipulation, such as pattern-matching genomic algorithms
• Marginal for 32-bit floating point: they have to do over 200 operations at once to compete with current general-purpose chips
• Poor at 64-bit floating point; don't use them as a supplementary FLOPS unit
• "Programming" is really circuit design, though tools are making this easier
• Compare speed against meticulously coded assembler on the node, not casual coding on the node
• FPGAs make the most sense for creating instructions very unlike those provided by the node's instruction set
• FPGAs can cost more than an entire server!
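In hybrid computing only the offloaded part of a program runs on the accelerator while the rest stays on the host CPU, so the net gain is bounded by Amdahl's law. The sketch below is an illustration added here (it is not from the original slides; the function name `hybrid_speedup` is hypothetical): it computes the overall speedup from the fraction of the original run time that is accelerated and the speedup the accelerator achieves on that fraction. The 90% / 10x figures in the usage lines are illustrative assumptions.

```python
def hybrid_speedup(accelerated_fraction: float, kernel_speedup: float) -> float:
    """Overall speedup of a CPU + accelerator run, by Amdahl's law.

    accelerated_fraction: share of the original run time that is offloaded (0..1)
    kernel_speedup: how many times faster the offloaded part runs on the accelerator
    """
    if not 0.0 <= accelerated_fraction <= 1.0:
        raise ValueError("accelerated_fraction must be in [0, 1]")
    if kernel_speedup <= 0.0:
        raise ValueError("kernel_speedup must be positive")
    host_part = 1.0 - accelerated_fraction                 # time left on the host CPU
    offloaded_part = accelerated_fraction / kernel_speedup  # time on the accelerator
    return 1.0 / (host_part + offloaded_part)


if __name__ == "__main__":
    # Illustrative assumption: 90% of the run time is accelerated 10x.
    print(f"{hybrid_speedup(0.9, 10.0):.2f}x")  # prints 5.26x, i.e. 1 / (0.1 + 0.09)
    # As the kernel speedup grows without limit, the bound is 1 / 0.1 = 10x.
    print(f"{hybrid_speedup(0.9, 1e12):.2f}x")  # prints 10.00x
```

Because the host-side share is never accelerated, a net application speedup is generally smaller than the speedup of the accelerated kernel alone.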
Where GPUs can help with HPC applications
• Single-precision calculations where answer quality is less important than raw speed
  – Seismic exploration
  – Some types of quantum chromodynamics
• Graphics-type calculations, obviously, for visualization and result display
• Only 32-bit, and non-IEEE rounding degrades accuracy cumulatively
• Can use over 200 watts and occupy multiple slots
• Often have only a few megabytes of local store (frame-buffer architecture)
• Cheap hardware but very expensive software (the kind you create yourself)
• Game processors don't match the endianness of node CPUs

CSX600 Acceleration Technology: performance via limited power dissipation

X620 board / e620 board
• Dual ClearSpeed CSX600 coprocessors
• 96 GFLOPS "theoretical" peak
• R∞ ≈ 75 GFLOPS for 64-bit matrix multiply (DGEMM) calls
  – The hardware also supports 32-bit floating-point and integer calculations
• 133 MHz PCI-X 2/3-length (8") form factor and PCIe (x8) form factor
• 1 GB of memory on the board
• Drivers available today for Linux (Red Hat and SUSE) and Windows (XP, Server 2003 (32/64-bit), WCCS 2003)
• Low power: 25 W typical
• Multiple boards can be used together for greater performance

[Slides: CSX600 configuration; CSX600 architecture; introduction to PEs (processing elements)]

CSX600 Performance
[Figure: 64-bit function operations per second (billions) for Sqrt, InvSqrt, Exp, Ln, Cos, Sin, SinCos and Inv, comparing a 2.6 GHz dual-core Opteron, a 3 GHz dual-core Woodcrest and a ClearSpeed Advance card]
Typical speedup of ~8x over the fastest x86 processors, because the math functions stay in the local memory on the card.

CSX600 DGEMM Performance
[Figure: DGEMM GFLOPS vs. matrix size (2,000 to 10,000) for the PCIe and PCI-X boards]
Doubling host-to-card bandwidth has only a minor effect because of I/O overlap. A zero-latency connection would not visibly affect either curve!

CSX600 LINPACK Performance

| System specification | Linpack result (GFLOPS) | Elapsed time |
|---|---|---|
| 4 nodes (16 GB) w/o Advance boards | 136.0 | 48.4 minutes |
| 4 nodes (16 GB) w/ 2 x Advance boards each | 364.2 | 18.4 minutes |
| 1 node (16 GB) w/o Advance boards | 34.0 | – |
| 1 node (16 GB) w/ 2 x Advance boards | 90.1 | – |

Note: previously published Linpack results for similar single-node systems were 34.9 GFLOPS for the standard node and 93 GFLOPS for an accelerated node with two ClearSpeed Advance boards. The variations result from small differences between the system configurations and problem sizes used during the benchmark runs.

FFT performance
[Figure: FFT performance]

Approach to blade systems, etc.

Example of a possible 1U server
• 2.66 GHz dual-socket quad-core x86 plus 16 GB memory, 4x InfiniBand
• Two ClearSpeed PCIe cards on risers
• 1 or 2 GB DRAM per card
• Enclosure supplies 25 W and cooling per card
• Total power draw: 450 W
  – 277 peak GFLOPS (64-bit)
  – ~225 DGEMM GFLOPS sustained with MKL + new DGEMM
  – ~170 LINPACK GFLOPS sustained
  – 18 or 20 GB DRAM: 2 or 4 GB on the ClearSpeed cards, 16 GB on the x86 host
[Figure labels: rack width (19 in.); 6.1 in. card]

Server and workstation installations
• Can be installed in standard servers and workstations
  – E.g. the HP DL380 takes 2 boards
• Some 4U servers could take 6-8 boards
• Potential for a PCI backplane chassis to take anything from 12 to 19 boards in 4U
  – A 19-board system could be under 750 W in 4U
  – Could put 9 of these in a 42U rack → 171 boards
  – If each board is 10x a fast x86 core, that is equivalent to 1,710 cores in a single rack, for only 6.8 kW of power

Blade installations
• Can be installed in blades and via expansion units
  – E.g. the IBM PEU2, compatible with the HS21, takes 2 boards
  – HP blade enclosure using a customized expansion unit

Case installation: the HP DL380 takes 2 boards

A cluster of four nodes: 136 GFLOPS
Hardware configuration
• Two Advance accelerator boards in each server
• Intel® Xeon® 5160 (Woodcrest) dual-core processors, 4 nodes (16 CPU cores)
• Cluster performance increases to over 364 GFLOPS
• Consuming 1,940 watts
• Adding only 200 watts

TOP500 (November 1996)
• Center for Computational Science at the University of Tsukuba in Japan
• 2048-CPU Hitachi system: 368.2 GFLOPS

Clustering system in CSX600
Building a clustering system: the ClearSpeed way vs. the conventional way
Increasing capacity to 2.2 TFLOPS
• 32 kW × 3 + α
• 10 × 3 + α sq. ft.
• ~$500,000 × 3 + α = ~$1,500,000 + α
• 2.2 TFLOPS (lower)
• Facility reconstruction expense

Tokyo Tech TOP500 ranking
• Announced on Monday, 9 October 2006: Tokyo Tech accelerated its Linux supercomputer, TSUBAME, from 38 TFLOPS to 47 TFLOPS with 360 ClearSpeed Advance boards
  – An increase in performance of 24% for just a 1% increase in power consumption
  – 10,368 AMD Opteron cores with just 360 ClearSpeed Advance boards
  – #9 in the November 2006 TOP500
  – 1st accelerated system in the TOP500
[Photo: Professor Matsuoka standing beside TSUBAME at Tokyo Tech]

Application performance & R&D

LINPACK speed correlates with many real applications
• Ab initio computational chemistry
• Structural analysis
• Electromagnetic modeling
• Global illumination graphics
• Radar cross-section

Application areas (CAE, CFD)
• Dense matrix-matrix kernels: order $N^3$ operations on order $N^2$ data
  – CFD, CAE by boundary element and Green's function methods
• N-body interactions: order $N^2$ operations on order $N$ data
  – CFD, CAE with high mean free path
• Some sparse matrix operations: order $NB^2$ operations on order $NB$ data, where $B$ is the average matrix band size
  – CFD, CAE with finite element methods
• Time-space marching: order $N^4$ operations on order $N^3$ data
  – CFD, CAE with finite difference methods; data must reside on the board
• Fourier transforms: order $N \log N$ operations on order $N$ data, with other processing to increase data re-use
  – CFD, CAE by spectral or pseudo-spectral methods

Solving N equations takes order $N^3$ work
ClearSpeed accelerates the DGEMM kernel of equation solving, which takes over 90% of the time.
[Figure: N equations, N unknowns, N iterations; volume = $\tfrac{1}{3}N^3$ multiply-adds]

Accelerating sparse solvers: ANSYS & LS-DYNA
• 10 million degrees of freedom (sparse) becomes… 50,000 dense equations
• The accelerator can solve at over 50 GFLOPS
• 3.6x net application acceleration
[Figure: run-time breakdown into non-solver, solver setup and DGEMM phases, DGEMM on the x86 host vs. DGEMM with ClearSpeed (10x)]

DGEMM with ClearSpeed
• Potentially pure plug-and-play
• No added license fee
• Demands ClearSpeed's 64-bit precision and speed
• Enabled by recent DGEMM improvements; still needs the symmetric $A^{T}A$ modification
• Could enable some computational fluid dynamics acceleration (codes based on finite elements)

MATLAB/Mathematica DGEMM performance
Using the new DGEMM: 62.16 GFLOPS in MATLAB 2006a on a Xeon system

Amber acceleration
• AMBER (porting complete): 4-10x acceleration
AMBER is a representative molecular dynamics analysis and simulation program. It is widely used for molecular-level dynamics simulation, and in biology for analyzing and simulating the behavior of protein structures. AMBER is commercial, whereas GROMACS is freeware. Because molecular dynamics analysis and simulation require many large-scale matrix computations, much of the work, including the parallel computation, depends on numerical operations.

| AMBER module | Host | Advance X620 | Speedup |
|---|---|---|---|
| Gen. Born 1 | 83.5 min | 24.6 min | 3.4x |
| Gen. Born 2 | 84.6 min | 23.5 min | 3.6x |
| Gen. Born 6 | 37.9 min | 4.0 min | 9.4x |

Host: 2.8 GHz Pentium 4 EM64T; OS: RHEL4 64-bit; CSXL version 2.50

CSX600 Monte Carlo Improvement I
Monte Carlo methods exploit high local bandwidth
• Monte Carlo methods are ideal for ClearSpeed acceleration:
  – High regularity and locality of the algorithm
  – Very high compute-to-I/O ratio
  – Very good scalability to high degrees of parallelism
  – Needs 64-bit
• Excellent results for parallelization
  – Achieving 10x performance per Advance card vs. highly optimized code on the fastest x86 CPUs available today
  – Maintains the high precision required by the computations: true 64-bit IEEE 754 floating point throughout
  – 25 W per card typical when the card is computing
• ClearSpeed has a Monte Carlo example code, available in source form for evaluation

CSX600 Monte Carlo Improvement II
Monte Carlo scales like the NAS "EP" benchmark

| Configuration | Samples | Time | Speedup |
|---|---|---|---|
| 1 CPU, no acceleration | 400M | 60 s | baseline |
| 1 Advance board | 400M | 2.9 s | 20x |
| 2 Advance boards | 400M | 1.5 s | 40x |
| 4 Advance boards | 400M | 0.8 s | 79x |

CSX600 Monte Carlo Improvement III
Why do Monte Carlo apps need 64-bit?
• Accuracy increases as the square root of the number of trials, so five-decimal accuracy takes 10 billion trials.
• But when you sum many similar values, you start to scrape off all the significant digits.
• 64-bit summation is needed to get a single-precision result!
  Single precision: $1.0000\times10^{8} + 1 = 1.0000\times10^{8}$
  Double precision: $1.0000\times10^{8} + 1 = 1.00000001\times10^{8}$

CSX600 Financial Applications
• Black-Scholes analytic pricing formula
• Binomial method
• Monte Carlo
• Finite difference method
• Broadie-Glasserman random tree method
Overall speedups range from 2x to 70x
• Precision: double
• Customization

Software development environments supported by CSX600: Windows series

CSX600 Summary & discussion
• For acceleration of numerically intensive codes, such as
  – Math functions (RNGs, sin, cos, log, exp, sqrt, etc.)
  – Standard libraries (Level 3 BLAS, LAPACK, FFTs)
• ~70 GFLOPS sustained from a ClearSpeed Advance™ board
• Accelerated functions are callable from C/C++ or Fortran
• ~25 watts per single-slot board
  – Performance measured in GFLOPS per watt rather than MFLOPS per watt
• Multiple ClearSpeed Advance™ boards can be used together for even higher performance and compute density
• The current ClearSpeed board is a mature product, in production systems at TOP500 sites
• ClearSpeed can deliver:
  – > 100 TFLOPS for the June TOP500 and
  – > 1 PFLOPS for the November 2006 TOP500
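The summary's point about measuring performance in GFLOPS per watt can be checked with simple arithmetic. The sketch below is an illustration added here, not ClearSpeed material (the helper name `gflops_per_watt` is hypothetical). It uses figures quoted in this deck: ~70 GFLOPS sustained at ~25 W per board, and the four-node cluster at 136.0 GFLOPS without and 364.2 GFLOPS with accelerators, drawing 1,940 W with the boards, which add 200 W. Treating 1,940 W minus 200 W as the unaccelerated power draw is an assumption.

```python
def gflops_per_watt(gflops: float, watts: float) -> float:
    """Energy efficiency in GFLOPS per watt."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return gflops / watts


# Single Advance board: ~70 GFLOPS sustained at ~25 W typical.
board = gflops_per_watt(70.0, 25.0)

# Four-node cluster: 1,940 W with accelerators; the boards add 200 W,
# so we assume 1,740 W without them (an inferred, not measured, figure).
cluster_plain = gflops_per_watt(136.0, 1940.0 - 200.0)
cluster_accel = gflops_per_watt(364.2, 1940.0)

print(f"board:               {board:.2f} GFLOPS/W")
print(f"cluster, host only:  {cluster_plain:.3f} GFLOPS/W")
print(f"cluster, with cards: {cluster_accel:.3f} GFLOPS/W")
print(f"efficiency gain:     {cluster_accel / cluster_plain:.1f}x")
```

Under these assumptions the script reports about 2.80 GFLOPS/W for one board, and 0.078 vs. 0.188 GFLOPS/W for the cluster without and with cards: roughly a 2.4x efficiency gain for about 11% more power.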