Chapter 3 R Handout - Winona State University

advertisement

Ch 3. – Regression Analysis – Computing in R

Example 1: Patient Satisfaction Survey (pgs. 79 – 83)

First read these data in R using the same approach introduced in the Chapter 2 R

handout.

> head(HospSatis)

Age Severity Satisfaction

1 55

50

68

2 46

24

77

3 30

46

96

4 35

48

80

5 59

58

43

6 61

60

44

We can visualize the pairwise relationships via a scatterplot matrix using the pairs()

command.

> pairs(HospSatis)

Note: this only work if all columns in data frame are numeric!

We can see both age and severity of illness are negatively correlated with the response

satisfaction, and are positively correlated with each other as would be expected.

We first examine the simple linear regression model for satisfaction using patient age as

the sole predictor, i.e. we will assume the following holds for the mean satisfaction as a

function of patient age: 𝐸(𝑆𝑎𝑡𝑖𝑠𝑓𝑎𝑐𝑡𝑖𝑜𝑛|𝐴𝑔𝑒) = 𝛽𝑜 + 𝛽1 𝐴𝑔𝑒 .

1

Thus we will fit the following model to the observed data from the patient survey:

𝑦𝑖 = 𝛽𝑜 + 𝛽1 𝑥𝑖 + 𝑒𝑖 i = 1, … ,25

where,

𝑦𝑖 = 𝑖 𝑡ℎ 𝑝𝑎𝑡𝑖𝑒𝑛𝑡 ′ 𝑠 𝑟𝑒𝑝𝑜𝑟𝑡𝑒𝑑 𝑠𝑎𝑡𝑖𝑠𝑓𝑎𝑐𝑡𝑖𝑜𝑛

𝑥𝑖 = 𝑖 𝑡ℎ 𝑝𝑎𝑡𝑖𝑒𝑛𝑡 ′ 𝑠 𝑎𝑔𝑒

To fit this model in R we use the lm() command. The basic form to use when calling

this function is lmfit = lm(y ~ x1 + x2 + … + xk) where y is the name of the response

variable in the data frame and x1 + x2 + … + xk are the terms you want to include in

your model. Applying this for the simple linear regression of patient satisfaction on

the patient age is as follows.

>

>

>

>

satis.lm = lm(Satisfaction ~ Age)

attach(HospSatis)

satis.lm = lm(Satisfaction ~ Age)

summary(satis.lm)

Residuals:

Min

1Q

-21.270 -6.734

Median

2.164

3Q

5.918

Max

18.193

Coefficients:

Estimate Std. Error t value

(Intercept) 131.0957

6.8322 19.188

Age

-1.2898

0.1292 -9.981

--Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01

Pr(>|t|)

1.19e-15 ***

7.92e-10 ***

‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 9.375 on 23 degrees of freedom

Multiple R-squared: 0.8124,

Adjusted R-squared: 0.8043

F-statistic: 99.63 on 1 and 23 DF, p-value: 7.919e-10

R-square = .8124 or 81.24% of the variation in the patient satisfaction responses can be

explained by the simple linear regression on patients age.

RMSE = 9.375 = 𝜎̂

Testing Parameters and CI’s for Parameters:

Coefficients:

Estimate Std. Error t value

(Intercept) 131.0957

6.8322 19.188

Age

-1.2898

0.1292 -9.981

--Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01

Pr(>|t|)

1.19e-15 ***

7.92e-10 ***

‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Approximate 95% CI’s for 𝛽𝑗 would be given by 𝛽̂𝑗 ± 2𝑆𝐸(𝛽̂𝑗 ) which you could easily do

by hand if needed.

2

Examining residuals (𝑒𝑖 ), Leverage (hii ), and Influence (Di )

To examine the residuals and case diagnostics it is easiest to simply type

plot(modelname). Here our simple linear regression of patient satisfaction on patient age

is called satis.lm. This will produce a sequence of four plots which you view in turn,

hitting Enter to view the next plot in the sequence.

>

plot(satis.lm)

Plot 1

Plot 3

Plot 2

Plot 4

The first plot is a plot of the residuals (𝑒𝑖 ) vs. the fitted values (𝑦̂)

𝑖 which can be used to

look for lack of fit (inappropriate mean function) and constant error variance. The

second plot is a normal quantile plot of the studentized residuals (𝑟𝑖 ) which allows us to

assess the normality of the errors and check for outliers. The third plot is the square

root of the absolute value of the studentized residuals (√|𝑟𝑖 |) vs. the fitted values (𝑦̂)

𝑖

with a smooth curve fit added. If this plot and/or smooth shows an increasing trend

3

then the assumption of constant error variance may be violated. The final plot is the

studentized residuals (𝑟𝑖 ) vs. leverage (ℎ𝑖𝑖 ). In addition to displaying the leverage on

the horizontal axis, contours are drawn on the plot using Cook’s Distance (𝐷𝑖 ). Here

we can see that patient 9 has a relatively large Cook’s Distance (𝐷𝑖 > .50) but it is not

above 1.0, so we will not worry too much about the influential effects of this case.

> plot(Age,Satisfaction,xlab="Patient Age (yrs.)",ylab="Patient

Satisfaction",main="Simple Linear Regression of Satisfaction on Age")

> abline(satis.lm)

Confidence Intervals for the Mean of Y and Prediction of a New Y (visually only!)

> SLRcipi(satis.lm)

4

Durbin-Watson Statistic in R

The dwtest() function conducts the Durbin-Watson test for autocorrelation in the

residuals and is found in the R library lmtest which needs to installed from one of the

CRAN mirror sites. To do this in R select Install Packages(s)… from the Packages pulldown menu as shown below.

You will then be asked to select a CRAN Mirror site to download the package lmtest

from. The choice is irrelevant really but I generally use Iowa State’s US (IA). Once you

install a package from CRAN you need still need to load the new package using that

option in Packages pull-down menu, i.e. Load package…

To use perform the Durbin-Watson test on residuals from a regression model you

simply type:

> dwtest(satis.lm,alternative="two.sided")

Durbin-Watson test

data: satis.lm

DW = 1.3564, p-value = 0.07496

alternative hypothesis: true autocorrelation is not 0

The default is to conduct an upper-tail test which tests for significant positive lag 1

autocorrelation only. Here I have specified the two-sided alternative which tests for

significant lag 1 autocorrelation of either type, positive or negative.

As time is not an issue for the patient satisifaction study, there really is no reason to test

for autocorrelation.

5

Example 2: Soda Concentrate Sales (Durbin-Watson statistic/test example)

After reading these data into R and then fitting the simple linear regression model of

Sales on Expenditures we can conduct the Durbin-Watson test for the residuals from

this fit. Given that the observations are collected over time, the significance of this test

suggests addressing the significant autocorrelation through our modeling is important.

> SodaConc = read.table(file.choose(),header=T,sep=",")

> names(SodaConc)

[1] "Year"

"Expend" "Sales" notice I changed the column names in JMP 1 st !!

> head(SodaConc)

Year Expend Sales

1

1

75 3083

2

2

78 3149

3

3

80 3218

4

4

82 3239

5

5

84 3295

6

6

88 3374

> detach(HospSatis)

> attach(SodaConc)

> plot(Expend,Sales,xlab="Advertising Expenditure",ylab="Soda Concentrate

Sales")

> sales.lm = lm(Sales~Expend)

> abline(sales.lm)

6

> summary(sales.lm)

Call:

lm(formula = Sales ~ Expend)

Residuals:

Min

1Q

-32.330 -10.696

Median

-1.558

3Q

8.053

Max

40.032

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 1608.5078

17.0223

94.49

<2e-16 ***

Expend

20.0910

0.1428 140.71

<2e-16 ***

--Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 20.53 on 18 degrees of freedom

Multiple R-squared: 0.9991,

Adjusted R-squared: 0.999

F-statistic: 1.98e+04 on 1 and 18 DF, p-value: < 2.2e-16

> plot(sales.lm)

7

The plot of the residuals (𝑒𝑖 ) vs. the fitted values (𝑦̂)

𝑖 for this regression suggests

nonlinearity, i.e. we have indication that 𝐸(𝑆𝑎𝑙𝑒𝑠|𝐸𝑥𝑝𝑒𝑛𝑑𝑖𝑡𝑢𝑟𝑒) ≠ 𝛽𝑜 + 𝛽1 𝐸𝑥𝑝𝑒𝑛𝑑𝑖𝑡𝑢𝑟𝑒.

Also non-normality of the residuals is evident.

The sequence of commands below will save the residuals from the simple linear

regression of sales on advertising expenditure and then connect the points over time

using a dashed (lty=2) blue line (col = blue).

> e = resid(sales.lm)

> plot(Year,e,main="Plot of Residuals vs. Time (Soda Concentrate Sales)")

> lines(Year,e,lty=2,col="blue")

𝐻𝑜 : 𝜙 = 0 (𝑛𝑜 𝑙𝑎𝑔 1 𝑎𝑢𝑡𝑜𝑐𝑜𝑟𝑟𝑒𝑙𝑎𝑡𝑖𝑜𝑛)

𝐻𝑎 : 𝜙 > 0 (𝑝𝑜𝑠𝑖𝑡𝑖𝑣𝑒 𝑙𝑎𝑔 1 𝑎𝑢𝑡𝑜𝑐𝑜𝑟𝑟𝑒𝑙𝑎𝑡𝑖𝑜𝑛)

Durbin-Watson p-value = .0061008 which implies

we reject the null hypothesis and conclude there is

significant positive autocorrelation present in the

residual time series from the simple linear

regression of Soda Concentrate Sales on

Advertising Expenditure.

> dwtest(sales.lm)

Durbin-Watson test

data: sales.lm

DW = 1.08, p-value = 0.006108

alternative hypothesis: true autocorrelation is greater than 0

8

Fitting a Multiple Regression Model

As an example we consider the for hospital satisfaction using both age of the patient

and severity of illness as terms in our model, i.e. we fit the following model to our

survey results,

𝑦𝑖 = 𝛽𝑜 + 𝛽1 𝑥𝑖1 + 𝛽2 𝑥𝑖2 + 𝑒𝑖

where,

yi = ith patient ′ s satisfaction

𝑥𝑖1 = 𝑖𝑡ℎ 𝑝𝑎𝑡𝑖𝑒𝑛𝑡 ′ 𝑠 𝑎𝑔𝑒

𝑥𝑖2 = 𝑖 𝑡ℎ 𝑝𝑎𝑡𝑖𝑒𝑛𝑡 ′ 𝑠 𝑠𝑒𝑣𝑒𝑟𝑖𝑡𝑦 𝑜𝑓 𝑖𝑙𝑙𝑛𝑒𝑠𝑠

We fit this model below using R.

> attach(HospSatis)

> names(HospSatis)

[1] "Age"

"Severity"

"Satisfaction"

> satis.lm2 = lm(Satisfaction~Age+Severity)

> summary(satis.lm2)

Call:

lm(formula = Satisfaction ~ Age + Severity)

Residuals:

Min

1Q

-17.2800 -5.0316

Median

0.9276

3Q

4.2911

Max

10.4993

Coefficients:

Estimate Std. Error t value

(Intercept) 143.4720

5.9548 24.093

Age

-1.0311

0.1156 -8.918

Severity

-0.5560

0.1314 -4.231

--Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01

Pr(>|t|)

< 2e-16 ***

9.28e-09 ***

0.000343 ***

‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 7.118 on 22 degrees of freedom

Multiple R-squared: 0.8966,

Adjusted R-squared: 0.8872

F-statistic: 95.38 on 2 and 22 DF, p-value: 1.446e-11

We can see that both predictors, Age & Severity are significant (p < .001).

The residual plots and plots of leverage & Cook’s distance are shown on the following

page and are obtained by plotting the model.

> plot(satis.lm2)

9

No model inadequacies are evident from these plots.

The authors consider adding more terms based on the predictors Age and Severity in

Example 3.3 on page 91 of the text. The terms they are add are: 𝐴𝑔𝑒 ×

𝑆𝑒𝑣𝑒𝑟𝑖𝑡𝑦, 𝐴𝑔𝑒 2 , & 𝑆𝑒𝑣𝑒𝑟𝑖𝑡𝑦 2 , giving us the model:

2

2

𝑦𝑖 = 𝛽𝑜 + 𝛽1 𝑥𝑖1 + 𝛽2 𝑥𝑖2 + 𝛽12 𝑥𝑖1 𝑥𝑖2 + 𝛽11 𝑥𝑖1

+ 𝛽22 𝑥𝑖2

+ 𝑒𝑖

To fit this model in R we first need to form the squared terms for Age and Severity.

> Age2 = Age^2

> Severity2 = Severity^2

> satis.lm3 = lm(Satisfaction~Age+Severity+Age*Severity+Age2+Severity2)

10

> summary(satis.lm3)

Call:

lm(formula = Satisfaction ~ Age + Severity + Age * Severity +

Age2 + Severity2)

Residuals:

Min

1Q

-16.915 -3.642

Median

2.015

3Q

4.000

Max

9.677

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 127.527542 27.912923

4.569 0.00021 ***

Age

-0.995233

0.702072 -1.418 0.17251

Severity

0.144126

0.922666

0.156 0.87752

Age2

-0.002830

0.008588 -0.330 0.74534

Severity2

-0.011368

0.013533 -0.840 0.41134

Age:Severity

0.006457

0.016546

0.390 0.70071

--Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 7.503 on 19 degrees of freedom

Multiple R-squared: 0.9008,

Adjusted R-squared: 0.8747

F-statistic: 34.5 on 5 and 19 DF, p-value: 6.76e-09

None of the additional terms are significant. We can begin dropping these terms one at

a time sequentially starting with 𝐴𝑔𝑒 2 as it has the largest p-value (p = .7453). Another

approach is consider dropping all of these terms simultaneously using the “General Ftest” as shown below.

𝑁𝐻: 𝐸(𝑆𝑎𝑡𝑖𝑠𝑓𝑎𝑐𝑡𝑖𝑜𝑛|𝐴𝑔𝑒, 𝑆𝑒𝑣𝑒𝑟𝑖𝑡𝑦) = 𝛽𝑜 + 𝛽1 𝐴𝑔𝑒 + 𝛽2 𝑆𝑒𝑣𝑒𝑟𝑖𝑡𝑦

𝐴𝐻: 𝐸(𝑆𝑎𝑡𝑖𝑠𝑓𝑎𝑐𝑡𝑖𝑜𝑛|𝐴𝑔𝑒, 𝑆𝑒𝑣𝑒𝑟𝑖𝑡𝑦)

= 𝛽𝑜 + 𝛽1 𝐴𝑔𝑒 + 𝛽2 𝑆𝑒𝑣𝑒𝑟𝑖𝑡𝑦 + 𝛽12 𝐴𝑔𝑒 ∗ 𝑆𝑒𝑣𝑒𝑟𝑖𝑡𝑦 + 𝛽11 𝐴𝑔𝑒 2

+ 𝛽22 𝑆𝑒𝑣𝑒𝑟𝑖𝑡𝑦 2

The General F-test for comparing nested models.

𝐹=

(𝑅𝑆𝑆𝑁𝐻 − 𝑅𝑆𝑆𝐴𝐻 )/𝑟

~ 𝐹𝑟 ,𝑛−𝑝

2

𝜎̂𝐴𝐻

where,

𝑟 = 𝑑𝑖𝑓𝑓𝑒𝑟𝑒𝑛𝑐𝑒 𝑖𝑛 𝑡ℎ𝑒 𝑛𝑢𝑚𝑏𝑒𝑟 𝑜𝑓 𝑝𝑎𝑟𝑎𝑚𝑒𝑡𝑒𝑟𝑠 𝑏𝑒𝑡𝑤𝑒𝑒𝑛 𝑡ℎ𝑒 𝑁𝐻 𝑎𝑛𝑑 𝐴𝐻 𝑚𝑜𝑑𝑒𝑙𝑠

2

𝜎̂𝐴𝐻

= 𝑀𝑆𝐸𝐴𝐻

The relevant output for both models is shown below.

11

Compare these rival models in R we can use the anova() command to compare these

models using the F-test described above.

> anova(satis.lm2,satis.lm3)

Analysis of Variance Table

Model 1:

Model 2:

Res.Df

1

22

2

19

Satisfaction ~ Age + Severity

Satisfaction ~ Age + Severity + Age * Severity + Age2 + Severity2

RSS Df Sum of Sq

F Pr(>F)

1114.5

1069.5 3

45.044 0.2667 0.8485

With a p-value = .8485 we clearly fail to reject the null hypothesis model and conclude

the more complex model is unnecessary.

Variable Selection Methods - Stepwise Regression (see section 3.6 of text)

Backward Elimination – include potential term of interest initially and then remove

variables one-at-time until no more terms can be removed.

Forward Selection – add the best predictor first, then add predictors successively until

none of the potential predictors are significant.

In R, the easiest method to employ is backward elimination and thus that is the only

one we will consider. The function step() in R will perform backward elimination on

“full” model.

> detach(HospSatis)

> HospFull = read.table(file.choose(),header=T,sep=",")

> names(HospFull)

[1] "Age"

"Severity"

"Surgical.Medical" "Anxiety"

[5] "Satisfaction"

> attach(HospFull)

> satis.full = lm(Satisfaction~Age+Severity+Surgical.Medical+Anxiety)

> summary(satis.full)

Call:

lm(formula = Satisfaction ~ Age + Severity + Surgical.Medical +

Anxiety)

Residuals:

Min

1Q

Median

3Q

Max

-18.1322 -3.5662

0.5655

4.7215 12.1448

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept)

143.8672

6.0437 23.804 3.80e-16 ***

Age

-1.1172

0.1383 -8.075 1.01e-07 ***

Severity

-0.5862

0.1356 -4.324 0.000329 ***

Surgical.Medical

0.4149

3.0078

0.138 0.891672

Anxiety

1.3064

1.0841

1.205 0.242246

12

Residual standard error: 7.207 on 20 degrees of freedom

Multiple R-squared: 0.9036,

Adjusted R-squared: 0.8843

F-statistic: 46.87 on 4 and 20 DF, p-value: 6.951e-10

Perform backward elimination on the full model and save the final model chosen to a

model named satis.step.

> satis.step = step(satis.full)

Start: AIC=103.18

Satisfaction ~ Age + Severity + Surgical.Medical + Anxiety

- Surgical.Medical

- Anxiety

<none>

- Severity

- Age

Df Sum of Sq

RSS

1

1.0 1039.9

1

75.4 1114.4

1038.9

1

971.5 2010.4

1

3387.7 4426.6

AIC

101.20

102.93

103.18

117.68

137.41

Step: AIC=101.2

Satisfaction ~ Age + Severity + Anxiety

- Anxiety

<none>

- Severity

- Age

Df Sum of Sq

RSS

1

74.6 1114.5

1039.9

1

971.8 2011.8

1

3492.7 4532.6

AIC

100.93

101.20

115.70

136.00

Step: AIC=100.93

Satisfaction ~ Age + Severity

Df Sum of Sq

<none>

- Severity

- Age

1

1

RSS

AIC

1114.5 100.93

907.0 2021.6 113.82

4029.4 5143.9 137.17

> summary(satis.step)

Call:

lm(formula = Satisfaction ~ Age + Severity)

Residuals:

Min

1Q

-17.2800 -5.0316

Median

0.9276

3Q

4.2911

Max

10.4993

Coefficients:

Estimate Std. Error t value

(Intercept) 143.4720

5.9548 24.093

Age

-1.0311

0.1156 -8.918

Severity

-0.5560

0.1314 -4.231

--Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01

Pr(>|t|)

< 2e-16 ***

9.28e-09 ***

0.000343 ***

‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 7.118 on 22 degrees of freedom

Multiple R-squared: 0.8966,

Adjusted R-squared: 0.8872

F-statistic: 95.38 on 2 and 22 DF, p-value: 1.446e-11

Here we can see the based model with Age and Severity only as predictors is chosen via

backward elimination using the AIC criterion.

13

Weighted Least Squares (WLS) – Example 3.9 (pg . 117)

In weighted least squares we give more weight to some observations in the data than

others. The general concept is that we wish to give more weight to observations where

the variation in the response is small and less weight to observations where the

variability in the response is large. In the scatterplot below we can see that the variation

in strength increases with weeks and strength. That is larger values of strength have

more variation than smaller values.

> detach(HospFull)

> StrWeeks = read.table(file.choose(),header=T,sep=",")

> names(StrWeeks)

[1] "Weeks"

"Strength"

> attach(StrWeeks)

> head(StrWeeks)

Weeks Strength

1

20

34

2

21

35

3

23

33

4

24

36

5

25

35

6

28

34

> plot(Weeks,Strength,main="Plot of Concrete Strength vs. Weeks")

> str.lm = lm(Strength~Weeks)

> abline(str.lm)

In WLS the parameter estimates (𝛽̂𝑗 ) are found by minimizing the weight least squares

criteria:

𝑛

𝑆𝑆𝐸 = ∑ 𝑤𝑖 (𝑦𝑖 − (𝛽̂𝑜 + 𝛽̂1 𝑥𝑖1 + 𝛽̂2 𝑥𝑖2 + ⋯ + 𝛽̂𝑘 𝑥𝑖𝑘 ))

2

𝑖=1

The problem with weighted least squares is how do we determine appropriate

weights? A procedure for doing this in the case of nonconstant variance as we have in

this situation is outlined on pg. 115 of the text. We will implement the procedure

outlined in the text in R.

14

> summary(str.lm)

Call:

lm(formula = Strength ~ Weeks)

Residuals:

Min

1Q

-14.3639 -4.4449

Median

-0.0861

3Q

5.8671

Max

11.8842

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 25.9360

5.1116

5.074 2.76e-05 ***

Weeks

0.3759

0.1221

3.078 0.00486 **

--Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 7.814 on 26 degrees of freedom

Multiple R-squared: 0.2671,

Adjusted R-squared: 0.2389

F-statistic: 9.476 on 1 and 26 DF, p-value: 0.004863

First we need to save the residuals from an OLS (equal weight for all observations)

regression model fit to these data. Then we either square these residuals or take the

absolute value of these residuals and fit a regression model using either residual

transformation as the response. In general, I believe the absolute value of the residuals

from OLS fit works better.

>

>

>

>

>

e = resid(str.lm) save residuals from OLS fit

abse = abs(e) take the absolute value of the residuals

abse.lm = lm(abse~Weeks) perform the regression of |e| on Weeks (X)

plot(Weeks,abse,ylab="|Residuals|",main="Absolute OLS Residuals vs. Weeks")

abline(abse.lm)

Below is a scatterplot of the absolute residuals vs. week (X) with an OLS simple linear

regression fit added.

15

We then save the fitted values from this regression and form weights equal to the

reciprocal of the square of the fitted values, i.e.

̂𝑖 |2

𝑤𝑖 = 1/|𝑒

> ehat = fitted(abse.lm)

> wi = 1/(ehat^2)

Finally we fit the weighted least squares (WLS) line using these weights.

> str.wls = lm(Strength~Weeks,weights=wi)

> summary(str.wls)

Call:

lm(formula = Strength ~ Weeks, weights = wi)

Residuals:

Min

1Q Median

-1.9695 -1.0450 -0.1231

3Q

1.1507

Max

1.5785

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 27.54467

1.38051 19.953 < 2e-16 ***

Weeks

0.32383

0.06769

4.784 5.94e-05 ***

--Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 1.119 on 26 degrees of freedom

Multiple R-squared: 0.4682,

Adjusted R-squared: 0.4477

F-statistic: 22.89 on 1 and 26 DF, p-value: 5.942e-05

The resulting WLS fit is shown on the below.

> plot(Weeks,Strength,main="WLS Fit to Concrete Strength Data")

> abline(str.wls)

16

Contrast these parameter estimates with those obtained from the OLS fit.

OLS

> str.lm$coefficients

(Intercept)

Weeks

25.9359842

0.3759291

WLS

> str.wls$coefficients

(Intercept)

Weeks

27.5446681

0.3238344

If the parameter estimates change markedly after the weighting process described

above, we typically repeat the process using the residuals from first WLS fit to construct

new weights and repeat until the parameter estimates do not change much from one

weighted fit to the next. In this case the parameter estimates do not differ much, we do

not need to consider repeating. The process of repeating WLS fits is called iteratively

reweighted least squares (IRLS).

While we will not be regularly fitting WLS models, we will see later in the course many

methods for modeling time series involve giving more weight to some observations and

less weight to others. In time series modeling we typically give more weight to

observations close in time to the value we are trying to predict or forecast. This is

precisely idea of Discounted Least Squares covered in section 3.7.3 (pgs. 119-133). We

will not cover Discounted Least Squares because neither JMP nor R have built-in

methods for performing this type of analysis and the mathematics required to properly

discuss it are beyond the prerequisites for this course. However, in Chapter 4 we will

examine exponential smoothing which does use weights in the modeling process. In

exponential smoothing all observations up to time t are used to make a prediction or

forecast and we give successively smaller weights to observations as we look further

back in time.

17

Detecting and Modeling with Autocorrelation

Durbin-Watson Test (see above as well)

Cochrane-Olcutt Method

Use of lagged variables in the model

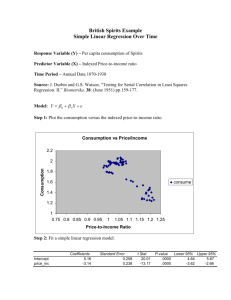

Example 3.14 – Toothpaste Market Share and Price (pgs. 143-145)

In this example the toothpaste market share is modeled as function of price. The data is

collected over time so there is the distinct possibility of autocorrelation. We first use R

to fit the simple linear regression of market share (Y) on price (X).

> detach(StrWeeks)

> Toothpaste = read.table(file.choose(),header=T,sep=",")

> names(Toothpaste)

[1] "MarketShare" "Price"

> head(Toothpaste)

MarketShare Price

1

3.63 0.97

2

4.20 0.95

3

3.33 0.99

4

4.54 0.91

5

2.89 0.98

6

4.87 0.90

> attach(Toothpaste)

> tooth.lm = lm(MarketShare~Price)

> summary(tooth.lm)

Call:

lm(formula = MarketShare ~ Price)

Residuals:

Min

1Q

Median

-0.73068 -0.29764 -0.00966

3Q

0.30224

Max

0.81193

Coefficients:

Estimate Std. Error t value

(Intercept)

26.910

1.110

24.25

Price

-24.290

1.298 -18.72

--Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01

Pr(>|t|)

3.39e-15 ***

3.02e-13 ***

‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 0.4287 on 18 degrees of freedom

Multiple R-squared: 0.9511,

Adjusted R-squared: 0.9484

F-statistic: 350.3 on 1 and 18 DF, p-value: 3.019e-13

> plot(Price,MarketShare,xlab="Price of Toothpaste",ylab="Market Share")

> abline(tooth.lm)

18

> dwtest(tooth.lm)

Durbin-Watson test

data: tooth.lm

DW = 1.1358, p-value = 0.009813

alternative hypothesis: true autocorrelation is greater than 0

The Durban-Watson statistic d = 1.1358 which is statistically significant (p = .0098). Thus

we conclude a significant autocorrelation (𝑟1 = .409) exists in the residuals (𝑒𝑡 ) and we

conclude the errors are not independent. This is a violation of the assumptions required

for OLS regression. The ACF plot below shows the autocorrelation present in the

residuals from the OLS fit.

> resid.acf = acf(resid(tooth.lm)) compute ACF for the residuals from OLS

> resid.acf

Autocorrelations of series ‘resid(tooth.lm)’, by lag

0

1

2

3

4

5

6

7

8

9

10

1.000 0.409 0.135 0.006 0.073 -0.208 -0.132 -0.040 -0.223 -0.354 -0.326

11

12

13

-0.144 -0.153 0.072

> plot(resid.acf)

19

Cochrane-Olcutt Method

The Cochrane-Olcutt method for dealing with this autocorrelation transforms both the

response series for market share and the predictor series for price using the lag 1

autocorrelation in the residuals. We form the series 𝑥𝑡′ and 𝑦𝑡′ using the following

formulae:

𝑥𝑡′ = 𝑥𝑡 − 𝜙̂𝑥𝑡−1

𝑦𝑡′ = 𝑦𝑡 − 𝜙̂𝑦𝑡−1

where 𝜙̂ = .409, the lag-1 autocorrelation in the residuals from the OLS fit. These

transformed version of the response and predictor incorporate information from the

previous time period in the modeling process, thus addressing the autocorrelation.

Below is the regression of 𝑦𝑡′ on 𝑥𝑡′ fit using a function I wrote called cochrane.orcutt()

which takes a simple linear regression model fit to these data as an argument.

> tooth.com = cochrane.orcutt(tooth.lm)

> summary(tooth.com)

Call:

lm(formula = ytprime ~ xtprime)

Residuals:

Min

1Q

Median

-0.56115 -0.24889 -0.05455

3Q

0.25247

Max

0.83009

Coefficients:

Estimate Std. Error t value

(Intercept) 15.8043

0.9502

16.63

xtprime

-24.1310

1.9093 -12.64

--Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01

Pr(>|t|)

5.95e-12 ***

4.53e-10 ***

‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 0.3956 on 17 degrees of freedom

Multiple R-squared: 0.9038,

Adjusted R-squared: 0.8981

F-statistic: 159.7 on 1 and 17 DF, p-value: 4.535e-10

> dwtest(tooth.com)

Durbin-Watson test

data: tooth.com

DW = 1.9326, p-value = 0.3896

alternative hypothesis: true autocorrelation is greater than 0

We can see that the Cochrane-Orcutt Method has eliminated the autocorrelation in the

residuals (Durbin-Watson p-value = .3896).

20

> acf(resid(tooth.com))

We can see from the ACF plot of the residuals there is no significant autocorrelation in

the residuals from the model fit using the Cochrane-Olcutt method.

Another approach to dealing with autocorrelation in a OLS regression is to include lags

of the response series and the predictors series in the regression model. Equations 3.119

and 3.120 in the text give two models for doing this. The first incorporates first order

lags of the response series and the predictor series as terms in the model and second

uses only the first order lag of response series.

𝑦𝑡 = 𝜙𝑦𝑡 + 𝛽0 + 𝛽1 𝑥𝑡 + 𝛽2 𝑥𝑡−1 + 𝑎𝑡 where 𝑎𝑡 ~𝑁(0, 𝜎 2 )

(3.119)

𝑦𝑡 = 𝜙 𝑦𝑡−1 + 𝛽𝑜 + 𝛽1 𝑥𝑡 + 𝑎𝑡 where 𝑎𝑡 ~𝑁(0, 𝜎 2 ) (3.120)

We will fit both of these models to toothpaste market sales data. In R the lag()

requires that the arguments be viewed as time series. Thus in order to fit the models

above we need form a new data frame that converts both market share and price to time

series objects & in addition creates the lag-1 series for both of these times series. The

code to do this is shown below and is rather clunky!

> Toothpaste.lag = ts.intersect(MarketShare=ts(MarketShare),Price=ts(Price),

Price1=lag(ts(Price),-1),MarketShare1=lag(ts(MarketShare),-1),dframe=TRUE)

MarketShare1 and Price1 are the series 𝑦𝑡−1 and 𝑥𝑡−1 respectively.

We MUST be sure to detach the data frame Toothpaste and attach the new data frame

Toothpaste.lag.

21

>

>

>

>

detach(Toothpaste)

attach(Toothpaste.lag)

tooth.laglm = lm(MarketShare~MarketShare1+Price+Price1) Fit model (3.119)

summary(tooth.laglm)

Call:

lm(formula = MarketShare ~ MarketShare1 + Price + Price1)

Residuals:

Min

1Q

Median

-0.59958 -0.23973 -0.02918

3Q

0.26351

Max

0.66532

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept)

16.0675

6.0904

2.638

0.0186 *

MarketShare1

0.4266

0.2237

1.907

0.0759 .

Price

-22.2532

2.4870 -8.948 2.11e-07 ***

Price1

7.5941

5.8697

1.294

0.2153

--Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 0.402 on 15 degrees of freedom

Multiple R-squared: 0.96,

Adjusted R-squared: 0.952

F-statistic: 120.1 on 3 and 15 DF, p-value: 1.037e-10

Same model fit in JMP – notice the coefficients, standard errors, and p-values match!

> dwtest(tooth.laglm)

Durbin-Watson test

data: tooth.laglm

DW = 2.0436, p-value = 0.4282

alternative hypothesis: true autocorrelation is greater than 0

> acf(resid(tooth.laglm))

22

First notice the Durbin-Watson test and ACF of the residuals do not suggest significant

autocorrelation.

As the lag term (𝑥𝑡−1) is not significant we can drop it from the model and fit the model

in equation 3.120. This model fits the data well and does not suffer from

autocorrelation.

> tooth.laglm2 = lm(MarketShare~MarketShare1+Price) Fit model 3.120

> summary(tooth.laglm2)

Call:

lm(formula = MarketShare ~ MarketShare1 + Price)

Residuals:

Min

1Q

-0.78019 -0.31005

Median

0.05071

3Q

0.24264

Max

0.59342

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept)

23.27450

2.51374

9.259 7.93e-08 ***

MarketShare1

0.16190

0.09236

1.753

0.0987 .

Price

-21.17843

2.39298 -8.850 1.46e-07 ***

--Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 0.4104 on 16 degrees of freedom

Multiple R-squared: 0.9556,

Adjusted R-squared: 0.95

F-statistic: 172.1 on 2 and 16 DF, p-value: 1.517e-11

> dwtest(tooth.laglm2)

Durbin-Watson test

data: tooth.laglm2

DW = 1.6132, p-value = 0.1318

alternative hypothesis: true autocorrelation is greater than 0

Again the results of this fit agree with the one from JMP. No autocorrelation is detected

when using only 𝑦𝑡−1 in the model.

23

Final Examples: Beverage Sales

We return again to the beverage sales example and consider developing a few

regression models that can be used predict or forecast future sales. One model will

make use of trigonometric functions of time to model the seasonal behavior in this time

series (Model 1), the other will use month as a factor term (Model 2). Autocorrelation

issues will be explored for both models. The mean functions for these two modeling

approaches are outline below.

Model 1:

𝐸(𝑆𝑎𝑙𝑒𝑠|𝑇𝑖𝑚𝑒) = 𝐸(𝑦|𝑡) = 𝛽𝑜 + 𝛽1 𝑡 + 𝛽2 𝑡 2 + 𝛽3 𝑡 3 + 𝛽4 sin (

2𝜋𝑡

2𝜋𝑡

) + 𝛽5 cos (

)

12

12

Additional terms may be added to the model for example other trigonometric

terms at other periodicities or lag terms based on the series 𝑦𝑡 .

Model 2:

𝐸(𝑆𝑎𝑙𝑒𝑠|𝑇𝑖𝑚𝑒) = 𝛽𝑜 + 𝛽1 𝑡 + 𝛽2 𝑡 2 + 𝛽3 𝑡 3 + 𝛼1 𝐷1 + ⋯ + 𝛼11 𝐷11

where,

1 𝑖𝑓 𝑀𝑜𝑛𝑡ℎ = 𝐽𝑎𝑛𝑢𝑎𝑟𝑦

𝐷1 = {

0 𝑜𝑡ℎ𝑒𝑟𝑤𝑖𝑠𝑒

1 𝑖𝑓 𝑀𝑜𝑛𝑡ℎ = 𝑁𝑜𝑣𝑒𝑚𝑏𝑒𝑟

… 𝐷11 = {

0 𝑜𝑡ℎ𝑒𝑟𝑤𝑖𝑠𝑒

Additional lag terms of the time series 𝑦𝑡 may be added to deal with

potential autocorrelation issues.

Fitting Model 1: In order to fit model 1 we first need to form the trigonometric terms in

R. There is a built-in constant for 𝜋 in R called pi. In code below we create trignometric

terms of degrees 6 and 12 for inclusion in the model.

> attach(BevSales)

> names(BevSales)

[1] "Time" "Month" "Year" "Date"

> head(BevSales)

Time Month Year

Date Sales

1

1

1 1992 01/01/1992 3519

2

2

2 1992 02/01/1992 3803

3

3

3 1992 03/01/1992 4332

4

4

4 1992 04/01/1992 4251

5

5

5 1992 05/01/1992 4661

6

6

6 1992 06/01/1992 4811

"Sales"

24

>

>

>

>

sin12 = sin(2*pi*Time/12)

cos12 = cos(2*pi*Time/12)

sin6 = sin(2*pi*Time/6)

cos6 = cos(2*pi*Time/6)

To specify a cubic polynomial for the long term time trend we can use the function

poly(Time,k) where k will be 3 for a cubic polynomial.

> model1 = lm(Sales~poly(Time,3)+sin12+cos12+sin6+cos6)

> summary(model1)

Call:

lm(formula = Sales ~ poly(Time, 3) + sin12 + cos12 + sin6 + cos6)

Residuals:

Min

1Q

-465.46 -107.50

Median

11.08

3Q

120.73

Max

522.17

Coefficients:

(Intercept)

poly(Time, 3)1

poly(Time, 3)2

poly(Time, 3)3

sin12

cos12

sin6

cos6

--Signif. codes:

Estimate Std. Error t value Pr(>|t|)

5238.66

14.34 365.201 < 2e-16

8771.94

192.79 45.499 < 2e-16

639.84

192.45

3.325 0.00108

1294.88

193.24

6.701 2.84e-10

-179.53

20.37 -8.812 1.31e-15

-465.55

20.29 -22.942 < 2e-16

-111.79

20.31 -5.505 1.32e-07

31.84

20.29

1.569 0.11847

***

***

**

***

***

***

***

0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 192.5 on 172 degrees of freedom

Multiple R-squared: 0.9422,

Adjusted R-squared: 0.9398

F-statistic: 400.4 on 7 and 172 DF, p-value: < 2.2e-16

> plot(Time,Sales,type="p",xlab="Time",ylab="Beverage Sales",main="Plot of

Time Series with Fitted Values from Model 1")

> lines(Time,fitted(model1),lwd=2,col="blue")

25

> plot(model1)

There is no evidence of a violation of regression assumptions from these plots. We now

consider the Durbin-Watson test.

> dwtest(model1)

Durbin-Watson test

data: model1

DW = 1.8779, p-value = 0.1036

alternative hypothesis: true autocorrelation is greater than 0

Despite the fact that the Durbin-Watson test statistic is not significant, examination of

an ACF for the residuals from this model suggests there is still some autocorrelation

structure in the residuals that should be accounted for, particularly at lags 3, 6, 9, and

12.

26

Fitting Model 2: Fitting this model requires first building terms for the cubic

polynomial in time and then specifying that Month should be treated as

categorical/nominal, i.e. as a factor. This is done in R by using the factor() command.

> Month.fac = factor(Month)

> model1 = lm(Sales~poly(Time,3)+Month.fac)

> summary(model1)

Call:

lm(formula = Sales ~ poly(Time, 3) + Month.fac)

Residuals:

Min

1Q

-385.93 -112.07

Median

-1.22

3Q

100.67

Max

373.30

Coefficients:

(Intercept)

poly(Time, 3)1

poly(Time, 3)2

poly(Time, 3)3

Month.fac2

Month.fac3

Month.fac4

Month.fac5

Month.fac6

Month.fac7

Month.fac8

Month.fac9

Month.fac10

Month.fac11

Month.fac12

--Signif. codes:

Estimate Std. Error t value Pr(>|t|)

4530.70

40.05 113.130 < 2e-16 ***

8749.17

155.21 56.370 < 2e-16 ***

639.89

154.87

4.132 5.71e-05 ***

1260.11

155.66

8.095 1.19e-13 ***

203.52

56.55

3.599 0.000422 ***

644.49

56.55 11.396 < 2e-16 ***

701.17

56.56 12.396 < 2e-16 ***

1190.22

56.57 21.039 < 2e-16 ***

1297.04

56.59 22.922 < 2e-16 ***

930.63

56.60 16.442 < 2e-16 ***

1149.57

56.62 20.303 < 2e-16 ***

820.60

56.64 14.487 < 2e-16 ***

647.84

56.67 11.432 < 2e-16 ***

520.30

56.70

9.177 < 2e-16 ***

390.22

56.73

6.879 1.19e-10 ***

0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 154.9 on 165 degrees of freedom

Multiple R-squared: 0.9641,

Adjusted R-squared: 0.961

F-statistic: 316.4 on 14 and 165 DF, p-value: < 2.2e-16

The absense of a coefficient for the first month, we can see that January is used as the

reference month where JMP used December.

> plot(Time,Sales,xlab="Time",ylab="Beverage Sales",main="Plot of Beverage

Sales and Fitted Values from Model 2")

> lines(Time,fitted(model2),lwd=2,col="red")

27

Examining the residuals and testing for first order autocorrelation using the DurbinWatson test we find.

> dwtest(model2)

Durbin-Watson test

data: model2

DW = 1.0517, p-value = 1.082e-10

alternative hypothesis: true autocorrelation is greater than 0

We could add the term 𝑦𝑡−1 to the model in attempts to deal with the autocorrelation.

This is actually very difficult to do in R. You need basically to consider the regression

of the sales for time periods t = 2 to t = 180 on the sales from t = 1 to t = 179. All other

terms in the model need to be considered for times t = 2 and t = 180 only.

>

>

>

>

Sales.yt = Sales[2:180] 𝑦𝑡

Sales.yt1 = Sales[1:179] 𝑦𝑡−1

Time.t = Time[2:180] 𝑐𝑜𝑛𝑠𝑖𝑑𝑒𝑟 𝑡𝑖𝑚𝑒 𝑝𝑒𝑟𝑖𝑜𝑑 𝑡 = 2 𝑡𝑜 𝑡 = 180 𝑡𝑜 𝑎𝑙𝑖𝑔𝑛 𝑤𝑖𝑡ℎ 𝑡ℎ𝑒 𝑟𝑒𝑠𝑝𝑜𝑛𝑠𝑒

Month.t = Month.fac[2:180]

> model3 = lm(Sales.yt~Sales.yt1+poly(Time.t,3)+Month.t)

28

> summary(model3)

Call:

lm(formula = Sales.yt ~ Sales.yt1 + poly(Time.t, 3) + Month.t)

Residuals:

Min

1Q

-364.42 -84.34

Median

-8.72

3Q

88.07

Max

381.53

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept)

2.225e+03 3.390e+02

6.562 6.74e-10 ***

Sales.yt1

4.667e-01 6.854e-02

6.809 1.79e-10 ***

poly(Time.t, 3)1 4.659e+03 6.077e+02

7.666 1.51e-12 ***

poly(Time.t, 3)2 3.460e+02 1.425e+02

2.429

0.0162 *

poly(Time.t, 3)3 7.035e+02 1.614e+02

4.358 2.32e-05 ***

Month.t2

4.069e+02 5.747e+01

7.080 4.07e-11 ***

Month.t3

7.527e+02 5.235e+01 14.379 < 2e-16 ***

Month.t4

6.034e+02 5.347e+01 11.286 < 2e-16 ***

Month.t5

1.066e+03 5.482e+01 19.442 < 2e-16 ***

Month.t6

9.443e+02 7.439e+01 12.694 < 2e-16 ***

Month.t7

5.279e+02 7.991e+01

6.607 5.31e-10 ***

Month.t8

9.177e+02 6.254e+01 14.674 < 2e-16 ***

Month.t9

4.865e+02 7.237e+01

6.722 2.87e-10 ***

Month.t10

4.671e+02 5.844e+01

7.992 2.30e-13 ***

Month.t11

4.200e+02 5.354e+01

7.845 5.40e-13 ***

Month.t12

3.494e+02 5.140e+01

6.796 1.92e-10 ***

--Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 136.2 on 163 degrees of freedom

Multiple R-squared: 0.9718,

Adjusted R-squared: 0.9692

F-statistic: 374.2 on 15 and 163 DF, p-value: < 2.2e-16

> dwtest(model3)

Durbin-Watson test

data: model3

DW = 2.2462, p-value = 0.9193

alternative hypothesis: true autocorrelation is greater than 0

The inclusion of the lag-1 response term has addressed the autocorrelation in the

residuals, which is confirmed by the Durbin-Watson test (p = .9193) and the ACF plot

below which shows only lag-3 autocorrelation as being significant.

> acf(resid(model3))

29

> plot(Time,Sales,xlab="Time",ylab="Beverage Sales",main="Plot of Beverage

Sales and Fitted Values from Model 3")

> lines(Time.t,fitted(model3),lwd=2,col="black")

30