

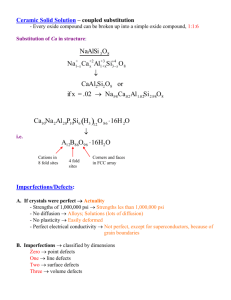

MMSA.KPI.UA
PhD Maryna Didkovska

SOFTWARE TESTING FUNDAMENTALS
Introduction into Software Testing. Definitions. Software Testing Methods, Levels and Types

INTRODUCTION INTO SOFTWARE TESTING
Software Testing. What Does It Mean?

PREAMBLE
Application testing started to be taken seriously in the U.S. in the 1990s. Those were frantic years for the high-tech industry: the mass adoption of the Internet and the wide use of rapid development technologies led to the creation of huge software systems, and their complexity grew day after day. Consequently, the number of potential problems grew as well. It is difficult to win a place in the market by selling poor, incomprehensible programs full of defects, so nowadays most software companies pay more and more attention to testing.

SOFTWARE TESTING DEFINITION
Software Testing is a process of analysing software and detecting defects. Defect detection aims to find as many defects as possible so that they can be fixed.

The IEEE Standard Glossary of Software Engineering Terminology defines testing as «The process of exercising software to verify that it satisfies specified requirements and to detect errors».

Why is testing a process? Because testing is a planned and well-ordered activity. This matters because, when time is short, a well-planned and systematic approach detects defects faster than a poorly planned testing activity.

Defect (bug): a non-conformance to the requirements or the functional specification. A defect can also be something that does not meet valid customer expectations which are assumed but may not be described in the product requirements (what could these be? Your ideas?). A defect may reside in the requirements or any other documentation, or in the design or architecture. A bug can also result from an incorrect environment, configuration or data.
Expected Result: the behaviour of the system (software product) that we expect to see as a result of our actions (inputs). In other words, this is the correct behaviour of the software product. If the actual behaviour of the program differs from the expected one, that behaviour may be considered wrong, and we may say that we have found a defect.

Test Case: a set of test inputs, execution conditions and expected results developed for a particular objective, such as to exercise a particular program path or to verify compliance with a specific requirement.

Test Plan: the part of the overall project documentation that describes what will be tested, when, how, and who will be involved in the testing process. It is the basic document that lists the components to be tested, the quality criteria and testing risks, the resources and schedule of testing, the testing strategy and the testing types.

Build: an intermediate version of a software product released by developers for testing.

WORK PRODUCTS TO BE TESTED
Work products that can be tested:
• Programs, during installation, launch and operation.
• Program code, without launching it.
• Product prototype.
• Product documentation: user manual.
• Project documentation: product architecture, product design. Project plan, test plan. Test cases. Product requirements; functional specifications; on-line help; installation guide and …

SOFTWARE TESTING
Methods. Levels. Types

SOFTWARE TESTING APPROACHES
Static Testing is a process associated with analysing software. Static testing covers testing (verifying) any work product, e.g. code, requirements documents, functional specifications, architecture, design documents, etc. It is one of the most effective ways of detecting defects in the early stages of product development. In short, static testing is everything that can be done to detect defects without running the code.
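As an illustration of static testing applied to code, the sketch below uses Python's standard `ast` module to parse a small, hypothetical piece of source code and report two suspicious patterns without ever executing it. The code under review, the helper `static_review` and its two rules (division by a literal zero, comparison to `None` with `==`) are assumptions made for this example; in practice such checks are usually done by linters or during manual code review.

```python
import ast

# Hypothetical source under review. It is only parsed, never executed.
SOURCE = '''
def average(values):
    total = 0
    for v in values:
        total += v
    return total / 0

def is_empty(x):
    if x == None:
        return True
    return False
'''


def static_review(source: str) -> list[str]:
    """Return findings about `source` without running it (static testing)."""
    findings = []
    tree = ast.parse(source)  # builds a syntax tree; no code is executed
    for node in ast.walk(tree):
        # Rule 1: dividing by the literal 0 always fails at run time.
        if (isinstance(node, ast.BinOp)
                and isinstance(node.op, (ast.Div, ast.FloorDiv, ast.Mod))
                and isinstance(node.right, ast.Constant)
                and node.right.value == 0):
            findings.append(f"line {node.lineno}: division by literal zero")
        # Rule 2: `x == None` instead of `x is None` is a common code defect.
        if isinstance(node, ast.Compare):
            for op, right in zip(node.ops, node.comparators):
                if (isinstance(op, (ast.Eq, ast.NotEq))
                        and isinstance(right, ast.Constant)
                        and right.value is None):
                    findings.append(f"line {node.lineno}: comparison to None with ==/!=")
    return findings


for finding in static_review(SOURCE):
    print(finding)
```

Because `ast.parse` only builds a syntax tree, neither `average` nor `is_empty` is ever defined or called: both defects are found before the program runs, which is what distinguishes static testing from dynamic testing.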
Dynamic Testing is a testing activity that involves operating the software, so it cannot be done without running the code. More precisely, dynamic testing consists of launching the program, running all its functional modules and comparing the product's behaviour with the expected behaviour via the user interface.

SOFTWARE TESTING METHODS
Black box. White box. Grey box.

Black box testing. Testing software based on functional and business requirements by launching and operating it, without knowledge of its internal structure or source code. The tester works with the product as an end user would. The purpose of this method is to check that all functions work properly and to verify that they correspond to the functional requirements.
Advantages:
• The tester does not need programming knowledge.
• Tests are done from the point of view of the client (end user), not the designer/coder.
• Test cases can be designed as soon as the specifications (or requirements) are complete.
Disadvantages:
• Tests can be redundant if the coder has already run the same test cases.
• Test cases are very difficult to design when coding is done ahead of the specifications/design documentation.
• Testing every possible input stream is unrealistic because it would take an inordinate amount of time.

White box testing (glass box testing). The tester uses an understanding of the source code, and access to it, to develop and execute test cases. This method tests the architecture of the system: the design and programming that go into building it. White box testing is usually applied when the application is not yet fully assembled but each of its components, modules, procedures and sub-functions must be checked. White box testing is closely related to unit testing, which is often performed by the developer who understands the logic of the code.
Advantages:
• Some hidden problems can be revealed easily.
• Bug diagnosis can be done quickly.
• We can «run» such tests even if we have no executable code yet.
Disadvantages:
• We need good technical skills to perform such tests.
• It is difficult to imitate end-user behaviour, so some «typical» bugs can be missed.

BLACK BOX VS WHITE BOX TESTING
Synonyms for black-box include: behavioral, functional, opaque-box and closed-box. Synonyms for white-box include: structural, glass-box and clear-box.
Black box testing can generally begin early in software development, i.e. already in the requirements-gathering phase, whereas white box testing has to wait until the design is complete.
The black box strategy can be applied to systems of almost any size, small or large, whereas white box testing is effective only for small pieces of code.
The performance of the application cannot be tested with white box testing, but it can be tested with black box testing.

Gray box testing. In addition to white and black box testing, gray box testing is sometimes mentioned. This method is used when we have access to some parts of the developed product but not to others (e.g. when we use precompiled libraries or external tools as parts of our project).
Advantages:
• We can use the best practices of both the black and white box methods.
• We know for sure what we are going to test and what we are not.
Disadvantages:
• Additional planning and documentation are needed.
• We get the worst of both the black and white box methods.

SOFTWARE TESTING LEVELS
Levels by time:
• Unit testing
• Integration testing
• System testing
• System integration testing
Levels by level of specificity:
• Regression testing
• Acceptance testing
• Alpha testing
• Beta testing

SOFTWARE TESTING LEVELS: TIME
Unit Testing. This level tests a separate module of the system. It may even test a particular part of the code, such as a class.

Integration Testing.
Testing of combined parts of an application to determine whether they function together correctly. Interactions between the applications of a large system can also be checked by this type of testing; in that case it is known as cross-product testing. It is usually performed after unit and functional testing of the modules.

System Testing. Checking the operation of the system as a whole. This testing checks both functional and non-functional requirements.

System Integration Testing verifies that a system is integrated with any external or third-party systems defined in the system requirements: testing the integration of systems and packages, and testing interfaces to external organizations (e.g. Electronic Data Interchange, the Internet).

SOFTWARE TESTING LEVELS: LEVEL OF SPECIFICITY
Acceptance Testing: Formal testing with respect to user needs, requirements and business processes, conducted to determine whether or not a system satisfies the acceptance criteria and to enable the users, customers or other authorized entity to determine whether or not to accept the system.

Regression Testing: Testing of a previously tested program following modification to ensure that defects have not been introduced or uncovered in unchanged areas of the software as a result of the changes made. It is performed when the software or its environment is changed.

Alpha Testing: Simulated or actual operational testing by potential users/customers or an independent test team at the developers' site, but outside the development organization. Alpha testing is often employed for off-the-shelf software as a form of internal acceptance testing.
Beta Testing: Operational testing by potential and/or existing users/customers at an external site not otherwise involved with the developers, to determine whether or not a component or system satisfies the user/customer needs and fits within the business processes. Beta testing is often employed as a form of external acceptance testing for off-the-shelf software in order to acquire feedback from the market.

FUNCTIONAL SOFTWARE TESTING

FUNCTIONAL TESTING
Functional Testing is the process of attempting to find discrepancies between the program and its functional requirements or specifications. Functional testing may be performed manually (manual testing) or with specific tools (automated testing).
Some quotations:
«Testing can be used to show the presence of bugs but never their absence». (E. Dijkstra)
«Testing is the process of executing a program with the intention of finding errors». (G. Myers, The Art of Software Testing, Wiley, 1979)

The goals are:
• Detecting defects in the software product and documenting them.
• Determining whether the software meets its requirements as defined in the Software Requirements Specification (SRS) and other relevant documents.
• Making an objective decision about whether the software product can be delivered to the customer; the decision should be documented in the test result report.

FUNCTIONAL TESTING LEVELS
Smoke Test. The first and shortest test, which checks the basic functionality of the software product. It takes approximately 1-4 hours, depending on program complexity; based on its results, the lead tester decides whether or not to continue further testing.
Critical Path Test. The basic type of test, in which the functions significant for the end user's regular work are checked. As a rule, most of the product requirements are verified by this type of testing.
Extended Test. An in-depth test in which non-typical system behaviour is checked.
Logically tricky scenarios are run, and actions that the end user performs only rarely are carried out.

FUNCTIONAL TESTING TYPES
Regression. Selective re-testing to detect faults introduced during modification of a system or system component, to verify that modifications or bug fixes have not caused new defects, and to verify that the modified system or system component still meets its specified requirements.
New Feature Testing. Testing the new features that have been added since the previous test cycle (since the previous build).

POSITIVE/NEGATIVE TESTING
Positive Testing is testing that attempts to show that a given module of an application does what it is supposed to do. We pass absolutely correct data and run the application in an absolutely correct environment and conditions. If it fails, things are bad.
Negative Testing is testing that attempts to show that the module does something that it is not supposed to do. We «play tricks» with the application, e.g. try to divide by zero and so on. The application is supposed to react correctly (i.e. point out the user's mistakes rather than fail).

SMOKE TESTING VS SANITY TESTING
When a build is received, a smoke test is run to ascertain whether the build is stable and can be considered for further testing. When a new build with minor revisions is obtained, instead of a thorough regression, a sanity test is performed to ascertain that the build has indeed fixed the issues and that the fixes have not introduced new ones. A sanity test is generally a subset of regression testing.

FUNCTIONAL TESTING: RECOMMENDATIONS
• Make software fail; don't just confirm that it works.
• Focus on abnormal and stressful conditions, and on boundary testing.
• Test against the specifications and outside them.
• Assume the hardware may fail.
• Do not plan tests assuming that no errors will be found.
• Be sceptical of everything!
• Involve customers if you can; act like a customer if you can't.
• Emphasise testing of critical functions.
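The positive/negative distinction above, including the divide-by-zero example, can be sketched with Python's standard `unittest` module. Each test method is one test case in the sense defined earlier: an input, an execution condition and an expected result. The function `divide` is a hypothetical feature that takes text typed by a user; the exact error messages it returns are assumptions made for this example.

```python
import unittest


def divide(a: str, b: str) -> str:
    """Hypothetical feature under test: divides two numbers typed by a user.

    On bad input it must point out the user's mistake instead of crashing.
    """
    try:
        x, y = float(a), float(b)
    except ValueError:
        return "Error: please enter numbers only"
    if y == 0:
        return "Error: division by zero is not allowed"
    return f"{x / y:g}"  # compact formatting: 2.5 -> "2.5", -3.0 -> "-3"


class PositiveTests(unittest.TestCase):
    """Correct data, correct conditions: the feature must do what it should."""

    def test_simple_division(self):
        self.assertEqual(divide("10", "4"), "2.5")

    def test_negative_numbers(self):
        self.assertEqual(divide("-9", "3"), "-3")


class NegativeTests(unittest.TestCase):
    """«Playing tricks»: the feature must report the mistake, not fail."""

    def test_division_by_zero(self):
        self.assertEqual(divide("1", "0"), "Error: division by zero is not allowed")

    def test_non_numeric_input(self):
        self.assertEqual(divide("ten", "2"), "Error: please enter numbers only")


if __name__ == "__main__":
    # exit=False lets the script continue after the test run.
    unittest.main(argv=["positive_negative"], exit=False)
```

The positive tests pass only if the feature does its normal job; the negative tests do not pass if the feature crashes (for example with `ZeroDivisionError`) or silently accepts bad input instead of pointing out the user's mistake.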
NON-FUNCTIONAL SOFTWARE TESTING

GUI TESTING
GUI Testing is a process of testing an application's user interface to make sure that it conforms to the design requirements. Items to check include: text box, radio button, check boxes, command buttons; aesthetic conditions, validation conditions, usability conditions, data integrity conditions, date field checks, alpha field checks.

USABILITY TESTING
Usability Testing analyses users' reactions to a product. It checks whether the product is useful and easy to use and learn. All in all, the product must satisfy users' needs.

PERFORMANCE TESTING
Performance Testing: The goal of performance testing is not to find bugs but to eliminate bottlenecks and establish a baseline for future regression testing.
Load Testing: To verify application behaviour under normal and peak load conditions.
Stress Testing: To determine the application's behaviour when it is pushed beyond normal or peak load conditions, e.g. by doubling the baseline number of concurrent users/HTTP connections.
Volume Testing: Testing in which the system is subjected to large volumes of data, e.g. testing a word processor by editing a very large document, or testing a printer by sending it a very large job.
Capacity Testing: To determine how many users and/or transactions a given system will support and still meet performance goals.
Soak Testing: Testing a system with a significant load over a significant period of time, to discover how the system behaves under sustained use.

PERFORMANCE TESTING. EXAMPLE
If a chair is designed for a weight of 100 kg and I weigh 70 kg, sitting on it is normal testing. If I weigh 100 kg, it is load testing. If I weigh 120 kg, it is stress testing.

INSTALLATION TESTING
Installation / Un-installation: This testing checks that the application installs and uninstalls correctly in an environment very close to the real one.
An Installation Test investigates the effects on the application and on the target execution system when the application is installed and executed on the target system. Original installation on a clean target system, update installation on a pre-existing installation, and reinstallation options are explored. The Un-installation Test investigates the effects on the target system when the previously installed application is removed.

LOCALIZATION TESTING
Globalization Testing (Internationalization Testing): The goal is to detect potential problems in the application design that could inhibit globalization. It makes sure that the code can handle all international support without breaking functionality in ways that would cause either data loss or display problems.
Localizability Testing: Verifies that the user interface of the program can easily be translated into any target language without re-engineering or modifying the code.
Localization Testing: Checks the quality of a product's localization for a particular target culture/locale.

NON-FUNCTIONAL SOFTWARE TESTING TYPES
Configuration: Configuration testing checks the compatibility between the software and the hardware. As a rule, a software product is created to work in as many different environments as possible. These tests are conducted to define the product's interaction with its hardware and software environment. The product's reaction to different settings can be checked as well.
Compatibility: Compatibility testing checks the functionality and stability of a product under the supported browsers (for web applications), operating systems and other third-party software used by the product.

RECOVERY TESTING
Recovery Testing is the activity of testing how well an application is able to recover from crashes, hardware failures and other similar problems. Examples:
• While an application is running, suddenly restart the computer, and afterwards check the integrity of the application's data.
• While an application is receiving data from a network, unplug the connecting cable. After some time, plug the cable back in and analyse the application's ability to continue receiving data from the point at which the network connection was lost.
• Restart the system while a browser has a definite number of sessions open. Afterwards, check that the browser is able to recover all of them.

SECURITY TESTING
Security Testing is a process to determine that an information system protects data and maintains functionality as intended. The six basic security concepts that need to be covered by security testing are:
• Confidentiality
• Integrity
• Authentication
• Availability
• Authorization
• Non-repudiation

QUALITY AND ITS CRITERIA
Quality is a property that shows whether or not a product meets its requirements. Quality is determined using precisely established metrics and criteria. There are two aspects of quality: product quality and process quality.
Some assumptions about quality criteria:
• If the customer accepts the implemented product, this is enough to say that the quality of the product is good.
• Product quality is determined by good processes (development, management, testing, etc.).
• If the product meets its requirements, it is a good product.
• A good product has some advantages and has no critical problems.

QUALITY CRITERIA EXAMPLES
• Test coverage not less than 70%.
• 100% of P1 bugs fixed, 90% of P2 bugs fixed, 25% of P3 bugs fixed.
• All new functionality and changes to existing functionality must be implemented fully according to the defined requirements and on time.
• The overall percentage of passed tests, X = (Passed / Executed) × 100%, is equal to or greater than some threshold value.

QUALITY CRITERIA EXAMPLES: FUNNY BUT TRUE
Bob Martin at «Agile 2008» mentioned the following universal metric for any documentation review: «WTF per minute». You only need a timer and a counter. Start the timer and read the document. Every time you find something strange, increment the counter.
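The quality criteria examples above can be turned into an automatic check at the end of a test cycle. The sketch below computes the pass rate X = (Passed / Executed) × 100% and compares the results with the slide's thresholds (at least 70% coverage; 100%, 90% and 25% of P1, P2 and P3 bugs fixed). The 95% pass-rate threshold, the `CycleSummary` structure and the sample numbers are hypothetical; the slide leaves the required value of X open.

```python
from dataclasses import dataclass, field


@dataclass
class CycleSummary:
    """Hypothetical figures collected at the end of one test cycle."""
    executed: int                # number of test cases executed
    passed: int                  # number of test cases that passed
    coverage_percent: float      # test coverage, in percent
    # bug priority -> (fixed, found), e.g. {"P1": (5, 5)}
    bugs: dict[str, tuple[int, int]] = field(default_factory=dict)


MIN_COVERAGE = 70.0                                # from the slide
MIN_FIXED = {"P1": 100.0, "P2": 90.0, "P3": 25.0}  # from the slide
MIN_PASS_RATE = 95.0                               # assumed threshold for X


def pass_rate(summary: CycleSummary) -> float:
    """X = (Passed / Executed) * 100%, computed as Passed * 100 / Executed."""
    if summary.executed == 0:
        raise ValueError("no tests were executed")
    return summary.passed * 100 / summary.executed


def unmet_criteria(summary: CycleSummary) -> list[str]:
    """Return the quality criteria that are NOT met (empty list = all met)."""
    problems = []
    if summary.coverage_percent < MIN_COVERAGE:
        problems.append(f"coverage {summary.coverage_percent}% < {MIN_COVERAGE}%")
    for priority, required in MIN_FIXED.items():
        fixed, found = summary.bugs.get(priority, (0, 0))
        # If no bugs of this priority were found, none are left unfixed.
        fixed_percent = 100.0 if found == 0 else fixed * 100 / found
        if fixed_percent < required:
            problems.append(f"{priority}: {fixed_percent:.0f}% fixed < {required:.0f}%")
    rate = pass_rate(summary)
    if rate < MIN_PASS_RATE:
        problems.append(f"pass rate {rate:.1f}% < {MIN_PASS_RATE}%")
    return problems


summary = CycleSummary(executed=200, passed=192, coverage_percent=74.0,
                       bugs={"P1": (4, 4), "P2": (9, 10), "P3": (2, 12)})
print(f"X = {pass_rate(summary):.1f}%")
print(unmet_criteria(summary) or "all quality criteria are met")
```

With these sample numbers X is 96%, and the coverage, P1 and P2 targets are met, but only 2 of 12 P3 bugs (about 17%) are fixed, so the check reports the P3 criterion as unmet.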
SOFTWARE TESTING AND AVAILABILITY OF DOCUMENTATION
Depending on the availability of documentation, testing can be:
• Formal testing
• Exploratory testing (ad hoc testing)
Exploratory testing is simultaneous learning, test design and test execution.

SOFTWARE TESTING
Typical Mistakes

CLASSIC TESTING MISTAKES OR TESTING MYTHS
• Believing that you can find all defects in the software product, or test the program completely.
• Thinking that the testing team alone is responsible for assuring quality.
• Thinking that the only goal of testing is to find bugs.
• Not finding the important bugs.
• Not reporting usability problems.
• Starting testing too late (bug detection, not bug reduction!).
• Putting stress and load testing off to the last minute.
• Not testing the documentation.
• Not testing installation procedures.
• Failing to correctly identify risky areas.
• Paying more attention to running tests than to designing them.
• Not reviewing test designs.
• Checking that the product does what it is supposed to do, but not that it doesn't do what it is not supposed to do.
• Poor bug reporting.
• Attempting to automate all tests.
• Expecting to re-run all manual tests.

TYPICAL TESTER'S MISTAKES
• Assuming that the program works correctly.
• Unwillingness to register every error found.
• Ignoring or even hiding a problem.
• Falling under the influence of developers who ask you not to submit found defects, or to ignore existing information about defects, without adequate reasons.
• Escalating conflicts with developers.
• Insufficient attention to test planning.
• Writing vague defect reports about non-existent problems.