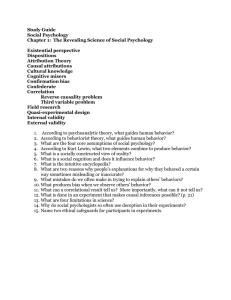

Assessment Bias in the Classroom


Chapter 4: “Validity, Reliability, and Bias”
Presented by Sara Floyd and Sandi Criswell

Three of the most important measurement ideas are validity, reliability, and assessment bias. Basic assessment literacy is really a professional obligation. As teachers, we can’t guarantee that any test-based inference we make is accurate, but a basic understanding of these measurement ideas increases the odds in our favor. The fallout from invalid inferences could be invalid conclusions by administrators, parents, and politicians that you and your school are doing a sub-par job.

Validity

There is no such thing as a valid test. It’s the inference based on a student’s test performance that can be valid or invalid, so all validity analysis should center on the test-based inference rather than on the test itself. If the interpretation is accurate, the teacher has arrived at a valid test-based inference. Once you recognize that educational tests don’t possess some inherent accurate or inaccurate essence, you are more likely to realize that assessment validity rests on human judgment about the inferences derived from students’ test performances, and human judgment is sometimes faulty.

With tests used in large-scale assessments, the quest is to assemble a collection of evidence that supports the validity of the inferences drawn from the test. This evidence is usually gathered across a number of studies. There are three kinds of validity evidence:
1) criterion-related evidence
2) construct-related evidence
3) content-related evidence

State authorities require validity studies before selecting a test or before “launching” a test they have commissioned.

Content-related evidence of validity is the kind classroom teachers are most likely to collect. It tries to establish that a test’s items satisfactorily reflect the content the test is supposed to represent, but it relies on human judgment.
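Because content-related evidence asks whether a test’s items reflect the content the test is meant to represent, one small part of it can be checked mechanically: whether every objective on your list has at least one item. The Python sketch below assumes each item is tagged with exactly one objective; the function name `coverage_report` and the sample objectives and items are hypothetical. It only flags gaps; judging whether an item truly measures its objective still takes human review.

```python
def coverage_report(objectives, item_map):
    """Count items per objective (hypothetical helper).

    objectives: list of objective names you intend the test to cover.
    item_map: dict mapping an item id to the single objective it targets.
    Returns (counts per objective, objectives with no items,
             items tagged with an objective that is not on the list).
    """
    counts = {obj: 0 for obj in objectives}
    unknown = []
    for item, obj in item_map.items():
        if obj in counts:
            counts[obj] += 1
        else:
            unknown.append(item)  # item targets something outside the objective list
    uncovered = [obj for obj, n in counts.items() if n == 0]
    return counts, uncovered, unknown


# Hypothetical objectives and item tags for a midterm.
objectives = ["fractions", "decimals", "percentages"]
item_map = {"Q1": "fractions", "Q2": "fractions", "Q3": "decimals", "Q4": "ratios"}
counts, uncovered, unknown = coverage_report(objectives, item_map)
print(counts)     # {'fractions': 2, 'decimals': 1, 'percentages': 0}
print(uncovered)  # ['percentages']
print(unknown)    # ['Q4']
```

A gap such as "percentages" above would suggest writing new items before the test is given.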
Collect content-related evidence only for your most important tests, such as midterm or final exams, and perhaps ask a colleague or two and/or a parent or two to review the items. Keep a list of the relevant objectives on hand and create specific items to address those objectives. Tests built this way are more likely to yield valid score-based inferences.

Validity in the Classroom

Look at the content of your exams to see whether they can contribute to the kinds of inferences about your students that you truly need to make.

RELIABILITY

Assessment Reliability = Assessment Consistency
- You should be able to give the same, or almost the same, test and get similar results.

Three kinds of reliability:
1. Stability Reliability: Concerns the consistency with which a test measures something over time. (Ex: If a student took a test today and then retook it at the end of the month, with no intervention in between, the student would score about the same.)
2. Alternate-form Reliability: Concerns the consistency of results when there are two or more "equivalent" forms of a test. (Ex: John should score well on Form B if he has scored well on Form A.)
3. Internal-consistency Reliability: Focuses on the consistency among the test items (questions).
   - Do all the test items seem to be doing the same kind of measuring?
   - A single test should be aimed at one variable, no more.

NOTE: ONE KIND OF RELIABILITY DOES NOT ENSURE ANOTHER KIND!

Reliability in the Classroom
- Popham argues that teachers need only to UNDERSTAND reliability and its types.
- It's also important to know that one type of reliability evidence isn't equivalent to another.
- He also suggests that teachers find out the characteristics of the tests they use (Is there evidence of reliability?).
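The three kinds of reliability are usually summarized as coefficients. A common choice, a standard technique rather than something these notes specify, is the Pearson correlation between two sets of scores from the same students (today vs. end of month for stability, Form A vs. Form B for alternate-form reliability) and Cronbach's alpha for internal consistency. The sketch below assumes every student has a score in both lists, or on every item; the function names and all scores are made up for illustration.

```python
import math
from statistics import mean, pvariance


def pearson_r(x, y):
    """Pearson correlation between two score lists for the same students, in the same order."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length score lists with at least 2 students")
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("scores must vary to compute a correlation")
    return cov / (sx * sy)


def cronbach_alpha(item_scores):
    """Cronbach's alpha; item_scores[s][i] is student s's score on item i."""
    k = len(item_scores[0])
    if k < 2:
        raise ValueError("need at least 2 items")
    # Variance of each item across students, and variance of students' total scores.
    item_vars = [pvariance([row[i] for row in item_scores]) for i in range(k)]
    total_var = pvariance([sum(row) for row in item_scores])
    if total_var == 0:
        raise ValueError("total scores must vary to compute alpha")
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Made-up scores for five students, for illustration only.
today = [70, 85, 90, 60, 75]
end_of_month = [72, 83, 91, 62, 74]
print(round(pearson_r(today, end_of_month), 2))  # stability: close to 1 means consistent over time

# Alternate-form reliability uses the same function: pearson_r(form_a_scores, form_b_scores).

# Made-up right/wrong (1/0) answers: four students, three items.
answers = [[1, 1, 1], [1, 1, 0], [0, 1, 0], [0, 0, 0]]
print(cronbach_alpha(answers))  # 0.75
```

Each coefficient answers a different question, which is why a high value for one kind of reliability says nothing about another.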
___________________________________________________________________________________________________________________________________

ASSESSMENT BIAS

Assessment bias occurs when test items offend or unfairly penalize students for reasons related to students' personal characteristics (Popham, p. 55), such as gender, ethnicity, religion, or socioeconomic status.

Large-Scale Assessments (Example: Statewide Tests)
Today, bias-review committees (15-25 members) are created, made up mostly or entirely of members of minority groups. They go through an assessment item by item and render a judgment about whether each item is biased.

Assessment Bias in the Classroom
Teachers MUST act as a bias-review committee for their own assessments.
- Item-by-item scrutiny helps ensure that bias is NOT present.

______________________________________________________________________________________________

Quick Tips
- Recognize that validity refers to a test-based inference, not to a test itself.
- Understand that there are 3 types of validity evidence; all can contribute to the confidence teachers have in the accuracy of a test-based inference about students.
- Assemble content-related evidence of validity for your most important classroom tests.
- Know that there are 3 types of reliability: related but different kinds of evidence that can be collected for educational tests.
- Give serious attention to the detection and elimination of assessment bias in classroom tests.
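When a bias-review committee like the statewide ones described above judges each item, the judgments can be tallied per item. The sketch below is a hypothetical illustration: each reviewer records True if they judge an item biased, and an item is flagged for revision or removal when the share of True judgments exceeds a threshold. The function `flag_items` and the 20% default threshold are assumptions, not a rule from Popham or from these notes.

```python
def flag_items(votes, threshold=0.2):
    """Return item ids whose share of 'biased' judgments exceeds threshold.

    votes maps an item id to a list of reviewer judgments, where True means
    the reviewer judged the item biased. The 0.2 default is a hypothetical choice.
    """
    flagged = []
    for item, judgments in votes.items():
        if not judgments:
            continue  # no reviews recorded for this item
        share = sum(judgments) / len(judgments)
        if share > threshold:
            flagged.append(item)
    return flagged


# Hypothetical judgments from a 15-member committee on three items.
votes = {
    "Q1": [False] * 15,
    "Q2": [True] * 4 + [False] * 11,
    "Q3": [True] * 1 + [False] * 14,
}
print(flag_items(votes))  # ['Q2']
```

A teacher reviewing alone is effectively a one-member committee, so any item they judge biased would be flagged.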