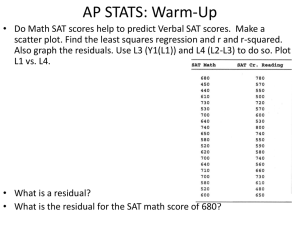

What does $r^2$ really represent?


Chapter 8.3 – $r^2$ and Sum of Squares

What you should learn:

1. How to calculate $r^2$, the coefficient of determination, using Sum of Squares.
2. How to explain the meaning of $r^2$ in terms of variation.
3. How to interpret $r^2$ in the context of a problem.

What does $r^2$ really represent?

We say that $r^2$ represents the percentage of variation in $y$ that can be explained by $x$ using the regression line. But what does that really mean?

Consider a scatterplot with no variation in the y-direction. Meaning, as $x$ varies, nothing happens to the y-value. Here's an example:

Example 1:

| x | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| y | 5 | 5 | 5 | 5 | 5 |

[Figure: scatterplot of Example 1, x-axis from 0 to 6, y-axis from 0 to 6]

Clearly, the mean of the y-values is $\bar{y} = 5$, and the distance of every point from $\bar{y}$ is 0. Also, if I were to draw the LSRL (Least Squares Regression Line) for this set of data, it would be a straight, horizontal line right through all 5 points. The main thing to understand here is that the distance between each point and the mean is 0, and the distance between each point and the LSRL is 0, meaning there is no variation.

Now consider a scatterplot with a perfect positive correlation of $r = +1$. Here's an example:

Example 2:

| x | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| y | 3 | 4 | 5 | 6 | 7 |

[Figure: scatterplot of Example 2, x-axis from 0 to 6, y-axis from 0 to 8]

Our first example had no variation at all in the y-value, but in this example, the y-value increases as x increases. The change means we have variation, but can we measure the amount of variation we have?

Just as we've seen in the way we calculate SD or variance, one way we can measure the amount of variation in our data is by finding the difference between each y-value and $\bar{y}$, then squaring each difference so that none of them is negative. Lastly, we can add all these squared differences together to get the total amount of variation in our data:

$$\sum (y_i - \bar{y})^2$$

This is called the Sum of Squares.

So let's calculate the amount of variation in the y-values of our data. First we need to find the mean of the y-values:

$\bar{y} =$ ______

Next, find the differences between each y-value and $\bar{y}$, then square your differences. These are called "squared differences."

| Difference $y_i - \bar{y}$ | Square each difference $(y_i - \bar{y})^2$ |
|---|---|
| $3 - \bar{y} =$ | |
| $4 - \bar{y} =$ | |
| $5 - \bar{y} =$ | |
| $6 - \bar{y} =$ | |
| $7 - \bar{y} =$ | |

Now, find the total sum of the squared differences:

TOTAL: $\sum (y_i - \bar{y})^2 =$ ______

This is often called the Total Sum of Squares (SST). The SST is the number we use to measure how much variation there is in y.

Consider now the LSRL that goes through the points in the scatterplot. If we estimate the y-values using the LSRL, we get exactly the same y-values as the actual values. So, if we were to find the sum of squares again, but this time using the estimated values instead of the actual values, we would get the same total.

The sum of squares using the actual values is called the Total Sum of Squares (SST), but the sum of squares using the estimated values is called the Sum of Squares due to Regression (SSR). Since the SSR is the same as the SST, we can say that 100% of the variation in our y-variable can be explained by the LSRL.

Now, let's look at one last example, this time one that is not perfectly correlated.

Example 3:

| x | y |
|---|---|
| 1 | 2 |
| 2 | 4 |
| 3 | 3 |
| 4 | 5 |
| 5 | 4 |

[Figure: scatterplot of Example 3, x-axis from 0 to 6, y-axis from 0 to 6]

Note: $r = 0.69$, so it's a moderately strong linear correlation in the positive direction.

First let's calculate the Total Sum of Squares (SST) for our data:

1. Find the mean, $\bar{y}$: $\bar{y} =$ ______
2. Find the squared differences, $(y_i - \bar{y})^2$:
3. Find the Total Sum of Squares, $\sum (y_i - \bar{y})^2$: SST = ______

Put these lists (the x- and y-values) into your calculator lists.

Now let's find the Sum of Squares due to Regression (SSR):

1. Find the LSRL, $\hat{y} = a + bx$, using your calculator. Recall the calculator method is STAT → CALC → 8:LinReg(a+bx).
2. Find the estimated values of y by substituting the values 1 through 5 into your LSRL equation:

   | $x$ | 1 | 2 | 3 | 4 | 5 |
   |---|---|---|---|---|---|
   | $\hat{y}$ | | | | | |

3. Find the squared differences for each estimated value $\hat{y}_i$: $(\hat{y}_i - \bar{y})^2$, with $\bar{y} = 3.6$. (Use the same $\bar{y}$ you used for SST.)
4. Find the Sum of Squares due to Regression (SSR): SSR = $\sum (\hat{y}_i - \bar{y})^2 =$ ______

[Figure: scatterplot of Example 3 with the LSRL, x-axis from 0 to 6, y-axis from 0 to 6]

This time, the SSR is not the same as the SST. The regression line doesn't explain ALL of the variation in our y-variable, but it does explain some of it! How much of it is explained by the regression line? Good question. Let's find the percent of our Total Sum of Squares that is explained by the regression line (the percent of SST made up of SSR):

$$\frac{SSR}{SST} = \frac{2.5}{5.2} = 0.48 = 48\%$$

Thus, 48% of the total variation in $y$ can be explained by the LSRL.

So now let's back up to where I gave you the value of $r = 0.69$. What happens if we square that number?

$$r^2 = (0.69)^2 = 0.48$$

Whoa! That's the same number we got when calculating $\frac{SSR}{SST}$. The proof is below, but that's not important. The important thing is that now we know we have a shortcut for calculating how much of our variation can be explained by our regression line. Just find $r^2$.

$$r^2 = \frac{SSR}{SST}$$

And we can interpret the coefficient of determination, $r^2$, using the following context sentence:

"[$r^2$] percent of the total variation in [y-variable] can be explained by the variation in [x-variable] using the linear regression model."

Revisited Example:

Let's revisit our homework and overall grade example. We found an $r$ value of 0.82. Squaring this gives us an $r^2$ value of 0.67. Thus, we interpret this as, "67% of the variation in overall grades can be explained by the variation in homework percentage, using our regression model."

[Figure: scatterplot of Overall Grade (pre-final), y-axis from 40 to 100, against Homework %, x-axis from 0 to 150]

Proof that $\frac{SSR}{SST} = r^2$:

The proof uses two facts about the LSRL: it passes through $(\bar{x}, \bar{y})$, so $\bar{y} = a + b\bar{x}$, and its slope is $b = r \cdot \frac{s_y}{s_x}$.

$$\frac{SSR}{SST} = \frac{\sum (\hat{y}_i - \bar{y})^2}{\sum (y_i - \bar{y})^2} = \frac{\sum \left[(a + bx_i) - (a + b\bar{x})\right]^2}{\sum (y_i - \bar{y})^2} = \frac{\sum \left[b(x_i - \bar{x})\right]^2}{\sum (y_i - \bar{y})^2}$$

$$= \frac{\sum \left[r \cdot \frac{s_y}{s_x}(x_i - \bar{x})\right]^2}{\sum (y_i - \bar{y})^2} = r^2 \cdot \frac{\sum \left[\frac{s_y}{s_x}(x_i - \bar{x})\right]^2}{\sum (y_i - \bar{y})^2} = r^2 \cdot \frac{\sum \left(\frac{x_i - \bar{x}}{s_x}\right)^2}{\sum \left(\frac{y_i - \bar{y}}{s_y}\right)^2} = r^2 \cdot \frac{n-1}{n-1} = r^2$$

Note the following reasoning behind the second-to-last step in our proof:

$$\sum \left(\frac{x_i - \bar{x}}{s_x}\right)^2 = \frac{\sum (x_i - \bar{x})^2}{s_x^2} = \frac{\sum (x_i - \bar{x})^2}{\dfrac{\sum (x_i - \bar{x})^2}{n-1}} = n - 1$$

The same applies for the y-variable.
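
As a numerical check on Example 3 and on the identity $\frac{SSR}{SST} = r^2$, the calculation can be sketched in Python. This is only an illustrative sketch and not part of the worksheet's calculator method: the function name `sum_of_squares_report` and its dictionary keys are made up for this example, and the slope and intercept come from the standard least-squares formulas rather than from LinReg(a+bx).

```python
from math import sqrt

# Example 3 data, taken from the table in the worksheet.
x = [1, 2, 3, 4, 5]
y = [2, 4, 3, 5, 4]


def mean(values):
    """Arithmetic mean of a list of numbers."""
    return sum(values) / len(values)


def sum_of_squares_report(x, y):
    """Return SST, SSR, r and the LSRL coefficients for paired data.

    Hypothetical helper for illustration only.
    """
    x_bar, y_bar = mean(x), mean(y)

    # Sums of squared deviations and cross-products.
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))

    # Total Sum of Squares: variation of the actual y-values around y-bar.
    sst = sum((yi - y_bar) ** 2 for yi in y)

    # Least-squares line y-hat = a + b*x.
    b = sxy / sxx
    a = y_bar - b * x_bar
    y_hat = [a + b * xi for xi in x]

    # Sum of Squares due to Regression: variation of y-hat around y-bar.
    ssr = sum((yh - y_bar) ** 2 for yh in y_hat)

    # Correlation coefficient.
    r = sxy / sqrt(sxx * sst)

    return {"a": a, "b": b, "sst": sst, "ssr": ssr, "r": r}


result = sum_of_squares_report(x, y)
print("SST     =", round(result["sst"], 4))
print("SSR     =", round(result["ssr"], 4))
print("SSR/SST =", round(result["ssr"] / result["sst"], 4))
print("r       =", round(result["r"], 4))
print("r^2     =", round(result["r"] ** 2, 4))
```

The last two printed ratios should agree up to rounding, which is the shortcut the worksheet describes: squaring $r$ gives the fraction of SST accounted for by SSR.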