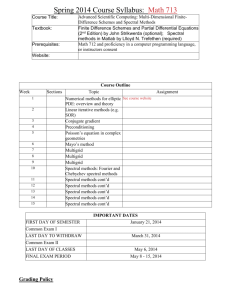

Document

advertisement

Spectral methods for initial value problems and integral equations

Tang Tao

Department of Mathematics, Hong Kong Baptist University

International Workshop on Scientific Computing

On the Occasion of Prof Cui Jun-zhi’s 70th Birthday

Outline of the talk

Motivations (accuracy in time)

Spectral postprocessing (efficiency)

Singular kernels

Delay-differential equations

Extensions

Joint with Cheng Jin, Xu Xiang (Fudan)


Spectral postprocessing (Tang and X. Xu/Fudan)

We begin by considering a simple ordinary differential equation with given initial value:

$$y'(x) = g(y; x), \quad 0 < x \le T, \tag{1.1}$$

$$y(0) = y_0. \tag{1.2}$$

Can we obtain an exponential rate of convergence for (1.1)-(1.2)?

For BVPs, the answer is positive and well known.

For the IVP (1.1)-(1.2), spectral methods are not attractive, since (1.1)-(1.2) is a local problem.

A global method requires more storage and computational time (a linear system has to be solved for large T, or a nonlinear system if g in (1.1) is nonlinear).

Spectral postprocessing

Purpose: a spectral postprocessing technique which uses lower order methods to provide starting values.

A few Gauss-Seidel type iterations for a well designed spectral method.

Aim: to recover the exponential rate of convergence with little extra computational resource.

Formulas …

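We introduce the linear coordinate transformation

$$x = \frac{T - t_0}{2}\, s + \frac{T + t_0}{2}, \quad -1 \le s \le 1,$$

and the transformations

$$Y(s) = y\!\left(\frac{T - t_0}{2}\, s + \frac{T + t_0}{2}\right), \quad G(Y; s) = \frac{T - t_0}{2}\, g\!\left(Y; \frac{T - t_0}{2}\, s + \frac{T + t_0}{2}\right),$$

where $t_0$ denotes the initial point ($t_0 = 0$ in (1.2)).

Then problem (1.1)-(1.2) becomes

$$Y'(s) = G(Y; s), \quad -1 < s \le 1; \qquad Y(-1) = y_0.$$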

Let $\{s_j\}_{j=0}^{N}$ be the Chebyshev-Gauss-Lobatto points:

$$s_j = \cos\frac{\pi j}{N}, \quad 0 \le j \le N.$$

We project G onto the polynomial space $P_N$:

$$G(Y; s) \approx \sum_{j=0}^{N} G(Y_j; s_j)\, F_j(s),$$

where $F_j$ is the j-th Lagrange interpolation polynomial associated with the Chebyshev-Gauss-Lobatto points.

Formulas …

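Since $F_j \in P_N$, it can be expanded in the Chebyshev basis functions:

$$F_j(s) = \sum_{m=0}^{N} \alpha_{mj}\, T_m(s).$$

Assume this holds at the collocation points $\{s_i\}_{i=0}^{N}$, i.e.,

$$F_j(s_i) = \sum_{m=0}^{N} \alpha_{mj}\, T_m(s_i), \quad 0 \le i \le N,$$

which gives

$$\alpha_{mj} = \frac{2}{N \tilde c_m \tilde c_j} \cos\frac{jm\pi}{N}.$$

We finally obtain the following numerical scheme:

$$Y_i = y_0 + \sum_{j=0}^{N} \beta_{ij}\, G(Y_j; s_j), \tag{1.3}$$

where

$$\beta_{ij} = \frac{1}{N \tilde c_j} \sum_{m=0}^{N} \frac{1}{\tilde c_m} \cos\frac{jm\pi}{N} \left[ \frac{1}{m+1}\, T_{m+1}(s_i) - \frac{1}{m-1}\, T_{m-1}(s_i) - \frac{2(-1)^m}{m^2 - 1} \right].$$

Note that $T_{m\pm 1}(s_i) = \cos\big(i(m \pm 1)\pi / N\big)$.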
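For reference, the bracket in (1.3) comes from integrating each Chebyshev polynomial from $-1$ to $s$. The identities below are the standard ones; the general formula applies for $m \ge 2$, and the cases $m = 0, 1$ are integrated directly:

$$\int_{-1}^{s} T_m(\sigma)\, d\sigma = \frac{1}{2}\left[ \frac{T_{m+1}(s)}{m+1} - \frac{T_{m-1}(s)}{m-1} \right] - \frac{(-1)^m}{m^2 - 1}, \quad m \ge 2,$$

$$\int_{-1}^{s} T_0(\sigma)\, d\sigma = s + 1, \qquad \int_{-1}^{s} T_1(\sigma)\, d\sigma = \frac{s^2 - 1}{2}.$$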


Legendre collocation (Lobatto III)

Let $\{x_j\}_{j=0}^{N}$ be the Legendre-Gauss-Lobatto points. We obtain the following numerical scheme:

$$Y_i = y_0 + \sum_{j=0}^{N} w_{ji}\, G(Y_j, x_j), \tag{1.4}$$

where

$$w_{ji} = \frac{1}{N(N+1)} \sum_{m=0}^{N} \frac{L_m(x_j)}{[L_N(x_j)]^2} \big[ L_{m+1}(x_i) - L_{m-1}(x_i) \big].$$

Example 1

Consider a simple example

$$y' = y + \cos(x+1)\, e^{x+1}, \quad x \in (-1, 1], \qquad y(-1) = 1.$$

The exact solution of the problem is $y = (1 + \sin(x+1)) \exp(x+1)$.

First, use the explicit Euler method to solve the problem (with a fixed mesh size h = 0.1).

Then use the spectral postprocessing formulas to update the solution with Gauss-Seidel type iterations.
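The two steps of Example 1 can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code, and it rests on several assumptions: it uses Chebyshev-Gauss-Lobatto points (the setting of (1.3)) rather than the Legendre scheme (1.4); it builds the integration matrix numerically with NumPy's Chebyshev helpers instead of the closed-form coefficients; it interpolates the Euler values linearly onto the collocation points; and the names `integration_matrix`, `postprocess` and the sweep count are hypothetical choices.

```python
import numpy as np
from numpy.polynomial import chebyshev as cheb


def integration_matrix(N):
    """Return CGL points s and B with B[i, j] = integral of F_j over [-1, s_i]."""
    s = np.cos(np.pi * np.arange(N + 1) / N)     # s_0 = 1, ..., s_N = -1
    coef = cheb.chebfit(s, np.eye(N + 1), N)     # column j: Chebyshev coefficients of F_j
    anti = cheb.chebint(coef, lbnd=-1.0)         # antiderivatives that vanish at s = -1
    return s, cheb.chebval(s, anti).T


def g(y, x):
    """Right-hand side of Example 1."""
    return y + np.cos(x + 1.0) * np.exp(x + 1.0)


def exact(x):
    return (1.0 + np.sin(x + 1.0)) * np.exp(x + 1.0)


def euler(h=0.1, y0=1.0):
    """Explicit Euler on a uniform mesh over [-1, 1]."""
    x = np.linspace(-1.0, 1.0, int(round(2.0 / h)) + 1)
    y = np.empty_like(x)
    y[0] = y0
    for n in range(len(x) - 1):
        y[n + 1] = y[n] + h * g(y[n], x[n])
    return x, y


def postprocess(N=16, sweeps=60, y0=1.0):
    """Refine Euler starting values with Gauss-Seidel type sweeps of Y = y0 + B G(Y)."""
    s, B = integration_matrix(N)
    xe, ye = euler()
    Y = np.interp(s, xe, ye)                     # low-order starting values (assumed: linear interpolation)
    err_start = np.max(np.abs(Y - exact(s)))
    for _ in range(sweeps):
        for i in range(N, -1, -1):               # sweep from s = -1 towards s = 1
            Y[i] = y0 + B[i] @ g(Y, s)           # in-place update uses the newest values
    return err_start, np.max(np.abs(Y - exact(s)))


if __name__ == "__main__":
    e0, e1 = postprocess()
    print(f"starting error {e0:.2e}, after postprocessing {e1:.2e}")
```

The sweep count here is deliberately generous; the talk reports that only a few iterations are needed in practice.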

Figure (Example 1): errors vs $N_s$ for the spectral postprocessing method (1.4), with (a): Euler, (b): RK2, and (c): RK4 solutions as the initial data.

Spectral postprocessing for Hamiltonian systems

As an application, we apply the spectral postprocessing technique to the Hamiltonian system

$$\frac{dp}{dt} = \nabla_q H(p, q), \qquad \frac{dq}{dt} = -\nabla_p H(p, q), \qquad t_0 \le t \le T, \tag{1.5}$$

with the initial values $p(t_0) = p_0$, $q(t_0) = q_0$.

Feng Kang, Difference schemes for Hamiltonian formalism and symplectic geometry, J. Comput. Math., 4 (1986), pp. 279-289.

4th-order explicit Runge-Kutta

4th-order explicit symplectic method


Spectral postprocessing for Hamiltonian systems

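Integrating (1.5) leads to a system of integral equations

$$p(t) = p_k + \int_{t_k}^{t} \nabla_q H(p, q)\, ds, \qquad q(t) = q_k - \int_{t_k}^{t} \nabla_p H(p, q)\, ds. \tag{1.6}$$

Assume (1.6) holds at the Legendre or Chebyshev collocation points:

$$p(t_{kj}) = p_k + \int_{t_k}^{t_{kj}} \nabla_q H(p, q)\, ds, \qquad q(t_{kj}) = q_k - \int_{t_k}^{t_{kj}} \nabla_p H(p, q)\, ds, \tag{1.7}$$

where

$$t_{kj} = (t_k + 1) + \theta_j, \quad 0 \le j \le N,$$

with $\theta_j$ the collocation points on $[-1, 1]$.

We can discretize the integral terms in (1.7) using Gauss quadrature together with the Lagrange interpolation:

$$p(t_{kj}) \approx p_k + \frac{t_{kj} - t_k}{2} \sum_{l=0}^{N} \nabla_q H\big(I_N p(s_{kjl}),\, I_N q(s_{kjl})\big)\, w_l,$$

$$q(t_{kj}) \approx q_k - \frac{t_{kj} - t_k}{2} \sum_{l=0}^{N} \nabla_p H\big(I_N p(s_{kjl}),\, I_N q(s_{kjl})\big)\, w_l.$$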

Example 2

Consider the Hamiltonian problem (1.5) with

$$H(p, q) = \frac{1}{2}(p^2 + q^2), \qquad p(t_0) = \sin(t_0), \quad q(t_0) = \cos(t_0).$$

This system has the exact solution $(p, q) = (\sin t, \cos t)$.

We take T = 1000 in our computations.

Table 1(a) presents the maximum error for $t \in [0, 1000]$ using both the RK4 method and the symplectic method.

Table 1(b) shows the performance of the postprocessing with initial data in $[t_k, t_k + 2]$ generated by RK4 ($\Delta t = 0.1$).

To reach the same accuracy of about $10^{-10}$, the symplectic scheme without postprocessing requires about 5 times more CPU time.

(a)

| $\Delta t$ | RK4: Max. Error | RK4: CPU time | Symplectic: Max. Error | Symplectic: CPU time |
|---|---|---|---|---|
| $10^{-1}$ | blow up | | 9.20e-03 | 0.16s |
| $10^{-2}$ | 1.81e-0 | 1.60s | 1.32e-06 | 1.52s |
| $10^{-3}$ | 2.43e-2 | 12.02s | 9.16e-11 | 10.60s |

(b)

| N | iter step=3: Max. Error | iter step=3: CPU time | iter step=6: Max. Error | iter step=6: CPU time |
|---|---|---|---|---|
| 8 | 1.74e-2 | 1.796s | 5.49e-07 | 1.828s |
| 10 | 1.74e-2 | 1.813s | 5.49e-07 | 1.843s |
| 12 | 1.74e-2 | 1.828s | 5.49e-07 | 1.859s |

(c)

| N | iter step=3: Max. Error | iter step=3: CPU time | iter step=6: Max. Error | iter step=6: CPU time |
|---|---|---|---|---|
| 8 | 2.23e-5 | 1.797s | 7.07e-10 | 1.843s |
| 10 | 2.17e-5 | 1.812s | 6.83e-10 | 1.862s |
| 12 | 2.14e-5 | 1.860s | 6.83e-10 | 1.906s |

Example 2. (a): the maximum errors obtained by RK4 and the symplectic method; (b): spectral postprocessing results using RK4 ($\Delta t = 0.1$) as the initial data in each sub-interval $[t_k, t_k + 2]$; (c): same as (b), except that RK4 is replaced by the symplectic method. Here N denotes the number of spectral collocation points used.

Figure (Example 2): errors vs $N_s$ and iterative steps with (a): RK4 results and (b): symplectic results as the initial data.

Spectral postprocessing for Volterra integral equations

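A Legendre spectral method is proposed and analyzed for Volterra-type integral equations:

$$u(x) + \int_a^x k(x, s, u(s))\, ds = g(x), \quad x \in [a, b], \tag{1.8}$$

where the kernel k and the source term g are given.

Let $\{\theta_i\}_{i=0}^{N_s}$ be the zeros of the Legendre polynomial of degree $N_s + 1$, i.e., $L_{N_s+1}(x)$. Then the spectral collocation points are

$$x_i^s = \frac{b - a}{2}\, \theta_i + \frac{b + a}{2}.$$

We collocate (1.8) at the above points:

$$u(x_i^s) = g(x_i^s) - \int_a^{x_i^s} k(x_i^s, s, u(s))\, ds, \quad 0 \le i \le N_s.$$

Using the linear transform

$$s(\theta) = \frac{x - a}{2}\, \theta + \frac{x + a}{2}, \qquad s_i(\theta) = \frac{x_i^s - a}{2}\, \theta + \frac{x_i^s + a}{2}, \qquad -1 \le \theta \le 1,$$

we have

$$u(x_i^s) \approx g(x_i^s) - \frac{x_i^s - a}{2} \sum_{k=0}^{N_s} k\big(x_i^s,\, s_i(\theta_k),\, u(s_i(\theta_k))\big)\, w_k, \quad 0 \le i \le N_s.$$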
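The collocation-plus-quadrature step above can be sketched for the linear case $k(x, s, u) = K(x, s)\,u$, where the discrete equations form a linear system. This is only an illustration under stated assumptions: the values $u(s_i(\theta_k))$ are replaced by the Lagrange interpolant through the collocation values, the system is solved directly rather than iteratively, and the function name `solve_volterra` and the test kernel $K(x, s) = x - s$ with solution $u = e^x$ are hypothetical choices, not taken from the talk.

```python
import numpy as np
from numpy.polynomial import legendre as leg


def solve_volterra(K, g, Ns, a=-1.0, b=1.0):
    """Collocate u(x) + int_a^x K(x, s) u(s) ds = g(x) at mapped Legendre-Gauss points."""
    theta, w = leg.leggauss(Ns + 1)                # zeros of L_{Ns+1} and Gauss weights
    x = 0.5 * (b - a) * theta + 0.5 * (b + a)      # collocation points x_i
    # s[i, k] = s_i(theta_k): Gauss nodes mapped from [-1, 1] onto [a, x_i]
    s = 0.5 * (x[:, None] - a) * theta[None, :] + 0.5 * (x[:, None] + a)
    coef = leg.legfit(x, np.eye(Ns + 1), Ns)       # column j: Legendre coefficients of F_j
    F = leg.legval(s, coef)                        # F[j, i, k] = F_j(s[i, k])
    Kv = K(x[:, None], s)                          # Kv[i, k] = K(x_i, s[i, k])
    quad = np.einsum("ik,k,jik->ij", Kv, w, F)     # sum over the quadrature index k
    A = np.eye(Ns + 1) + 0.5 * (x - a)[:, None] * quad
    return x, np.linalg.solve(A, g(x))


if __name__ == "__main__":
    # Hypothetical test: K(x, s) = x - s, exact solution u(x) = exp(x) on [-1, 1].
    x, u = solve_volterra(lambda x, s: x - s,
                          lambda x: 2.0 * np.exp(x) - (x + 2.0) / np.e,
                          Ns=12)
    print(np.max(np.abs(u - np.exp(x))))
```

For a nonlinear kernel such as the one in Example 4, the same matrices can be reused inside an iteration, with the low-order solution as the starting guess.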


Example 4

Consider Eq. (1.8) with

$$k(x, s, u) = \frac{2 \tan(u)}{1 + x^2 + s^2}, \qquad a = -1, \quad b = 1,$$

$$g(x) = \arctan(x) + \ln(1 + 2x^2) - \ln(2 + x^2).$$

Figure (Example 4): errors vs $N_s$ and iterative steps.

The convergence analysis

[Tang, Xu, Cheng/Fudan Univ]

$$u(x) + \int_{-1}^{x} K(x, s)\, u(s)\, ds = g(x), \quad x \in [-1, 1]. \tag{1.9}$$

Theorem 1 Let u be the exact solution of the Volterra equation (1.9) and assume that

$$U(x) = \sum_{j=0}^{N} u_j F_j(x),$$

where $u_j$ is given by the spectral collocation method and $F_j(x)$ is the j-th Lagrange basis function associated with the Gauss points $\{x_j\}_{j=0}^{N}$. If $u \in H^m(I)$, then for $m \ge 1$,

$$\| u - U \|_{L^\infty(I)} \le C N^{1/2 - m} \max_{1 \le i \le N} \big| K(x_i, s(x_i, \cdot)) \big|_{\tilde H^{m,N}(I)} \, \| u \|_{L^2(I)} + C N^{-m} |u|_{\tilde H^{m,N}(I)},$$

provided that N is sufficiently large.

The convergence analysis (Proof ingredients)

Lemma 3.1 Assume that an (N+1)-point Gauss, Gauss-Radau, or Gauss-Lobatto quadrature formula relative to the Legendre weight is used to integrate the product $u\phi$, where $u \in H^m(I)$ with $I := (-1, 1)$ for some $m \ge 1$ and $\phi \in P_N$. Then there exists a constant C independent of N such that

$$\left| \int_{-1}^{1} u(x) \phi(x)\, dx - (u, \phi)_N \right| \le C N^{-m} |u|_{\tilde H^{m,N}(I)} \, \| \phi \|_{L^2(I)}.$$

Lemma 3.2 Assume that $u \in H^m(I)$ and denote by $I_N u$ its interpolation polynomial associated with the (N+1)-point Gauss, Gauss-Radau, or Gauss-Lobatto points $\{x_j\}_{j=0}^{N}$. Then

$$\| u - I_N u \|_{L^2(I)} \le C N^{-m} |u|_{\tilde H^{m,N}(I)}.$$

Lemma 3.3 Assume that $F_j(x)$, $0 \le j \le N$, are the Lagrange interpolation polynomials of degree N associated with the Gauss, Gauss-Radau, or Gauss-Lobatto points. Then

$$\max_{x \in (-1, 1)} \sum_{j=0}^{N} |F_j(x)| \sim \frac{2^{3/2}}{\sqrt{\pi}}\, N^{1/2}.$$

Methods and convergence analysis for

$$u(t) + \int_0^t (t - s)^{-\alpha}\, k(t, s)\, u(s)\, ds = g(t), \quad 0 \le t \le T.$$

[Yanping Chen and Tang]

(a) Chebyshev spectral method for $\alpha = 0.5$

(b) Jacobi spectral method for general $\alpha$

Spectral methods for pantograph-type DDEs

Ishtiaq Ali (CAS)

Hermann Brunner (Newfoundland/HKBU)

Tao Tang

Consider the delay differential equation:

$$u'(x) = a(x)\, u(qx), \quad 0 < x \le T, \qquad u(0) = y_0,$$

where 0 < q < 1 is a given constant …

Using a simple transformation, the above problem becomes

$$y'(t) = b(t)\, y(qt + q - 1), \quad -1 < t \le 1, \qquad y(-1) = y_0.$$
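One change of variables that produces this form is sketched below. The slide does not spell the transformation out, so the map $x = T(1+t)/2$ is an assumption; the rest follows by the chain rule:

$$x = \frac{T}{2}(1 + t), \qquad y(t) = u\!\left(\frac{T}{2}(1 + t)\right), \qquad b(t) = \frac{T}{2}\, a\!\left(\frac{T}{2}(1 + t)\right),$$

$$qx = \frac{T}{2}\big(1 + (qt + q - 1)\big) \;\Longrightarrow\; u(qx) = y(qt + q - 1), \qquad y'(t) = \frac{T}{2}\, u'(x) = b(t)\, y(qt + q - 1).$$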


Difficulties in using finite-difference type methods

(a) u(qx): the delayed arguments do not fall on the grid points, so interpolation is needed, which makes high-order methods difficult to obtain.

(b) Stable numerical methods are difficult to obtain (analysis has been available for q = 0.5 only).

(c) Difficulties arise when q is close to 0 or 1.

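$$y(t_j) = y_0 + \frac{1}{q} \int_{-1}^{q t_j + q - 1} b\!\left(\frac{v - q + 1}{q}\right) y(v)\, dv, \quad j \ge 1.$$

Projecting the above integrand onto $P_N$, we have

$$b\!\left(\frac{v - q + 1}{q}\right) y(v) \approx \sum_{k=0}^{N} b\!\left(\frac{t_k - q + 1}{q}\right) y(t_k)\, F_k(v).$$

Expand $F_k(v)$ in terms of the Legendre polynomials:

$$F_k(v) = \sum_{m=0}^{N} c_{km} L_m(v).$$

This gives the scheme

$$Y_j = y_0 + \sum_{k=0}^{N} b_k Y_k\, w_{k,j}, \quad 1 \le j \le N,$$

$$w_{k,j} = \frac{1}{q N (N+1) [L_N(x_k)]^2} \sum_{m=0}^{N} L_m(x_k) \big[ L_{m+1}(q t_j + q - 1) - L_{m-1}(q t_j + q - 1) \big].$$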
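The scheme above can be sketched as a small linear solve. This is an illustration under stated assumptions, not the authors' implementation: the weights are obtained by integrating the Lagrange basis numerically with NumPy's Legendre helpers instead of the closed-form $w_{k,j}$; the nodes are taken to be Legendre-Gauss-Lobatto points; and the helper names and the test problem ($b(t) = e^{(1-q)(t+1)}$, for which $y(t) = e^t$ solves the transformed equation) are hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre as leg


def lgl_points(N):
    """Legendre-Gauss-Lobatto points: -1, the zeros of L_N', and 1."""
    interior = leg.Legendre.basis(N).deriv().roots()
    return np.concatenate(([-1.0], np.sort(interior.real), [1.0]))


def solve_pantograph(b, q, y0, N):
    """Solve y'(t) = b(t) y(q t + q - 1), y(-1) = y0, in the integrated form above."""
    t = lgl_points(N)
    coef = leg.legfit(t, np.eye(N + 1), N)         # column k: Legendre coefficients of F_k
    anti = leg.legint(coef, lbnd=-1.0)             # antiderivatives vanishing at v = -1
    upper = q * t + q - 1.0                        # upper limits q t_j + q - 1
    W = leg.legval(upper, anti).T / q              # W[j, k] = (1/q) * int_{-1}^{upper_j} F_k(v) dv
    bk = b((t - q + 1.0) / q)                      # b evaluated at (t_k - q + 1) / q
    A = np.eye(N + 1) - W * bk[None, :]            # (I - W diag(b_k)) Y = y0
    return t, np.linalg.solve(A, np.full(N + 1, y0))


if __name__ == "__main__":
    q = 0.5
    # Hypothetical test problem with exact solution y(t) = exp(t).
    t, Y = solve_pantograph(lambda t: np.exp((1.0 - q) * (t + 1.0)), q, np.exp(-1.0), N=16)
    print(np.max(np.abs(Y - np.exp(t))))
```

Note that $b$ is evaluated at $(t_k - q + 1)/q$, which can lie outside $[-1, 1]$, so this sketch assumes $b$ is defined and smooth there.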


Theorem: If the function b is sufficiently smooth (which also implies that the solution is smooth), then

$$\| Y - y \|_{L^\infty(I)} \le C N^{-m + 1/2}\, \big| b\, y(q\,\cdot + q - 1) \big|_{\tilde H^{m,N}(I)} + C N^{1/2 - m}\, |b|_{\tilde H^{m,N}(I)} \, \| y \|_{L^2(I)},$$

provided that N is sufficiently large.

Consider the general pantograph equation

$$y'(t) = a(t)\, y(t) + b(t)\, y(qt) + c(t)\, y'(qt) + g(t), \quad t \in (0, 1], \qquad y(0) = 0,$$

with $a(t) = \sin(t)$, $b(t) = \cos(qt)$, $c(t) = -\sin(qt)$, $g(t) = \cos(t) - \sin^2(t)$.

The exact solution of the problem is $y(t) = \sin(t)$.

Figure: $L^\infty$ errors for the general pantograph equation with neutral term. (a): q = 0.5 and (b): q = 0.99.

Spectral methods for fractional diffusion equation

(Huang/Xu/Tang)

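Consider the time fractional diffusion equation of the form

$$\frac{\partial^\alpha u(x, t)}{\partial t^\alpha} - \frac{\partial^2 u(x, t)}{\partial x^2} = f(x, t), \quad x \in \Lambda = (0, L), \quad 0 < t \le T,$$

subject to the following initial and boundary conditions:

$$u(x, 0) = g(x), \quad x \in \Lambda,$$

$$u(0, t) = u(L, t) = 0, \quad 0 \le t \le T,$$

where $\alpha$ is the order of the time fractional derivative. $\partial^\alpha u(x, t) / \partial t^\alpha$ is defined as the Caputo fractional derivative of order $\alpha$, given by

$$\frac{\partial^\alpha u(x, t)}{\partial t^\alpha} = \frac{1}{\Gamma(1 - \alpha)} \int_0^t \frac{\partial u(x, s)}{\partial s}\, \frac{ds}{(t - s)^\alpha}, \quad 0 < \alpha < 1.$$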

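Basic equations for Viscoelastic flows

$$\nabla \cdot \mathbf{v} = 0,$$

$$\rho_0 \left[ \frac{\partial \mathbf{v}}{\partial t} + \nabla \cdot (\mathbf{v} \mathbf{v}) \right] = -\nabla p + \eta_0 \nabla^2 \mathbf{v} + \nabla \cdot \mathbf{S} + \rho_0 \mathbf{g},$$

where $\mathbf{S}$ is an elastic tensor related to the extra-stress tensor $\boldsymbol{\tau}$ of the fluid by $\mathbf{S} = \boldsymbol{\tau} - \eta_0 \dot{\boldsymbol{\gamma}}$, where $\dot{\boldsymbol{\gamma}} = \nabla \mathbf{v} + (\nabla \mathbf{v})^T$ is the rate of deformation tensor.

The extra-stress tensor is given by an adequate constitutive equation,

$$\boldsymbol{\tau}(t) = \int_{-\infty}^{t} M(t - t')\, H(I_1, I_2)\, \mathbf{B}_{t'}(t)\, dt',$$

where the memory function is

$$M(t - t') = \sum_{m=1}^{m_1} \frac{a_m}{\lambda_m}\, e^{-(t - t')/\lambda_m}.$$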

Figure: Predicted streamlines for the flow through a 4:1 planar contraction for Re=1 using the finite volume code of Alves et al. (a) Newtonian; (b) UCM model with We=4.

Happy Birthday, Professor Cui!


Methods and error analysis for delay equations

$$u(t) = a(t)\, u(qt) + \int_0^{qt} (t - s)^{-\alpha}\, b(t, s)\, u(s)\, ds, \quad 0 \le t \le T.$$

[H. Brunner/Newfoundland and HKBU and Tang]