slides - Microsoft Research

advertisement

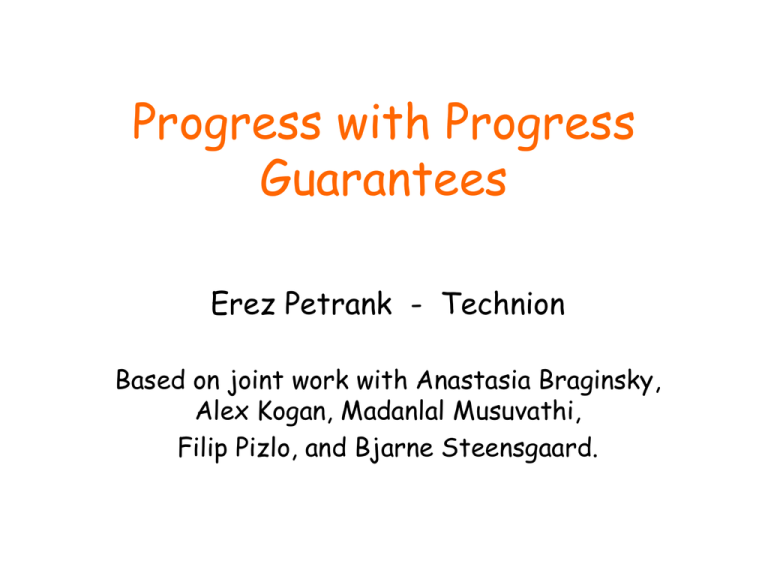

Progress with Progress Guarantees Erez Petrank - Technion Based on joint work with Anastasia Braginsky, Alex Kogan, Madanlal Musuvathi, Filip Pizlo, and Bjarne Steensgaard. Responsive Parallelism • Develop responsive parallel systems by developing stronger algorithms and better system support. • Main tool: progress guarantees. – Guaranteed responsiveness, good scalability, avoiding deadlocks and livelocks. • Particularly important in several domains – real-time systems – Interactive systems (also OS’s) – operating under service level agreement. • Always good to have – If performance is not harmed too much. A Progress Guarantee • Intuitively: “ No matter which interleaving is scheduled, my program will make progress. ” • “Progress” is something the developer defines. – Specific lines of code Progress Guarantees Great guarantee, but difficult to achieve. Wait-Freedom If you schedule enough steps of any thread, it will make progress. Lock-Freedom If you schedule enough steps across all threads, one of them will make progress. The middle way ? Obstruction-Freedom Somewhat weak. If you let any thread run alone for enough steps, it will make progress. This Talk • Advances with progress guarantees. – Some of it is work-in-progress. • • • • • • Attempts for lock-free garbage collection [PLDI 08, ISMM 07] Bounded lock-freedom and lock-free system services [PLDI 09] A lock-free locality-conscious linked-list [ICDCN 11] A Lock-free balanced tree [submitted] A wait-free queue [PPOPP 11] Making wait-freedom fast [submitted] A Lock-Free Garbage Collector • There is no such thing right now. • A recent line of work on real-time GC, allows moving objects concurrently (while the program accesses them). • See Pizlo, Petrank, and Steensgaard at [ISMM 2007, PLDI 2008]. What does it mean to “support” lock-freedom? Services Supporting LockFreedom Services Supporting LockFreedom • Consider system services: event counters, memory management, micro-code interpreters, caching, paging, etc. • Normally, designs of lock-free algorithms assume that the underlying system operations do not foil lock-freedom. Can We Trust Services to Support Lock-Freedom ? • Valois’ lock-free linked-list algorithm has a well known C++ implementation, which uses the C++ new operation. • A hardware problem: lock Free algorithms typically use CAS or LL/SC, but LL/SC implementations are typically weak: spurious failures are permitted. • Background threads increase the chaos (if syncing on shared variables). Conclusion: system support matters. In the Paper • Definition of a lock-free supporting service (including GC), • Definition of a lock-free program that uses services, • A composition theorem for the two. • Also: bounded lock-freedom Musuvathi, Petrank, and Steensgaard. Progress Guarantee via Bounded Lock-Freedom. [PLDI 2009] Open Questions for Progress Guarantees • Some important lock-free data structures are not known – Can we build a balanced tree? • Wait-free data structures are difficult to design. – Can we design some basic ones? • Wait-free implementations are slow. – Can we make them fast? A Locality-Conscious Linked-List [ICDCN’11] A Lock-Free B-Tree [Submitted] Anastasia Braginsky & Erez Petrank 12 A B-Tree • An important instance of balanced trees, suitable for file systems. Typically a large node with many children. • Fast access to the leaves in a very short traversal. • A node is split or merged when it becomes too dense or sparse. 9 52 92 3 7 9 26 31 40 52 63 77 89 92 Lock-Free Locality-Conscious Linked Lists 3 7 9 12 18 25 26 31 40 52 63 77 89 92 • List of constant size ''chunks", with minimal and maximal bounds on the number of elements contained. • Each chunk consists of a small linked list. • When a chunk gets too sparse or dense, it is split or merged with its preceding chunk. • Lock-free, locality-conscious, fast access, scalable 14 Chunk Split or Merge • A chunk that needs splitting or merging is frozen. – No operations are applied on it anymore. – New chunks (with the updated data) replace it . 15 A list Structure (2 Chunks) NULL HEAD Chunk A NextChunk NextChunk EntriesHead EntriesHead Key: 3 Data: G Chunk B Key: 14 Data: K Key: 89 Data: M Key: 67 Data: D Key: 25 Data: A 16 When No More Space for Insertion NULL HEAD Freeze Chunk A NextChunk Key: 6 Data: B NextChunk EntriesHead EntriesHead Key: 3 Data: G Chunk B Key: 14 Data: K Key: 9 Data: C Key: 12 Data: H Key: 89 Data: M Key: 67 Data: D Key: 25 Data: A 17 Split NULL HEAD Freeze Chunk A NextChunk Key: 6 Data: B Chunk C Key: 14 Data: K Key: 9 Data: C Key: 12 Data: H NextChunk EntriesHead Key: 3 Data: G NextChunk EntriesHead EntriesHead Key: 3 Data: G Chunk B Key: 6 Data: B Key: 89 Data: M Key: 67 Data: D Key: 25 Data: A Chunk D NextChunk EntriesHead Key: 9 Data: C Key: 14 Data: K Key: 12 Data: H 18 Split NULL HEAD Freeze Chunk A NextChunk Key: 6 Data: B Chunk C Key: 14 Data: K Key: 9 Data: C Key: 12 Data: H NextChunk EntriesHead Key: 3 Data: G NextChunk EntriesHead EntriesHead Key: 3 Data: G Chunk B Key: 6 Data: B Key: 89 Data: M Key: 67 Data: D Key: 25 Data: A Chunk D NextChunk EntriesHead Key: 9 Data: C Key: 14 Data: K Key: 12 Data: H 19 When a Chunk Gets Sparse HEAD NULL Freeze slave Chunk C Chunk B NextChunk EntriesHead Key: 3 Data: G Key: 6 Data: B NextChunk EntriesHead Key: 9 Data: C Key: 89 Data: M Chunk D Key: 67 Data: D Key: 25 Data: A NextChunk EntriesHead Key: 14 Data: K Freeze master 20 Merge HEAD NULL Freeze slave Chunk C Chunk B NextChunk EntriesHead Key: 3 Data: G EntriesHead Key: 6 Data: B Key: 9 Data: C Chunk E Key: 89 Data: M NextChunk Chunk D EntriesHead Key: 3 Data: G NextChunk Key: 6 Data: B Key: 67 Data: D Key: 25 Data: A NextChunk EntriesHead Key: 14 Data: K Freeze master Key: 9 Data: C Key: 14 Data: K 21 Merge HEAD NULL Freeze slave Chunk C Chunk B NextChunk EntriesHead Key: 3 Data: G EntriesHead Key: 6 Data: B Key: 9 Data: C Chunk E Key: 89 Data: M NextChunk Chunk D EntriesHead Key: 3 Data: G NextChunk Key: 6 Data: B Key: 67 Data: D Key: 25 Data: A NextChunk EntriesHead Key: 14 Data: K Freeze master Key: 9 Data: C Key: 14 Data: K 22 Extending to a Lock-Free B-Tree • Each node holds a chunk, handling splits and merges. • Design simplified by a stringent methodology. • Monotonic life span of a node: infant, normal, frozen, & reclaimed, reduces the variety of possible states. • Care with replacement of old nodes with new ones (while data in both old and new node simultaneously). • Care with search for a neighboring node to merge with and with coordinating the merge. 23 Having built a lock-free B-Tree, let’s look at a … Wait-Free Queue Kogan and Petrank PPOPP’10 FIFO queues • A fundamental and ubiquitous data structures enqueue dequeue 3 2 9 Existing wait-free queues • There exist universal constructions – but are too costly in time & space. • There exist constructions with limited concurrency – [Lamport 83] one enqueuer and one dequeuer. – [David 04] multiple enqueuers, one concurrent dequeuer. – [Jayanti & Petrovic 05] multiple dequeuers, one concurrent enqueuer. A Wait-Free Queue • First wait-free queue (which is dynamic, parallel, and based on CASes). • We extend the lock-free design of Michael & Scott. – part of Java Concurrency package “Wait-Free Queues With Multiple Enqueuers and Dequeuers”. Kogan & Petrank [PPOPP 2011] Building a Wait-Free Data Structure • Universal construction skeleton: The first makes it inefficient. – Publish an operation before starting execution. – Next, help delayed threads and then start executing operation. – Eventually a stuck thread succeeds because all threads help it. • Solve for each specific data structures: – The interaction between threads running the same operation. – In particular, apply each operation exactly once and obtain a consistent return code. The second makes it hard to design. Results • The naïve wait-free queue is 3x slower than the lockfree one. • Optimizations can reduce the ratio to 2x. Can we eliminate the overhead for the wait-free guarantee? Wait-free algorithms are typically slower than lock-free (but guarantee more). Can we eliminate the overhead completely ? Kogan and Petrank [submitted] Reducing the Help Overhead • Standard method: Help all previously registered operations before executing own operation. • But this goes over all threads. • Alternative: help only one thread (cyclically). • This is still wait-free ! An operation can only be interrupted a limited number of times. • Trade-off between efficiency and guarantee. But this is still costly ! Why is Wait-Free Slow ? • Because it needs to spend a lot of time on helping others. • • • • Teacher: Boy: Teacher: Boy: why are you late for school again? I helped an old lady cross the street. why did it take so long? because she didn’t want to cross! • Main idea: typically help is not needed. – Ask for help when you need it; – Provide help infrequently. Fast-Path Slow-Path • When executing an operation, start by running the fast lock-free implementation. Fast path • Upon several failures “switch” to the wait-free implementation. Slow path – Ask for help, – Keep trying. • Once in a while, threads on the fast path check if help is needed and provide help. The ability to switch between modes Start Do I need to help ? yes no Help Someone Apply my op fast path (at most n times) Success ? no Apply my op using slow path yes Return Results for the Queue • There is no observable overhead ! • Implication: it is possible to avoid starvation at no additional cost. – If you can create a fast- and slow- path and can switch between them. Conclusion • Some advances on lock-free garbage collection. • Lock-free services, bounded lock-freedom. • A few new important data structures with progress guarantees: – Lock-free chunked linked-list – Lock-free B-Tree – Wait-free Queue • We have proposed a methodology to make waitfreedom as fast as lock-freedom. 36