Program Effectiveness Plan - Accrediting Bureau of Health

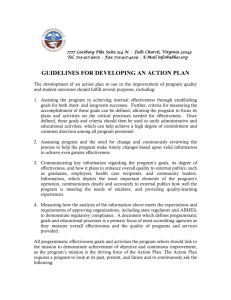

advertisement

Program Effectiveness Plan PAUL PRICE ABHES EDUCATION ADVISOR The Purpose of the PEP Why Use the PEP? How does the PEP assess programs? What purposes should a successful PEP fulfill? How is student achievement measured? How Does the PEP Assess Programs? PEP replaces the IEP (more of a program focus) PEP evaluates each individual program within an educational institution by: Establishing and documenting specific goals Collecting outcomes data relevant to these goals Defining criteria for measurement of goals Analyzing outcomes against both minimally acceptable benchmarks and the program’s short- and long-term objectives Setting strategies to improve program performance What Purposes Does the PEP Fulfill? Developing and using the PEP should fulfill several purposes: Defining criteria for measurement of goals Used to unify administrative and educational activities Assessing progress and the need for change Communicating to the public a process for quality improvement Demonstrating regulatory compliance for approving or accrediting organizations Such a document is a primary focus of most accrediting agencies How is Student Achievement Measured? Program success is based on student achievement in relation to its mission Consideration of the following outcomes indicators: Retention rates Participation in and results of external validating exams Graduation rates Job placement rates Survey responses from students, clinical externship sites, graduates, and employers THE PURPOSE OF THE PEP The PEP requires each program within an institution to look at its past, present, and future, and to continuously ask: Where have we been? Establishes baselines Where are we now? Compares with baselines for needed change Where do we want to go? Setting How goals do we get there? Process used to achieve new direction DEVELOPING THE PEP How do you begin development of the PEP? What kind of data is collected? What do you do with the data? When is data collected and who is responsible? HOW DO YOU BEGIN? First: Collect data on outcomes and achievement of its occupational objectives Chapter V, Section I of the ABHES Accreditation Manual, including Appendix C, Program Effectiveness Plan Maintain data for future comparisons WHAT KIND OF DATA IS COLLECTED? Collect data on each of the educational outcomes areas and achievement of its occupational objectives for each of the programs offered by the institution Include data relevant to improving the program’s overall effectiveness Clearly evidence the level of educational outcomes and satisfaction experienced by current students, graduates, and employers WHAT KIND OF DATA IS COLLECTED? Annual reports IEPs Objectives Retention rate Job placement rates Credentialing exam (participation) Credentialing exam (pass rates) Program assessment exams Satisfaction surveys Faculty professional growth activities In-services WHAT DO YOU DO WITH THE DATA? Next: Analyze data and compare with previous findings Identify necessary changes in operations or activities Set baseline rates WHEN AND WHO? When? Fiscal/calendar year-end In conjunction with annual reporting period (July 1 – June 30) Who? Process involves: Program faculty Administrators Staff Advisory board members Students. Grads, Employers Section I Subsection 1 Program Effectiveness Content OBJECTIVES RETENTION RATE JOB PLACEMENT CREDENTIALING EXAM (PARTICIPATION) CREDENTIALING EXAM (PASS RATES) PROGRAM ASSESSMENT SATISFACTION SURVEYS FACULTY PROFESSIONAL GROWTH AND INSERVICES Program Objectives Standard: Program objectives are consistent with the field of study and the credential offered and include as an objective the comprehensive preparation of program graduates for work in the career field. See examples 1-3, pages 10-11 Program description “At the completion of the program, the student will be able to:” “Objectives of the program are to:” Program Retention Rate Standard: At a minimum, an institution maintains the names of all enrollees by program, start date, and graduation date. The method of calculation, using the reporting period July 1 through June 30, is as follows: (EE + G / (BE + NS + RE) = R% EE = G BE = = NS RE R% = = = Ending enrollment (as of June 30 of the reporting period) Graduates Beginning enrollment (as of July 1 of the new reporting period) New starts Re-entries Retention percentage Ending enrollment How many students were enrolled at the end of the reporting period in that program. So if Rad Tech had 100 students at beginning (July 1) and 65 at end (June 30) what happened to those 35 students (graduate, drop)? See page 12 for examples Program Retention Rate: Establishing Goals A program may elect to establish its goal by an increase of a given percentage each year (for example, 5%) or by determining the percent increase from year to year of the three previous years. Pharmacy Technician Program Retention rates for the past three years, taken from the Annual Report: 2005-2006 80% 2006–2007 81% 2007–2008 85% 1% 4% Avg = 2.5% Projection for 2009: 85 + 2.5 = 87.5% Program Retention Rate: Establishing Goals Some programs may address retention by assigning quarterly grade distribution goals in the percentage of As and Bs for selected courses/classes. See page 13 This chart describes the quarterly grade distribution goals for the anatomy and physiology course in the Radiologic Technology program Based on this distribution, the program might elect to develop strategies to maintain the 82% rate or raise the goal to 85%. A quarterly intervention plan might be developed for those struggling students who are not achieving the higher scores. Such an intervention plan might enhance retention. Program Retention Rate: Establishing Goals Similarly quarterly grade distribution goals could be set in overall enrollment performance. See the chart for Average Quarterly Grade Distribution on page 13-14 Job Placement Rate in the Field Standard: An institution has a system in place to assist with the successful initial employment of its graduates. At a minimum, an institution maintains the names of graduates, place of employment, job title, employer telephone numbers, and employment dates. For any graduates identified as self-employed, an institution maintains evidence of employment. Documentation in the form of employer or graduate verification forms or other evidence of employment is retained. Job Placement Rate in the Field The method of calculation, using the reporting period July 1 through June 30, is as follows: (F + R) / (G-U) = +P% F= R= G= U* = P% = Graduates placed in their field of training Graduates placed in a related field of training Total graduates Graduates unavailable for placement Placement percentage *Unavailable is defined only as documented: health-related issues, military obligations, incarceration, death, or continuing education status. See pages 14-15 for examples Credentialing Examination Participation Rate Standard: Participation of program graduates in credentialing or licensure examinations required for employment in the field in the geographic area(s) where graduates are likely to seek employment. The method of calculation, using ABHES’ reporting period July 1 through June 30, is as follows: Examination participation rate = T/G T = Total graduates eligible to sit for examination G = Total graduates taking examination Credentialing Examination Participation Rate Include results of periodic reviews of exam results along with goals for the upcoming year Devise alternate methods for collection of results that are not easily accessible without student consent Include the three most recent years of data collection (by program or by class) Credentialing Examination Participation Rate EXAMPLE: Nursing Assistant Program PROGRAM Nursing Assistant GRADS NUMBER TOOK EXAM % GRADS TOOK EXAM ‘05 ’06 ‘07 ‘05 ’06 ‘07 ‘05 ’06 ‘07 20 15 75 26 24 20 21 77 88 [2% (percent increase between 2005 & 2006) + 11(percent increase between 2006 & 2007) ÷ 2 = 6.5] So the goal for the number taking the nursing assistant exam in 2008 would be 94.5% (88% + 6.5% = 94.5%) Use trends or averages Credentialing Examination Participation Rate PROGRAM GRADS ’05 ’06 NUMBER TOOK EXAM PERCENT GRADS TOOK EXAM ‘07 Dental Assisting Winter Spring Summer Fall Other data to demonstrate student-learning outcomes may include entrance assessments, pre- and post-tests, course grades, GPA, standardized tests, and portfolios Credentialing Examination Pass Rate Standard: An ongoing review of graduate success on credentialing and/or licensing examinations required for employment in the field in the geographic area(s) where graduates are likely to seek employment is performed to identify curricular areas in need of improvement A program maintains documentation of such review and any pertinent curricular changes made as a result. Credentialing Examination Pass Rate The method of calculation, using ABHES’ reporting period July 1 through June 30, is as follows: F / G= L% F = Graduates passing examination (any attempt) G = Total graduates taking examination L = Percentage of students passing examination Credentialing Examination Pass Rate EXAMPLE: Nursing Assistant Program PROGRAM Nursing Assistant GRADS NUMBER NUMBER TOOK EXAM PASSED PERCENT PASSED ‘05 ’06 ‘07 ‘05 ’06 ‘07 ‘05 ’06 ‘07 ‘05 ’06 ‘07 20 15 12 80 26 24 80 + 75 + 76 / 3 = 77% 20 21 15 16 75 76 Credentialing Examination Participation Rate PROGRAM GRADS ’05 ’06 Dental Assisting Winter Spring Summer Fall NUMBER TOOK EXAM ‘07 NUMBER PASSED PERCENT PASSED Program Assessment Standard: The program assesses each student prior to graduation as an indicator of the program’s quality. The assessment tool is designed to assess curricular quality and to measure overall achievement in the program, as a class, not as a measurement of an individual student’s achievement or progress toward accomplishing the program’s objectives and competencies (e.g., exit tool for graduation). Results of the assessment are not required to be reported to ABHES, but are considered in annual curriculum revision by such parties as the program supervisor, faculty, and the advisory board and are included in the Program Effectiveness Plan. Program Assessment PAEs pinpoint curricular deficiencies Should be designed to incorporate all major elements of the curriculum A well-designed PAE will point directly to that segment of the curriculum that needs remedy Try scoring with ranges, rather than pass/fail. Student, Clinical Extern Affiliate, Graduate, and Employer Satisfaction Standard: A program must survey each of the constituents identified above The purpose of the surveys is to collect data regarding student, extern, clinical affiliate, graduate, and employer perceptions of a program’s strengths and weaknesses For graduates and employers only, the survey used must include the basic elements provided by ABHES in Appendix J, Surveys The required questions identified must be included, in numeric order, to more easily report the basic elements and specific questions provided. Student, Clinical Extern Affiliate, Graduate, and Employer Satisfaction At a minimum, an annual review of results of the surveys is conducted, and results are shared with administration, faculty, and advisory boards Decisions and action plans are based upon review of the surveys, and any changes made are documented (e.g., meeting minutes, memoranda). Survey Participation Survey participation rate: SP / NS = TP SP = NS = TP = Survey Participation (those who actually filled out the survey) Number Surveyed (total number of surveys sent out) Total Participation by program, by group; meaning the number of students/clinical extern affiliates/graduates/employers by program who were sent and completed the survey during the ABHES reporting period (July 1–June 30). Survey Participation For each group surveyed, programs must identify and describe the following: The rationale for the type of data collected How the data was collected Goals A summary and analysis of the survey results How data was used to improve the learning process. Survey Participation The report table format should look like this: Rationale for Data Collection Procedures Goals Summary /Analysis Improvement Strategies Satisfaction Benchmarks and Reporting Student Surveys: Evaluations exhibit student views relating to: Course importance Satisfaction Administration Faculty Training (including externship) Attitudes about the classroom environment Establish a survey return percentage Survey Reporting EXAMPLE Rationale for Data Collection Procedures Goals Summary /Analysis Improvement Strategies Rationale for Data Secure feedback from students on importance and satisfaction on customer service and overall attitudes related to the institution’s administration. Data used to reflect on what worked or didn’t work. End of term student evaluations used as composite of student views relating to course importance and satisfaction and overall class attitudes about the classroom environment. Faculty use the data to determine effective/ineffective activities and compare this information with other classes. Survey Reporting EXAMPLE Rationale for Data Collection Procedures Goals Summary /Analysis Improvement Strategies Collection Procedures Student satisfaction surveys are collected semiannually Survey Reporting Rationale for Data Collection Procedures Goals Summary /Analysis Improvement Strategies EXAMPLE Collection Goals (Benchmarks) Using student satisfaction surveys (orientation through graduation) the benchmarks are: Tutoring Academic Advising Admissions Support Financial Aid Career Services Library Spirited/Fun Environment Orientation Sessions Recognition Mission Statement Admin Accessibility Facility Social Activities 80% 80% 75% 75% 75% 80% 50% 75% 65% 50% 80% 70% 50% Survey return percentage 90% Survey Reporting Rationale for Data Collection Procedures Goals Summary /Analysis Improvement Strategies Summary/Analysis Feedback obtained from completed surveys tallied for each category. Survey Reporting Rationale for Data Collection Procedures Goals Summary /Analysis Improvement Strategies Improvement Strategies The data is collected and benchmarks are set and analyzed for improvement strategies when measures fall below established baselines. Failure to achieve a baseline goal will be addressed at faculty and in-service meetings Clinical Affiliate Standard: Externship site evaluations include a critique of student knowledge and skills upon completion of their in-school training and reflect how well the students are trained to perform their required tasks. They include an assessment of the strengths and weaknesses, and proposed changes, in the instructional activities for currently enrolled students. The sites also evaluate the responsiveness and support provided by the designated school representative, who visited the site and remained in contact with the site throughout the duration of the students’ externship. Clinical Affiliate 2 parts: Student satisfaction with clinical experience Can be secured from Student Surveys Clinical affiliate satisfaction Adequate entry-level knowledge from didactic and lab for clinicals Responsiveness and support of school rep Clinical Affiliate Students on externship should evaluate this experience just as they did in the classroom Should reflect how well the students are trained to perform their required tasks Include assessment of the strengths and weaknesses Proposed changes The sites should also evaluate school representative’s responsiveness and support See pages 20-21 for example Graduate Standard: A program has a systematic plan for regularly surveying graduates. At a minimum, an annual review of the results is conducted and shared with administration, faculty, and advisory boards. Decisions and action plans are based upon the review of the surveys, and any changes made are documented (e.g., meeting minutes, memoranda). The results of the survey questions required by ABHES and identified in Appendix J, Surveys are summarized by numeric value and reported to ABHES in the Annual Report (July 1–June 30 reporting period). Graduate Standardized surveys have been developed by ABHES for graduate satisfaction (located in Appendix J of the Accreditation Manual, 16th ed.) The items must be provided in the order presented. The graduate survey is to be provided to graduates no sooner than 10 days following graduation. Graduate 5 = Strongly Agree 4 = Agree 3 = Acceptable 2 = Disagree 1 = Strongly Disagree 1. I was informed of any credentialing required to work in the field. 2. The classroom/laboratory portions of the program adequately prepared me for my present position. 3. The clinical portion of the program adequately prepared me for my present position. 4. My instructors were knowledgeable in the subject matter and relayed this knowledge to the class clearly. 5. Upon completion of my classroom training, an externship site was available to me, if applicable. 6. I would recommend this program/institution to friends or family members. Graduate The program may use the provided survey only, or may include additional items for internal assessment. Only those items provided by ABHES for graduate satisfaction assessment are to be included in the PEP. May include: Relevance and currency of curricula Quality of advising Administrative and placement services provided Information should be current, representative of the student population, and comprehensive. See pages 22-23 for reporting example Employer Standard: A program has a systematic plan for regularly surveying employers. At a minimum, an annual review of the results is conducted and shared with administration, faculty, and advisory boards. Decisions and action plans are based upon the review of the surveys, and any changes made are documented (e.g., meeting minutes, memoranda). The results of the survey questions required by ABHES and identified in Appendix J, Surveys, are reported to ABHES in the Annual Report (July 1–June 30 reporting period). Standardized surveys have been developed by ABHES for employer satisfaction (located in Appendix J of the Accreditation Manual, 16th ed.). The items must be provided in the order presented. The program may use the provided survey only, or may include additional items for internal assessment. Only those items provided by ABHES for graduate satisfaction assessment are to be included in the PEP. Employer The employer survey is to be provided to the employer no fewer than 30 days following employment. 5 = Strongly Agree 4 = Agree 3 = Acceptable 2 = Disagree 1 = Strongly Disagree Employer survey satisfaction items are as follows: 1. The employee demonstrates acceptable training in the area for which he/she is employed. 2. The employee has the skill level necessary for the job. 3. I would hire other graduates of this program (Yes / No) Employer Major part of determining program effectiveness Reflects how well employees (graduates) are trained to perform their required tasks Includes an assessment of the strengths and weaknesses Proposed changes The program should also establish a percentage survey return goal See page 24 for reporting example Faculty Professional Growth and InService Activities Evidence faculty participation in professional growth activities and in-service sessions Include schedule, attendance roster, and topics discussed Show that sessions promote continuous evaluation of the Program of study Training in instructional procedures Review of other aspects of the educational programs Include the past two years and professional activities outside the institution for each faculty member See Page 25 for reporting example Subsection 2 Outcomes Assessment HISTORICAL OUTCOMES TYPES AND USES OF DATA BASELINES AND MEASUREMENTS SUMMARY AND ANALYSIS OF DATA USING DATA FOR IMPROVEMENT GOAL ADJUSTMENTS GOAL PLANS FOR FOLLOWING YEAR Outcome Assessment Outcomes, though not limited to, are generally defined in terms of the following indicators: Retention Job placement External validation (e.g., PAE, certification/licensing exam) Student, graduate, extern affiliate, and employer satisfaction (through surveys). Outcome Assessment The PEP offers a program the chance to evaluate its overall effectiveness by: Systematically collecting data on each of the outcomes indicators Analyzing the data and comparing it with previous findings Identifying changes to be made (based on the findings) Use at least three years’ historical outcomes The last three PEPs (or IEPs if applicable) and Annual Reports should provide the necessary historical data Baselines and Measurements Evaluate at least annually to determine initial baseline rates and measurements of results after planned activities have occurred. Evaluate at least once per year at a predetermined time (monthly or quarterly) Complete an annual comprehensive evaluation Data Collection Data should clearly evidence the level of educational outcomes for retention, placement and satisfaction Information relevant to improving overall effectiveness In-service training programs Professional growth opportunities for faculty Data Collection Studies of student performance might include: Admission assessments Grades by course Standardized tests Quarterly grade distribution Pre-test and post-test results Portfolios Graduate certification examination results Average daily attendance A few examples of possible surveys and studies include: New or entering student surveys Faculty evaluation studies Student demographic studies Program evaluations Alumni surveys Labor market surveys Categories Data Collection Types used for assessment How collected Rationale for use Timetable for collection Parties responsible for collection Rationale for Use Goals Who Responsible Review Dates Summary/Analysis Strategy Adjustment Summary/Analysis Improvement Strategies Problems/Deficiencies Specific Activities Data Collection Rationale for Use Data Rationale Collection for Use Types used for assessment Goals Goals Goals How collected Who Responsible Summary/ Analysis Improvement Strategies Summary/ Problems/ Analysis Deficiencies Timetable for collection Review Dates Summary/ Analysis Specific Activities Parties responsible for collection Strategy Adjustment Categories Example of Goals, Who Responsible, Review Dates, Summary/Analysis, Strategy Adjustment Page 29 Goals Programs establish specific goals for benchmarks to measure improvement Goals can be set as an annual incremental increase or set as a static goal (e.g., 85 percent for retention and placement). Annually monitor activities conducted Categories Summary and Analysis Provide a summary and analysis of data collected and state how continuous improvement is made to enhance expected outcomes. Provide overview of the data collected Summarize the findings that indicate the program’s strong and weak areas with plans for improvements Use results to develop the basis for the next annual review Present new ideas for changes Categories Summary and Analysis An example of how a program may evaluate the PEP could be by completing the following activities: Measuring the degree to which educational goals have been achieved. Conducting a comprehensive evaluation of the core indicators Summarizing the programmatic changes that have been developed Documenting changes in programmatic processes Revised goals Planning documents Program goals and activities Categories Strategies to Improve Examples of changes to a process that can enhance a program: If a course requires a certain amount of outside laboratory or practice time and an analysis of the students’ actual laboratory or practice time demonstrates that the students are not completing the required hours, formally scheduling those hours or adding additional laboratory times may dramatically increase the effectiveness of that course. If an analysis of the data demonstrates that a large number of students are failing a specific course or are withdrawing in excessive numbers, the program may change the prerequisites for that course or offer extra lab hours or tutoring to see if the failure or withdrawal rate are positively affected. Categories Strategies to Improve Examples of changes to a program that can enhance a program: If the analysis of the data indicates that large numbers of students are dropping or failing a course when taught by a particular instructor, the instructor may need additional training or a different instructor may need to be assigned to teach that course. If surveys from employers and graduates indicate that a particular software program should be taught to provide the students with up-to-date training according to industry standards, the program could add instruction in the use of the particular software program. Examples for Reporting of Outcomes Examples Data Collection and Rationale for Use Goals, Summary/Analysis, Improvement Strategies Page 27 (Bottom) Summary/Analysis, Use of Data to Improve Page 27 (Top) Page 28, 29 Problems/Deficiencies, Specific Activities Page 30 CONCLUSION The results of a PEP are never final. It is a working document An effective PEP is regularly reviewed by key personnel and used in evaluating the effectiveness of the program. STUDENT LEARNING OUTCOMES DEFINED EMPLOYERS SATISFIED GRADUATES SATISFIED GRADUATES PLACED IN FIELD STUDENTS ADMITTED STUDENT AS THE MAIN ACTOR STUDENTS SATISFIED, ACADEMICALLY SUCCESSFUL (RETENTION) STUDENTS GRADUATE