PPTX file

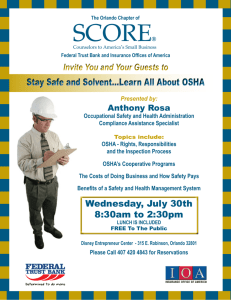
