Multimodal Emotion Recognition

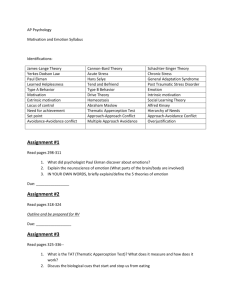

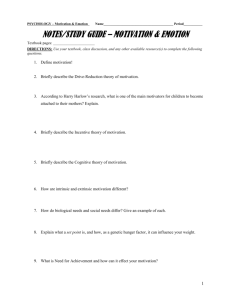

multimodal emotion recognition and expressivity analysis
ICME 2005 Special Session, July 7, 2005
Stefanos Kollias, Kostas Karpouzis
Image, Video and Multimedia Systems Lab, National Technical University of Athens

expressivity and emotion recognition
• affective computing
  – the capability of machines to recognize, express, model, communicate and respond to emotional information
• computers need the ability to recognize human emotion
  – everyday HCI is emotional: three-quarters of computer users admit to swearing at computers
  – monitoring user input and system reactions helps pinpoint problems and provide natural interfaces

the targeted interaction framework
• Generating intelligent interfaces with affective, learning, reasoning and adaptive capabilities.
• Multidisciplinary expertise is the basic means for novel interfaces, including perception and emotion recognition, semantic analysis, cognition, modelling and expression generation, and the production of multimodal avatars capable of adapting to the goals and context of interaction.
• Humans function through four primary modes of being, i.e., affect, motivation, cognition and behavior, which relate to feeling, wanting, thinking and acting, respectively.
• Affect is particularly difficult, as it requires understanding and modelling the causes and consequences of emotions; modelling the consequences, especially as realized in behavior, is a daunting task.

everyday emotional states
• dramatic extremes (terror, misery, elation) are fascinating, but marginal for HCI
• the target of an affect-aware system
  – register everyday states with an emotional component: excitement, boredom, irritation, enthusiasm, stress, satisfaction, amusement
  – achieve sensitivity to everyday emotional states
• example system response: “I think you might be getting just a *wee* bit bored – maybe a coffee?”

affective computing applications
• detect specific incidents/situations that need human intervention
  – e.g. anger detection in a call center
• naturalistic interfaces
  – the keyboard/mouse/pointer paradigm can be difficult for the elderly, people with disabilities or children
  – speech and gesture interfaces can be useful

the EU perspective
• Until 2002, related research was dominated by mid-scale projects
  – ERMIS: multimodal emotion recognition (facial expressions, linguistic and prosody analysis)
  – NECA: networked affective ECAs
  – SAFIRA: affective input interfaces
  – NICE: Natural Interactive Communication for Edutainment
  – MEGA: Multisensory Expressive Gesture Applications
  – INTERFACE: Multimodal Analysis/Synthesis System for Human Interaction to Virtual and Augmented Environments

the EU perspective (continued)
• FP6 (2002-2006) issued two calls for multimodal interfaces
  – Call 1 (April 2003) and Call 5 (September 2005), covering multimodal and multilingual areas
  – Integrated Projects: AMI (Augmented Multi-party Interaction) and CHIL (Computers In the Human Interaction Loop)
  – Networks of Excellence: HUMAINE and SIMILAR
  – Other calls covered “Leisure and entertainment”, “eInclusion”, “Cognitive systems” and “Presence and Interaction”

the HUMAINE Network of Excellence
• FP6 Call 1 Network of Excellence: Research on Emotions and Human-Machine Interaction
• start: 1st January 2004, duration: 48 months
• IST thematic priority: Multimodal Interfaces
  – emotions in human-machine interaction
  – creation of a new, interdisciplinary research community
  – advancing the state of the art in a principled way

the HUMAINE Network of Excellence (continued)
• 33 partner groups from 14 countries
• coordinated by Queen’s University of Belfast
• goals of HUMAINE:
  – integrate existing expertise in psychology, computer engineering, cognition, interaction and usability
  – promote shared insight
• http://emotion-research.net

moving forward
• future EU orientations include (extracted from the Call 1 evaluation, 2004):
  – adaptable and reconfigurable interfaces
  – collaborative technologies and interfaces in the arts
  – less explored modalities, e.g. haptics, bio-sensing
  – affective computing, including character and facial expression recognition and animation
  – more product innovation and industrial impact
• FP7 direction: Simulation, Visualization, Interaction, Mixed Reality
  – blending semantic/knowledge and interface technologies

the special session
• segment-based approach to the recognition of emotions in speech
  – M. Shami, M. Kamel, University of Waterloo
• comparing feature sets for acted and spontaneous speech in view of automatic emotion recognition
  – T. Vogt, E. André, University of Augsburg
• annotation and detection of blended emotions in real human-human dialogs recorded in a call center
  – L. Vidrascu, L. Devillers, LIMSI-CNRS, France
• a real-time lip sync system using a genetic algorithm for automatic neural network configuration
  – G. Zoric, I. Pandzic, University of Zagreb
• visual/acoustic emotion recognition
  – Cheng-Yao Chen, Yue-Kai Huang, Perry Cook, Princeton University
• an intelligent system for facial emotion recognition
  – R. Cowie, E. Douglas-Cowie, Queen’s University of Belfast; J. Taylor, King's College; S. Ioannou, M. Wallace, IVML/NTUA

the big picture
• feature extraction from multiple modalities
  – prosody, words, face, gestures, biosignals…
• unimodal recognition
• multimodal recognition
• using detected features to cater for affective interaction

audiovisual emotion recognition
Figure: User Interface; speech → Linguistic and Paralinguistic Analysis Subsystems; video → Facial Analysis Subsystem; Linguistic, Phonetic and Facial Parameters → Emotion Recognition Subsystem → Emotional State
• the core system combines modules dealing with
  – visual signs
  – the linguistic content of speech (what you say)
  – the paralinguistic content of speech (how you say it)
• and performs recognition based on all of these signs

facial analysis module
• face detection, i.e. finding a face without prior information about its location
• using prior knowledge about where to look
  – face tracking
  – extraction of key regions and points in the face
  – monitoring of movements over time (as features for the user’s expressions/emotions)
• provide a confidence level for the validity of each detected feature

facial analysis module (continued)
• face detection, obtained through SVM classification
• facial feature extraction, by robust estimation of the primary facial features, i.e., eyes, mouth, eyebrows and nose
• fusion of different extraction techniques, with confidence level estimation
• MPEG-4 FP and FAP feature extraction to feed the expression and emotion recognition task
• 3-D modeling for improved accuracy in FP and FAP estimation, at an increased computational load, when the facial user model is known

facial analysis module (continued)
Figure: the extracted mask for the eyes; detected feature points in the masks

FAP estimation
• there is no clear quantitative definition of FAPs
• FAPs can be modeled through the movement of FDP feature points, using the distance s(x,y) between feature points x and y, e.g. for close_t_r_eyelid (F20) - close_b_r_eyelid (F22):

$$D_{13} = s(3.2,\,3.4), \qquad f_{13} = D_{13} - D_{13,\mathrm{NEUTRAL}}$$

  where D13,NEUTRAL is the same distance measured on the neutral face

face detection
Figure: quick rejection (variance, skin color) → preprocessing → subspace projection → SVM classifier → face / no face

face detection (continued)
Figure: detected face; estimation of the active contour of the face; extraction of the facial area

facial feature extraction
• extraction of eyes and mouth: key regions within the face
• extraction of MPEG-4 Facial Points (FPs): key points in the eye and mouth regions

other visual features
• visemes, eye gaze, head pose
  – movement patterns, temporal correlations
• hand gestures, body movements
  – deictic/conversational gestures
  – “body language”
• measurable parameters to render expressivity on affective ECAs
  – spatial extent, repetitiveness, volume, etc.

video analysis using 3D
• Step 1: Scan or approximate a 3D model (in this case estimated from video data only, using a face space approach)
• Step 2: Represent the 3D model using a predefined template geometry; the same template is used for expressions. The template has higher density around the eyes and mouth and lower density around flatter areas such as the cheeks and forehead.
• Step 3: Construct a database of facial expressions by recording various actors. The statistics derived from these performances are stored as a “Dynamic Face Space”.
• Step 4: Apply the expressions to the actor in the video data
• Step 5: Matching: rotate the head, apply various expressions and match the current state with the 2D video frame (a global minimization process)

video analysis using 3D (continued)
• the global matching/minimization process is complex
• it is sensitive to
  – illumination, which may vary across the sequence
  – shading
  – shadowing effects on the face
  – color changes or color differences
  – variability in expressions: some expressions cannot be generated using the statistics of the a priori recorded sequences
• it is time-consuming (several minutes per frame)

video analysis using 3D (figures)
Figures: local template matching; pose estimation; 3D models; adding expressions

auditory module
• linguistic analysis aims to extract the words that the speaker produces
• paralinguistic analysis aims to extract significant variations in the way words are produced, mainly in pitch, loudness, timing and ‘voice quality’
• both are designed to cope with the less-than-perfect signals that are likely to occur in real use
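Paralinguistic analysis starts from frame-level measurements such as pitch and loudness. The following is a minimal sketch of that first step, assuming mono samples scaled to [-1, 1] at a known sample rate: loudness is approximated by RMS energy in dB, and pitch by the strongest normalized autocorrelation peak within an assumed 75 to 400 Hz range. The function names, frame sizes and voicing threshold are hypothetical; this is a generic textbook technique, not the ASSESS system or the module described in these slides.

```python
import math
from typing import List, Optional, Tuple


def split_frames(signal: List[float], size: int, hop: int) -> List[List[float]]:
    """Cut the signal into overlapping frames; a trailing partial frame is dropped."""
    return [signal[i:i + size] for i in range(0, len(signal) - size + 1, hop)]


def loudness_db(frame: List[float], floor: float = 1e-10) -> float:
    """Loudness proxy: RMS energy in dB relative to a full-scale amplitude of 1.0."""
    rms = math.sqrt(sum(x * x for x in frame) / len(frame))
    return 20.0 * math.log10(max(rms, floor))


def pitch_hz(frame: List[float], sample_rate: int, fmin: float = 75.0,
             fmax: float = 400.0, voicing: float = 0.3) -> Optional[float]:
    """Pitch estimate from the strongest normalized autocorrelation peak.

    Returns None when the frame is silent or no lag in [fmin, fmax] exceeds the
    (hypothetical) voicing threshold, i.e. the frame is treated as unvoiced."""
    energy = sum(x * x for x in frame)
    if energy == 0.0:
        return None
    min_lag = max(1, int(sample_rate / fmax))
    max_lag = min(int(sample_rate / fmin), len(frame) - 1)
    best_lag, best_r = 0, voicing
    for lag in range(min_lag, max_lag + 1):
        # autocorrelation at this lag, normalized by the frame energy
        r = sum(frame[i] * frame[i + lag] for i in range(len(frame) - lag)) / energy
        if r > best_r:
            best_lag, best_r = lag, r
    return sample_rate / best_lag if best_lag else None


def prosodic_contour(signal: List[float], sample_rate: int, frame_ms: float = 40.0,
                     hop_ms: float = 10.0) -> List[Tuple[float, Optional[float]]]:
    """Per-frame (loudness in dB, pitch in Hz or None) pairs."""
    size = int(sample_rate * frame_ms / 1000)
    hop = int(sample_rate * hop_ms / 1000)
    return [(loudness_db(f), pitch_hz(f, sample_rate))
            for f in split_frames(signal, size, hop)]


if __name__ == "__main__":
    sr = 16000
    # 100 ms synthetic 200 Hz tone at half of full scale
    tone = [0.5 * math.sin(2 * math.pi * 200 * n / sr) for n in range(sr // 10)]
    for db, f0 in prosodic_contour(tone, sr):
        print(f"{db:6.1f} dB  {f0} Hz")
```

Timing features such as pause lengths could be derived from the same contour, for example by counting consecutive low-loudness, unvoiced frames; handling the noisy real-use signals mentioned above would need more robust estimators than this sketch.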
linguistic analysis
Figure: (a) the Linguistic Analysis Subsystem: speech signal → Signal Enhancement/Adaptation Module (short-term spectral domain, singular value decomposition) → enhanced speech signal → Speech Recognition Module → text → Text Post-Processing Module → linguistic parameters; (b) the Speech Recognition Module: Parameter Extraction Module, Acoustic Modeling, Language Modeling, Dictionary and Search Engine, producing text

paralinguistic analysis
• ASSESS, developed by QUB, describes speech at multiple levels
  – intensity and spectrum; edits, pauses, frication; raw pitch estimates and a smooth fitted curve; rises and falls in intensity and pitch

integrating the evidence
• level 1:
  – facial emotion
  – phonetic emotion
  – linguistic emotion
• level 2:
  – “total” emotional state (the result of the “level 1 emotions”)
• modeling technique: fuzzy set theory (research by Massaro suggests this models the way humans integrate signs)
Figure: Facial, Phonetic and Linguistic Emotion State Detection → Emotional State Detection

integrating the evidence (continued)
• mechanisms linking attention and emotion in the brain form a useful model
Figure: Visual Input (Thalamus/Superior Colliculus), Salience, Valence (Amygdala), NBM (ACh source), IMC (heteromodal cortex), Goals (SFG), Goals (Inhibition, ACG)

biosignal analysis
• different emotional expressions produce different changes in autonomic activity:
  – anger: increased heart rate and skin temperature
  – fear: increased heart rate, decreased skin temperature
  – happiness: decreased heart rate, no change in skin temperature
• easily integrated with external channels (face and speech)
Source: presentation by J. Kim at the HUMAINE WP4 workshop, September 2004

biosignal analysis (continued)
Figure: acoustics and noise; EEG (brain waves); respiration (breathing rate); temperature; BVP (blood volume pulse); EMG (muscle tension); GSR (skin conductivity); EKG (heart rate)

biosignal analysis (continued)
• skin sensing requires physical contact
• accuracy and robustness to motion artifacts need to improve
  – the signals are vulnerable to distortion
• most research measures artificially elicited emotions in a lab environment and from a single subject
• different individuals show emotion through different responses in the autonomic channels, which makes multi-subject recognition hard
• physiological emotion recognition is rarely studied; the literature offers ideas rather than well-defined solutions

multimodal emotion recognition
• recognition models: application dependency
  – discrete / dimensional / appraisal theory models
• theoretical models of multimodal integration
  – direct / separate / dominant / motor integration
• modality synchronization
  – visemes / EMGs and FAPs / SC-RSP and speech
• temporal evolution and modality sequentiality
  – multimodal recognition techniques
• classifiers + context + goals + cognition/attention + modality significance in interaction
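The evidence-integration scheme combines facial, phonetic and linguistic (level 1) results into a "total" (level 2) emotional state, and modality significance is listed among the inputs to multimodal recognition. The sketch below shows one simple form such decision-level fusion could take, assuming each unimodal recognizer outputs a membership degree in [0, 1] per emotion label and that a per-modality weight is given. The weighted mean is a stand-in fuzzy aggregation operator chosen for illustration, not the fuzzy model used in the system described here; the function names, labels and weights are hypothetical.

```python
from typing import Dict, Tuple

Memberships = Dict[str, float]  # emotion label -> membership degree in [0, 1]


def fuse_level2(level1: Dict[str, Memberships],
                weights: Dict[str, float]) -> Memberships:
    """Combine per-modality ("level 1") memberships into one "total" state.

    Uses a weighted arithmetic mean, one simple fuzzy aggregation operator.
    A label missing from a modality counts as membership 0 in that modality;
    every modality in level1 must have an entry in weights."""
    total_weight = sum(weights[m] for m in level1)
    if total_weight <= 0.0:
        raise ValueError("at least one modality needs a positive weight")
    labels = sorted({label for ms in level1.values() for label in ms})
    return {
        label: sum(weights[m] * ms.get(label, 0.0) for m, ms in level1.items()) / total_weight
        for label in labels
    }


def decide(fused: Memberships) -> Tuple[str, float]:
    """Pick the emotion label with the highest fused membership."""
    label = max(fused, key=fused.get)
    return label, fused[label]


if __name__ == "__main__":
    # Hypothetical level-1 outputs and modality weights, for illustration only
    level1 = {
        "facial": {"joy": 0.7, "anger": 0.1, "neutral": 0.2},
        "phonetic": {"joy": 0.4, "anger": 0.3, "neutral": 0.3},
        "linguistic": {"joy": 0.5, "neutral": 0.5},
    }
    weights = {"facial": 0.5, "phonetic": 0.3, "linguistic": 0.2}
    print(decide(fuse_level2(level1, weights)))
```

Swapping the weighted mean for another fuzzy operator, such as a minimum that requires agreement across modalities, changes how strongly one confident modality can drive the result.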