Scalable On-line Automated Performance Diagnosis


The Distributed Performance Consultant: Automated Performance Diagnosis on 1000s of Processors

Philip C. Roth

pcroth@cs.wisc.edu

Computer Sciences Department

University of Wisconsin-Madison

Madison, WI 53706

USA

April 14, 2004

High Performance Computing Today

• Large parallel computing resources

– Tightly coupled systems

• Earth Simulator (Japan, 5120 CPUs)

• ASCI Purple (LLNL, 12K CPUs planned)

• BlueGene/L (LLNL, 128K CPUs planned)

– Clusters

• Lightning (LANL, 2816 CPUs)

• Aspen Systems (Forecast Systems Lab, 1536 CPUs)

– Grid

• Large applications

– ASCI Q job sizes (January 1 to January 24, 2004)

• 257–512 CPUs: 19.7%

• 513–1024 CPUs: 24.6%

• 1025–2048 CPUs: 16.4%

© Philip C. Roth 2004


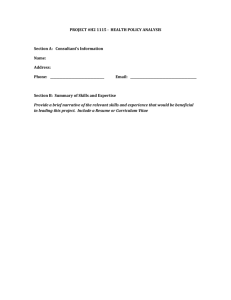

Distributed Performance Consultant

Barriers to Large-Scale Performance Diagnosis

• Managing performance data volume

• Communicating efficiently between distributed tool components

• Making scalable presentation of data and analysis results

[Figure: Tool Front End; Tool Daemons d0, d1, d2, d3, …, dP-4, dP-3, dP-2, dP-1; App Processes a0, a1, a2, a3, …, aP-4, aP-3, aP-2, aP-1]


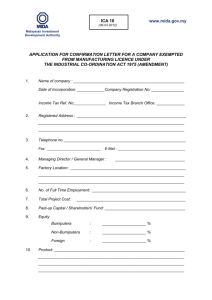


Overcoming Scalability Barriers: Our Approach

1. MRNet: multicast/reduction infrastructure for building scalable tools

2. SStart: strategy for improving tool start-up latency

3. Distributed Performance Consultant: strategy for efficiently finding performance bottlenecks in large-scale applications

4. Distributed Data Manager: for efficient, distributed performance data management

5. Sub-Graph Folding Algorithm: algorithm for effectively presenting bottleneck search results for large-scale applications


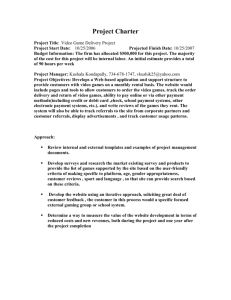


The Performance Consultant

• Automated performance diagnosis

• Search for application performance problems

– Start with global, general experiments (e.g., test CPUbound across all processes)

– Collect performance data using dynamic instrumentation

– Make decisions about truth of each experiment

– Refine search: create more specific experiments based on “true” experiments

– Repeat

[Figure: example bottleneck search. Node labels: CPUbound; main, Do_row, Do_col; c001.cs.wisc.edu, c002.cs.wisc.edu, …, c128.cs.wisc.edu; main, …]
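The search loop on this slide can be sketched as a recursive refinement. This is only a minimal illustration, not Paradyn's implementation: the `SAMPLES` and `REFINEMENTS` tables, the 0.5 threshold, and the `search` function are hypothetical stand-ins for data that would come from dynamic instrumentation.

```python
# Hypothetical measurements: fraction of time spent computing, per focus.
SAMPLES = {
    "all processes": 0.9,
    "main": 0.9,
    "Do_row": 0.7,
    "Do_col": 0.1,
    "c001.cs.wisc.edu": 0.8,
    "c002.cs.wisc.edu": 0.2,
}

# Hypothetical refinement map: which more specific foci follow from a focus.
REFINEMENTS = {
    "all processes": ["main", "c001.cs.wisc.edu", "c002.cs.wisc.edu"],
    "main": ["Do_row", "Do_col"],
}


def search(hypothesis, focus, threshold=0.5):
    """Return the (hypothesis, focus) experiments that tested true."""
    # Stand-in for collecting data with dynamic instrumentation.
    if SAMPLES.get(focus, 0.0) < threshold:
        return []  # experiment is false: do not refine further
    found = [(hypothesis, focus)]
    # Refine only below experiments that tested true, then repeat.
    for child in REFINEMENTS.get(focus, []):
        found.extend(search(hypothesis, child, threshold))
    return found


result = search("CPUbound", "all processes")
```

Only true experiments are refined, so the subtree below a false experiment is never instrumented.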


The Performance Consultant

• Works well for sequential and small-scale parallel applications…

• …but front-end is a bottleneck when looking for performance problems in large-scale applications

– High data processing load

– Limited network bandwidth


Our Approach

• MRNet to reduce load for processing global performance data (e.g., average CPU utilization across all processes)

• But front-end still processes local performance data (e.g., CPU utilization in process 5247 on host blue26.pacific.llnl.gov)

Examine behavior of a specific process on the host that runs the process
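To illustrate why a reduction network lowers the front-end load for global data, here is a minimal sketch of a tree-based average. It does not use the MRNet API: the nested-list tree and the `reduce_average` function are assumptions made for the example.

```python
def reduce_average(node):
    """Return (sum, count) for the subtree rooted at node.

    A leaf is a number (one process's CPU utilization);
    an internal node is a list of child subtrees.
    """
    if isinstance(node, (int, float)):
        return float(node), 1
    total, count = 0.0, 0
    for child in node:
        child_sum, child_count = reduce_average(child)
        # Each internal node forwards one partial result upward,
        # regardless of how many processes sit below it.
        total += child_sum
        count += child_count
    return total, count


# Hypothetical tree: three internal nodes, five application processes.
tree = [[0.5, 0.75], [0.25, 1.0], [0.5]]
total, count = reduce_average(tree)
average = total / count
```

In this sketch the root combines three partial results instead of five raw samples. The saving grows with the number of processes per subtree.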


Our Approach: Example

[Figure: example search for CPUbound. Host labels: cham.cs.wisc.edu; c001.cs.wisc.edu, c002.cs.wisc.edu, …, c128.cs.wisc.edu. Process labels: myapp{367}, myapp{4287}, myapp{27549}. Function labels: main, Do_row, Do_col, Do_mult, …]

The Distributed Performance Consultant (DPC)

• Distributed performance problem search strategy

• Distributed performance data management

• DPC-aware Dynamic Instrumentation management

[Figure: DPC architecture. Front-End: Data Manager, Performance Consultant. MRNet. Daemon: Local Instrumentation Manager, Local Data Manager, Local Performance Consultant, Data Collector. Labels: Control, Data.]


DPC: Search Strategy

• Local Performance Consultant agents (LPCs) in each daemon, Global PC (GPC) in front-end

• GPC controls overall search, manages global experiments

• LPCs control portion of search specific to their local host


DPC: Search Strategy

• Like traditional PC, DPC begins search with global, general experiments (e.g., CPUbound across all processes)

• When search is refined to specific host, GPC delegates that portion of the search to LPC on that host

• LPC examines local behavior of all processes on the local host, returns results to GPC
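The delegation described above can be sketched with two small classes. This is an illustrative model only: `GlobalPC`, `LocalPC`, their methods, and the sample data are hypothetical. A direct method call stands in for the message that would travel between front-end and daemon.

```python
class LocalPC:
    """Hypothetical LPC: searches only the processes on its own host."""

    def __init__(self, host, utilization):
        self.host = host
        self.utilization = utilization  # {process: {function: fraction}}

    def search(self, hypothesis, threshold):
        results = []
        for process, functions in sorted(self.utilization.items()):
            for function, value in sorted(functions.items()):
                if value >= threshold:
                    results.append((hypothesis, self.host, process, function))
        return results  # returned to the GPC


class GlobalPC:
    """Hypothetical GPC: runs the global experiment, then delegates."""

    def __init__(self, lpcs):
        self.lpcs = {lpc.host: lpc for lpc in lpcs}

    def search(self, hypothesis, global_value, threshold):
        if global_value < threshold:
            return []  # global experiment is false: nothing to refine
        results = []
        for host in sorted(self.lpcs):
            # Host-specific refinement is handed to that host's LPC.
            results.extend(self.lpcs[host].search(hypothesis, threshold))
        return results


gpc = GlobalPC([
    LocalPC("c001.cs.wisc.edu", {"myapp{367}": {"Do_row": 0.7, "Do_col": 0.1}}),
    LocalPC("c002.cs.wisc.edu", {"myapp{4287}": {"Do_mult": 0.6}}),
])
found = gpc.search("CPUbound", global_value=0.8, threshold=0.5)
```

The front-end sees only the conclusions each LPC returns, not the per-process samples behind them.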


DPC: Performance Data Management

• Local Data Managers (LDMs) in each daemon, Global Data Manager in front-end

• Publish/subscribe model for data flow

• MRNet for global data aggregation

• Possible caching at LDMs (no caching in initial implementation)
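A publish/subscribe data flow of the kind listed above might look like the following minimal sketch. The `LocalDataManager` class and its method names are hypothetical, and, like the initial implementation described on the slide, it does no caching.

```python
from collections import defaultdict


class LocalDataManager:
    """Hypothetical LDM: forwards each sample to current subscribers."""

    def __init__(self):
        self._subscribers = defaultdict(list)  # metric name -> callbacks

    def subscribe(self, metric, callback):
        self._subscribers[metric].append(callback)

    def publish(self, metric, value):
        """Deliver one sample; return how many subscribers received it."""
        callbacks = self._subscribers.get(metric, [])
        for callback in callbacks:
            callback(metric, value)
        return len(callbacks)  # samples with no subscriber are dropped


ldm = LocalDataManager()
seen = []
ldm.subscribe("cpu_utilization", lambda metric, value: seen.append((metric, value)))
delivered = ldm.publish("cpu_utilization", 0.7)
dropped = ldm.publish("io_wait", 0.1)
```

A sample travels only to the components that asked for it, so a local consumer can read local data without involving the front-end.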


DPC: Instrumentation Management

• Local Instrumentation Manager (LIM) services requests from GPC and LPC without starving either

• Instrumentation management tailored to distributed nature of DPC

– New model for instrumentation cost

– New policy for scheduling instrumentation


DPC: Instrumentation Management

• Mismatch between centralized control of instrumentation cost and distributed nature of DPC

• DPC’s instrumentation management

– New model for cost of Dynamic Instrumentation

– New policy for scheduling Dynamic Instrumentation requests


DPC: Instrumentation Cost Model

• User-specified cost threshold, broadcast to each Local Instrumentation Manager (LIM)

• Each LIM restricts active instrumentation to cost threshold

• GPC throttles instrumentation requests when it does not receive timely responses to earlier requests (avoids need to gather instrumentation cost from all LIMs at the front-end)
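The two halves of this cost model can be sketched as follows. The slide does not say how the GPC throttles, so the halve-on-late, grow-on-timely window used here is purely an assumption. The class names, method names, and cost units are likewise hypothetical.

```python
class LocalInstrumentationManager:
    """Hypothetical LIM: keeps active instrumentation under the threshold."""

    def __init__(self, cost_threshold):
        self.cost_threshold = cost_threshold  # broadcast from the front-end
        self.active_cost = 0.0
        self.pending = []

    def request(self, name, cost):
        if self.active_cost + cost <= self.cost_threshold:
            self.active_cost += cost
            return True  # instrumentation inserted now
        self.pending.append((name, cost))
        return False  # deferred until cost is freed


class RequestThrottle:
    """Hypothetical GPC-side throttle driven only by response times."""

    def __init__(self, timeout, window=8):
        self.timeout = timeout
        self.window = window  # max outstanding global requests

    def record_response(self, elapsed):
        if elapsed > self.timeout:
            self.window = max(1, self.window // 2)  # late: back off
        else:
            self.window += 1  # timely: allow more requests
        return self.window


lim = LocalInstrumentationManager(cost_threshold=1.0)
first = lim.request("CPUbound:main", 0.6)
second = lim.request("CPUbound:Do_row", 0.6)
throttle = RequestThrottle(timeout=2.0, window=8)
```

The throttle never asks any LIM for its current cost. Slow responses are the only signal it uses, which is what removes the need for a global cost gather.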


DPC: Instrumentation Scheduling Policy

• LIM needs to schedule instrumentation requests from both LPC and GPC

– GPC requests must be satisfied by LIM in all daemons to be useful

– LPC requests are restricted to a single daemon

• Prototype LIM’s instrumentation scheduling policy chosen to ensure both local and global searches make progress

– Distinguishes instrumentation cost by instrumentation type (local vs. global)

– If both types are present, guarantee a percentage of total instrumentation cost threshold for each type (percentage is user-configurable)
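One way to read the guaranteed-percentage policy is as an admission check that reserves the other type's unfilled share. The sketch below is an interpretation, not the prototype's code: the `admit` function, its parameters, and the integer cost units are all hypothetical.

```python
def admit(request_type, cost, active, threshold, guaranteed_percent, other_waiting):
    """Decide whether a LIM may insert a request of the given type now.

    active: current cost per type, e.g. {"local": 0, "global": 60}
    guaranteed_percent: share of threshold guaranteed to each type
    other_waiting: True if requests of the other type are also present
    """
    total = active["local"] + active["global"]
    if total + cost > threshold:
        return False  # would exceed the overall cost threshold
    if not other_waiting:
        return True  # only one type present: no reservation needed
    other = "global" if request_type == "local" else "local"
    # Budget still owed to the other type under its guarantee.
    reserved = max(0.0, guaranteed_percent[other] * threshold / 100 - active[other])
    return total + cost + reserved <= threshold


active = {"local": 0, "global": 60}
shares = {"local": 30, "global": 30}
global_blocked = admit("global", 20, active, 100, shares, other_waiting=True)
local_allowed = admit("local", 20, active, 100, shares, other_waiting=True)
global_alone = admit("global", 20, active, 100, shares, other_waiting=False)
```

In this example the global request is refused while local requests are waiting, because admitting it would eat into the 30% reserved for local search. The same request is accepted when no local requests are present.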


DPC: Status

• Implementation well underway, but prototype implementation not yet complete

– Daemons have been multithreaded

– Local Data Managers in place

– Local Performance Consultant agents in progress

• Planning experiments to evaluate overall scalability with various instrumentation cost models and scheduling policies


Summary

The DPC is a major part of our approach for overcoming barriers that limit the scalability of automated performance diagnosis tools.

Using a distributed search strategy, distributed performance data manager, and instrumentation management techniques tailored to its distributed nature, the DPC will greatly increase the scalability of Paradyn’s automated performance diagnosis functionality.

http://www.paradyn.org

paradyn@cs.wisc.edu
