wright_29_Jan_2010_S..


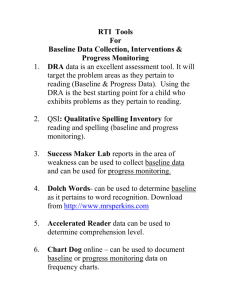

Response to Intervention
RTI: Assessment & Progress-Monitoring at the Middle & High School Level
Jim Wright
www.interventioncentral.org

Data Collection: Defining Terms
• Evaluation. “the process of using information collected through assessment to make decisions or reach conclusions” (Hosp, 2008, p. 364). Example: A student can be evaluated for problems in ‘fluency with text’ by collecting information from various sources (e.g., CBM ORF, teacher interview, direct observations of the student reading across settings), comparing those results to peer norms or curriculum expectations, and deciding whether the student’s current performance is acceptable.
• Assessment. “the process of collecting information about the characteristics of persons or objects by measuring them” (Hosp, 2008, p. 364). Example: The construct ‘fluency with text’ can be assessed using various measurements, including CBM ORF, teacher interview, and direct observations of the student reading in different settings and in different material.
• Measurement. “the process of applying numbers to the characteristics of objects or people in a systematic way” (Hosp, 2008, p. 364). Example: Curriculum-Based Measurement Oral Reading Fluency (CBM ORF) is one method to measure the construct ‘fluency with text’.

Use Time & Resources Efficiently By Collecting Information Only on ‘Things That Are Alterable’
“…Time should be spent thinking about things that the intervention team can influence through instruction, consultation, related services, or adjustments to the student’s program. These are things that are alterable. … Beware of statements about cognitive processes that shift the focus from the curriculum and may even encourage questionable educational practice.
They can also promote writing off a student because of the rationale that the student’s insufficient performance is due to a limited and fixed potential.” (p. 359)
Source: Howell, K. W., Hosp, J. L., & Kurns, S. (2008). Best practices in curriculum-based evaluation. In A. Thomas & J. Grimes (Eds.), Best practices in school psychology V (pp. 349-362). Bethesda, MD: National Association of School Psychologists.

RTI: Assessment & Progress-Monitoring
To measure student ‘response to instruction/intervention’ effectively, the RTI model measures students’ academic performance and progress on schedules matched to each student’s risk profile and intervention Tier membership.
• Benchmarking/Universal Screening/Local Norming. All children in a grade level are assessed at least 3 times per year on a common collection of academic assessments.
• Strategic Monitoring. Students placed in Tier 2 (supplemental) reading groups are assessed 1-2 times per month to gauge their progress with this intervention.
• Intensive Monitoring. Students who participate in an intensive, individualized Tier 3 intervention are assessed at least once per week.
Source: Burns, M. K., & Gibbons, K. A. (2008). Implementing response-to-intervention in elementary and secondary schools: Procedures to assure scientific-based practices. New York: Routledge.

Screening: Defining Terms
• Screening: The same data are collected, or the same assessment is given, to all students in a grade or other group.
• Local Norms: The results of a screening are analyzed to develop performance norms based on the local group that was assessed.
• Benchmarking: Students are screened, and the results are compared to external ‘research’ norms or benchmarks of expected performance.
Source: Hosp, J. L. (2008). Best practices in aligning academic assessment with instruction. In A. Thomas & J. Grimes (Eds.), Best practices in school psychology V (pp. 363-376). Bethesda, MD: National Association of School Psychologists.

Screening: Finding Students That Need Academic or Behavioral Assistance
When schools screen students for RTI services, some error is unavoidable, because no data are perfect. The screening cut-offs that a school sets will lead to two possible types of error in recruiting students for RTI services:
• Type 1 Error [False Positives]: The screening cut-off is set higher. The screening identifies all students who need RTI intervention services but also includes a few students who do not need intervention.
• Type 2 Error [False Negatives]: The screening cut-off is set lower. The screening excludes all students who do not need RTI intervention services but also excludes some students who do need intervention.
Source: Hosp, J. L. (2008). Best practices in aligning academic assessment with instruction. In A. Thomas & J. Grimes (Eds.), Best practices in school psychology V (pp. 363-376). Bethesda, MD: National Association of School Psychologists.

Local Norms: Screening All Students (Stewart & Silberglit, 2008)
Local norm data in basic academic skills are collected at least 3 times per year (fall, winter, spring).
• Schools should consider using ‘curriculum-linked’ measures such as Curriculum-Based Measurement that will show generalized student growth in response to learning.
• If possible, schools should consider avoiding ‘curriculum-locked’ measures that are tied to a single commercial instructional program.
Source: Stewart, L. H., & Silberglit, B. (2008). Best practices in developing academic local norms. In A. Thomas & J. Grimes (Eds.), Best practices in school psychology V (pp. 225-242). Bethesda, MD: National Association of School Psychologists.
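To make the two error types concrete, here is a minimal Python sketch. It assumes that a school flags every student whose screening score falls below the cut-off. The scores and the "truly needs intervention" labels are invented for illustration, and a school would only learn those labels after later assessment. The function name `screening_errors` is hypothetical.

```python
from typing import List, Tuple

# (screening score, truly needs intervention): both values are hypothetical.
Student = Tuple[int, bool]

def screening_errors(students: List[Student], cutoff: int) -> Tuple[int, int]:
    """Flag every student scoring below `cutoff`; return (false_positives, false_negatives)."""
    # False positive (Type 1): flagged, but does not need intervention.
    false_pos = sum(1 for score, needs in students if score < cutoff and not needs)
    # False negative (Type 2): not flagged, but does need intervention.
    false_neg = sum(1 for score, needs in students if score >= cutoff and needs)
    return false_pos, false_neg

# Hypothetical CBM ORF scores (words read correctly per minute) with invented outcome labels.
students = [(38, True), (45, True), (52, True), (55, False), (61, True),
            (64, False), (70, False), (82, False), (90, False), (101, False)]

for cutoff in (50, 60, 70):
    fp, fn = screening_errors(students, cutoff)
    print(f"cut-off {cutoff}: {fp} false positive(s), {fn} false negative(s)")
```

With these made-up numbers, the cut-off of 70 catches every student who needs help but also flags two students who do not (Type 1). The cut-off of 50 flags no one unnecessarily but misses two students who need help (Type 2).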
Local Norms: Using a Wide Variety of Data (Stewart & Silberglit, 2008)
Local norms can be compiled using:
• Fluency measures such as Curriculum-Based Measurement.
• Existing data, such as office disciplinary referrals.
• Computer-delivered assessments, e.g., Measures of Academic Progress (MAP) from www.nwea.org.
Source: Stewart, L. H., & Silberglit, B. (2008). Best practices in developing academic local norms. In A. Thomas & J. Grimes (Eds.), Best practices in school psychology V (pp. 225-242). Bethesda, MD: National Association of School Psychologists.

Academic Screening Tools: Choices…
Assessments used for academic screening can assess:
• Basic academic skills (e.g., CBM Oral Reading Fluency, Math Computation).
• Advanced concepts (e.g., math assessments at www.easycbm.com that are tied to Math Focal Points from the NCTM).
• Student performance according to curriculum expectations (e.g., Measures of Academic Progress (MAP) from NWEA.org).

Curriculum-Based Measurement: Advantages as a Set of Tools to Monitor RTI/Academic Cases
• Aligns with curriculum goals and materials.
• Is reliable and valid (has ‘technical adequacy’).
• Is criterion-referenced: sets specific performance levels for specific tasks.
• Uses standard procedures to prepare materials, administer, and score.
• Samples student performance to give objective, observable, ‘low-inference’ information about student performance.
• Has decision rules to help educators interpret student data and make appropriate instructional decisions.
• Is efficient to implement in schools (e.g., training can be done quickly; the measures are brief and feasible for classrooms).
• Provides data that can be converted into visual displays for ease of communication.
Source: Hosp, M. K., Hosp, J. L., & Howell, K. W. (2007). The ABCs of CBM. New York: Guilford.
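CBM scores collected at each benchmark period are the raw material for the local norms described earlier. A simple way to summarize them is to compute a local median and to express any one student's score as a percentile rank within the local group. The Python sketch below assumes invented fall and winter scores for one grade. It also uses one common definition of percentile rank (the percent of the group scoring strictly below the student). Other definitions exist, and the sources above do not prescribe a formula.

```python
from bisect import bisect_left
from statistics import median
from typing import Dict, List

def percentile_rank(group_scores: List[float], score: float) -> float:
    """Percent of the local group scoring strictly below `score` (one common definition)."""
    ordered = sorted(group_scores)
    return 100.0 * bisect_left(ordered, score) / len(ordered)

# Hypothetical scores for one grade at two benchmark periods.
local_scores: Dict[str, List[float]] = {
    "fall":   [42, 55, 61, 67, 70, 74, 78, 83, 88, 95],
    "winter": [50, 60, 66, 72, 75, 80, 84, 90, 94, 101],
}

student_score = 66
for season, scores in local_scores.items():
    print(f"{season}: local median {median(scores)}, "
          f"a score of {student_score} is at percentile rank {percentile_rank(scores, student_score):.0f}")
```

The same raw score of 66 ranks lower in winter than in fall because the local group has grown. This is why local norms are recomputed at each benchmark period rather than reused.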
CBM Measures: Internet Sources
• DIBELS (https://dibels.uoregon.edu/)
• AimsWeb (http://www.aimsweb.com)
• Easy CBM (http://www.easycbm.com)
• iSteep (http://www.isteep.com)
• EdCheckup (http://www.edcheckup.com)
• Intervention Central (http://www.interventioncentral.org)

Measures of Academic Progress (MAP): www.nwea.org

Universal Screening at Secondary Schools: Using Existing Data Proactively to Flag ‘Signs of Disengagement’
“Across interventions…, a key component to promoting school completion is the systematic monitoring of all students for signs of disengagement, such as attendance and behavior problems, failing courses, off track in terms of credits earned toward graduation, problematic or few close relationships with peers and/or teachers, and then following up with those who are at risk.” (p. 1090)
Source: Jimerson, S. R., Reschly, A. L., & Hess, R. S. (2008). Best practices in increasing the likelihood of high school completion. In A. Thomas & J. Grimes (Eds.), Best practices in school psychology V (pp. 1085-1097). Bethesda, MD: National Association of School Psychologists.

Mining Archival Data: What Are the ‘Early Warning Flags’ of Student Drop-Out?
A sample of 13,000 students in Philadelphia was tracked for 8 years. The following early warning indicators, measured in the sixth-grade year, were found to predict student drop-out:
• Failure in English
• Failure in math
• Missing at least 20% of school days
• Receiving an ‘unsatisfactory’ behavior rating from at least one teacher
Source: Balfanz, R., Herzog, L., & MacIver, D. J. (2007). Preventing student disengagement and keeping students on the graduation path in urban middle grades schools: Early identification and effective interventions. Educational Psychologist, 42, 223–235.
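Because all four flags come from data that schools already keep, the check can be automated. The Python sketch below counts the flags in a single student record and then looks up the graduation rate that Balfanz et al. (2007) report for that flag count (the same figures appear in the table that follows). The record fields and the example student are hypothetical. The reported rates describe a research cohort, not a forecast for an individual student.

```python
from dataclasses import dataclass

@dataclass
class StudentRecord:
    # Hypothetical field names for existing (archival) sixth-grade data.
    failed_english: bool
    failed_math: bool
    days_absent: int
    days_enrolled: int
    unsatisfactory_behavior_ratings: int  # number of teachers giving an 'unsatisfactory' rating

def count_warning_flags(r: StudentRecord) -> int:
    """Count the four early warning flags listed by Balfanz et al. (2007)."""
    flags = [
        r.failed_english,
        r.failed_math,
        r.days_absent * 5 >= r.days_enrolled,    # missed at least 20% of days (integer math avoids float rounding)
        r.unsatisfactory_behavior_ratings >= 1,  # at least one teacher rated behavior unsatisfactory
    ]
    return sum(flags)

# Graduation rates by number of flags, as reported by Balfanz et al. (2007).
GRADUATION_RATE = {0: 0.56, 1: 0.36, 2: 0.21, 3: 0.13, 4: 0.07}

student = StudentRecord(failed_english=False, failed_math=True,
                        days_absent=40, days_enrolled=180,
                        unsatisfactory_behavior_ratings=0)
n = count_warning_flags(student)
print(f"{n} flag(s); reported graduation rate for students with {n} flag(s): {GRADUATION_RATE[n]:.0%}")
```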
What Is the Predictive Power of These Early Warning Flags?
Number of ‘Early Warning Flags’ in Student Record: Probability That Student Would Graduate
• None: 56%
• 1 flag: 36%
• 2 flags: 21%
• 3 flags: 13%
• 4 flags: 7%
Source: Balfanz, R., Herzog, L., & MacIver, D. J. (2007). Preventing student disengagement and keeping students on the graduation path in urban middle grades schools: Early identification and effective interventions. Educational Psychologist, 42, 223–235.

Local Norms: Set a Realistic Timeline for ‘Phase-In’ (Stewart & Silberglit, 2008)
“If local norms are not already being collected, it may be helpful to develop a 3-5 year planned rollout of local norm data collection, reporting, and use in line with other professional development and assessment goals for the school. This phased-in process of developing local norms could start with certain grade levels and expand to others.” (p. 229)
Source: Stewart, L. H., & Silberglit, B. (2008). Best practices in developing academic local norms. In A. Thomas & J. Grimes (Eds.), Best practices in school psychology V (pp. 225-242). Bethesda, MD: National Association of School Psychologists.

Team Activity: Draft a Plan to Conduct an Academic Screening in Your School or District
Directions: Develop a draft plan to screen your student population for academic (and perhaps behavioral) concerns:
• Question 1: How will you determine what mix of measures to include in the screening (e.g., basic academic skills, advanced concepts, student performance according to curriculum expectations)?
• Question 2: How might you make use of existing data (e.g., disciplinary office referrals, grades, behavior) in your screening plan?
• Question 3: How frequently would you collect data for each element included in the screening plan?
• Question 4: Who would assist in implementing the screening plan?
Intervention Tip: View Cognitive Strategy Interventions at: http://www.unl.edu/csi/

Instructional Assessment: Understanding the Learning Needs of the Individual Student

Instructional Assessment: Moving Beyond Screening Data
• Screening data are intended to identify rapidly and efficiently which students are struggling.
• However, schools also need the capacity to complete more detailed ‘instructional assessments’ on some students flagged in a screening, in order to map out those students’ relative strengths and weaknesses.
• Action Step: Schools should identify staff members who can conduct instructional assessments and develop the materials needed to carry them out.

Local Norms: Supplement With Additional Academic Testing as Needed (Stewart & Silberglit, 2008)
“At the individual student level, local norm data are just the first step toward determining why a student may be experiencing academic difficulty. Because local norms are collected on brief indicators of core academic skills, other sources of information and additional testing using the local norm measures or other tests are needed to validate the problem and determine why the student is having difficulty. … Percentage correct and rate information provide clues regarding automaticity and accuracy of skills. Error types, error patterns, and qualitative data provide clues about how a student approached the task. Patterns of strengths and weaknesses on subtests of an assessment can provide information about the concepts in which a student or group of students may need greater instructional support, provided these subtests are equated and reliable for these purposes.” (p. 237)
Source: Stewart, L. H., & Silberglit, B. (2008). Best practices in developing academic local norms. In A. Thomas & J. Grimes (Eds.), Best practices in school psychology V (pp. 225-242). Bethesda, MD: National Association of School Psychologists.

Local Norms: Supplement With Additional Academic Testing as Needed (Hosp, 2008)
“Whereas screening measures are more general (i.e., they sample skills), analytic measures should be specific and detailed. A math calculation measure with only one item of a specific skill type (e.g., double-digit addition without carrying) may provide sufficient information for screening decisions (provided it includes other skill types that are relevant), but it will not be useful for analytic decisions. If a decision needs to be reached about a student’s specific skill such as double-digit addition without carrying, there needs to be enough samples of that behavior (i.e., items) in order to make a reliable and accurate decision. How many items are necessary depends on the task and the items themselves, but a good rule of thumb is that if there are fewer than three to five items of a given skill, there probably are not enough.” (pp. 369-370)
Source: Hosp, J. L. (2008). Best practices in aligning academic assessment with instruction. In A. Thomas & J. Grimes (Eds.), Best practices in school psychology V (pp. 363-376). Bethesda, MD: National Association of School Psychologists.

Breaking Down Complex Academic Goals into Simpler Sub-Tasks: Discrete Categorization

Identifying and Measuring Complex Academic Problems at the Middle and High School Level
• Students at the secondary level can present with a range of concerns that interfere with academic success.
• One frequent challenge with these students is the need to break complex, global academic goals down into discrete sub-skills that can be individually measured and tracked over time.
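Hosp's rule of thumb (fewer than three to five items of a given skill is probably not enough for an analytic decision) can be checked mechanically before a probe is used for instructional assessment. The Python sketch below assumes that each item on a probe has been tagged with the skill type it samples. The probe, the skill labels, and the function name are hypothetical. The `minimum` parameter lets a team choose the lenient (3) or strict (5) end of the range.

```python
from collections import Counter
from typing import Dict, List

def skills_with_too_few_items(item_skills: List[str], minimum: int = 3) -> Dict[str, int]:
    """Return each skill type that appears on fewer than `minimum` items, with its item count."""
    counts = Counter(item_skills)
    return {skill: n for skill, n in counts.items() if n < minimum}

# Hypothetical math probe: one entry per item, naming the skill type that item samples.
probe = (["double-digit addition, no carrying"] * 1
         + ["double-digit addition, carrying"] * 4
         + ["double-digit subtraction, no borrowing"] * 2)

print(skills_with_too_few_items(probe))             # lenient end of the 3-5 item rule of thumb
print(skills_with_too_few_items(probe, minimum=5))  # stricter end
```

A probe like this one might be fine for screening, but it could not support an analytic decision about double-digit addition without carrying, because only one item samples that skill.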
Discrete Categorization: A Strategy for Assessing Complex, Multi-Step Student Academic Tasks
Definition of Discrete Categorization: ‘Listing a number of behaviors and checking off whether they were performed’ (Kazdin, 1989, p. 59).
• This approach allows educators to define a larger ‘behavioral’ goal for a student and to break that goal down into sub-tasks. (Each sub-task should be defined so that it can be scored as ‘successfully accomplished’ or ‘not accomplished’.)
• The constituent behaviors that make up the larger behavioral goal need not be directly related to each other. For example, ‘completed homework’ may include as sub-tasks ‘wrote down homework assignment correctly’ and ‘created a work plan before starting homework’.
Source: Kazdin, A. E. (1989). Behavior modification in applied settings (4th ed.). Pacific Grove, CA: Brooks/Cole.

Discrete Categorization Example: Math Study Skills
General Academic Goal: Improve Tina’s Math Study Skills
Tina was struggling in her mathematics course because of poor study skills. The RTI Team and math teacher analyzed Tina’s math study skills and decided that, to study effectively, she needed to:
• Check her math notes daily for completeness.
• Review her math notes daily.
• Start her math homework in a structured school setting.
• Use a highlighter and ‘margin notes’ to mark questions or areas of confusion in her notes or on the daily assignment.
• Spend sufficient ‘seat time’ at home each day completing homework.
• Regularly ask math questions of her teacher.

Discrete Categorization Example: Math Study Skills
General Academic Goal: Improve Tina’s Math Study Skills
The RTI Team, with teacher and student input, created the following intervention plan. The student Tina will:
• Approach the teacher at the end of class for a copy of class notes.
• Check her daily math notes for completeness against a set of teacher notes in 5th period study hall.
• Review her math notes in 5th period study hall.
• Start her math homework in 5th period study hall.
• Use a highlighter and ‘margin notes’ to mark questions or areas of confusion in her notes or on the daily assignment.
• Enter into her ‘homework log’ the amount of time spent that evening doing homework and note any questions or areas of confusion.
• Stop by the math teacher’s classroom during help periods (T & Th only) to ask highlighted questions (or to verify that Tina understood that week’s instructional content) and to review the homework log.

Discrete Categorization Example: Math Study Skills
Academic Goal: Improve Tina’s Math Study Skills
General measures of the success of this intervention include (1) rate of homework completion and (2) quiz & test grades. To measure treatment fidelity (Tina’s follow-through with the sub-tasks of the checklist), the following strategies are used:
• Sub-task: Approached the teacher for a copy of class notes. Fidelity check: Teacher observation.
• Sub-task: Checked her daily math notes for completeness; reviewed math notes; started math homework in 5th period study hall. Fidelity check: Student work products; random spot check by study hall supervisor.
• Sub-task: Used a highlighter and ‘margin notes’ to mark questions or areas of confusion in her notes or on the daily assignment. Fidelity check: Review of notes by teacher during T/Th drop-in period.
• Sub-task: Entered into her ‘homework log’ the amount of time spent that evening doing homework and noted any questions or areas of confusion. Fidelity check: Log reviewed by teacher during T/Th drop-in period.
• Sub-task: Stopped by the math teacher’s classroom during help periods (T & Th only) to ask highlighted questions (or to verify that Tina understood that week’s instructional content). Fidelity check: Teacher observation; student sign-in.
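Because each sub-task is scored simply as accomplished or not accomplished, a day of discrete categorization data reduces to a percentage that can be tracked over time. The Python sketch below scores one hypothetical Monday of Tina's plan. The sub-task wording is shortened, the True/False values are invented, and summarizing the day as a percentage is an illustrative choice rather than something the plan itself specifies.

```python
from typing import Dict

def percent_accomplished(checklist: Dict[str, bool]) -> float:
    """Discrete categorization score: percent of listed sub-tasks scored 'accomplished'."""
    if not checklist:
        raise ValueError("checklist is empty")
    return 100.0 * sum(checklist.values()) / len(checklist)

# One hypothetical Monday for Tina (sub-task wording shortened from her plan).
# The T/Th help-period visit is left out because it is not scheduled on Mondays.
monday = {
    "got copy of class notes": True,
    "checked notes for completeness": True,
    "reviewed notes in study hall": True,
    "started homework in study hall": False,
    "marked questions with highlighter/margin notes": True,
    "filled in homework log": False,
}

print(f"{percent_accomplished(monday):.1f}% of sub-tasks accomplished")
```

Keeping the individual True/False entries, and not just the percentage, also shows which sub-tasks are breaking down. In this example, those are the study-hall homework start and the homework log.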
Monitoring Student Academic Behaviors: Daily Behavior Report Cards

Daily Behavior Report Cards (DBRCs) Are…
brief forms containing student behavior-rating items. The teacher typically rates the student daily (or even more frequently) on the DBRC. The results can be graphed to document student response to an intervention.

Resource: http://www.directbehaviorratings.com/

Daily Behavior Report Cards Can Monitor…
• Hyperactivity
• On-Task Behavior (Attention)
• Work Completion
• Organization Skills
• Compliance With Adult Requests
• Ability to Interact Appropriately With Peers

Daily Behavior Report Card: Daily Version
[Sample form: student Jim Blalock; teacher Mrs. Williams; Rm 108; May 5]

Daily Behavior Report Card: Weekly Version
[Sample form: student Jim Blalock; teacher Mrs. Williams; Rm 108; 05/05/07 through 05/09/07]

Daily Behavior Report Card: Chart
[Chart not reproduced]

RIOT/ICEL Framework: Organizing Information to Better Identify Student Behavioral & Academic Problems

RIOT/ICEL Framework
Sources of Information
• Review (of records)
• Interview
• Observation
• Test
Focus of Assessment
• Instruction
• Curriculum
• Environment
• Learner

RIOT/ICEL Definition
• The RIOT/ICEL matrix is an assessment guide that helps schools decide efficiently what relevant information to collect on student academic performance and behavior, and also how to organize that information to identify probable reasons why the student is not experiencing academic or behavioral success.
• The RIOT/ICEL matrix is not itself a data collection instrument. Instead, it is an organizing framework, or heuristic, that increases schools’ confidence both in the quality of the data that they collect and in the findings that emerge from the data.

RIOT: Sources of Information
• Select Multiple Sources of Information: RIOT (Review, Interview, Observation, Test). The top horizontal row of the RIOT/ICEL table includes four potential sources of student information: Review, Interview, Observation, and Test (RIOT). Schools should attempt to collect information from a range of sources to control for potential bias from any one source.

Select Multiple Sources of Information: RIOT (Review, Interview, Observation, Test)
• Review. This category consists of past or present records collected on the student.
Obvious examples include report cards, office disciplinary referral data, state test results, and attendance records. Less obvious examples include student work samples, physical products of teacher interventions (e.g., a sticker chart used to reward positive student behaviors), and emails sent by a teacher to a parent detailing concerns about a student’s study and organizational skills.

Select Multiple Sources of Information: RIOT (Review, Interview, Observation, Test)
• Interview. Interviews can be conducted face-to-face, via telephone, or even through email correspondence. Interviews can also be structured (that is, using a predetermined series of questions) or follow an open-ended format, with questions guided by information supplied by the respondent. Interview targets can include teachers, paraprofessionals, administrators, and support staff in the school setting who have worked or interacted with the student in the present or past. Prospective interview candidates can also include parents and other relatives of the student, as well as the student himself or herself.

Select Multiple Sources of Information: RIOT (Review, Interview, Observation, Test)
• Observation. Direct observation of the student’s academic skills, study and organizational strategies, degree of attentional focus, and general conduct can be a useful channel of information. Observations can be more structured (e.g., tallying the frequency of call-outs or calculating the percentage of on-task intervals during a class period) or less structured (e.g., observing a student and writing a running narrative of the observed events).

Select Multiple Sources of Information: RIOT (Review, Interview, Observation, Test)
• Test. Testing can be thought of as a structured and standardized observation of the student that is intended to test certain hypotheses about why the student might be struggling and what school supports would logically benefit the student (Christ, 2008). An example of testing is administering a math computation CBM probe or an Early Math Fluency probe to a student.

Formal Tests: Only One Source of Student Assessment Information
“Tests are often overused and misunderstood in and out of the field of school psychology. When necessary, analog [i.e., test] observations can be used to test relevant hypotheses within controlled conditions. Testing is a highly standardized form of observation. … The only reason to administer a test is to answer well-specified questions and examine well-specified hypotheses. It is best practice to identify and make explicit the most relevant questions before assessment begins. … The process of assessment should follow these questions. The questions should not follow assessment.” (p. 170)
Source: Christ, T. (2008). Best practices in problem analysis. In A. Thomas & J. Grimes (Eds.), Best practices in school psychology V (pp. 159-176). Bethesda, MD: National Association of School Psychologists.

ICEL: Factors Impacting Student Learning
• Investigate Multiple Factors Affecting Student Learning: ICEL (Instruction, Curriculum, Environment, Learner). The leftmost vertical column of the RIOT/ICEL table includes four key domains of learning to be assessed: Instruction, Curriculum, Environment, and Learner (ICEL). A common mistake that schools make is to assume that student learning problems exist primarily in the learner and to underestimate the degree to which teacher instructional strategies, curriculum demands, and environmental influences affect the learner’s academic performance. The ICEL elements ensure that a full range of relevant explanations for student problems is examined.

Investigate Multiple Factors Affecting Student Learning: ICEL (Instruction, Curriculum, Environment, Learner)
• Instruction. The purpose of investigating the ‘instruction’ domain is to uncover any instructional practices that either help the student to learn more effectively or interfere with that student’s learning. More obvious instructional questions to investigate would be whether specific teaching strategies for activating prior knowledge better prepare the student to master new information, or whether a student benefits optimally from the large-group lecture format that is often used in a classroom. A less obvious example of an instructional question would be whether a particular student learns better through teacher-delivered or self-directed, computer-administered instruction.

Investigate Multiple Factors Affecting Student Learning: ICEL (Instruction, Curriculum, Environment, Learner)
• Curriculum. ‘Curriculum’ represents the full set of academic skills that a student is expected to have mastered in a specific academic area at a given point in time. To adequately evaluate a student’s acquisition of academic skills, the educator must (1) know the school’s curriculum (and related state academic performance standards), (2) be able to inventory the specific academic skills that the student currently possesses, and then (3) identify gaps between curriculum expectations and actual student skills. (This process of uncovering student academic skill gaps is sometimes referred to as ‘instructional’ or ‘analytic’ assessment.)

Investigate Multiple Factors Affecting Student Learning: ICEL (Instruction, Curriculum, Environment, Learner)
• Environment. The ‘environment’ includes any factors in the student’s school, community, or home surroundings that can directly enable the student’s academic success or hinder that success. Obvious questions about environmental factors that affect learning include whether a student’s educational performance is better or worse in the presence of certain peers, and whether additional adult supervision during a study hall results in higher student work productivity. Less obvious questions about the learning environment include whether a student has a setting at home that is conducive to completing homework, or whether chaotic hallway conditions are delaying that student’s transitions between classes and therefore reducing available learning time.

Investigate Multiple Factors Affecting Student Learning: ICEL (Instruction, Curriculum, Environment, Learner)
• Learner. While the student is at the center of any questions of instruction, curriculum, and [learning] environment, the ‘learner’ domain includes those qualities of the student that represent the student’s unique capacities and traits. More obvious examples of questions that relate to the learner include investigating whether a student has stable and high rates of inattention across different classrooms, or evaluating the efficiency of a student’s study habits and test-taking skills. A less obvious example of a question that relates to the learner is whether a student harbors a low sense of self-efficacy in mathematics that is interfering with that learner’s willingness to put appropriate effort into math courses.

Activity: Use the RIOT/ICEL Framework
• Discussion: How can this framework be used in your school to improve the quality of the data that you collect on the student?
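The RIOT/ICEL matrix is an organizing framework, not an instrument, so one way to picture it is as a 4 × 4 grid with each piece of collected information filed under one source/domain cell. The Python sketch below is a hypothetical illustration of that idea. The class name, the notes, and the "empty cells" report are assumptions, not part of the framework as published. Listing which RIOT sources have contributed to a domain supports the advice to draw on multiple sources, and listing empty cells points to information not yet collected.

```python
from collections import defaultdict
from typing import DefaultDict, List, Tuple

SOURCES = ("Review", "Interview", "Observation", "Test")           # RIOT
DOMAINS = ("Instruction", "Curriculum", "Environment", "Learner")   # ICEL

class RiotIcelMatrix:
    """Files each piece of collected information under one (source, domain) cell."""

    def __init__(self) -> None:
        self.cells: DefaultDict[Tuple[str, str], List[str]] = defaultdict(list)

    def add(self, source: str, domain: str, note: str) -> None:
        if source not in SOURCES or domain not in DOMAINS:
            raise ValueError(f"unknown cell: {source} / {domain}")
        self.cells[(source, domain)].append(note)

    def sources_for(self, domain: str) -> List[str]:
        """RIOT sources that have contributed information about one ICEL domain."""
        return [s for s in SOURCES if self.cells.get((s, domain))]

    def empty_cells(self) -> List[Tuple[str, str]]:
        """Source/domain combinations with no information yet (possible gaps)."""
        return [(s, d) for d in DOMAINS for s in SOURCES if not self.cells.get((s, d))]

# Hypothetical notes on one student.
m = RiotIcelMatrix()
m.add("Review", "Learner", "Attendance records show frequent absences in math class")
m.add("Interview", "Learner", "Student reports low confidence in math")
m.add("Observation", "Environment", "More work completed when an adult supervises study hall")

print(m.sources_for("Learner"))
print(len(m.empty_cells()), "of", len(SOURCES) * len(DOMAINS), "cells still empty")
```

A team would not expect to fill all 16 cells for every student. The empty-cell list is simply a prompt to ask whether an unexamined domain, such as Instruction or Curriculum, might explain the problem.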
Defining Academic Problems: Get It Right and Interventions Are More Likely to Be Effective

Defining Academic Problems: Recommended Steps
1. Be knowledgeable about the school academic curriculum and the key student academic skills that are taught. The teacher should have a good survey-level knowledge of the key academic skills outlined in the school’s curriculum, both for the grade level of the teacher’s classroom and for earlier grade levels. If the curriculum alone is not adequate for describing a student’s academic deficit, the instructor can make use of research-based definitions or complete a task analysis to further define the academic problem area. Here are guidelines for consulting the curriculum and research-based definitions and for conducting a task analysis for more global skills.

Defining Academic Problems: Recommended Steps
Curriculum. The teacher can review the school’s curriculum and related documents (e.g., scope-and-sequence charts; curriculum maps) to select specific academic skill or performance goals. First, determine the approximate grade or level in the curriculum that matches the student’s skills. Then, review the curriculum at that alternate grade level to find appropriate descriptions of the student’s relevant academic deficit. For example, a second-grade student had limited phonemic awareness. The student was not able to accurately deconstruct a spoken word into its component sound-units, or phonemes. In the school’s curriculum, children were expected to attain proficiency in phonemic awareness by the close of grade 1. The teacher went ‘off level’ to review the grade 1 curriculum and found a specific description of phonemic awareness that she could use as a starting point in defining the student’s skill deficit.
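One way to carry out the "first, determine the approximate grade or level" step is to give the student probes from successively lower grade levels until the student's score meets that level's criterion. This resembles what CBM practitioners call survey-level assessment. The Python sketch below is a minimal illustration under stated assumptions. The function name, the probe scores, and the mastery criteria are all hypothetical, and the source does not specify a procedure or any criteria.

```python
from typing import Dict, Optional

def matching_curriculum_level(scores_by_grade: Dict[int, float],
                              criterion_by_grade: Dict[int, float],
                              current_grade: int) -> Optional[int]:
    """Step down from the current grade; return the first grade whose criterion the student meets."""
    for grade in range(current_grade, 0, -1):
        score = scores_by_grade.get(grade)
        criterion = criterion_by_grade.get(grade)
        if score is not None and criterion is not None and score >= criterion:
            return grade
    return None  # no tested level met its criterion

# Hypothetical probe scores and hypothetical mastery criteria for a fourth grader.
scores = {4: 55, 3: 68, 2: 80}
criteria = {4: 90, 3: 75, 2: 60}

print(matching_curriculum_level(scores, criteria, current_grade=4))
```

Here the student falls short at grades 4 and 3 but meets the grade 2 criterion. The teacher would then go ‘off level’ to the grade 2 curriculum for skill descriptions, as in the phonemic awareness example.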
Research-Based Skill Definitions. Even when a school’s curriculum identifies key skills, schools may find it useful to corroborate or elaborate those skill definitions by reviewing alternative definitions published in research journals or other trusted sources. For example, a student had delays in solving quadratic equations. The math instructor found that the school’s math curriculum did not provide a detailed description of the skills required to solve quadratic equations successfully. So the teacher reviewed the National Mathematics Advisory Panel report (Fennell et al., 2008) and found a detailed description of component skills for solving quadratic equations. By combining the skill definitions from the school curriculum with the more detailed descriptions taken from the research-based document, the teacher could pinpoint the student’s academic deficit in more specific terms.

Task Analysis. Students may have deficits in more global ‘academic enabling’ skills that are essential for academic success. Teachers can complete a task analysis of the relevant skill by breaking it down into a checklist of constituent subskills. An instructor can then use the resulting checklist to verify whether the student can perform each of the subskills that make up the global ‘academic enabling’ skill. For example, teachers at a middle school noted that many of their students seemed to have poor ‘organization’ skills.
Those instructors conducted a task analysis and determined that, in their classrooms, the essential subskills of ‘student organization’ included (a) arriving to class on time; (b) bringing work materials to class; (c) following teacher directions in a timely manner; (d) knowing how to request teacher assistance when needed; and (e) having an uncluttered desk with only essential work materials.

2. Describe the academic problem in specific, skill-based terms (Batsche et al., 2008; Upah, 2008). Write a clear, brief description of the academic skill or performance deficit that focuses on a specific skill or performance area. Here are sample problem-identification statements:
– John reads aloud from grade-appropriate text much more slowly than his classmates.
– Ann lacks proficiency with multiplication math problems (double-digit times double-digit with no regrouping).
– Tye does not turn in homework assignments.
– Angela produces limited text on in-class writing assignments.

3. Develop a fuller description of the academic problem to provide a meaningful instructional context. Once the teacher has described the student’s academic problem, the next step is to expand the problem definition and put it into a meaningful context. This expanded definition includes information about the conditions under which the academic problem is observed and about the typical or expected level of performance.
– Conditions. Describe the environmental conditions or task demands in place when the academic problem is observed.
– Problem Description. Describe the actual observable academic behavior in which the student is engaged. Include rate, accuracy, or other quantitative information about the student’s performance.
– Typical or Expected Level of Performance.
Provide a typical or expected performance criterion for this skill or behavior. Typical or expected academic performance can be calculated using a variety of sources,

4. Develop a hypothesis statement to explain the academic skill or performance problem. The hypothesis states the assumed reason(s) or cause(s) for the student’s academic problems. Once it has been developed, the hypothesis statement acts as a compass needle, pointing toward the interventions that most logically address the student’s academic problems.

Activity: Using the Academic Problem-Identification Guide
• Discussion: How can your school use the framework for defining academic problems to support the RTI process?

Curriculum-Based Measurement: Writing
Jim Wright

Curriculum-Based Measurement/Assessment: Defining Characteristics
• Assesses preselected objectives from the local curriculum
• Has standardized directions for administration
• Is timed, yielding fluency and accuracy scores
• Uses objective, standardized, ‘quick’ guidelines for scoring
• Permits charting and teacher feedback

CBM Writing Assessment: Preparation
• Select a story starter
• Create a CBM writing probe: a lined sheet with the story starter at the top

Story Starter Tips:
• Create or collect story starters that students will find motivating to write about. (And toss out starters that don’t inspire much enthusiasm!)
• Avoid story starters that allow students simply to generate long lists: e.g., “What I want for my birthday is…”

CBM Writing Assessment: Scoring
Total Words: I woud drink water from the ocean and I woud eat the fruit off of the trees. Then I woud bilit a house out of trees, and I woud gather firewood to stay warm. I woud try and fix my boat in my spare time.
Total Words = 45

Total Words: Useful for tracking a student’s fluency in writing (irrespective of spelling, punctuation, etc.).

Correctly Spelled Words: I woud drink water from the ocean and I woud eat the fruit off of the trees. Then I woud bilit a house out of trees, and I woud gather firewood to stay warm. I woud try and fix my boat in my spare time.
Correctly Spelled Words = 39

Correctly Spelled Words: Permits teachers to (a) monitor student spelling skills in the context of writing assignments and (b) track student vocabulary usage.

Correct Writing Sequences: The most global CBM writing measure. It reflects the quality of writing in context.

Correct Writing Sequences: I woud drink water from the ocean and I woud eat the fruit off of the trees. Then I woud bilit a house out of trees, and I woud gather firewood to stay warm. I woud try and fix my boat in my spare time.
Correct Writing Sequences = 37

Trainer Question: What objections or concerns might teachers have about using CBM writing probes? How would you address these concerns?

Homework: Draft a Plan to Conduct an Academic Screening in Your School or District
• Directions: Develop a draft plan to screen your student population for academic (and perhaps behavioral) concerns.
• Question 1: How will you determine what mix of measures to include in the screening (e.g., basic academic skills, advanced concepts, student performance according to curriculum expectations)?
• Question 2: How might you make use of existing data (e.g., disciplinary office referrals, grades, behavior) in your screening plan?
• Question 3: How frequently would you collect data for each element included in the screening plan?
• Question 4: Who would assist in implementing the screening plan?
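The Total Words and Correctly Spelled Words tallies on the CBM writing scoring slides follow simple counting rules, so a short script can check them. The Python sketch below is a minimal illustration and is not part of the original materials. It assumes that a word is any run of letters, with apostrophes allowed, and that spelling is checked against a word list. The names `total_words`, `correctly_spelled_words`, and `LEXICON` are hypothetical, and the lexicon covers only the sample passage.

```python
import re

# Assumption: a "word" is any run of letters (apostrophes allowed).
# Misspelled or invented words still count toward Total Words.
WORD_PATTERN = re.compile(r"[A-Za-z']+")


def total_words(sample: str) -> int:
    """Total Words (TW): every word written, ignoring spelling and punctuation."""
    return len(WORD_PATTERN.findall(sample))


def correctly_spelled_words(sample: str, lexicon: set[str]) -> int:
    """Correctly Spelled Words (CSW): words found in the scorer's word list."""
    return sum(1 for word in WORD_PATTERN.findall(sample) if word.lower() in lexicon)


SAMPLE = (
    "I woud drink water from the ocean and I woud eat the fruit off of the trees. "
    "Then I woud bilit a house out of trees, and I woud gather firewood to stay warm. "
    "I woud try and fix my boat in my spare time."
)

# Hypothetical lexicon listing only the correctly spelled words in SAMPLE.
# "woud" and "bilit" are deliberately absent.
LEXICON = {
    "i", "drink", "water", "from", "the", "ocean", "and", "eat", "fruit", "off",
    "of", "trees", "then", "a", "house", "out", "gather", "firewood", "to",
    "stay", "warm", "try", "fix", "my", "boat", "in", "spare", "time",
}

if __name__ == "__main__":
    tw = total_words(SAMPLE)
    csw = correctly_spelled_words(SAMPLE, LEXICON)
    print(f"Total Words = {tw}, Correctly Spelled Words = {csw}")
    # prints: Total Words = 45, Correctly Spelled Words = 39
```

In CBM, a trained scorer judges spelling in context, so the lexicon lookup is only a stand-in. Correct Writing Sequences are not sketched here. Scoring them requires judgments about grammar and usage between adjacent words that a simple word count cannot capture.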