PPT Lecture Notes (T

Outline of Today’s Discussion

1.

Single Sample & Paired T-Tests

2.

The Independent Samples T-Test

3.

Two Tails or One?

Part 1

Single Sample & Paired T-Tests

t

Test for a Single Sample

• Used for comparing the mean of a sample with a population for which the mean is known but the variance is unknown

• Question: How is this different from the Z-

Tests that we’ve done?

t

Test for a Single Sample

• Estimating population variance from sample scores

– Variance in sample generally slightly smaller than population

• Sample is a biased estimate of population

• So, divide by N-1 rather than N to correct for bias

– N-1 is known as the

“degrees of freedom,” the number of scores that are free to vary

t

Test for a Single Sample

• The comparison distribution is not a normal curve

– t distribution

– Like a normal curve

• Bell-shaped

• Unimodal

• Symmetrical

– But has more scores at the extremes

(i.e., heavier tails) and varies somewhat according to degrees of freedom

• Sample mean thus has to be slightly more extreme to be significant with a t distribution than with a normal curve

t

Test for a Single Sample

• Cool Fact:

Unlike a Gaussian distribution, The shape of the t-distribution changes with the DF!

• the t-distribution approaches Gaussian shape as DF increases .

t

Test for a Single Sample

• Determine cutoff sample score for rejecting the null hypothesis (using t table)

• Figure sample mean’s score on the t distribution ( t score)

NOW: t

Test for Dependent Means

• Used to compare two sets of scores where there are two scores for each participant

– Repeated-measures

– Within-subjects

– Paired

• Compares mean difference score across pairs of scores against a difference of 0 under the null hypothesis.

• In other respects, t test for dependent means is just like a single sample t test with a population mean of 0.

Assumptions:

1 sample or “Within”

• Assume that the population of individuals from which the sample was taken is normally distributed

• In practice, one seldom knows if a population is normally distributed

– OK because many distributions in nature do approximate a normal curve

– The t test is often still fairly accurate even when this assumption is violated

Effect Size: 1 sample or “Within”

• Effect size for the t test for dependent means is

– the mean of the difference scores

– divided by the estimated SD of the population of individual difference scores

• Studies using the t test for dependent means typically have larger effect sizes and more power than do studies with participants divided into two groups

• Effect size conventions

– Small = .20

– Medium = .50

– Large = .80

The One Sample T-Test

1. Example: Assume that you are a therapist who has received a ‘sample’ of 20 patients diagnosed with clinical depression.

2. After 5 weeks of your treatment, you might ask whether the mean of your sample is statistically indistinguishable from non-depressed patients, who have a mean of, say, 500 on a standard mood assessment.

The One Sample T-Test

1. The null hypothesis would be as follows:

Ho: In the population, the mean mood score of patients who have completed 5 weeks of (my) therapy is equal to that of non-depressed people.

2. In this example, the test value would be equal to whatever the mean of the non-depressed population is…let’s say ‘500’. So, the test value = 500 here.

The One Sample T-Test

1. Example 2:

Ho: In the population, the mean age when first married is equal to 20.

2. So, the test value = 20 here.

3. The steps in SPSS are simple…

The One Sample T-Test

SPSS Steps:

Analyze Compare Means One Sample T-Test

Test Value Box Enter the value that is to be compared to the sample mean

•The sample mean of 22.79,

•is compared to our test value,

•Say, 20.

The ‘ sig ’ value indicates that we should reject Ho, i.e., we should reject that the sample mean is equal to the test value.

The One Sample T-Test

SPSS Steps:

Analyze Compare Means One Sample T-Test

Test Value Box Enter the value that is to be compared to the sample mean

•The sample mean of 22.79,

•is compared to our test value,

•Say, 20.

The mean difference of 2.792 is significantly different from zero (Ho).

Note: If Ho were true, than the 95% confidence interval would contain a zero.

The One Sample T-Test

SPSS Steps:

Analyze Compare Means One Sample T-Test

Test Value Box Enter the value that is to be compared to the sample mean

•The sample mean of 22.79,

•is compared to our test value,

•Say, 22.7

.

The ‘ sig ’ value indicates that we should retain Ho, i.e., we should accept that the sample mean is equal to the test value.

The One Sample T-Test

SPSS Steps:

Analyze Compare Means One Sample T-Test

Test Value Box Enter the value that is to be compared to the sample mean

•The sample mean of 22.79,

•is compared to our test value,

•Say, 22.7

.

•The mean difference of 0.092 is NOT significantly different from zero (Ho).

•Note: When Ho is true, than the 95% confidence interval contains a zero.

The One Sample T-Test

1. Typically, we prefer to run two samples…one experimental group and one control group.

2. However, that may not always be possible.

3. The one sample t-test allows for a statistical comparison to some abstract standard (the

‘test value’), rather than to a control group.

NOWPaired T-Tests in SPSS

1.

For the Paired-Samples T-Test

2.

SPSS Analyze Compare Means

Paired Samples T-Test

Paired Variable(s) Box (slide two variables into here)

OK

Paired T-Tests in SPSS

• SPSS Output – Paired Samples T-test

• This is the numerator of the t-ratio.

• The mean difference between the scores.

Paired T-Tests in SPSS

• SPSS Output – Paired Samples T-test

• This is the sd of the difference the scores.

• It’s NOT directly relevant to the t-ratio.

• But it’s kind of like an old buddy.

Paired T-Tests in SPSS

• SPSS Output – Paired Samples T-test

• This is the denominator of the t-ratio.

• Computationally = to

Paired T-Tests in SPSS

• SPSS Output – Paired Samples T-test

• Here’s the 95% confidence interval on the difference.

Why don’t we want this interval to “contain” zero?

Paired T-Tests in SPSS

• SPSS Output – Paired Samples T-test

• This is the info for our Results Section.

• t(6) = 3.946, p = 0.008

Part 2

The Independent Samples T-Test

t

Test for Independent Means

• Like the t test for dependent means…

– Used to compare two groups of scores when you do not know the population variance

• Unlike the t test for dependent means…

– Two groups of scores come from two entirely different groups of people

– In other words, the scores are “independent” of one another

Computing the ‘t’ Statistic

1.

The independent samples “t” statistic is based on 3 assumptions.

2.

The first assumption is the distribution of scores should be bell-shaped .

3.

The second assumption is that the two populations from which the samples are selected must have (at least approximately) equal variances .

4.

The third assumption is independence (the value of any datum does not depend on the any other datum).

Computing the ‘t’ Statistic

1. How might we quantitatively assess the first assumption, i.e., the normalcy assumption ? Hint:

We’ve done it before (kind of).

2. There are ways to test the equal variance assumption quantitatively. SPSS will help us with that later.

3. The independence assumption requires that we investigate how the data were obtained. (No stats here.)

The independence assumption does NOT pertain to the within-subject t-test.

Effect Size

There is Trouble in Paradise

(Say it with me)

Effect Size

1. One major problem with Null Hypothesis

Testing (i.e., inferential statistics) is that the outcome depends on sample size .

2. For example, a particular set of scores might generate a non-significant t-test with n=10.

But if the exact same numbers were duplicated

(n=20) the t-test suddenly becomes

“significant”.

Effect Size

1. Effect Size – The magnitude of the influence that the IV has on the DV.

2. Effect size does NOT depend on sample size!

(“And there was much rejoicing!”)

Effect Size

1. A commonly used measure of effect size is

Cohen’s d .

2. Conventions for Cohen’s d: d = 0.2 small effect d = 0.5 medium effect d = 0.8 large effect

Power for a

t

Test

For Independent Means

• The power associated with small, medium, and large effect sizes can be determined from a power table.

• Power is greatest when participants are divided into equal groups.

Practice With T-Tests in SPSS

1.

For the Independent-Samples T-Test

2.

SPSS Analyze Compare Means

Independent Samples T-Test

Test Variable(s) Box (place D.V. Here)

Grouping Variable Box (place I.V. Here)

Define Groups (insert the TWO levels of the I.V. you wish to compare)

OK

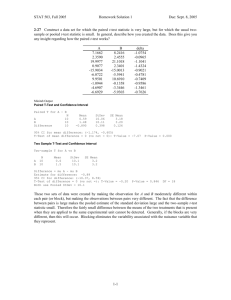

SPSS: Independent Samples T-Test

SPSS Output – Independent Samples T-test

Equal variance assumption is tested here.

We want puny F values, and sig values near 1.

SPSS: Independent Samples T-Test

SPSS Output – Independent Samples T-test

This is the info for our Results Section.

t(12) = 3.565, p = 0.004

SPSS: Independent Samples T-Test

SPSS Output – Independent Samples T-test

This is the numerator of the t-ratio.

The difference between the means

.

SPSS: Independent Samples T-Test

SPSS Output – Independent Samples T-test

This is the denominator of the t-ratio.

Computationally = to

SPSS: Independent Samples T-Test

SPSS Output – Independent Samples T-test

Here’s the 95% confidence interval on the difference.

Why don’t we want this interval to “contain” zero?

Computing the ‘t’ Statistic

Formula for the independent samples “t” statistic

What does “x bar” represent, again?

Computing the ‘t’ Statistic

Denominator term in the independent samples “t” statistic

What does this “s” represent, again?

Computing the ‘t’ Statistic

Degrees of Freedom for the independent samples “t” statistic df = N - 2

Where N equals the sum of the two sample sizes (n1 + n2).

Computing the ‘t’ Statistic

The Effe ctive ne s s of Dr ug x The Effe ctive ne s s of Dr ug x

12

10

8

6

4

2

0

8

6

4

2

0

12

10

Drug x

Tr eatm e nt

Placebo Drug x

Tr eatm e nt

Placebo

Which graph makes a more convincing case for Drug X, and why?

For t-tests…the context is the variability.

Computing the ‘t’ Statistic

The Effe ctive ne s s of Dr ug x The Effe ctive ne s s of Dr ug x

12

10

8

6

4

2

0

8

6

4

2

0

12

10

Drug x

Tr eatm e nt

Placebo Drug x

Tr eatm e nt

Placebo

Which Graph would be associated with the larger ‘t’ statistic, and why?

Part 3

Two Tails or One?

•Two-Tailed Case

Two Tails or One?

1. Here’s are some hypotheses for t-tests

H

0

: In the population, the means for the control and experimental groups will be equal .

H

1

: In the population, the means for the control and experimental groups will NOT be equal .

2. The alternate hypothesis is said to be “ two tailed ” because it is non-directional…it does

NOT state which of the two means will be larger.

Two-Tailed Case

Two Tails or One?

Non-directional hypotheses are evaluated with a two-tailed test!

.025 is lower

•than this

.025 is higher

•than this

We could reject the null hypothesis whether the observed t-statistic is in either critical region … but the observed “ t ” value must be at least 47.5 percentiles away from the mean!

mean = 50 th percentile:

50 - 47.5 = 2.5 percentile (left) : 50 + 47.5 = 97.5 percentile (right)

•One-Tailed Case

Two Tails or One?

1. Here’s are some hypotheses for t-tests

H

0

: In the population, the means for the control and experimental groups will be equal .

H

1

: In the population, the mean for the control group will be greater than the mean for the experimental group.

2. The alternate hypothesis is said to be “ one tailed ” because it is directional …it states which mean will be larger.

One-Tailed Case

Two Tails or One?

Directional hypotheses are evaluated with a one-tailed test!

We can only reject the null hypothesis when the observed t-statistic is in the predicted critical region … but the observed “ t ” value only needs to be at least 45 percentiles away from the mean!

mean = 50 th percentile:

50 + 45= 95 percentile (right)

Two Tails or One?

1.

When do we use two tails versus one tail?

2.

It depends!

3.

If you want to make a directional prediction, use one-tail, otherwise use two-tails.

4.

Two-tails are often preferred because they are more conservative

(47.5 percentiles from mean for two-tailed significance, versus a mere

45 percentiles from mean for one tailed significance).