Intermediate Applied Statistics STAT 460

advertisement

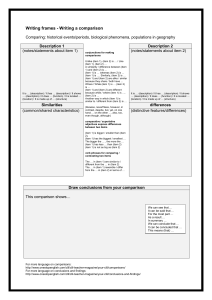

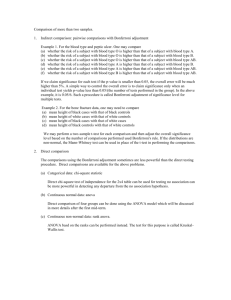

Lecture 10, 10/1/2004
Instructor: Aleksandra (Seša) Slavković, sesa@stat.psu.edu
TA: Wang Yu, wangyu@stat.psu.edu

Failure Times Example from Sleuth, p. 171

[Figure: Boxplots of TIME by COMPOUND (compounds 1 to 5); means are indicated by solid circles.]

One-way ANOVA: TIME versus COMPOUND

Analysis of Variance for TIME

| Source   | DF | SS     | MS    | F    | P     |
|----------|----|--------|-------|------|-------|
| COMPOUND | 4  | 401.3  | 100.3 | 5.02 | 0.002 |
| Error    | 45 | 899.2  | 20.0  |      |       |
| Total    | 49 | 1300.5 |       |      |       |

| Level | N  | Mean   | StDev |
|-------|----|--------|-------|
| 1     | 10 | 10.693 | 4.819 |
| 2     | 10 | 6.050  | 2.915 |
| 3     | 10 | 8.636  | 3.291 |
| 4     | 10 | 9.798  | 5.806 |
| 5     | 10 | 14.706 | 4.863 |

Pooled StDev = 4.470

[Minitab also plots individual 95% CIs for each mean, based on the pooled StDev, on an axis running from 4.0 to 16.0.]

ANOVA

So far we have talked about testing the null hypothesis $H_0: \mu_1 = \mu_2 = \mu_3 = \cdots = \mu_k$ versus the alternative hypothesis $H_A$: at least one pair of groups has $\mu_i \neq \mu_j$. Next, we want to know where the difference comes from.

Approaches to Comparisons

A. Pairwise comparisons
B. Multiple comparisons with a control group
C. Multiple comparisons with the “best” group
D. Linear contrasts

Each of these procedures is available in most statistical packages, both as confidence intervals (e.g., pairwise confidence intervals for the difference) and as the corresponding tests.

All Pairwise Comparisons

In this approach, we don’t just want to know whether there is any significant difference; we want to know which groups are significantly different from which other groups. The most obvious approach is just to do a t-test on each pair of groups. The problem with this is sometimes called “compound uncertainty” or “capitalizing on chance.”

Note: By significantly we mean systematically or demonstrably (statistical significance), not greatly or importantly (practical significance).
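To see “capitalizing on chance” concretely, here is a small Monte Carlo sketch in Python. It assumes k = 5 groups of n = 10 observations all drawn from the same normal population (so $H_0$ is true), runs every unadjusted pairwise t-test using the pooled standard deviation (45 error df, matching the failure-time design), and counts how often at least one test rejects. The two-sided 5% critical value $t_{45} \approx 2.014$ is hard-coded as an assumption rather than computed, and the function names are illustrative only.

```python
import math
import random

T_CRIT = 2.014  # assumed two-sided 5% critical value of t with 45 df (hard-coded, not computed)

def any_pairwise_rejection(k, n, rng):
    """Draw k groups of size n from one N(0, 1) population (so H0 is true) and
    report whether at least one unadjusted pairwise t-test rejects at the 5% level."""
    groups = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(k)]
    means = [sum(g) / n for g in groups]
    # Pooled variance over all k groups (df = k*(n-1)), i.e. the one-way ANOVA MSE.
    sse = sum(sum((x - m) ** 2 for x in g) for g, m in zip(groups, means))
    mse = sse / (k * (n - 1))
    se_diff = math.sqrt(mse * 2 / n)  # SE of a difference of two group means
    return any(
        abs(means[i] - means[j]) / se_diff > T_CRIT
        for i in range(k)
        for j in range(i + 1, k)
    )

def familywise_rate(k=5, n=10, reps=20_000, seed=1):
    """Monte Carlo estimate of the chance of at least one false positive."""
    rng = random.Random(seed)
    hits = sum(any_pairwise_rejection(k, n, rng) for _ in range(reps))
    return hits / reps

rate = familywise_rate()
print(f"Estimated family-wise error rate: {rate:.3f}")
```

The estimate should land far above the nominal 5%, though somewhat below the independence figure $1-(0.95)^{10} \approx 0.40$, because the ten pairwise tests share group means and are therefore not independent.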
[Diagram: a machine built from five parts, each labeled “Fails 5% of time.”]

If there are five independent parts and each fails 5% of the time, then the whole machine fails $1-(0.95)^5 \approx 23\%$ of the time!

All Pairwise Comparisons

If you have $c$ tests, each of which has its individual false positive rate controlled at $\alpha$, then the total false positive rate may be as high as

$$1-(1-\alpha)^c \le c\alpha.$$

If we want to do all possible pairwise comparisons, then the number of tests will be

$$c = \frac{k(k-1)}{2},$$

where $k$ is the number of groups. If there are 5 groups, then there are 10 tests!

So we have to decide whether we want to control only the individual type I error risk, or the experiment-wide (or “familywise”) type I error risk. We have to be much more conservative to control the latter than we would need to be to control the former. In fact, we might have to make $\alpha$ so low that $\beta$ (the type II error risk) becomes very high, i.e., power ($1-\beta$) becomes very low.

One possible answer is to control individual type I error risk for planned comparisons (a few comparisons which were of special interest as you were planning the study) and control experiment-wide type I error risk for unplanned comparisons (those you make after the study has been done, just for exploratory purposes).

Suppose for now that there aren’t any comparisons of special interest; we just want to look at all possible pairwise comparisons. Then we definitely should try to control experiment-wide error.

Method 1: Fisher’s Protected LSD (Least Significant Difference)

A simple approach to trying to control experiment-wide error:

1. First do an overall F-test to see if there are any differences at all.
2. If this test does not reject $H_0$, then conclude that no differences can be found.
3. If it does reject $H_0$, then go ahead and do all possible two-group t-tests.
(You might use a standard deviation pooled over all groups instead of each pair separately, but otherwise act just as in the two-sample case.)

The only problem with that approach is that it doesn’t work. At least according to some experts, the LSD procedure does not really control experiment-wide error. You still have the multiple comparisons problem.

Method 2: Bonferroni Correction

One way to do this is to use a “Bonferroni correction” on $\alpha$. The idea here is that we first set a family-wise $\alpha$, say .05, and then figure out how small the individual $\alpha^*$ would need to be in order to keep the family-wise type I error rate at $\alpha$. For example, if $\alpha^* = .01$ and $c = 5$, then the family-wise rate is at most $c\alpha^* = .05$.

Bonferroni Inequality: For independent events $A_1, \ldots, A_c$ of equal probability,

$$P(A_1 \cup A_2 \cup \cdots \cup A_c) = 1-\bigl(1-P(A_i)\bigr)^c \le c\,P(A_i).$$

(The bound $P(A_1 \cup \cdots \cup A_c) \le \sum_i P(A_i)$ holds even when the events are not independent.)

So if we just set $\alpha^* = \alpha / c$, then the experiment-wide error rate will be controlled. An advantage of this approach is that it is a simple idea (just divide up your acceptable risk equally among all the tests you want to do). A disadvantage is that it can be inefficient: $\alpha^*$ can become quite small, e.g., $.05/21 \approx .002$, which costs power.

Method 3: Tukey’s HSD Test

Tukey’s “Honest Significant Difference” test takes a different approach: it does not use the t or F distributions but the distribution of the “studentized range,” or Q statistic,

$$Q = \frac{\bar Y_{\max} - \bar Y_{\min}}{\sqrt{MSE/n}},$$

where $n$ is the common group size. In a Tukey test, the Q distribution is used to find a boundary on differences between group averages such that, if the null hypothesis were true, 95% of the time no pair of groups would have a difference larger than this boundary. So any pair of groups with a difference larger than this critical value is declared significantly different.
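The arithmetic behind the last few slides can be checked with a short Python sketch. It uses only numbers that appear in these notes: the 5% rate, $k = 5$ groups, the .05/21 example, and, for the Tukey part, the critical value $q = 4.02$, $MSE = 899.2/45$, and $n = 10$ per group from the Minitab output for the failure-time data. The critical value is taken from that output rather than computed, and the helper names are illustrative, not from any statistics library.

```python
import math

ALPHA = 0.05  # family-wise error rate we want to control

def familywise_error(alpha, c):
    """Chance of at least one false positive among c independent tests at level alpha."""
    return 1 - (1 - alpha) ** c

def n_pairwise(k):
    """Number of distinct pairs among k groups: k(k-1)/2."""
    return k * (k - 1) // 2

def bonferroni_alpha(alpha, c):
    """Per-comparison level alpha* = alpha / c."""
    return alpha / c

# Five independent parts (or tests), each failing 5% of the time.
print(f"1 - 0.95^5 = {familywise_error(ALPHA, 5):.3f}")

k = 5
c = n_pairwise(k)
print(f"k = {k} groups -> c = {c} tests; independent-test FWER = {familywise_error(ALPHA, c):.3f}")
print(f"Bonferroni alpha* for c = {c}: {bonferroni_alpha(ALPHA, c):.4f}")
print(f"Bonferroni alpha* for c = 21: {bonferroni_alpha(ALPHA, 21):.4f}")

# Tukey HSD for the failure-time data. Q_CRIT is the critical value Minitab reports
# for k = 5 groups and 45 error df; MSE and n come from the ANOVA output.
Q_CRIT, MSE, N_PER_GROUP = 4.02, 899.2 / 45, 10
hsd = Q_CRIT * math.sqrt(MSE / N_PER_GROUP)  # boundary on |ybar_i - ybar_j|

def tukey_interval(mean_i, mean_j):
    """Simultaneous interval for mu_i - mu_j, assuming equal group sizes."""
    diff = mean_i - mean_j
    return diff - hsd, diff + hsd

lo, hi = tukey_interval(10.693, 6.050)  # compound 1 minus compound 2
print(f"HSD = {hsd:.3f}; mu1 - mu2 in ({lo:.3f}, {hi:.3f})")
```

Because $hsd = q\sqrt{MSE/n}$ is the same for every pair when group sizes are equal, a pair is declared different exactly when its difference in sample means exceeds this one number.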
Confidence Intervals

Much like the usual t-intervals, the Bonferroni and Tukey confidence intervals for $\mu_i - \mu_j$ have the form

$$(\bar y_i - \bar y_j) \pm m \cdot SE(\bar y_i - \bar y_j), \qquad SE(\bar y_i - \bar y_j) = s_p\sqrt{\frac{1}{n_i} + \frac{1}{n_j}},$$

where $\bar y_i - \bar y_j$ is the best estimate of $\mu_i - \mu_j$, $m \cdot SE(\bar y_i - \bar y_j)$ is the “margin of error” or “half-width” of the interval, and $m$ is some multiplier. But $m$ is chosen differently for each procedure:

For a t-interval or LSD t-interval, $m = t_{df_E}(1-\alpha/2)$.
For a Bonferroni t-interval, $m = t_{df_E}(1-\alpha^*/2)$, with $\alpha^* = \alpha/c$.
For a Tukey confidence interval, $m = q_{k,\,df_E}(1-\alpha)/\sqrt{2}$, where $q$ is the studentized range critical value.

Method 4: Scheffé’s Test

Also available are Scheffé tests and intervals, which use

$$m = \sqrt{df_{Treat} \cdot F_{df_{Treat},\,df_E}(1-\alpha)},$$

but they lead to a test that may be too conservative.

How to compare specific groups in an ANOVA

If you have a few planned comparisons of theoretical importance in mind, then you can just do them with a slightly adjusted t-test: a different value for $s_p$ and a different degrees of freedom for the t statistic, based on the fact that you pool variance over all groups, not just two.

If you want to do many comparisons, then you may wish to use some means of controlling compound uncertainty (i.e., correcting for multiple comparisons). In the case where you want to test for all pairwise differences, choices include Fisher’s LSD (too lenient), Bonferroni, and Tukey (the latter also known as Tukey-Kramer when the sample sizes are different). There are also various other procedures I didn’t (and won’t) mention.

Tukey's pairwise comparisons

Family error rate = 0.0500
Individual error rate = 0.00670
Critical value = 4.02

Intervals for (column level mean) - (row level mean):

| Row \ Column | 1                 | 2                  | 3                  | 4                 |
|--------------|-------------------|--------------------|--------------------|-------------------|
| 2            | (-1.040, 10.326)  |                    |                    |                   |
| 3            | (-3.626, 7.740)   | (-8.269, 3.097)    |                    |                   |
| 4            | (-4.788, 6.578)   | (-9.431, 1.935)    | (-6.845, 4.521)    |                   |
| 5            | (-9.696, 1.670)   | (-14.339, -2.973)  | (-11.753, -0.387)  | (-10.591, 0.775)  |

The first entry is an abbreviation for, “We are 95% confident according to the Tukey procedure that the signed difference $\mu_1 - \mu_2$ is between -1.040 hours and +10.326 hours.” Since this interval contains 0, we can’t conclude that $\mu_1 \neq \mu_2$.

The only intervals that exclude 0 are those for $\mu_2 - \mu_5$ and $\mu_3 - \mu_5$, so we conclude $\mu_2 \neq \mu_5$ and $\mu_3 \neq \mu_5$.
More specifically, since both intervals lie entirely below 0, $\mu_2 < \mu_5$ and $\mu_3 < \mu_5$.

Tukey Simultaneous Tests
Response Variable TIME
All Pairwise Comparisons among Levels of COMPOUND

| COMPOUND subtracted | from Level | Difference of Means | SE of Difference | T-Value | Adjusted P-Value |
|---------------------|------------|---------------------|------------------|---------|------------------|
| 1                   | 2          | -4.643              | 1.999            | -2.322  | 0.1567           |
| 1                   | 3          | -2.057              | 1.999            | -1.029  | 0.8406           |
| 1                   | 4          | -0.895              | 1.999            | -0.448  | 0.9914           |
| 1                   | 5          | 4.013               | 1.999            | 2.007   | 0.2792           |
| 2                   | 3          | 2.586               | 1.999            | 1.294   | 0.6965           |
| 2                   | 4          | 3.748               | 1.999            | 1.875   | 0.3454           |
| 2                   | 5          | 8.656               | 1.999            | 4.330   | 0.0008           |
| 3                   | 4          | 1.162               | 1.999            | 0.5812  | 0.9772           |
| 3                   | 5          | 6.070               | 1.999            | 3.0363  | 0.0309           |
| 4                   | 5          | 4.908               | 1.999            | 2.455   | 0.1196           |

Ordering the compounds by sample mean gives 2, 3, 4, 1, 5. Compounds 2, 3, 4, 1 are “not significantly different” from one another, and compounds 4, 1, 5 are “not significantly different” from one another.

Multiple Comparisons with a Control

A different situation arises when you have a control group and are only interested in comparing the other groups to the control group, not to one another. Then there is something like Tukey’s test, but modified, called Dunnett’s test.

Tukey confidence intervals are for $\mu_i - \mu_j$, and there is one of them for every pair of distinct groups, which comes out to $k(k-1)/2$ of them. Dunnett confidence intervals are for $\mu_i - \mu_{Control}$, and there are only $k-1$ of them, one for each non-control group.

Suppose compound 5 is thought of as a control group because it is the compound that has been in use previously.
We want to know if any of the other compounds have population mean failure times different from that of compound 5.

Dunnett's comparisons with a control

Family error rate = 0.0500
Individual error rate = 0.0149
Critical value = 2.53
Control = level (5) of COMPOUND

Intervals for treatment mean minus control mean:

| Level | Lower   | Center | Upper  |
|-------|---------|--------|--------|
| 1     | -9.074  | -4.013 | 1.048  |
| 2     | -13.717 | -8.656 | -3.595 |
| 3     | -11.131 | -6.070 | -1.009 |
| 4     | -9.969  | -4.908 | 0.153  |

[Minitab also plots these intervals on an axis running from -12.0 to 0.0.]

For compounds 2 and 3, the intervals exclude 0, so we are confident that their difference from the control is nonzero.

Dunnett Simultaneous Tests
Response Variable TIME
Comparisons with Control Level: COMPOUND = 5 subtracted from:

| Level COMPOUND | Difference of Means | SE of Difference | T-Value | Adjusted P-Value |
|----------------|---------------------|------------------|---------|------------------|
| 1              | -4.013              | 1.999            | -2.007  | 0.1555           |
| 2              | -8.656              | 1.999            | -4.330  | 0.0003           |
| 3              | -6.070              | 1.999            | -3.036  | 0.0141           |
| 4              | -4.908              | 1.999            | -2.455  | 0.0597           |

Again we conclude that compounds 2 and 3 are different from the control group (in fact, poorer: their mean times are shorter).

Multiple Comparisons with the Best

There is also a kind of test called Hsu’s multiple comparisons with the best (MCB). This test tries to decide which groups could plausibly have come from the population with the highest (or lowest, if you prefer) mean. The group with the highest (lowest) sample average is automatically the leading candidate, but any group that does not significantly differ from it is still included as a possible best. So Hsu’s confidence intervals, one for each group, are for $\mu_i - \mu_{best}$. This approach is harder to understand than the others, perhaps because it seems to be doing more than one thing at the same time.

Next Lecture: Linear Contrasts