Department Chairs' Presentation


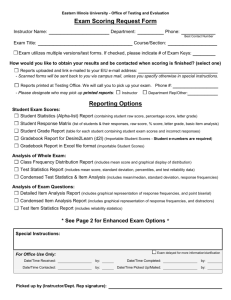

Insight Improvement Impact®
Using IDEA for Faculty Evaluation
University of Alabama at Birmingham
September 11, 2012
Shelley A. Chapman, PhD

What makes IDEA unique?
1. Focus on student learning
2. Focus on the instructor's purpose
3. Adjustments for extraneous influences
4. Validity and reliability
5. Comparison data
6. Flexibility

Conditions for Good Use I: The Instrument
• Focuses on learning
• Provides diagnostic feedback

Conditions for Good Use II: The Administrative Process
• Student ratings make up 30-50% of the evaluation of teaching
• Results are not over-interpreted (3-5 performance categories)
• Ratings come from 6-8 classes, more if classes are small (fewer than 10 students)

Conditions for Good Use III: The Faculty
• Trust the process
• Value student feedback
• Are motivated to make improvements

Conditions for Good Use IV: Campus Culture
• Teaching excellence is a high priority
• Student ratings are given appropriate weight
• Resources are provided

Student Learning Framework: Two Assumptions
Assumption 1: The types of learning measured must reflect the instructor's purpose.
[Image: Student Diagnostic Form]
Assumption 2: Effectiveness is determined by students' progress on the objectives the instructor stresses.

Online Response Rates: Best Practices
• Create value for student feedback
• Monitor and communicate through multiple modalities:
  • Twitter
  • Facebook
  • Other
• Prepare students:
  • Talk about it
  • Syllabus

Example: Course Syllabus

| IDEA Center Learning Objective | Course Learning Outcomes |
|---|---|
| Objective 3: Learning to apply course material (to improve thinking, problem solving, and decisions) | Students will be able to apply the methods, processes, and principles of earth science to understanding natural phenomena. Students will think more critically about the earth and environment. |
| Objective 8: Developing skill in expressing myself orally or in writing | Students will be able to present scientific results in written and oral forms. |

Using Results to Evaluate Faculty

Three-Phase Process for Faculty Evaluation
I. Set Expectations
II. Collect Data
III. Use Data

Phase I: Set Expectations
What does this entail regarding IDEA?

Criterion-Referenced Standards
• Use averages on the 5-point scale
• Recognize that some objectives are more difficult to achieve than others
• "Authenticate" objectives
[Image: report page 1]

Norm-Referenced Standards
• Use converted averages
• Compared with which group: the IDEA database? The discipline? The institution?

Comparative Information: Converted Averages
Converted averages are T scores (mean 50, standard deviation 10). Distribution of the comparison categories:
• Much Lower: 10%
• Lower: 20%
• Similar (gray band): 40%
• Higher: 20%
• Much Higher: 10%

Comparison Scores Create Categories of Performance

| Five categories | Three categories |
|---|---|
| Below acceptable standards | Does not meet expectations |
| Marginal, needs improvement | Does not meet expectations |
| Meets expectations | Meets expectations |
| Exceeds expectations | Exceeds expectations |
| Outstanding | Exceeds expectations |

Performance Categories: EXAMPLE

| Criterion (average rating) | Effectiveness category | Normative (T score) |
|---|---|---|
| Below 3.0 | Below acceptable standards | Below 38 |
| 3.0-3.4 | Marginal, improvement needed | 38-44 |
| 3.5-3.9 | Meets expectations | 45-54 |
| 4.0-4.4 | Exceeds expectations | 55-62 |
| 4.5 or higher | Outstanding | 63 or higher |

Phase II: Collect Data
What do you look for regarding IDEA?
Reports for personnel decisions: pages 1 and 2.
• Were the appropriate objectives selected?
• What were students' perceptions of the course and their learning?

Things to Consider…
Were the appropriate objectives selected?
• How many?
• Do they match the course?
• How might you "authenticate" the objectives selected?

FIF (Faculty Information Form): Selecting Objectives
• Select 3-5 objectives as "Essential" or "Important"
• Is the objective a significant part of the course?
• Do you do something specific to help students accomplish it?
• Does a student's progress on the objective influence his or her grade?
Be true to your course.

Things to Consider…
What were the students' perceptions of their course and their learning?

How Did Students Rate Their Learning?
A. Progress on Relevant Objectives¹ (four objectives were selected as relevant, i.e., Important or Essential; see page 2)
Your average (5-point scale): Raw 4.1, Adjusted 4.3
¹If you are comparing Progress on Relevant Objectives from one instructor to another, use the converted average.

Progress on Relevant Objectives: Worked Example
$$\text{PRO} = \frac{4.3 + 4.3 + 4.1 + 4.2 + 3.6}{5} = \frac{20.5}{5} = 4.1$$
The Essential objective (rated 4.3) counts twice; the three Important objectives count once each.

Summary Evaluation: Five-Point Scale (report page 1)

| Your average score (5-point scale) | Weight | Raw | Adjusted |
|---|---|---|---|
| A. Progress on Relevant Objectives (four objectives selected as Important or Essential; see page 2) | 50% | 4.1 | 4.3 |
| Overall Ratings, B. Excellent Teacher | 25% | 4.7 | 4.9 |
| Overall Ratings, C. Excellent Course | 25% | 4.1 | 4.4 |
| D. Average of B & C | | 4.4 | 4.7 |
| Summary Evaluation (average of A & D) | | 4.3 | 4.5 |

Understanding Adjusted Scores
Impact of Extraneous Factors: Gaining Factual Knowledge, Average Progress Ratings (Technical Report 12, page 40)

| Work Habits (Item 43) \ Student Motivation (Item 39) | High | High Avg. | Avg. | Low Avg. | Low |
|---|---|---|---|---|---|
| High | 4.48 | 4.38 | 4.28 | 4.13 | 4.04 |
| High Avg. | 4.38 | 4.29 | 4.14 | 3.96 | 3.76 |
| Average | 4.28 | 4.14 | 4.01 | 3.83 | 3.64 |
| Low Avg. | 4.15 | 4.05 | 3.88 | 3.70 | 3.51 |
| Low | 4.11 | 3.96 | 3.78 | 3.58 | 3.38 |

The extremes: average progress is 4.48 when both work habits and motivation are high, 4.01 when both are average, and 3.38 when both are low.

Raw or Adjusted Scores

| Purpose | Raw or adjusted? |
|---|---|
| How much did students learn? | Raw |
| What were the instructor's contributions to learning? | Adjusted |
| How do faculty compare? | Adjusted |

When to Use Adjusted Scores
Are adjusted scores lower or higher than raw scores?
• Higher: use adjusted scores.
• Lower: do raw scores meet or exceed expectations*?
  • Yes: use raw scores.
  • No: use adjusted scores.
*Expectations are defined by your unit.

Phase III: Use Data
Which data will you use and how?

Faculty IDEA Worksheet (created by Pam Milloy, Grand View University)
• Keep track of reports
• Look for longitudinal trends
• Use for promotion and tenure
Available from The IDEA Center website.

Use Other Sources of Evidence
• Self-evaluation/reflective statement
• Course materials
  • Tests, assignments, rubrics, etc.
• Artifacts of student learning
  • Papers, projects, completed assignments
• Classroom assessment/research efforts

Other Sources of Evidence (Continued)
• Evidence of student interest
• Student/alumni feedback
• Feedback from mentors/colleagues
• Eminence measures
  • Honors and awards
  • Invited presentations
• Classroom visitations or observations

Classroom Observations

| Time | What Happened | What Was Said |
|---|---|---|
| 8:05 | Instructor shut door. Students are shuffling papers, opening books. | Instructor (I): "OK, class. Let's begin. Make sure you turned in your homework as you came in. Today we will begin our discussion on the brain. Turn in your textbooks to chapter 5." |
| 8:10 | Student comes in late. | |
| 8:15 | Several students raise hands. Female student in first row is called on. | I: "Is your brain more like a computer or a jungle? Who would like to respond first?" Student (S): "My brain is a jungle! I am so unorganized!" (class laughs)… |

Flow of Communication Map
[Figure: seating chart showing the instructor and each student, marked M or F]

Using the Data
Summative (pp. 1-2)
• Criterion- or norm-referenced
• Adjusted or raw
• Categories of performance
• 30-50% of teaching evaluation
• 6-8 classes (more if small)
Formative (p. 3)
• Identify areas to improve
• Access applicable resources from the IDEA website
• Read and have conversations
• Implement new ideas

Using the Report to Improve Course Planning and Teaching

Page 2: What did students learn?
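Page 2 lists students' progress on each objective, and page 1 rolls those ratings into the summary scores used for the summative decisions in "Using the Data." The Python sketch below reproduces that arithmetic under stated assumptions: Progress on Relevant Objectives is a weighted mean with Essential objectives counted twice and Important objectives once (consistent with the "4.3 + 4.3" worked example); the Summary Evaluation averages A with the mean of B and C; the raw-versus-adjusted choice follows the "When to Use Adjusted Scores" flow; and categories use the example cutoffs, treating each band's lower bound as a threshold. All function and constant names are hypothetical, and this is not the IDEA Center's own scoring code.

```python
from statistics import mean

# Assumption: Essential objectives count twice and Important objectives once,
# consistent with the worked example (4.3 + 4.3 + 4.1 + 4.2 + 3.6) / 5 = 4.1.
OBJECTIVE_WEIGHTS = {"Essential": 2, "Important": 1}

# Example cutoffs from the "Performance Categories: EXAMPLE" slide, highest first.
# Each band's lower bound is treated as a threshold (an assumption).
CRITERION_BANDS = [
    (4.5, "Outstanding"),
    (4.0, "Exceeds expectations"),
    (3.5, "Meets expectations"),
    (3.0, "Marginal, improvement needed"),
]
NORMATIVE_BANDS = [  # converted averages (T scores)
    (63, "Outstanding"),
    (55, "Exceeds expectations"),
    (45, "Meets expectations"),
    (38, "Marginal, improvement needed"),
]
BOTTOM_CATEGORY = "Below acceptable standards"


def progress_on_relevant_objectives(ratings):
    """Weighted mean of (average_progress, importance) pairs."""
    total = sum(score * OBJECTIVE_WEIGHTS[level] for score, level in ratings)
    weight = sum(OBJECTIVE_WEIGHTS[level] for _, level in ratings)
    return total / weight


def summary_evaluation(pro, excellent_teacher, excellent_course):
    """Average of A (PRO) and D (mean of B and C), i.e. weights of 50/25/25%."""
    d = mean([excellent_teacher, excellent_course])
    return mean([pro, d])


def score_to_use(raw, adjusted, expectation):
    """Raw-versus-adjusted flow; `expectation` is the unit's own standard."""
    if adjusted >= raw:
        # Adjusted is higher (on a tie the choice makes no difference).
        return adjusted
    # Adjusted is lower: keep the raw score only if it meets expectations.
    return raw if raw >= expectation else adjusted


def performance_category(score, bands):
    """Return the label of the first band whose threshold the score reaches."""
    for threshold, label in bands:
        if score >= threshold:
            return label
    return BOTTOM_CATEGORY


if __name__ == "__main__":
    # Objective ratings from the worked example; which one is Essential is
    # inferred from the doubled 4.3.
    pro_raw = progress_on_relevant_objectives(
        [(4.3, "Essential"), (4.1, "Important"), (4.2, "Important"), (3.6, "Important")]
    )
    summary_raw = summary_evaluation(pro_raw, 4.7, 4.1)
    summary_adj = summary_evaluation(4.3, 4.9, 4.4)
    # Hypothetical unit expectation: the "Meets expectations" cutoff.
    chosen = score_to_use(summary_raw, summary_adj, expectation=3.5)
    print(f"PRO raw: {pro_raw:.1f}")
    print(f"Summary raw {summary_raw:.3f}, adjusted {summary_adj:.3f}")
    print(f"Score used: {chosen:.3f} -> {performance_category(chosen, CRITERION_BANDS)}")
```

With the example slide's values, the adjusted Summary Evaluation (4.475 before rounding) is higher than the raw one (4.25), so the flow keeps the adjusted score, which falls in the "Exceeds expectations" band. A unit that rounds to one decimal before categorizing, as the report does, could land in a different band for scores near a cutoff.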
Suggested Action Steps: #16, #18, #19
Resources: POD-IDEA Notes, IDEA website

POD-IDEA Notes
• Background
• Helpful hints
• Assessment issues
• References and resources

IDEA Papers
Resources for:
• Faculty evaluation
• Faculty development

Reflective Practice
Collect Feedback (paper or online) → Interpret Results (interpret reports) → Read & Learn (POD-IDEA Notes, IDEA Papers) → Reflect & Discuss (meet with colleagues to reflect) → Improve (try something new) → Collect Feedback again

The Group Summary Report
How did we do? How might we improve?

Defining Group Summary Reports (GSRs)
• Institutional
• Departmental
• Service/introductory courses
• Major field courses
• General education program

Adding Questions
Up to 20 questions can be added:
• Institutional
• Departmental
• Course-based
• All of the above

Local Code
Use this section of the FIF to code types of data.

Defining Group Summary Reports: Local Code
• 8 possible fields
• Example: column one, Delivery Format
  • 1 = Self-paced
  • 2 = Lecture
  • 3 = Studio
  • 4 = Lab
  • 5 = Seminar
  • 6 = Online
Example from Benedictine University

Example Using Local Code
Assign local code:
• 1 = Day, tenured
• 2 = Evening, tenured
• 3 = Day, tenure track
• 4 = Evening, tenure track
• 5 = Day, adjunct
• 6 = Evening, adjunct
Request reports:
• All day classes: Local Code = 1, 3, & 5
• All evening classes: Local Code = 2, 4, & 6
• Courses taught by adjuncts: Local Code = 5 & 6

Curriculum Review
• Multi-section courses
• Prerequisite-subsequent courses
• Curriculum committee review

Curriculum Review: IDEA Learning Objectives by Course
[Table: courses (English Composition, Finite Mathematics, Biology for Non-Majors, General Psychology, Western Civilization, Art Appreciation, Fitness and Wellness) against IDEA Learning Objectives 1-12, with an X marking each objective selected for a course]

Identifying Gaps
[Same table, highlighting a gap]
Gap: Objective 9. Learning how to find and use resources for answering questions or solving problems

Questions
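The "Identifying Gaps" review amounts to a set comparison: an objective is a gap when no course in the group selects it. A minimal Python sketch, assuming each course's selections (objectives marked Essential or Important) are available as a set of objective numbers 1-12. The course names and selections are hypothetical placeholders, not the data from the curriculum-review slide, and `find_gaps`/`coverage_counts` are illustrative names.

```python
# Hypothetical curriculum map: course -> IDEA objectives (1-12) selected as
# Essential or Important. These selections are placeholders, not slide data.
CURRICULUM = {
    "Course A": {1, 2, 3},
    "Course B": {3, 8, 11},
    "Course C": {1, 12},
}

ALL_OBJECTIVES = frozenset(range(1, 13))  # IDEA Learning Objectives 1-12


def find_gaps(course_map, objectives=ALL_OBJECTIVES):
    """Return, in ascending order, the objectives that no course selects."""
    covered = set().union(*course_map.values())
    return sorted(objectives - covered)


def coverage_counts(course_map, objectives=ALL_OBJECTIVES):
    """Map each objective to the number of courses that select it."""
    return {
        obj: sum(obj in selected for selected in course_map.values())
        for obj in sorted(objectives)
    }


if __name__ == "__main__":
    print(find_gaps(CURRICULUM))  # [4, 5, 6, 7, 9, 10]
    thin = [obj for obj, n in coverage_counts(CURRICULUM).items() if n == 1]
    print(thin)  # [2, 8, 11, 12]: objectives covered by only one course
```

The coverage counts go one step beyond the slide: an objective selected by a single course is not a gap, but a curriculum committee may still want to know that its coverage depends on one course.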